An experimental gesture recognition tool that uses the Microbit's accelerometer, built with ml5.js (which is built on top of TensorFlow.js).

DEMO: https://ttseng.github.io/microbit-ml/

Follow the instructions below to run the demo app:

- Load this Hex file onto your Microbit. The firmware is setup so that the Microbit can continually stream accelerometer data via bluetooth.

-

Open up the Gesture Recognizer app in a Chrome browser (full info on system requirements for Web BLE here).

-

Click

Pair Your Microbit.If your Microbit is successfully paired, you should see a smiley face on the Microbit's LED array. -

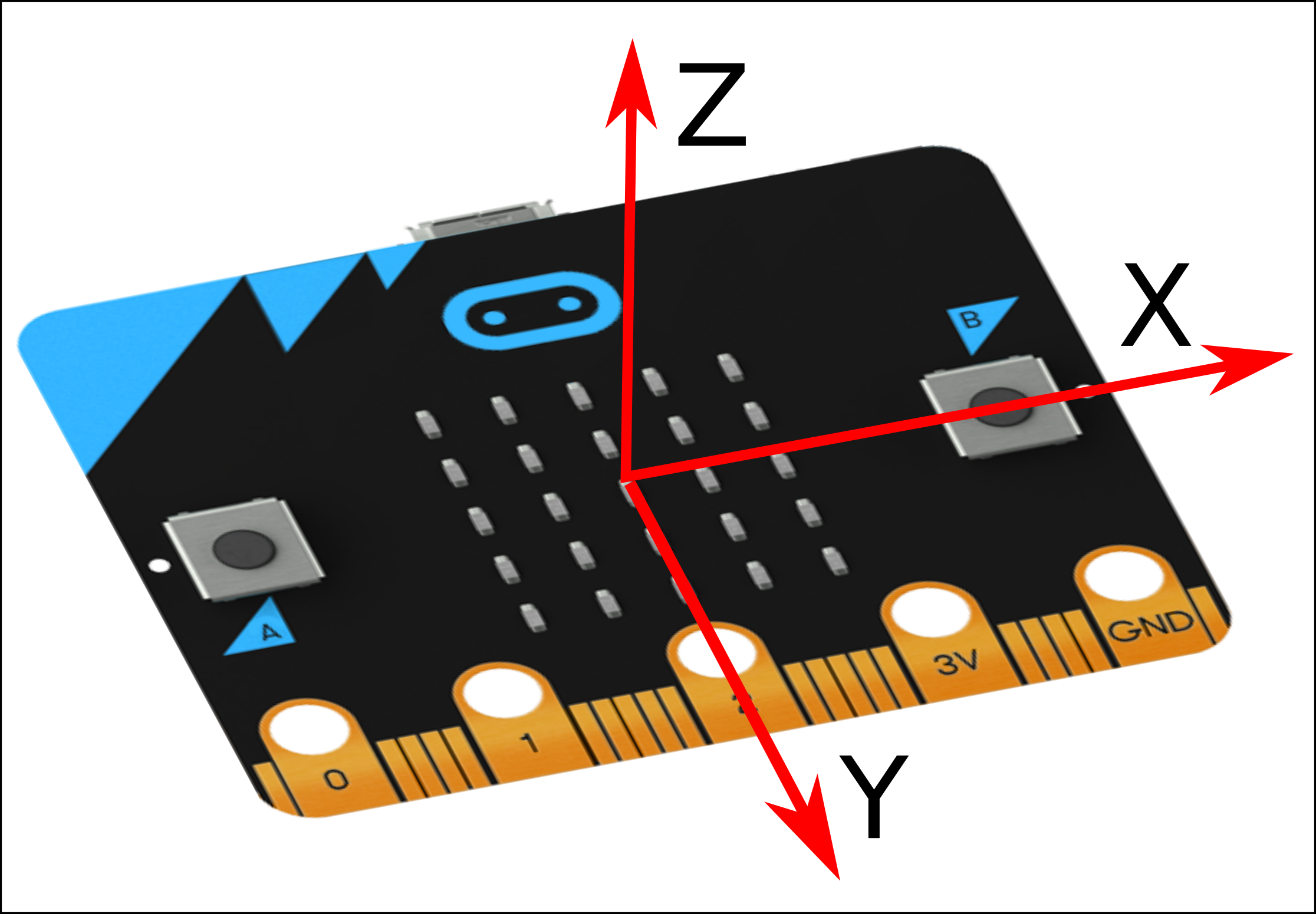

+ Add New Gestureto record samples of your gesture. You have 2 seconds to record each sample, and there is a 3 second lead-in countdown. You'll be able to view the x, y, and z accelerometer plot after each sample and remove individual samples as needed.

-

When you have at least 3 samples of 2 or more gestures, click

Train Modelto build the machine learning model. -

After the model is trained, you can test out the gesture recognizer.

- If you find that a paticular gesture is not being recognized accurately, you can add more samples to see if the model improves, or you can adjust the

confidence thresholdbased on the prediction percentage values you see in the console.
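The confidence threshold acts as a cutoff on the model's top prediction. The sketch below illustrates that idea, assuming each prediction is an object with a `label` and a `confidence` between 0 and 1 (the app's actual result format may differ). `Prediction`, `pickGesture`, and the gesture names are hypothetical.

```typescript
// Hypothetical shape of one classification result; the app's real
// result objects may differ.
interface Prediction {
  label: string;
  confidence: number; // assumed to be in the range 0..1
}

// Returns the most confident label if it clears the threshold, otherwise null
// (meaning "no gesture recognized").
function pickGesture(results: Prediction[], threshold: number): string | null {
  if (results.length === 0) return null;
  // Find the top prediction without assuming the array is already sorted.
  const best = results.reduce((a, b) => (b.confidence > a.confidence ? b : a));
  return best.confidence >= threshold ? best.label : null;
}

const results: Prediction[] = [
  { label: "shake", confidence: 0.72 },
  { label: "circle", confidence: 0.2 },
  { label: "tap", confidence: 0.08 },
];
console.log(pickGesture(results, 0.6)); // logs: shake
console.log(pickGesture(results, 0.8)); // logs: null
```

Raising the threshold trades missed gestures for fewer false positives, which is why the console percentages are a useful guide when tuning it.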

The ML model is a neural network with 12 inputs (four features for each of the x, y, and z axes), all computed over a 2-second sample:

- `ax_max`: the maximum accelerometer value on the x axis
- `ax_min`: the minimum accelerometer value on the x axis
- `ax_std`: the standard deviation of the accelerometer values on the x axis
- `ax_peaks`: the number of positive peaks in the accelerometer values on the x axis
- `ay_max`, `ay_min`, `ay_std`, `ay_peaks`: the same four features for the y axis
- `az_max`, `az_min`, `az_std`, `az_peaks`: the same four features for the z axis
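A minimal sketch of how these features could be computed from one window of readings. Two details are assumptions, not taken from the app's source: the standard deviation is the population version, and a "positive peak" is counted as a value above zero that is strictly greater than both neighbours. `Reading`, `extractFeatures`, and `countPositivePeaks` are hypothetical names.

```typescript
// One accelerometer reading (units depend on the firmware; assumed milli-g).
interface Reading {
  x: number;
  y: number;
  z: number;
}

// Population standard deviation (an assumption; the app may use the sample version).
function std(values: number[]): number {
  const mean = values.reduce((sum, v) => sum + v, 0) / values.length;
  const variance = values.reduce((sum, v) => sum + (v - mean) ** 2, 0) / values.length;
  return Math.sqrt(variance);
}

// Assumed definition: a positive peak is a value above zero that is strictly
// greater than both of its neighbours.
function countPositivePeaks(values: number[]): number {
  let peaks = 0;
  for (let i = 1; i < values.length - 1; i++) {
    if (values[i] > 0 && values[i] > values[i - 1] && values[i] > values[i + 1]) {
      peaks++;
    }
  }
  return peaks;
}

// Builds the 12 named inputs (4 per axis) from one window of readings.
function extractFeatures(samples: Reading[]): Record<string, number> {
  if (samples.length === 0) throw new Error("window contains no readings");
  const features: Record<string, number> = {};
  for (const axis of ["x", "y", "z"] as const) {
    const values = samples.map((r) => r[axis]);
    features[`a${axis}_max`] = Math.max(...values);
    features[`a${axis}_min`] = Math.min(...values);
    features[`a${axis}_std`] = std(values);
    features[`a${axis}_peaks`] = countPositivePeaks(values);
  }
  return features;
}

const samples: Reading[] = [
  { x: 0, y: 10, z: -1000 },
  { x: 200, y: 10, z: -1000 },
  { x: 0, y: 10, z: -1000 },
  { x: 300, y: 10, z: -1000 },
  { x: 0, y: 10, z: -1000 },
];
const features = extractFeatures(samples);
console.log(features.ax_max, features.ax_min, features.ax_peaks); // logs: 300 0 2
```

Summary features like these give the network a fixed-size input regardless of how many readings arrive in a 2-second window, at the cost of discarding the exact shape of the motion.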

When predicting gestures from live data, the application takes 2-second windows of incoming data and runs a prediction against the trained gestures.
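One way to take fixed-length windows from a live stream is a sliding buffer that hands off the latest readings every few new samples. This is a sketch under assumptions: the README does not state the sample rate or how often predictions run, so the window `size` (for example, 100 readings for 2 seconds at a hypothetical 50 readings per second) and the `hop` between predictions are placeholders. `SlidingWindow` is a hypothetical name.

```typescript
// Keeps the most recent `size` items and calls `onWindow` with a copy of them
// every `hop` new items once the buffer is full.
class SlidingWindow<T> {
  private readonly items: T[] = [];
  private sinceLastEmit = 0;
  private readonly size: number;
  private readonly hop: number;
  private readonly onWindow: (window: T[]) => void;

  constructor(size: number, hop: number, onWindow: (window: T[]) => void) {
    this.size = size;
    this.hop = hop;
    this.onWindow = onWindow;
  }

  push(item: T): void {
    this.items.push(item);
    // Drop the oldest item so the buffer never exceeds one window.
    if (this.items.length > this.size) this.items.shift();
    this.sinceLastEmit++;
    if (this.items.length === this.size && this.sinceLastEmit >= this.hop) {
      this.sinceLastEmit = 0;
      this.onWindow([...this.items]);
    }
  }
}

// Toy usage with numbers in place of accelerometer readings.
const windows: number[][] = [];
const win = new SlidingWindow<number>(4, 2, (w) => windows.push(w));
for (let i = 1; i <= 8; i++) win.push(i);
console.log(windows); // three windows: [1,2,3,4], [3,4,5,6], [5,6,7,8]
```

In the app, `onWindow` would be where a window of readings is turned into features and classified. A smaller `hop` reacts faster but runs more predictions on heavily overlapping data.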

The debugging interface lets you see how the model performs on 10 seconds of data. You can access it by clicking "Debug last 10 seconds" after the model has been trained.

If you'd like to experiment with editing this app, download the source code and run it from a local web server (required by p5.js). I recommend the Web Server for Chrome extension; full instructions here!