| title | date | author |
|---|---|---|
| Intro to Bash Shell | April 2021 | Tim Dennis |

- `exec bash` switches to bash from zsh; no longer needed on Mac now that zsh is the default, so it won't be as disorienting to learners

- enlarge text size - via preferences

- `export PS1='$ '` changes the command prompt to `$`

- `export PROMPT_COMMAND="history 1 >> ~/Dropbox/UnixHistory.txt"`

- Turn off the text coloring in terminal (Terminal -> Preferences -> ANSI)

- alternately, use a different user account on your Mac so you don't pick up themed or souped-up CLIs

- have students check software installation: Unix, Git for Windows; ask when students come in

- Etherpad link: https://pad.carpentries.org/2021-ucla-spring-unix

- Get data for workshop: http://swcarpentry.github.io/shell-novice/data/data-shell.zip

- Unzip and put on desktop

- Most tasks in the shell can be done with mouse on Desktop. Why do anything differently?

- A way to combine powerful tools together using minimal keystrokes

- Lets us automate repetitive tasks: moving & processing files/data, running our research analysis, building applications

- Install software & other third party tools, configure software & tools

- Often required to use with remote machines: high performance computing (HPC), cloud computing, web servers

- Might be good for brief exercise in etherpad: jargon around command line, bash, etc.?

Objectives: orient to shell and how it relates to the computer, understand the benefit of CLI

- run programs

- store data

- communicate with each other

- communicate with us → today you'll learn a new way of doing this

- graphical user interface: GUI

- command line interface: CLI

- you type something - LOOP

- computer reads it - READ

- executes command - EVAL

- prints output - PRINT

- We use command shell to make this happen: this is the interface between user and computer

- bash: Bourne Again SHell, most commonly used, default on most modern Unix implementations

- zsh: a separate shell that is largely compatible with bash, now default on Mac; for us today bash and zsh are interchangeable

NOTE: I often skip this

- Our friend Nelle has six months worth of survey data collected from the North Pacific

- 300 samples of goo

- Her pipeline:

- Determine the abundance of 300 proteins

- Each sample has one output file with one line for each protein

- calculate statistics for each protein separately using program `goostat`

- compare statistics for proteins using program called `goodiff`

- write up results and submit by end of month

- If she enters all commands by hand, will need to do 45,150 times.

- What can she do instead? Use the command line

- Automate repetitive tasks (30 minutes vs. 2 weeks)

- Prevent user error, manual error

- Processing pipelines are re-usable and sharable

- REPL: Read-Evaluate-Print-Loop, lets you interactively work things out

Objectives: paths, learn basic commands for working with files and directories, learn syntax of commands, tab-completion

- prompt: `$` indicates computer is ready to accept commands

`whoami`

This command:

- finds program

- runs program

- displays program's output

- displays new prompt

- let's see where we are in our file system

`pwd`

- `pwd` stands for print working directory; in this case it is also the home directory

- note that the home directory will look different on different OSs

- To understand "home directory" let's look at an image

- root directory: holds everything else, begins with slash `/`

- the directory structure sits below that in a tree-type structure

- a slash `/` can also be a separator between names

- `ls`: listing, prints names of files and directories in current directory in alphabetical order

`ls`

- make the output more comprehensible by using the flag `-F`

`ls -F`

- `-F` adds trailing `/` to names of directories (note: on Windows Git Bash there's syntax highlighting for directories)

- spaces and capitalization in commands are important!

- `-F` is an option, argument, or flag

- `ls` has lots of other options. Let's find out what they are by:

`ls --help`

- many bash commands and programs support a `--help` flag to display more information

- for even more detailed information on how to use `ls`, type `man ls` (caveat WINDOWS users)

- `man` is for manual and prints the description of a command and options

- Git for Windows doesn't come with the `man` files; instead do a web search for `unix man page COMMAND`

- to navigate `man` files use the up and down arrows, or space bar and b for paging; to quit, `q`

- We can also `ls` to see contents of another directory:

`ls -F Desktop`

- we `ls -F` the Desktop from our home directory and we see the `data-shell/` folder we unzipped there earlier

- let's look inside `data-shell`

`ls -F Desktop/data-shell`

- Let's change directories into that folder

- What do you think the command is for changing directories?

- Yes, `cd`

`cd Desktop`

`cd data-shell`

`cd data`

- see where we are:

`pwd`

`ls -F`

- We can go down the directory structure, how do we go up?

- We might try:

`cd data-shell`

- `data-shell` is a level above our current location, but we can't go up a level this way

- but there are different ways to navigate to directories above your current location

`pwd`

`cd ..`

- `..` goes up one level in file hierarchy

- `..` is a special directory name meaning "the dir containing this one" (parent)

- let's confirm it worked:

`pwd`

- `..` won't show up using `ls` by itself

- but we can do this to see hidden files:

`ls -F -a`

- `-a` shows hidden files, including `.` and `..`

- `-a` stands for show all

- `.` is for current directory; this can be useful if you want to reference your current location in the file system

- What happens if we type `cd` by itself? go ahead and do this and type in the chat what it does

`cd`

- `cd` by itself will return you to your `home` directory

`pwd`

- how do we get back to our `data` folder?

`cd Desktop/data-shell/data`

- we can string together a list of directories at once

- so far we have been using relative paths: paths starting from our current directory

- we can also use absolute paths in `ls` and `cd`

`cd /Users/nelle/Desktop/data-shell`

`pwd`

- two more shortcuts: `~` and `-`

- challenges: open: http://swcarpentry.github.io/shell-novice/02-filedir/#absolute-vs-relative-paths
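The navigation shortcuts above can be tried safely in a throwaway directory. This is a minimal sketch, not part of the lesson data: the `sandbox` variable and the directory tree it builds are assumptions made for illustration.

```shell
# Build a throwaway directory tree (hypothetical names) so nothing real is touched
sandbox=$(mktemp -d)
mkdir -p "$sandbox/data-shell/data"

cd "$sandbox/data-shell/data"   # absolute path: starts from the root /
cd ..                           # relative path: up one level, into data-shell
up_one=$(basename "$PWD")

cd data                         # relative path: back down into data
cd "$sandbox"                   # jump somewhere else entirely
cd - > /dev/null                # "-" returns to the previous directory (it prints the path, silenced here)
back=$(basename "$PWD")

cd ~                            # "~" expands to the home directory
echo "after cd ..: $up_one, after cd -: $back, after cd ~: $PWD"
```

Learners can check each step with `pwd`, exactly as in the walkthrough above.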

Objectives: create directory hierarchy that matches given diagram, create files, look in folders, delete folders

- go back to the `data-shell` directory (how?)

- glad you asked!

`pwd`

`ls -F`

- Let's create a directory called `thesis`

`mkdir thesis`

- `mkdir` MAKES directories

good names for directories:

- don't use whitespaces: whitespaces break arguments on CLI unless quoted; avoid, use `-` or `_` (or combination)

- don't start a name with `-`: commands treat names starting with `-` as options

- stay with letters, numbers, `-` and `_`

- if you need to refer to names of files or directories that have whitespaces, quote them

cd thesis

ls -F

- nothing inside our new dir yet

- let's change directory to inside the

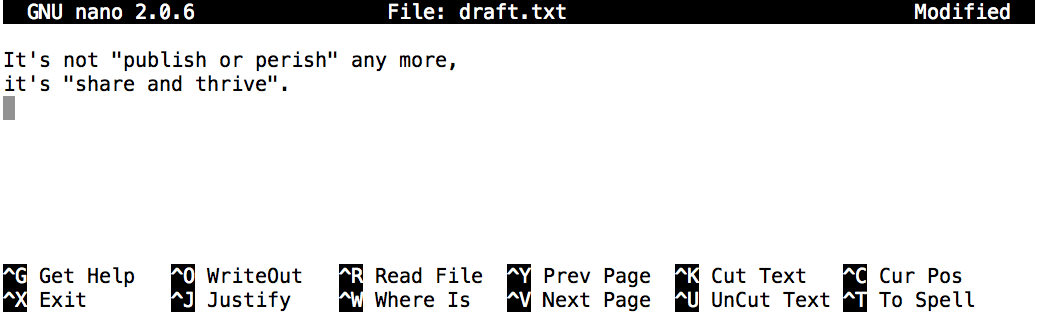

thesisand create a file calleddraft.txt

nano draft.txt

- Creates file, opens text editor

- Editors are like cars -- everyone wants to customize them, so there are hundreds if not thousands of different models

- Write some text

- use `Control+O` to save file (shorthand is `^O`)

- `Control+X` to exit

- I don't like this draft, let's remove it:

`ls`

`rm draft.txt`

`ls`

- where does file go? Can I get it back?

- Gone Pecan!

- Deleting is forever!

- Let's recreate the file then move up one directory

nano draft.txt

`cd ..`

- now let's try to remove a directory

- removing a directory:

rm thesis # error

rmdir thesis # still get error

rm thesis/draft.txt

rmdir thesis

- unix won't let us delete a directory with something inside of it as a precaution

- we could have also used `rm -r thesis`, but that can be dangerous!
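To show the difference between `rmdir` and `rm -r` without risking real files, here is a small sketch that works in a temporary directory. The `sandbox` variable is an assumption for illustration, and `touch` stands in for creating the file with nano.

```shell
sandbox=$(mktemp -d)
cd "$sandbox"
mkdir thesis
touch thesis/draft.txt          # stand-in for the file created with nano

# rmdir refuses to remove a directory that still has something in it
if rmdir thesis 2>/dev/null; then
    rmdir_result="removed"
else
    rmdir_result="refused"
fi

# rm -r removes the directory and everything inside it, with no undo
rm -r thesis
if [ -e thesis ]; then after_rm="still there"; else after_rm="gone"; fi

echo "rmdir: $rmdir_result, rm -r: $after_rm"
```

A gentler option to mention is `rm -r -i thesis`, which asks for confirmation before each deletion.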

- Let's recreate thesis and draft.

`mkdir thesis`

`nano thesis/draft.txt`

`ls thesis`

- but `draft.txt` isn't very informative, let's rename it using the `mv` command

`mv thesis/draft.txt thesis/quotes.txt`

`ls thesis`

- first part of `mv` is what you want to move, second is where to move it, including the new name

- note: `mv` works on directories as well

- let's move `quotes.txt` into the current directory. What does `.` mean again?

`mv thesis/quotes.txt .`

`ls thesis`

- `ls <filename>` will only list that file, let's see that our file is there

`ls quotes.txt`

- if we want to keep the old version, we can use copy

`cp quotes.txt thesis/quotations.txt`

`ls quotes.txt thesis/quotations.txt`

- let's remove the original and check that the copy is still there

`rm quotes.txt`

`ls quotes.txt thesis/quotations.txt`

- challenges: open http://swcarpentry.github.io/shell-novice/03-create/#renaming-files

- mkdir

- nano (editor)

- rm, rmdir

- mv, cp
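The rename, move, copy, and remove sequence from this section can be replayed end to end. This is a sketch under assumptions: it runs in a temporary `sandbox` directory and uses `echo` with redirection instead of nano to create the file.

```shell
sandbox=$(mktemp -d)
cd "$sandbox"
mkdir thesis
echo "a first quote" > thesis/draft.txt    # stand-in for typing text in nano

mv thesis/draft.txt thesis/quotes.txt      # rename in place
mv thesis/quotes.txt .                     # move into the current directory
cp quotes.txt thesis/quotations.txt        # copy, keeping the original
rm quotes.txt                              # remove the original; the copy survives

remaining=$(ls thesis)
echo "$remaining"
```

Running `ls` after each step, as in the walkthrough, makes it easy to see which file is where.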

Objectives: redirect command output to file, construct pipelines

data: http://swcarpentry.github.io/shell-novice/data/data-shell.zip

- now we can move around and create things, let's see how we can combine existing programs in new ways

- Let's go into the molecules directory

pwd

`ls molecules`

`cd molecules`

- the `.pdb` format indicates these are Protein Data Bank files

- Let's run an example command, `wc`, on the `.pdb` files:

`wc *.pdb`

- we haven't covered the `*` yet. It is a wildcard operator

- the `*` matches zero or more characters, so the shell turns `*.pdb` into a list of all `.pdb` files

- word count: lines, words, characters

- let's only look at the number of lines

- what flag do you think will produce this?

`wc -l *.pdb`

- only report number of lines

- what if we run this? what do you think will happen?

`wc -l *.pdb > lengths.txt`

- this will send output (redirect it) to new file named lengths.txt

- but let's confirm that it worked by using a new command, `cat`, which lets us look inside the file

- `cat` stands for concatenate

`cat lengths.txt`

- can't remember how wc reports? use `man wc` (`q` to exit), `wc -h`, or `wc --help` (this should work for most unix commands), also web search `unix man wc`

- now let's use the `sort` command to sort the contents of our file

- let's look at some options for `sort`

man sort

sort --help

- we will use the `-n` flag to tell sort to sort in numerical rather than alphabetical order

`sort -n lengths.txt`

- compare to:

`sort lengths.txt`

- sort by first column, using numerical order

- does not change file, just prints output to screen

- if we want to save results, what can we use?

- yes, we use our redirection operator `>` to save to file

`sort -n lengths.txt > sorted-lengths.txt`

- arrow up to recall last few commands

- let's use head to see the file with the fewest lines:

`head -1 sorted-lengths.txt`

- Saving intermediate files like `sorted-lengths.txt` can get messy and confusing, hard to track

- We can make it easier to understand by combining these commands together

sort -n lengths.txt | head -n 1

- vertical bar is called the pipe in unix

- it sends output of command on left as input to command on right

- `head` prints specified number of lines from top of file

- we can chain multiple commands together

- for example send the output of `wc` directly to `sort`, and then the resulting output to `head`

`wc -l *.pdb | sort -n`

- adding `head`, the full pipeline becomes:

`wc -l *.pdb | sort -n | head -n 1`

- this pipe and filter programming model is important conceptually

- let's review what we covered via this image:

- note: you only enter the original files once!
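To compare the step-by-step approach with the single pipeline, the sketch below uses three made-up `.pdb` stand-ins in a temporary directory. The file names and contents are assumptions, not the lesson's molecule files.

```shell
sandbox=$(mktemp -d)
cd "$sandbox"
# Three made-up .pdb stand-ins with 3, 1 and 2 lines
printf 'a\nb\nc\n' > long.pdb
printf 'a\n'       > short.pdb
printf 'a\nb\n'    > medium.pdb

# Step by step, with intermediate files
wc -l *.pdb > lengths.txt
sort -n lengths.txt > sorted-lengths.txt
head -n 1 sorted-lengths.txt

# The same result as one pipeline, with no intermediate files
shortest=$(wc -l *.pdb | sort -n | head -n 1)
echo "$shortest"
```

Both approaches report `short.pdb`, the file with the fewest lines. Swapping `head -n 1` for `tail -n 2` would show the longest file along with the `total` line that `wc` adds.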

- start in her home directory (`users/Nelle`)

`cd north-pacific-gyre/2012-07-03`

- all files should contain same amount of data

- any files contain too little data?

wc -l *.txt | sort -n | head -5

- any files contain too much data?

wc -l *.txt | sort -n | tail -5

- file marked with Z? outside naming convention, may contain missing data

ls *Z.txt

- records note no depth recorded for these samples

- may not want to remove, but will later select all other files using `*[AB].txt`

- Socrative questions 5 and 6
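A quick way to see what the `[AB]` pattern selects is to `echo` the wildcard and let the shell expand it. The sketch below uses empty files with illustrative names that follow the lesson's naming pattern; the `sandbox` directory is an assumption.

```shell
sandbox=$(mktemp -d)
cd "$sandbox"
# Empty stand-in files; names are illustrative only
touch NENE01729A.txt NENE01729B.txt NENE01971Z.txt

flagged=$(echo *Z.txt)        # only the file ending in Z
usable=$(echo *[AB].txt)      # files ending in A or B; the Z file is skipped

echo "flagged: $flagged"
echo "usable:  $usable"
```

`[AB]` matches exactly one character, which must be either `A` or `B`, so the `Z` file never reaches whatever command receives the list.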

Objectives: write loops that apply commands to series of files, trace values in loops, explain variables vs values, why spaces and punctuation shouldn't be used in file names, history, executing commands again

- what if you wanted to perform the same commands over and over again on multiple files?

- suppose we have several hundred genome data files named xxx.dat, bbb.dat, etc.

- go to creatures directory `data-shell/creatures`

- may try: `cp *.dat original-*.dat`

- but doesn't work. Why?

- really you are saying this: `cp basilisk.dat unicorn.dat original-*.dat`

- problem is that when `cp` receives more than two arguments, it expects the last one to be a directory in which to copy the files

- you can perform these operations using a loop

- first, let's look at the first three lines in each file

for filename in basilisk.dat unicorn.dat

do

head -3 $filename

done

- what does this look like when you arrow back up?

- explain syntax: filename is variable, what does it stand for? how is it represented later?

- shell prompt changes; if you get stuck, use `control+c` to get out

- can specify whatever variable name you want

- why might it be problematic to have filenames with spaces?

- you can include multiple commands in a loop:

for filename in *.dat

do

echo $filename

head -100 $filename | tail -20

done

- use of wildcard. what does echo do? why is this useful for loops?

`echo Hey you`

- strategy: use the `echo` command before running to make sure the loop is functioning the way you expect

- write a for loop to resolve the original problem of creating a backup (copy of original data)

- going back to the original file copying problem, we can solve this with the following loop:

for filename in *.dat

do

cp $filename original-$filename

done

- nelle's example:

`cd north-pacific-gyre/2012-07-03`

- check:

`for datafile in *[AB].txt; do echo $datafile; done`

- add command:

`for datafile in *[AB].txt; do echo $datafile stats-$datafile; done`

- add command:

<!-- for datafile in *[AB].txt; do goostats $datafile stats-$datafile; done -->

- (kill job using ^C)

- add echo:

`for datafile in *[AB].txt; do echo $datafile; goostats $datafile stats-$datafile; done`

- tab completion; move to start of line using `^A` and end of line using `^E` (option with arrows to move by one word)

- history: see old commands, find line number (repeat using `!number`)
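The backup loop and the question about spaces in file names can be combined into one sketch. It is not the lesson's data: the `.dat` stand-ins are created in a temporary directory, and one name deliberately contains a space. It also quotes the variable, which the loops above do not.

```shell
sandbox=$(mktemp -d)
cd "$sandbox"
# Stand-in data files; the second name contains a space on purpose
printf 'COMMON NAME: basilisk\n'   > basilisk.dat
printf 'COMMON NAME: red dragon\n' > "red dragon.dat"

# Dry run: echo the command first to check what the loop would do
for filename in *.dat
do
    echo cp "$filename" "original-$filename"
done

# Real run: quoting "$filename" keeps a name with a space as one argument
for filename in *.dat
do
    cp "$filename" "original-$filename"
done

ls
```

Without the double quotes, `red dragon.dat` would be split into two arguments, `red` and `dragon.dat`, and `cp` would fail. That is the practical reason to avoid spaces in file names.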

Objectives: write shell script to run command or series of commands for fixed set of files, run shell script from command line, write shell script to operate on set of files defined on command line, create pipelines including user-written shell scripts

- go back to molecules in nelle's directory

- create file called `middle.sh` and add this command: `head -15 octane.pdb | tail -5`

`bash middle.sh`

- `.sh` means it's a shell script

- very important to make these in a text editor, rather than in Word!

- edit `middle.sh` and replace file name with `"$1"`

- quotations accommodate spaces in filenames

- `bash middle.sh octane.pdb`, should get same output

- try another file: `bash middle.sh pentane.pdb`

- edit `middle.sh` with `head "$2" "$1" | tail "$3"`

`bash middle.sh pentane.pdb -20 -5`

- to remember what you've done, and allow for other people to use: add comments to top of file

#select lines from middle of a file

#usage: middle.sh filename -end_line -num_lines

- explain comments

- how would we use the `for` loop?

- what if we wanted to operate on many files? create new file: `sorted.sh`

- `wc -l "$@" | sort -n`

- unix special parameters: `"$@"` stands for all of the script's arguments

- `bash sorted.sh *.pdb ../creatures/*.dat`

- add comment!

- save last few lines of history to file to remember how to do work again later:

- `history | tail -4 > redo-figure.sh`

- `history | tail -5 | colrm 1 7` (removes characters 1-7, the history line numbers)

- nelle problem

- run goostats on all data files

- `do-stats.sh`:

#calculate reduced stats for data files at J = 100 C/bp

for datafile in "$@"

do

echo $datafile

bash goostats -J 100 -r $datafile stats-$datafile

done

- `bash do-stats.sh *[AB].txt`

- or just report `bash do-stats.sh *[AB].txt | wc -l`

- Socrative question 9
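Both scripts can be created and run in one go to show `"$1"`, `"$2"`, `"$3"` and `"$@"` in action. This is a sketch under assumptions: `numbers.txt` is a made-up stand-in for a `.pdb` file, and this variant of `middle.sh` takes plain numbers and passes them to `head -n` and `tail -n`, where the version above passes `-20 -5` directly.

```shell
sandbox=$(mktemp -d)
cd "$sandbox"
seq 1 30 > numbers.txt          # stand-in for a .pdb file: 30 numbered lines

# Write middle.sh; the quoted 'EOF' keeps $1, $2, $3 literal inside the script
cat > middle.sh <<'EOF'
# select lines from the middle of a file
# usage: bash middle.sh filename end_line num_lines
head -n "$2" "$1" | tail -n "$3"
EOF

# Write sorted.sh; "$@" stands for every argument, each kept as one word
cat > sorted.sh <<'EOF'
# sort files by their line count
# usage: bash sorted.sh one_or_more_filenames
wc -l "$@" | sort -n
EOF

middle=$(bash middle.sh numbers.txt 15 5)
echo "$middle"
bash sorted.sh numbers.txt middle.sh
```

The first call prints lines 11 through 15 of `numbers.txt`. The second lists both files ordered by line count.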

Objectives: grep to select lines in text which match patterns, find to find files whose names match patterns, nesting files, text vs binary files

- move to writing subdirectory

`cat haiku.txt`

- `grep not haiku.txt`: find lines that contain "not"

- `grep day haiku.txt`: find lines that contain "day"

- `grep -w day haiku.txt`: searches only for whole words

- `grep -n it haiku.txt`: includes the numbers on lines that match

- `grep -n -w the haiku.txt`: combine flags

- `grep -n -w -i the haiku.txt`: make case insensitive

- `grep -n -w -v the haiku.txt`: invert selection, only lines that do NOT contain "the"

- the real strength of grep, and the origin of its name, is "regular expressions," which are ways of programmatically describing text strings/search patterns, but we don't have time to cover these today (many awesome lessons and tutorials online)

difference between grep and find

- `find . -type d`: look for things that are directories in given path

- `find . -type f`: look for files instead

- find is automatically recursive (keeps drilling down into file hierarchy)

- can specify depth: `find . -maxdepth 1 -type f`

- or `-mindepth`

- can match by name: `find . -name *.txt` (will only give one filename! the shell expands `*.txt` prior to running the command)

- correct way: `find . -name '*.txt'`

- find is similar to `ls` (list), but has more refined parameter searching

- can combine together: count all lines in a group of files

- `wc -l $(find . -name '*.txt')`

- nesting or subshell

- equivalent command: `wc -l ./data/one.txt ./data/two.txt ./haiku.txt`

- can also combine find and grep: find .pdb files that contain iron

`grep FE $(find .. -name '*.pdb')`

- today we've only talked about text files, what about images, databases, etc? those are binary (machine readable)

- Socrative question 10

- stop shell script output
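The quoting rule for `find -name` and the nesting of `find` inside `grep` can be checked on a tiny directory tree. The files below are made up for illustration in a temporary `sandbox`, and the `-l` flag (print only the names of matching files) is an addition not used earlier in these notes.

```shell
sandbox=$(mktemp -d)
cd "$sandbox"
mkdir data
printf 'one\ntwo\n'                > data/one.txt
printf 'Today it is not working\n' > haiku.txt
printf 'not text\n'                > notes.md

# Quote the pattern so find, not the shell, expands the *
txt_files=$(find . -name '*.txt' | sort)
echo "$txt_files"

# Nest find inside grep: search only the files that find reports
# (assumes file names without spaces, since the output is split into words)
matches=$(grep -l not $(find . -name '*.txt'))
echo "$matches"
```

`notes.md` also contains "not" but is never searched, because `find` only hands the `.txt` files to `grep`.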