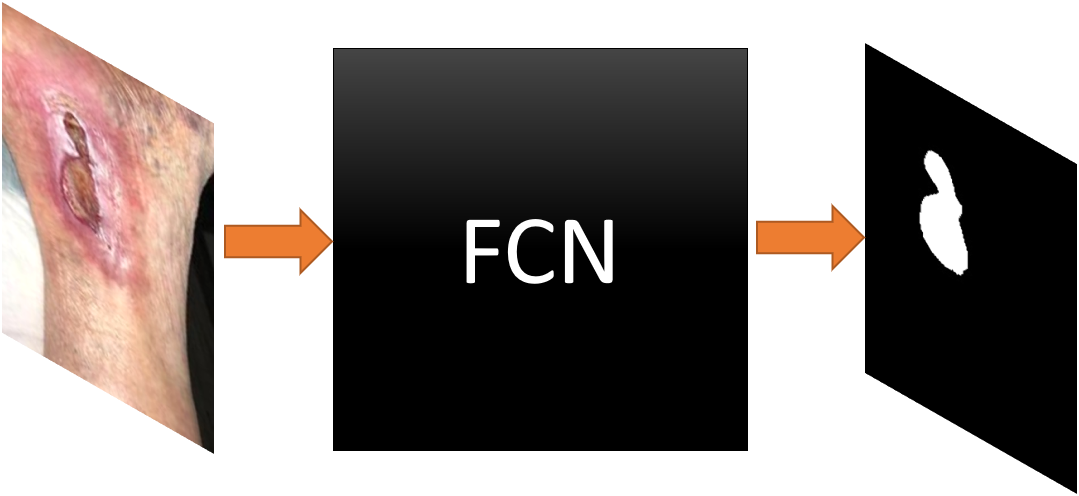

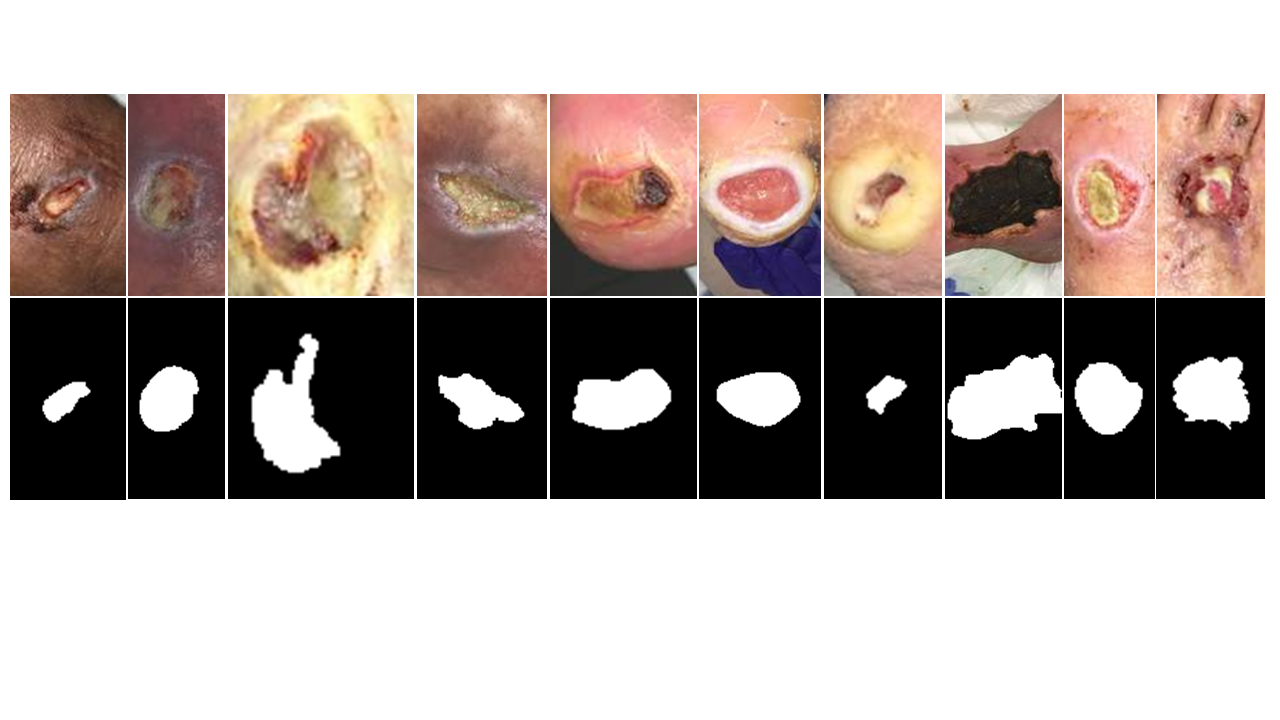

This project aims to segment wound areas from natural images taken in clinical settings. The architectures tested so far include U-Net, MobileNetV2, Mask-RCNN, SegNet, and VGG16.

C. Wang, D.M. Anisuzzaman, V. Williamson, M.K. Dhar, B. Rostami, J. Niezgoda, S. Gopalakrishnan, and Z. Yu, “Fully Automatic Wound Segmentation with Deep Convolutional Neural Networks”, Scientific Reports, 10:21897, 2020. https://doi.org/10.1038/s41598-020-78799-w

The training dataset was built by our lab and our collaborating clinic, the Advancing the Zenith of Healthcare (AZH) Wound and Vascular Center. With their permission, we are sharing this dataset (./data/wound_dataset/) publicly. The dataset was fully annotated by wound professionals and preprocessed with cropping and zero-padding.
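To illustrate the zero-padding step mentioned above, here is a minimal sketch using NumPy. It is not the preprocessing code used to build the dataset: the function name `zero_pad_to_size`, the choice to centre the crop, and the 8 x 8 target size are all assumptions made for the example.

```python
import numpy as np


def zero_pad_to_size(image: np.ndarray, target_h: int, target_w: int) -> np.ndarray:
    """Pad an H x W (x C) image with zeros so it sits centred in a target_h x target_w canvas.

    Hypothetical helper for illustration; centring is an assumption, the
    dataset's own preprocessing may place the crop differently.
    """
    h, w = image.shape[:2]
    if h > target_h or w > target_w:
        raise ValueError("image is larger than the target size; crop it first")
    top = (target_h - h) // 2
    left = (target_w - w) // 2
    # Pad only the two spatial axes; leave any channel axis untouched.
    pad = [(top, target_h - h - top), (left, target_w - w - left)]
    pad += [(0, 0)] * (image.ndim - 2)
    return np.pad(image, pad, mode="constant", constant_values=0)


# A small all-white "crop" stands in for a cropped wound image.
crop = np.full((3, 5, 3), 255, dtype=np.uint8)
padded = zero_pad_to_size(crop, 8, 8)
print(padded.shape)
```

The same function works on single-channel masks, so an image and its annotation can be padded identically and stay aligned.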

3/12/2021:

The dataset is now available as a MICCAI online challenge. The training and validation datasets are published here, and we will start evaluating on the testing dataset in August 2021. Please find more details about the challenge here and here.

12/13/2021:

The code has been updated to be compatible with TensorFlow 2.x.

tensorflow==2.6.0

python3 train.py

python3 predict.py

Thanks to the AZH Wound and Vascular Center for providing the data and for their great help with the annotations. MobileNetV2 is forked from here. Some utility code is forked from here.