Interpolation

Linear interpolation is a method to construct new data points within the range of a discrete set of known data points.

This method allows estimating intermediate values between two known points, assuming there's a straight line between the two known points. A coefficient "alpha", with a range from 0 to 1, is used to determine how close the estimated point should be to either one of the known points, which can be formulated as: interpolation = (1 - alpha) * A + alpha * B, where a higher value of alpha makes the interpolation closer to B and smaller values closer to A.

In regards to machine learning models, it has been tested that it's possible to consider models as if they exist in a Euclidean space and the filter's weight can be interpolated to obtain intermediate values, linearly combining the transformations that both models produce.

In order for two models to be compatible for interpolation, they have to belong to the same "family tree", so that their filters positions are correlated. A simple way to achieve this is to use models that were trained using the same pretrained model or are derivates of the same pretrained model.

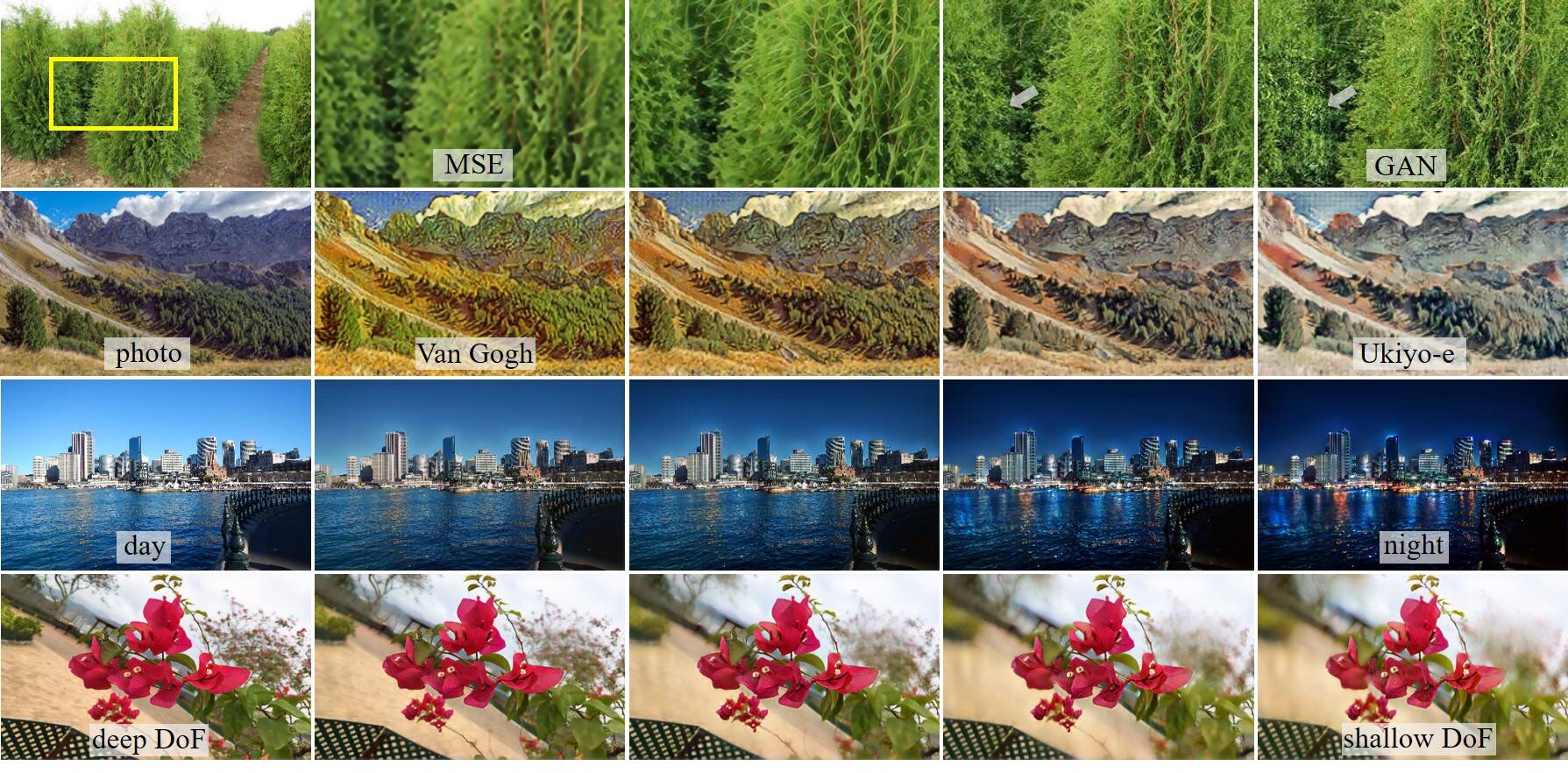

The original author of ESRGAN and DNI demonstrated this capability by interpolating the PSNR pretrained model (which is not perceptually good, but results in smooth images) with the ESRGAN resulting models that have more details but sometimes is excessive to control a balance in the resulting images, instead of interpolating the resulting images from both models, giving much better results.

The authors continued exploring the capabilities of linearly interpolating models of different architectures in their new paper "DNI" (CVPR19): Deep Network Interpolation for Continuous Imagery Effect Transition with very interesting results and examples. A script for interpolation can be found in the net_interp.py file, but a new version with more options will be committed at a later time. This is an alternative to create new models without additional training and also to create pretrained models for easier fine tuning.

Additionally, it is possible to combine more than two models with varying alpha coefficients in every case allowing to explore the parameter space with different models for fine-tuning. A simple way to average all models within a directory is using the script at dir_interp.py.

Lastly, random splicing of models' filters is being explored to introduce random mutations to models, using net_splice.py.

In order to facilitate model interpolations at any scale (and between any scale, more on that later), I transformed the original ESRGAN model at 1x, 2x, 8x and 16x scales so they can be used as pretrained model for any of those scales, instead of starting from scratch AND will be compatible to interpolate with other models. I have run a small training session (1k iterations with 65k images), because the final upscale layers have to set in (adjust) at the new scale, but 99.9% of the model is the same as basic ESRGAN.

As has been mentioned before, an important advantage of using a pretrained model instead of starting from scratch is that the task will become only finetuning, so it will require less time/images to train. As some have also tested/show, higher scales are much more difficult for the model to learn, so even a model converted from ESRGAN struggles with those scales, but at least it's closer to the objective than doing it from scratch. 1x and 2x could be used as is, but it's better if finetuned. 8x and 16x do need further finetuning.

For reference, the PSNR for each scale after those 1k iterations was: