
Connection issues with NetKVM + Windows Server 2022 combination #583


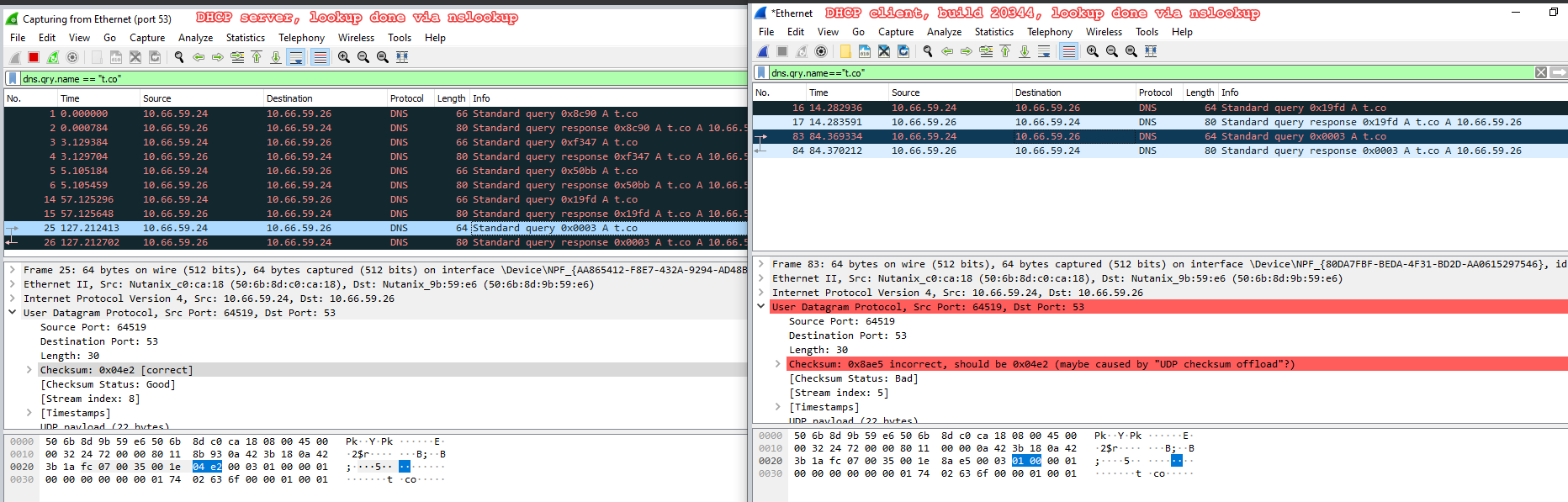

Hello Olli, thank you for reporting this issue. I had a quick look and can indeed reproduce the same behavior with Windows Server 2022 (preview build 20344), while the previous preview build does not have this issue. I found that disabling Offload.Tx.Checksum mitigates the issue. Could you verify that it works for you as well? You can disable Tx checksum offloading via Device Manager -> Network Adapters -> open the properties of "Nutanix VirtIO Ethernet Adapter" -> find the "Offload.Tx.Checksum" parameter in the list on the 'Advanced' tab, then change "Value" to "Disabled" or "TCP(v4)". BR,

@sb-ntnx Yes, that looks like a valid workaround. Thanks, I will use it in my lab then.

I tried to reproduce it on my host but could not. QEMU version: qemu-kvm-6.0.0-16.module+el8.5.0+10848+2dccc46d.x86_64. The qemu command line: Related files:

@sb-ntnx Hi Sergey, based on the previous comment, do you think the issue is specific to AHV? Thanks,

Hi @YanVugenfirer, unfortunately I have not had time to look into the issue yet. I hope to take a further look later this week. BR,

Just thought I would share that I am encountering this issue with the Server 2022 image as well. I am working around it by disabling Tx checksum offload on the NetKVM adapters:

```powershell
Get-NetAdapter |
    Where-Object DriverFileName -eq 'netkvm.sys' |
    Set-NetAdapterAdvancedProperty -RegistryKeyword 'Offload.TxChecksum' -RegistryValue 0
```

Sorry for the delay. It has been fairly busy recently, so I have not yet had time to investigate the issue thoroughly. However, I tested with qemu-6.0.0 (on AHV) and got the same behavior. BR,

@sb-ntnx Can you please specify the Linux kernel version?

@jborean93 What was the host OS when you ran VirtualBox? |

It was Fedora 34, the current kernel is |


@YanVugenfirer: The latest AHV kernel that I tested was based on 5.4.109.

@leidwang, thank you for the tests. |


@sb-ntnx |

…ET_BUFFER can be greater than the size of the NET_BUFFER itself. As a result, the size of the packet can be calculated incorrectly. Instead of using the sum of the MDL lengths, we can cap the maximum length at the size of the NET_BUFFER.

Bug: #583
Signed-off-by: Sergey Bykov <sergey.bykov@nutanix.com>
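
To make that commit message concrete, here is a simplified C sketch of the length calculation. It is not the NetKVM source: `struct mdl` and `struct net_buffer` are hypothetical stand-ins for the NDIS `MDL` chain and `NET_BUFFER`, reduced to a byte count per MDL and a data length per buffer, and the data offset into the first MDL is ignored.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical, simplified stand-in for one entry in a Windows MDL chain. */
struct mdl {
    size_t byte_count;   /* length of the memory this MDL describes */
    struct mdl *next;    /* next MDL in the chain, or NULL */
};

/* Hypothetical stand-in for NET_BUFFER: an MDL chain plus the number of
 * bytes that actually belong to the packet (offsets are ignored here). */
struct net_buffer {
    struct mdl *first_mdl;
    size_t data_length;
};

/* Pre-fix approach: sum every MDL length. This over-reports the packet size
 * whenever the chain describes more memory than the packet occupies. */
static size_t packet_length_uncapped(const struct net_buffer *nb)
{
    size_t total = 0;
    for (const struct mdl *m = nb->first_mdl; m != NULL; m = m->next)
        total += m->byte_count;
    return total;
}

/* Post-fix approach: same walk, but never report more than data_length. */
static size_t packet_length_capped(const struct net_buffer *nb)
{
    assert(nb != NULL);
    size_t total = packet_length_uncapped(nb);
    return total < nb->data_length ? total : nb->data_length;
}
```

For example, an MDL chain describing 1500 + 600 bytes for a NET_BUFFER that carries 1800 bytes of data yields 2100 with the uncapped sum but 1800 with the cap.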

@YanVugenfirer: I guess we can close this bug, since the issue is understood and the PR has been merged upstream? @olljanat: We will include this change in the VirtIO 1.1.7 suite. We cannot commit to a release date, but we will try to expedite it.

Yes, I will close it. Thanks a lot for the PR.

FYI, @sb-ntnx's fix is included in Fedora's 0.1.208-1 release of the |


This caused a crash in SmartOS: TritonDataCenter/illumos-kvm-cmd#25. Moreover, it affects all Windows versions, not just Windows Server 2022, because this condition is hit when using WSK (Winsock Kernel), which is used by various system components and drivers such as WireGuardNT. The impact isn't just that networking breaks: it also appears to leak uninitialized kernel memory onto the network, which could contain secrets. Therefore, you might want to promote this to a security release and push it out via Windows Update.
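
A rough illustration of how an over-long length could leak memory: if the driver copies as many bytes as the MDL describes rather than the NET_BUFFER data length, whatever stale bytes follow the packet end up in the transmitted frame. This is a hypothetical single-MDL model (`struct tx_source`, `copy_to_frame`, `len_before_fix` and `len_after_fix` are invented for illustration), not how NetKVM or WSK actually lay out their buffers.

```c
#include <assert.h>
#include <stddef.h>
#include <string.h>

/* Hypothetical model: a buffer whose first data_length bytes are the packet;
 * any bytes after that are stale memory left over from earlier use. */
struct tx_source {
    const unsigned char *buf;  /* memory described by the single MDL */
    size_t mdl_length;         /* bytes the MDL claims to cover */
    size_t data_length;        /* bytes the NET_BUFFER says are packet data */
};

/* Copy len bytes into the outgoing frame, bounded by the MDL and the frame
 * capacity. Returns how many bytes would be put on the wire. */
static size_t copy_to_frame(const struct tx_source *src, size_t len,
                            unsigned char *frame, size_t frame_cap)
{
    assert(src != NULL && frame != NULL);
    if (len > src->mdl_length)
        len = src->mdl_length;
    if (len > frame_cap)
        len = frame_cap;
    memcpy(frame, src->buf, len);
    return len;
}

/* Length taken from the MDL alone, as before the fix. */
static size_t len_before_fix(const struct tx_source *src)
{
    return src->mdl_length;
}

/* Length capped at the NET_BUFFER data length, as after the fix. */
static size_t len_after_fix(const struct tx_source *src)
{
    return src->mdl_length < src->data_length ? src->mdl_length
                                              : src->data_length;
}
```

With a 10-byte buffer holding `PINGSECRET` and a data length of 4, the pre-fix length copies all 10 bytes, including `SECRET`, while the capped length copies only `PING`.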

@zx2c4 Thanks. |


I can confirm that this is an issue on Nutanix AHV with Windows Server 2022. Disabling Offload.Tx.Checksum did mitigate the issue.

@wioxjk As mentioned in #583 (comment), the fix was delivered in Nutanix VirtIO 1.1.7.

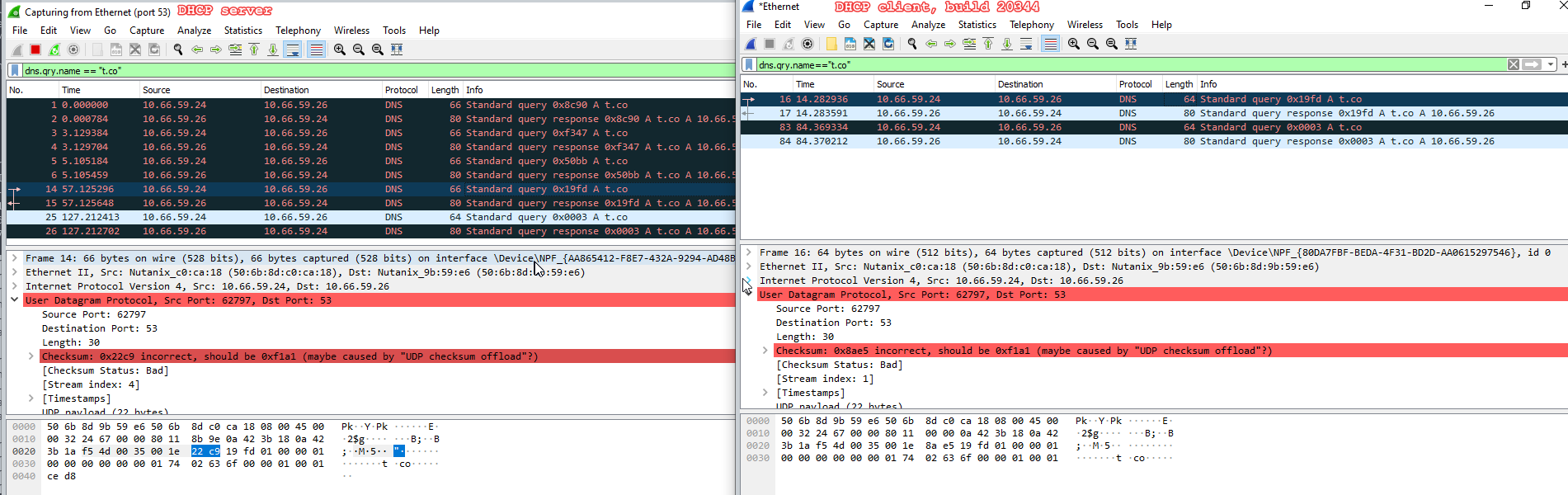

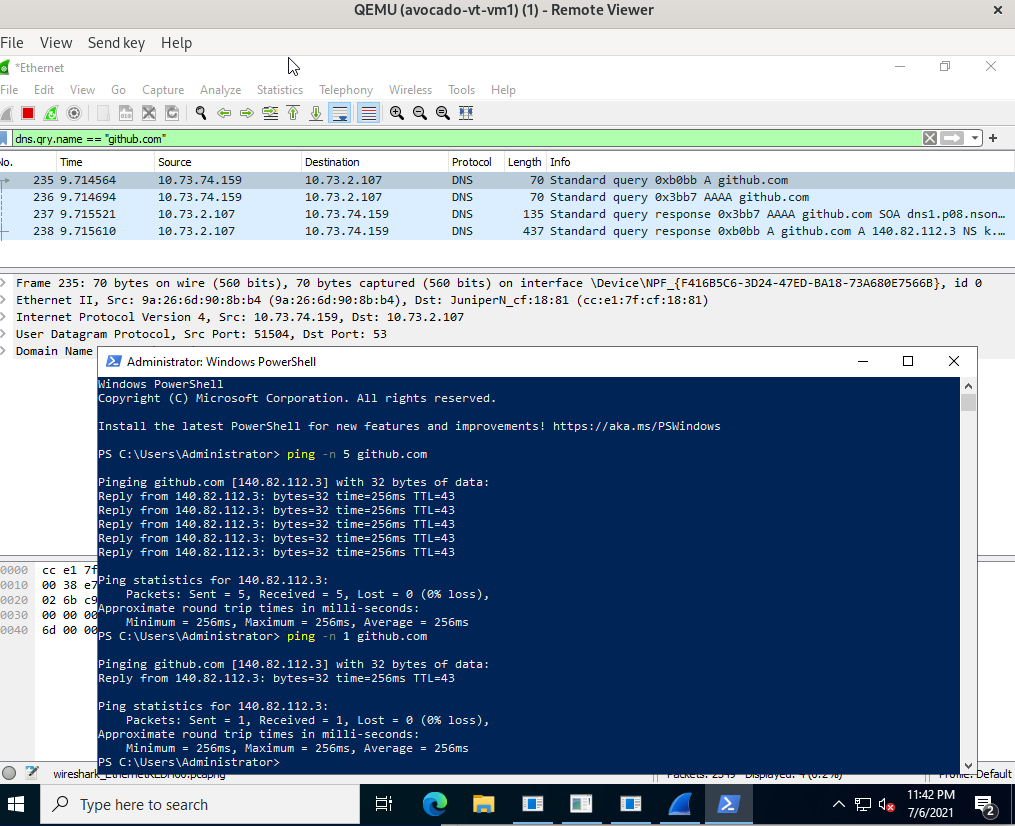

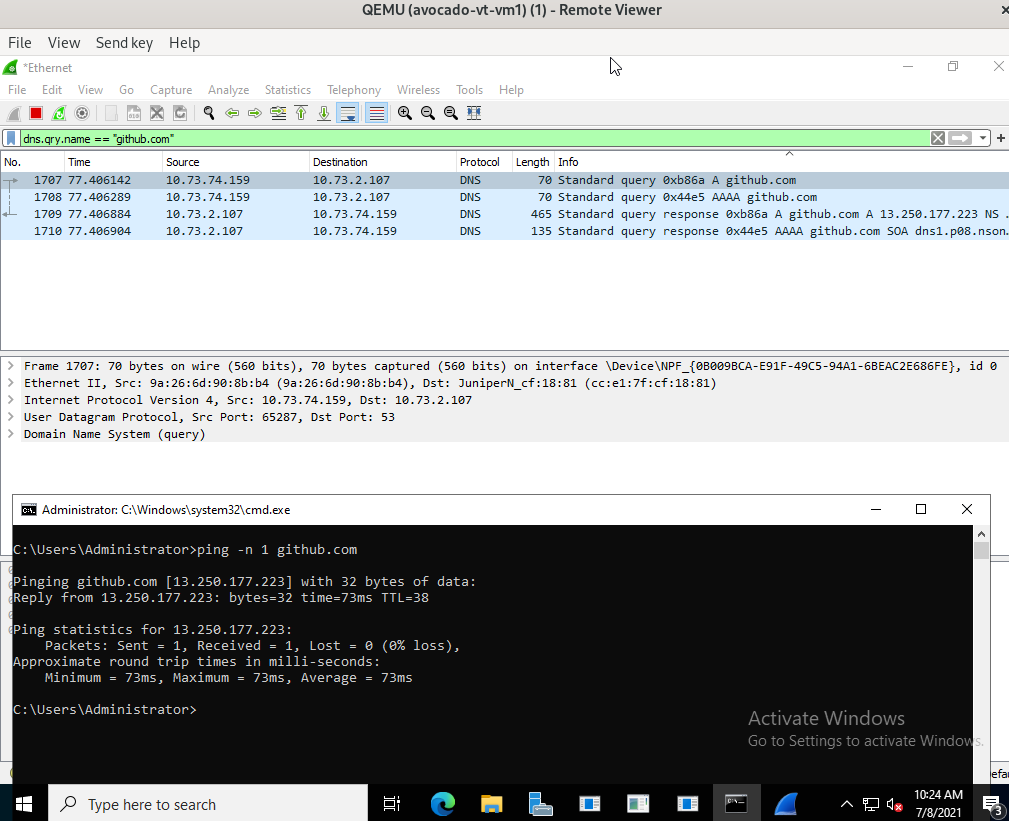

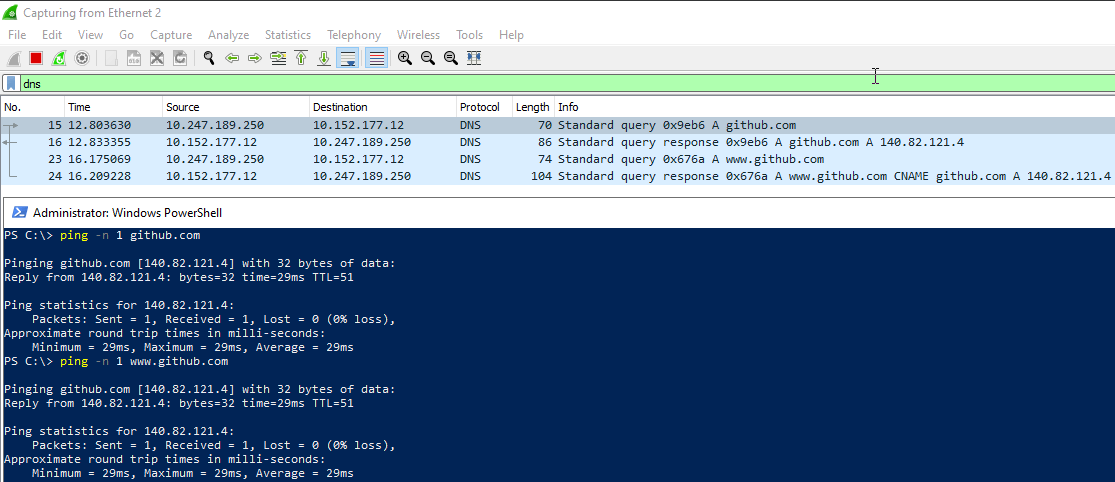

It looks like something has changed in Windows Server 2022 (preview build 20344) compared to Windows Server 2019 (version 1809), because the NetKVM driver does not work correctly on it.

The issues I have seen are:

If I switch to the emulated Intel e1000 NIC, those issues disappear:

It is also worth mentioning that I run this test VM on the Nutanix AHV platform, so if others are not able to reproduce the issue, it might be platform specific (even though it looks like a driver + Windows compatibility issue to me). I tested with both the latest Nutanix-packaged driver and 0.1.190 from here, and both have the same issue.

PS. I also reported this on the Windows Server Insiders forum: https://techcommunity.microsoft.com/t5/windows-server-insiders/connection-issues-with-netkvm-windows-server-2022-combination/m-p/2371881
