
OpenAI Gym

OpenAI Gym is a framework and the de facto standard API for reinforcement learning environments. Reinforcement learning is a branch of machine learning concerned with making sequences of decisions that maximize the cumulative reward.

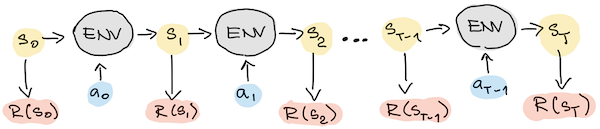

The picture above shows a typical episode in an environment. At each time-step, the environment ENV receives an action a computed by the agent and returns a reward R and a new observation s. The cycle then repeats at the next time-step.
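The agent-environment cycle can be sketched without the game at all. The `ToyTrackEnv` class and `run_episode` function below are hypothetical stand-ins, not part of autodrome: a "truck" moves along a one-dimensional track, `step` returns an `(observation, reward, done, info)` tuple in the style of the classic gym API, and the loop keeps acting until `done` is set.

```python
class ToyTrackEnv:
    """Hypothetical toy environment: a truck driving along a 1-D track.

    It only imitates the reset()/step() call pattern; it has nothing to do
    with the real game.
    """

    def __init__(self, track_length=10.0):
        self.track_length = track_length
        self.position = 0.0

    def reset(self):
        self.position = 0.0
        return self.position  # initial observation s

    def step(self, action):
        # The action a is how far the agent asks to move in this time-step.
        old_position = self.position
        self.position += action
        reward = self.position - old_position  # reward R for this time-step
        done = self.position >= self.track_length  # reaching the end stands in for a crash
        return self.position, reward, done, {}  # (s, R, done, info)


def run_episode(env, policy):
    """Run the act-observe cycle until the episode ends and sum the rewards."""
    total_reward, done = 0.0, False
    state = env.reset()
    while not done:
        action = policy(state)
        state, reward, done, info = env.step(action)
        total_reward += reward
    return total_reward


print(run_episode(ToyTrackEnv(10.0), lambda s: 1.0))  # 10.0
```

The policy is passed in as a plain function so that the loop stays the same whichever agent is plugged in.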

You must import autodrome.envs before calling the gym.make(...) method to create an instance of the environment. This is necessary because OpenAI Gym environments are registered dynamically at runtime. Currently, one environment is available for each game: ATS-Indy500-v0 and ETS2-Indy500-v0. Both environments consist of a simple circular track with railings that damage the truck when hit. The episode ends as soon as the truck gets damaged.
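Why the import matters can be illustrated with a small pure-Python imitation of an environment registry. Gym's own mechanism is `gym.envs.registration.register`; the `_REGISTRY`, `register`, `make` and `DummyIndy500Env` names below are hypothetical stand-ins, not gym or autodrome code. The sketch shows that an ID only becomes known to `make` after the code calling `register` has run, which for a package means after the package has been imported.

```python
from typing import Callable, Dict

# Hypothetical global registry, standing in for the one gym keeps internally.
_REGISTRY: Dict[str, Callable[[], object]] = {}


def register(env_id: str, entry_point: Callable[[], object]) -> None:
    """Record a factory under an ID; in a real package this runs at import time."""
    if env_id in _REGISTRY:
        raise ValueError(f"{env_id} is already registered")
    _REGISTRY[env_id] = entry_point


def make(env_id: str) -> object:
    """Look up an ID and build a fresh environment instance."""
    try:
        factory = _REGISTRY[env_id]
    except KeyError:
        raise KeyError(f"unknown environment {env_id!r}; was its package imported?") from None
    return factory()


class DummyIndy500Env:
    """Placeholder class; not the real autodrome environment."""

    def __init__(self, game: str) -> None:
        self.game = game


# In a real package these calls would live in a module such as envs/__init__.py,
# so the IDs exist only once that module has been imported.
register("ATS-Indy500-v0", lambda: DummyIndy500Env("ATS"))
register("ETS2-Indy500-v0", lambda: DummyIndy500Env("ETS2"))

env = make("ETS2-Indy500-v0")
print(env.game)  # ETS2
```

Storing factories rather than instances means every `make` call returns a new, independent environment.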

Here is example code that runs a single episode in the ETS2 version of the environment. It uses a suicidal agent that floors the throttle and doesn't bother steering.

```python
import gym
import numpy as np

import autodrome.envs  # registers the Autodrome environments with gym

env = gym.make('ETS2-Indy500-v0')  # or 'ATS-Indy500-v0'

done, state = False, env.reset()
while not done:  # Episode ends when the truck crashes
    action = np.array([1, 2])  # Straight and Full Throttle :)
    state, reward, done, info = env.step(action)
    env.render()  # Display map of the world and truck position
```

The following video shows how the code is executed. The env.render() call displays a small window with a 2D map of the game world and the current truck position.

The env.step(...) function consumes an action a and returns an observation s, a reward R, a done flag and an info dictionary, in that order.

The reward R is the distance travelled during the executed time-step, and rewards keep accumulating until the truck crashes. This reward function is meant to encourage the agent to drive as fast as possible and for as long as possible, since a longer drive yields a larger cumulative reward.
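The relationship between per-step rewards and the cumulative reward can be sketched from a list of truck positions. This assumes, purely for illustration, that positions are 2D `(x, y)` points sampled once per time-step and that the distance per step is the straight-line distance between them; `step_rewards` and `episode_return` are hypothetical helpers, not autodrome functions.

```python
import math
from typing import Iterable, List, Tuple

Point = Tuple[float, float]


def step_rewards(positions: Iterable[Point]) -> List[float]:
    """Per-step reward: straight-line distance between consecutive positions."""
    pts = list(positions)
    return [math.dist(a, b) for a, b in zip(pts, pts[1:])]


def episode_return(positions: Iterable[Point]) -> float:
    """Cumulative reward: total distance driven before the episode ended."""
    return sum(step_rewards(positions))


trajectory = [(0.0, 0.0), (3.0, 4.0), (6.0, 8.0)]
print(step_rewards(trajectory))  # [5.0, 5.0]
print(episode_return(trajectory))  # 10.0
```

Under this scheme, a truck that drives faster covers more distance per step, and one that survives longer collects more steps, so both behaviours raise the return.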

The action space that a belongs to is a discrete set of controls corresponding to pressing the keyboard arrow keys. The space is an instance of the gym.spaces.MultiDiscrete class. Shifting, clutch and other truck controls are set to automatic and are handled by the logic built into the game. The two components have the following meaning:

- Component 0: 0 = ← left, 1 = → right
- Component 1: 0 = ↑ throttle, 1 = ↓ brake
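A two-component discrete action can be decoded into key presses with a pair of lookup tables. This sketch assumes each component takes exactly the values listed above (the real environment's ranges may differ); the `STEERING`, `PEDALS`, `describe` and `all_actions` names are hypothetical and not part of autodrome. It also enumerates every action such a space admits, which is the Cartesian product of the per-component choices.

```python
from itertools import product
from typing import Dict, List, Tuple

# Meanings copied from the component list above; the tables themselves are
# a hypothetical helper, not autodrome code.
STEERING: Dict[int, str] = {0: "left", 1: "right"}
PEDALS: Dict[int, str] = {0: "throttle", 1: "brake"}


def describe(action: Tuple[int, int]) -> Tuple[str, str]:
    """Translate a (steering, pedal) action into the arrow keys it stands for."""
    steer, pedal = action
    return STEERING[steer], PEDALS[pedal]


def all_actions() -> List[Tuple[int, int]]:
    """Every combination a multi-discrete space with these component sizes allows."""
    return list(product(range(len(STEERING)), range(len(PEDALS))))


for a in all_actions():
    print(a, describe(a))
```

Because the components are independent, the number of joint actions is the product of the component sizes, here 2 × 2 = 4.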

The observation space consists of raw pixels of RGB screen images with a resolution of 640x480. Autodrome automatically switches to the front camera view before starting the episode. This view is ideal for reinforcement learning because no truck geometry obscures it, so all pixels convey useful information about the game world.
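Raw 640x480 RGB frames are large inputs for a learning agent, so a common practice is to convert them to grayscale and downsample them before feeding them to a model. The sketch below assumes an observation arrives as a NumPy `uint8` array of shape `(480, 640, 3)` (rows, columns, channels); the `preprocess` function and its `factor` parameter are hypothetical, not something autodrome provides. It uses the ITU-R BT.601 luma weights and simple strided downsampling.

```python
import numpy as np


def preprocess(frame: np.ndarray, factor: int = 4) -> np.ndarray:
    """Convert an RGB frame to a smaller grayscale image scaled to [0, 1].

    Assumes `frame` has shape (height, width, 3) with uint8 values.
    """
    if frame.ndim != 3 or frame.shape[2] != 3:
        raise ValueError(f"expected an (H, W, 3) RGB array, got {frame.shape}")
    # Weighted sum of R, G and B (ITU-R BT.601 luma) gives one channel.
    gray = frame.astype(np.float32) @ np.array([0.299, 0.587, 0.114], dtype=np.float32)
    # Keep every `factor`-th pixel along each axis (strided downsampling).
    small = gray[::factor, ::factor]
    return small / 255.0


# A blank stand-in for one 640x480 observation.
frame = np.zeros((480, 640, 3), dtype=np.uint8)
print(preprocess(frame).shape)  # (120, 160)
```

Strided slicing is the cheapest way to shrink a frame; averaging blocks of pixels instead would reduce aliasing at a small extra cost.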

The info dictionary contains an instance of the Policeman class, which holds links to the map and world definitions.