
add web demo/model to Huggingface #2

Comments

@williamyang1991 also, could there be an option in Colab to use the CPU for inference?

@williamyang1991 a web demo was added (https://huggingface.co/spaces/hysts/DualStyleGAN) by https://huggingface.co/hysts on Hugging Face. I made a PR to link it: #3

Thank you for the impressive web demo!

I will modify it when I have free time.

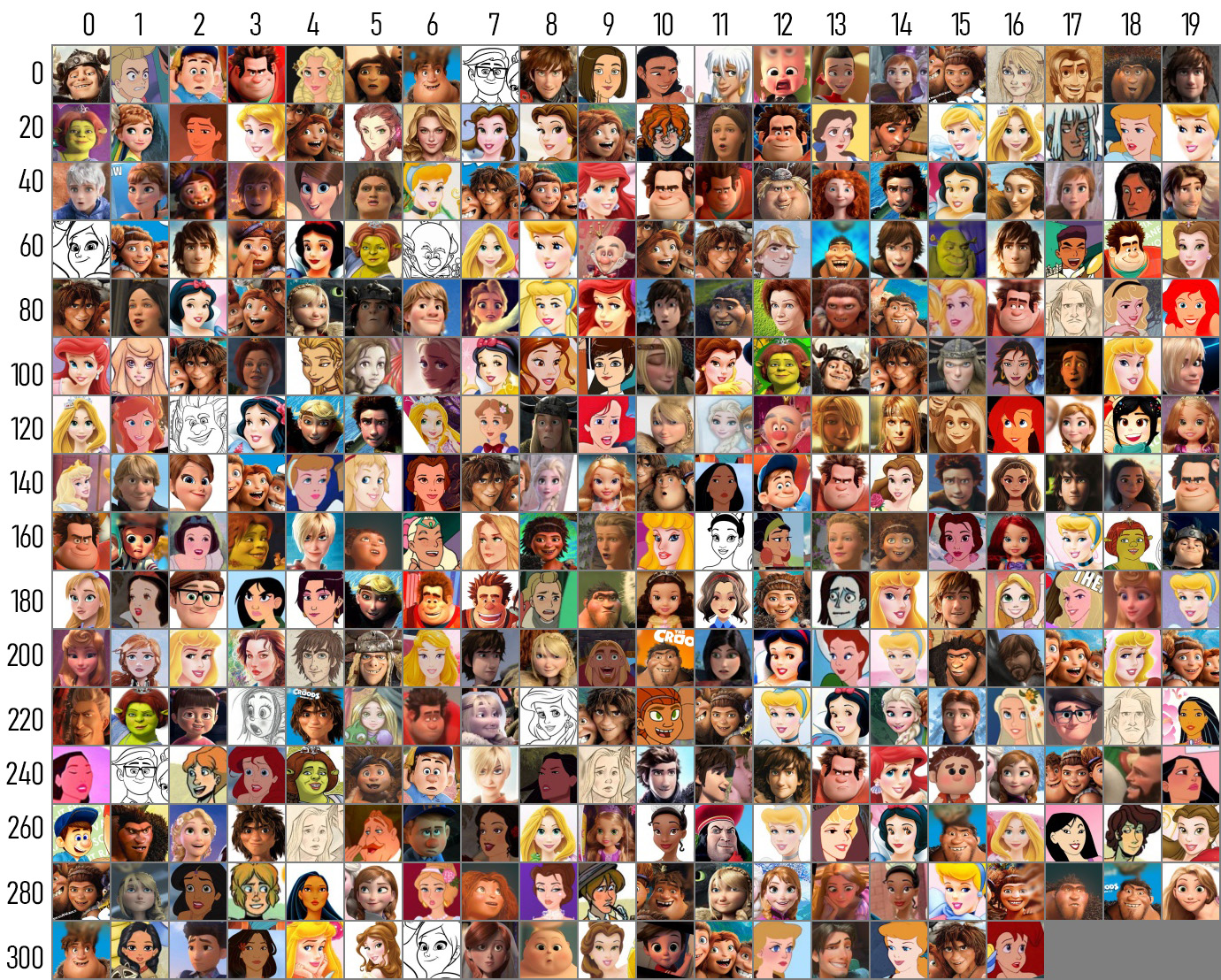

Hi @AK391, it seems there is an issue with your impressive web demo: #4 (comment). I think it would be great if you could kindly add the style overview below to the web demo.

Hi @AK391, I modified the code and successfully ran the notebook on CPU following the instructions in the README:

@williamyang1991 thanks, the demo was set up by a community member: https://huggingface.co/hysts. It was recently updated to add the table.

Hi, awesome work! I wrote the code for the web demo, and happened to find this issue, so I added the style image table you provided. Thank you.

@hysts thanks for setting up the demo, it looks great. Are there plans to add the other styles, for example Arcane, anime, and Slam Dunk?

@AK391 @williamyang1991

@hysts

I see.

I think that would work. By the way, I updated the web demo to include other styles, currently without the reference style images except cartoon.

@williamyang1991

@hysts

@hysts I just saw that the demo is down. Can you restart it by going to Settings -> Restart button?

@AK391

@williamyang1991 tagging you about the memory issue, and @hysts there is another error now: RuntimeError: can't start new thread. Edit: it is back up now.

@AK391 I'm not sure what causes the memory issue. I'm not familiar with the technical implementation of the web demo.

I'm not sure, but I guess it was just a temporary glitch in the Hugging Face Spaces environment, because I did nothing and it's back up now. Also, the app worked just fine in my local environments (macOS and Ubuntu) and in a GCP environment with 4 CPUs and 16 GB RAM, while the Hugging Face Spaces documentation says

Hi, would you be interested in adding DualStyleGAN to Hugging Face? The Hub offers free hosting, and it would make your work more accessible and visible to the rest of the ML community. We can create a username similar to your GitHub one to host the models/Spaces/datasets.

Examples from other organizations:

Keras: https://huggingface.co/keras-io

Microsoft: https://huggingface.co/microsoft

Facebook: https://huggingface.co/facebook

Example spaces with repos:

GitHub: https://github.com/salesforce/BLIP

Spaces: https://huggingface.co/spaces/akhaliq/BLIP

GitHub: https://github.com/facebookresearch/omnivore

Spaces: https://huggingface.co/spaces/akhaliq/omnivore

Here are guides for adding Spaces/models/datasets to your org:

How to add a Space: https://huggingface.co/blog/gradio-spaces

How to add models: https://huggingface.co/docs/hub/adding-a-model

How to upload a dataset: https://huggingface.co/docs/datasets/upload_dataset.html

Please let us know if you would be interested and if you have any questions, we can also help with the technical implementation.
