
Default configuration still runs out of memory with 24 GB of VRAM #4

Comments

|

Thanks for reporting this. Try setting every batch_size to 1 and context_length=32. I'll test the other cases myself. |

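Still out of memory after making those changes. The GPU is an RTX 4090 with 24 GB. Configuration: (if it helps, I can open SSH access so you can investigate) |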

|

I checked and my torch version is 2.0. I switched to 1.13 to see if that helps (still out of memory). |

|

The INT4-quantized model uses far less VRAM. Is it possible to fine-tune the INT4 model directly? |

|

See this configuration: #5 (comment) |

|

torch.cuda.OutOfMemoryError: CUDA out of memory. Tried to allocate 148.00 MiB (GPU 0; 22.38 GiB total capacity; 21.49 GiB already allocated; 87.94 MiB free; 21.52 GiB reserved in total by PyTorch) If reserved memory is >> allocated memory try setting max_split_size_mb to avoid fragmentation. See documentation for Memory Management and PYTORCH_CUDA_ALLOC_CONF |
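
The error message suggests `max_split_size_mb` only when reserved memory is much larger than allocated memory. As a rough check, the sketch below parses the figures from the message above and computes that gap. The helper names `parse_cuda_oom` and `fragmentation_gib` are hypothetical, introduced only for illustration, and the regular expressions assume the message layout shown in this thread.

```python
import re


def _to_gib(value: str, unit: str) -> float:
    """Convert a number reported in MiB or GiB to GiB."""
    return float(value) if unit == "GiB" else float(value) / 1024


def parse_cuda_oom(message: str) -> dict:
    """Extract the memory figures (in GiB) from a PyTorch CUDA OOM message."""
    patterns = {
        "requested": r"Tried to allocate ([\d.]+) (MiB|GiB)",
        "total": r"([\d.]+) (MiB|GiB) total capacity",
        "allocated": r"([\d.]+) (MiB|GiB) already allocated",
        "free": r"([\d.]+) (MiB|GiB) free",
        "reserved": r"([\d.]+) (MiB|GiB) reserved",
    }
    stats = {}
    for name, pattern in patterns.items():
        match = re.search(pattern, message)
        if match is None:
            raise ValueError(f"field {name!r} not found in message")
        stats[name] = _to_gib(match.group(1), match.group(2))
    return stats


def fragmentation_gib(stats: dict) -> float:
    """Memory reserved by the caching allocator but not held by live tensors."""
    return stats["reserved"] - stats["allocated"]


message = (
    "CUDA out of memory. Tried to allocate 148.00 MiB (GPU 0; 22.38 GiB total "
    "capacity; 21.49 GiB already allocated; 87.94 MiB free; 21.52 GiB reserved "
    "in total by PyTorch)"
)
stats = parse_cuda_oom(message)
gap = fragmentation_gib(stats)
print(f"reserved - allocated = {gap:.2f} GiB")
```

Here the gap is only a few hundredths of a GiB, so reserved memory is not much larger than allocated memory. Under that reading, setting `PYTORCH_CUDA_ALLOC_CONF=max_split_size_mb:<value>` would probably not help much: nearly all of the card is held by live tensors rather than lost to fragmentation.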

|

22 GB of VRAM is not enough.


On March 23, 2023, at 17:26, Adherer ***@***.***> wrote:


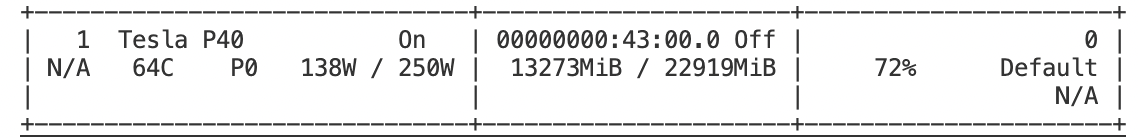

I ran it on a P40 with 22 GB of VRAM and hit the same problem, with context_length set to 32.


|

Tried it; it didn't help. |

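I looked at some other repos: with 8-bit quantization, fine-tuning fits in 16 GB of VRAM. There is currently no version here that supports multi-GPU fine-tuning. So, could the following two optimization directions be considered going forward: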

|

|

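I'm currently working on two directions:

1. Using torch.utils.checkpoint to reduce VRAM pressure.

2. Single-machine multi-GPU training.

I've spent a whole day on it without much progress so far 😂. Still working on it. |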
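
For readers unfamiliar with the first direction named in this thread, here is a minimal sketch of gradient checkpointing with `torch.utils.checkpoint`. It is not this repository's code: the toy `CheckpointedStack` module and its sizes are hypothetical stand-ins for a transformer's layer stack, and it assumes a PyTorch version that accepts the `use_reentrant` argument.

```python
import torch
from torch import nn
from torch.utils.checkpoint import checkpoint


class CheckpointedStack(nn.Module):
    """Toy layer stack (hypothetical) whose layers run under checkpointing."""

    def __init__(self, hidden_size: int = 64, num_layers: int = 4):
        super().__init__()
        self.layers = nn.ModuleList(
            [
                nn.Sequential(nn.Linear(hidden_size, hidden_size), nn.GELU())
                for _ in range(num_layers)
            ]
        )

    def forward(self, x: torch.Tensor) -> torch.Tensor:
        for layer in self.layers:
            # Intermediate activations inside `layer` are not kept; they are
            # recomputed during backward, trading extra compute for memory.
            x = checkpoint(layer, x, use_reentrant=False)
        return x


model = CheckpointedStack()
inputs = torch.randn(2, 64, requires_grad=True)
loss = model(inputs).sum()
loss.backward()  # each layer's forward pass is re-run here to obtain gradients
```

Checkpointing only reduces activation memory. It does not shrink the weights, gradients, or optimizer states, so on its own it may not be enough if those already fill the card.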

You can refer to this code: https://github.com/mymusise/ChatGLM-Tuning |

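I got that one running too, but I'm not sure whether my approach is wrong: the training results are poor, and the model seems to be talking nonsense. |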

|

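I'm not considering 8-bit for now, because it requires installing bitsandbytes. That package can't detect CUDA (on my machine), and I can't be bothered to sort it out.

I've looked at that repo; it's excellent.

But my goal is to do the training with the full Hugging Face stack,

and that repo's code is too heavily wrapped, which I don't like either.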


On March 24, 2023, at 00:31, Adherer ***@***.***> wrote:

I'm currently working on two directions:

1. Using torch.utils.checkpoint to reduce VRAM pressure.

2. Single-machine multi-GPU training.

I've spent a whole day on it without much progress so far 😂. Still working on it.

You can refer to this code: https://github.com/mymusise/ChatGLM-Tuning. I got it running and it is training now; when I have time tomorrow I'll adapt it for Chinese training.


|

|

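Quite a few people have already gotten it to run. I'm not sure what is going on on your side; the only requirement is enough VRAM. See the screenshot in #5 (comment): while running, VRAM usage is 24330 MB. |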

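I'm no fan of Windows. I just booted into Windows on my dual-boot machine, a dialog asked whether to create a GPT partition table, I clicked OK, and the LVM partition holding my training set was wiped, so I have to set everything up again. A good chance to retry, at least. |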

The issue is resolved: pruning the model is enough. Fine-tuning now works with 13 GB+ of VRAM: |


How do you prune it…?

torch.cuda.OutOfMemoryError: CUDA out of memory. Tried to allocate 64.00 MiB (GPU 0; 23.87 GiB total capacity; 23.08 GiB already allocated; 9.38 MiB free; 23.12 GiB reserved in total by PyTorch) If reserved memory is >> allocated memory try setting max_split_size_mb to avoid fragmentation. See documentation for Memory Management and PYTORCH_CUDA_ALLOC_CONF

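Changing the batch size doesn't seem to help. Watching the monitor, usage jumps from 12 GB to 24 GB in an instant and then the process dies.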
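
One possible reading of why batch size makes little difference: weights, gradients, and optimizer states scale with parameter count, not batch size. The back-of-the-envelope sketch below makes that concrete. Every number in it is an assumption rather than a measurement from this thread: a round 6 billion parameters, fp16 weights and gradients (2 bytes each), and an Adam-style optimizer keeping two fp32 states per parameter (8 bytes). The function name `training_memory_gib` is hypothetical.

```python
GIB = 1024 ** 3


def training_memory_gib(
    num_params: int,
    weight_bytes: int = 2,      # assumed fp16 weights
    grad_bytes: int = 2,        # assumed fp16 gradients
    optimizer_bytes: int = 8,   # assumed two fp32 Adam states per parameter
) -> dict:
    """Rough per-component memory in GiB for full fine-tuning, ignoring activations."""
    weights = num_params * weight_bytes / GIB
    gradients = num_params * grad_bytes / GIB
    optimizer = num_params * optimizer_bytes / GIB
    return {
        "weights": weights,
        "gradients": gradients,
        "optimizer": optimizer,
        "total": weights + gradients + optimizer,
    }


# Assumed model size: 6 billion parameters (a round figure, not from the thread).
estimate = training_memory_gib(6_000_000_000)
for name, gib in estimate.items():
    print(f"{name:>9}: {gib:6.1f} GiB")
```

Under these assumptions the weights alone take about 11 GiB, roughly matching the 12 GB plateau seen before the jump, and none of the terms depends on batch size. Reducing what is trained or stored, as with the quantization and pruning mentioned in this thread, would therefore matter more than batch_size.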
