This repository is for Differentiable Linearized ADMM (to appear in ICML 2019)

By Xingyu Xie, Jianlong Wu, Zhisheng Zhong, Guangcan Liu and Zhouchen Lin.

For more details or questions, feel free to contact:

Xingyu Xie: nuaaxing@gmail.com and Jianlong Wu: jlwu1992@pku.edu.cn

we propose Differentiable Linearized ADMM (D-LADMM) for solving the problems with linear constraints. Specifically, D-LADMM is a K-layer LADMM inspired deep neural network, which is obtained by firstly introducing some learnable weights in the classical Linearized ADMM algorithm and then generalizing the proximal operator to some learnable activation function. Notably, we mathematically prove that there exist a set of learnable parameters for D-LADMM to generate globally converged solutions, and we show that those desired parameters can be attained by training D-LADMM in a proper way.

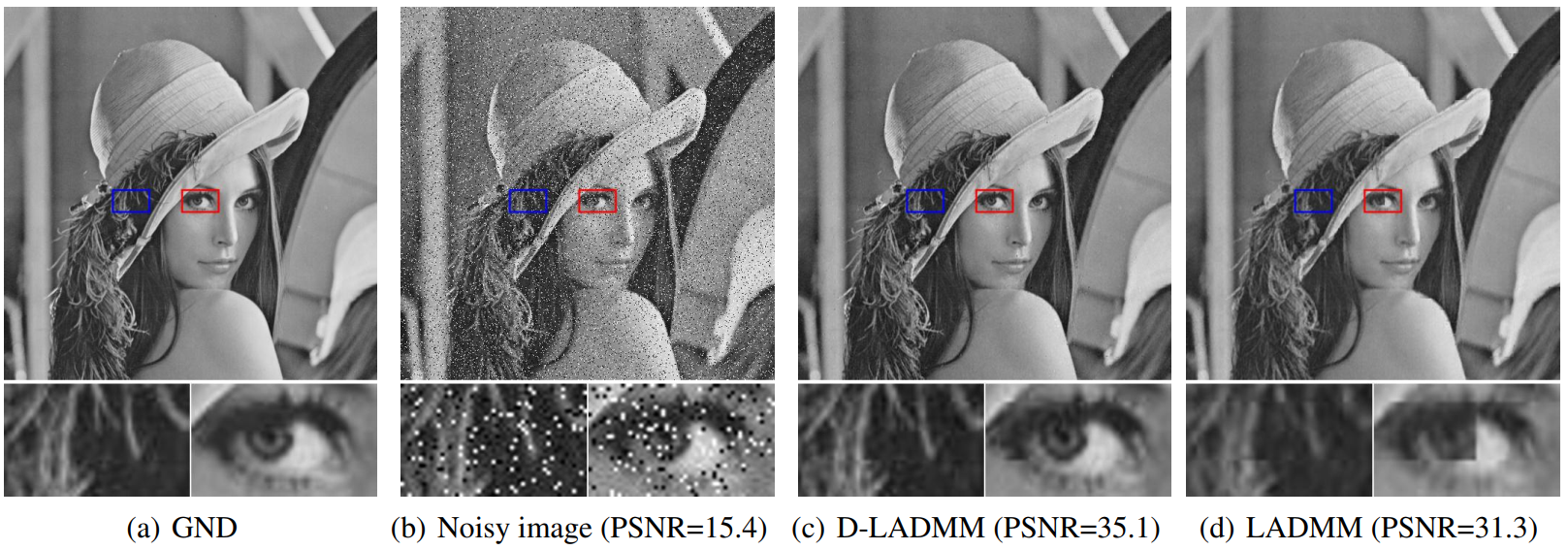

Here we give a toy example of D-LADMM for the Lena image denoise. An example to run this code:

python main_lena.pyThe testing result of the Lena image denoise. The whole process takes about 30 epochs.

| Model | Training Loss | PSNR |

|---|---|---|

| D-LADMM (d = 5) | 1.667 | 33.57 |

| D-LADMM (d = 10) | 1.663 | 35.44 |

| D-LADMM (d = 15) | 1.659 | 35.61 |

d means the depth (the number of layers) of the D-LADMM model.

Denoising results of the Lena image:

If you find this page useful, please cite our paper:

@inproceedings{xie2019differentiable,

title={Differentiable Linearized ADMM},

author={Xingyu Xie and Jianlong Wu and Zhisheng Zhong and Guangcan Liu and Zhouchen Lin},

booktitle={International Conference on Machine Learning},

pages={6902--6911},

year={2019}

}

All rights are reserved by the authors.