Detailed Guides Troubleshooting

Welcome to the list of known bugs, the tricky parts of the installation, and help with troubleshooting failures.

This guide is intended for people who already have problems and want to check whether we have encountered them too. You can also always contact us by email: first register as a Beta-tester, then send an email to the dev team. See http://beta.kave.io

If you're not trying to troubleshoot an install, go back to the main page.

If an install fails, the failure is reported in the Ambari web interface, where you can also find the stdout and stderr output produced during the failure. The following common problems may occur:

- No internet access: wget times out, or yum reports that no mirrors were found. Solution: temporarily give your nodes internet access.

- Package conflicts during yum install. If you do not start from a blank CentOS image (for example, if some other version of nagios, ganglia or perl is already installed), the installation may fail with errors about inconsistent packages. You must start from a cleaner image, or try uninstalling the conflicting components with yum erase, but this can be dangerous. Discuss it with a qualified Linux system administrator.
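For the package-conflict case, a quick way to see whether a node already carries its own versions of packages such as those mentioned above is to query rpm before installing. This is a minimal sketch assuming an RPM-based image such as CentOS; preinstalled_packages is a hypothetical helper name and the package list is only an example.

```shell
#!/usr/bin/env bash
# Sketch: print the installed name-version-release of each named package, if any.
# preinstalled_packages is a hypothetical helper; the package names are examples.
preinstalled_packages() {
  local pkg out
  for pkg in "$@"; do
    # rpm -q exits 0 only if the package is installed
    if out=$(rpm -q "$pkg" 2>/dev/null); then
      echo "$out"
    fi
  done
}

# Usage, on a node before installing:
#   preinstalled_packages nagios ganglia perl
```

Anything it prints is a candidate for a conflict and worth reviewing with your system administrator before you erase it.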

If iptables and SELinux are still running after a restart, they conflict with FreeIPA's control of users. The symptom is that no FreeIPA users exist on the system any longer after a reboot.

To fix this, you need to:

- first, change the SELinux/iptables rules, or permanently disable both

- then, do a complicated re-installation of the FreeIPA client. This is not simple and is described below.

The FreeIPA client install is protected with certain lock files to ensure restarting the service does not fail or attempt to download anything.

In AmbariKave 2.1-Beta:

- ssh to the Ambari node of your cluster as the root user.

- use pdsh to remove the ipa_client_install_lock_file from all machines

pdsh -g <clustername> rm -f ipa_client_install_lock_file

- use pdcp to copy the robot-admin-password file to all machines

pdcp -g <clustername> robot-admin-password robot-admin-password

- use pdsh to uninstall the client from all machines

pdsh -g <clustername> ipa-client-install --uninstall
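The three steps above can be wrapped in a small function so they are always run together and in order. This is a sketch, not part of AmbariKave: reset_ipa_clients is a hypothetical name, and, like the original commands, it uses relative paths, so it assumes robot-admin-password is in the current directory on the Ambari node. Note that pdsh reports success even when a remote command fails (unless run with -S), so still read its output.

```shell
#!/usr/bin/env bash
# Sketch: run the three FreeIPA client reset steps for one pdsh group.
# reset_ipa_clients is a hypothetical helper name, not part of AmbariKave.
reset_ipa_clients() {
  local cluster="$1"
  if [ -z "$cluster" ]; then
    echo "usage: reset_ipa_clients <clustername>" >&2
    return 2
  fi
  if [ ! -f robot-admin-password ]; then
    echo "robot-admin-password not found in $(pwd)" >&2
    return 1
  fi
  # 1. remove the lock file that stops the client install from rerunning
  pdsh -g "$cluster" rm -f ipa_client_install_lock_file || return 1
  # 2. distribute the robot-admin-password file
  pdcp -g "$cluster" robot-admin-password robot-admin-password || return 1
  # 3. uninstall the FreeIPA client on every machine in the group
  pdsh -g "$cluster" ipa-client-install --uninstall
}

# Usage, as root on the Ambari node:
#   reset_ipa_clients <clustername>
```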

Then you have three options to redo the installation: (1) a restart through the web interface, (2) the restart_all_services script, or (3) the Ambari API.

- Navigate through the Ambari web interface to the FreeIPA service and call refresh-client-configs on the affected nodes.

- Download the restart all services script and run it (restart_all_services.sh someclustername)

- Through the Ambari API, you will need to issue DELETE and PUT commands for the FREEIPA_CLIENT on all affected nodes.
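The Ambari API option could look roughly like the sketch below. The text mentions DELETE and PUT; in our understanding of the Ambari v1 REST API, a deleted host component must first be re-created with a POST before a PUT can move it to the INSTALLED state, so the sketch includes that step. AMBARI_URL, AMBARI_AUTH (Ambari's default admin:admin) and reinstall_freeipa_client are placeholders or hypothetical names; adjust them to your installation.

```shell
#!/usr/bin/env bash
# Sketch: reinstall FREEIPA_CLIENT on one host through the Ambari v1 REST API.
# AMBARI_URL and AMBARI_AUTH are placeholders; adjust to your installation.
AMBARI_URL="${AMBARI_URL:-http://localhost:8080}"
AMBARI_AUTH="${AMBARI_AUTH:-admin:admin}"

reinstall_freeipa_client() {
  local cluster="$1" host="$2"
  local url="$AMBARI_URL/api/v1/clusters/$cluster/hosts/$host/host_components/FREEIPA_CLIENT"
  # Ambari rejects modifying requests without the X-Requested-By header;
  # -f makes curl fail on HTTP error responses
  local curl_opts=(-sS -f -u "$AMBARI_AUTH" -H "X-Requested-By: ambari")
  # remove the existing (broken) client component from the host
  curl "${curl_opts[@]}" -X DELETE "$url" || return 1
  # re-create the component on the host
  curl "${curl_opts[@]}" -X POST "$url" || return 1
  # ask Ambari to install it, which reruns the client install scripts
  curl "${curl_opts[@]}" -X PUT -d '{"HostRoles": {"state": "INSTALLED"}}' "$url"
}

# Usage, once per affected node:
#   reinstall_freeipa_client <clustername> <hostname>
```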

If the FreeIPA users are missing on only a single node, the procedure is very similar to the one above, but no pdsh commands are necessary: use scp and ssh on the single affected machine instead.

We now see two different classes of errors with keytabs: the first occurs if keytabs are not generated correctly or have expired; the second occurs if Kerberos tickets cannot be read properly by Java.

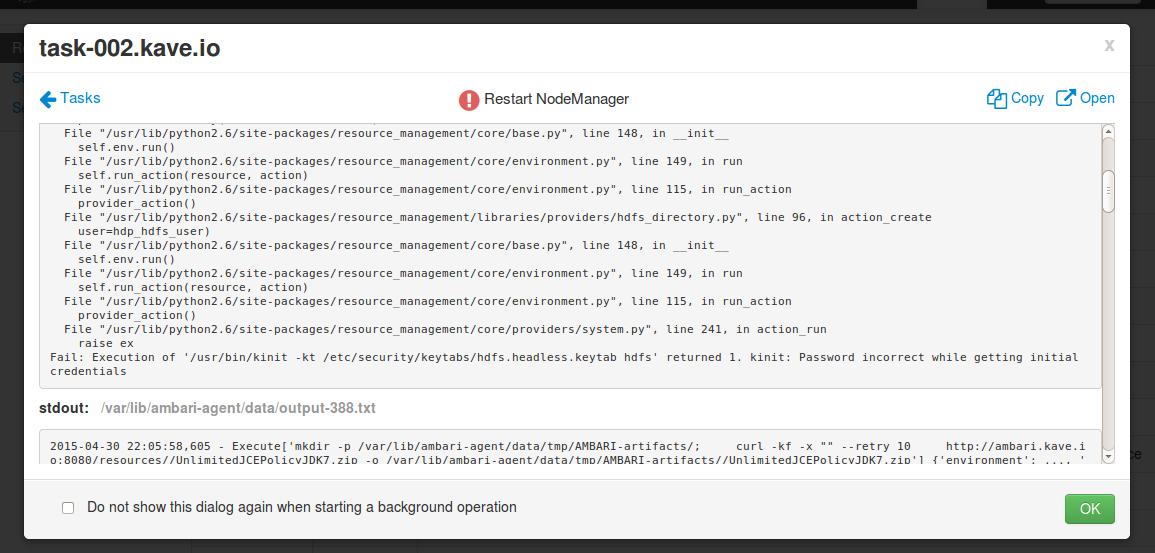

If your local keytabs were not generated correctly during the FreeIPA install/Kerberization, you will see errors such as:

Password incorrect while getting initial credentials

You can test this yourself by running the same keytab-based kinit command that failed, on the same machine and as the same user.

e.g.:

ssh SOMEHOST "su hdfs -c '/usr/bin/kinit -kt /etc/security/keytabs/hdfs.headless.keytab hdfs'"

The error can be resolved by throwing away the problematic keytab on the given machine and regenerating it. This procedure looks something like this:

kdestroy

kinit admin

# e.g. for a headless hdfs user on all datanodes and namenodes

ls -l /etc/security/keytabs/hdfs.headless.keytab # note the current owner and permissions

rm /etc/security/keytabs/hdfs.headless.keytab

ipa-getkeytab -s ambari.kave.io -p hdfs -k /etc/security/keytabs/hdfs.headless.keytab

chmod 0440 /etc/security/keytabs/hdfs.headless.keytab

chown hdfs:hadoop /etc/security/keytabs/hdfs.headless.keytab

The exact keytab and principal are given in the error message you saw. The chmod/chown settings must be copied from the previous file, or taken from a kerberos.csv previously downloaded from Ambari.
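The manual steps above can be scripted so that the old owner and mode are read from the existing keytab before it is deleted, rather than copied by hand. This is a sketch assuming GNU stat, a valid admin ticket (kinit admin) and that the keytab to replace still exists; regen_keytab is a hypothetical name and IPA_SERVER defaults to the ambari.kave.io host from the example. Be aware that ipa-getkeytab generates new keys for the principal, which invalidates every other keytab for that principal: for a headless principal shared by several nodes, generate the keytab once and copy that file to the other nodes rather than regenerating it on each one.

```shell
#!/usr/bin/env bash
# Sketch: regenerate one keytab while keeping its previous owner and mode.
# Assumes root and an existing admin ticket; regen_keytab is hypothetical.
IPA_SERVER="${IPA_SERVER:-ambari.kave.io}"

regen_keytab() {
  local principal="$1" keytab="$2" owner mode
  if [ ! -f "$keytab" ]; then
    echo "no existing keytab at $keytab; set owner/mode from kerberos.csv by hand" >&2
    return 1
  fi
  # remember the old security settings (e.g. hdfs:hadoop and 440)
  owner=$(stat -c '%U:%G' "$keytab") || return 1
  mode=$(stat -c '%a' "$keytab") || return 1
  rm -f "$keytab"
  # fetch freshly generated keys for the principal into a new keytab file
  ipa-getkeytab -s "$IPA_SERVER" -p "$principal" -k "$keytab" || return 1
  chown "$owner" "$keytab" && chmod "$mode" "$keytab"
}

# Usage (principal and path come from the error message):
#   regen_keytab hdfs /etc/security/keytabs/hdfs.headless.keytab
```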

If you don't chown the file correctly, you will see something like:

Generic preauthentication failure while getting initial credentials

If too many attempts to restart services have been made with the wrong keytab, the principal may be locked out of Kerberos. In this case you will keep seeing:

Clients credentials have been revoked while getting initial credentials

You will need to unlock the principal and then regenerate its keytab. Try the following on the FreeIPA node:

kadmin -p admin

kadmin: modprinc -unlock hdfs
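The interactive kadmin session above can also be run as single commands with kadmin's -q option, which makes it easy to check afterwards that the failed-attempts counter has been reset. A minimal sketch; unlock_principal is a hypothetical helper, and each kadmin call still prompts for the admin password.

```shell
#!/usr/bin/env bash
# Sketch: unlock a Kerberos principal with single kadmin queries (-q),
# then show its failed-password counter. unlock_principal is hypothetical.
unlock_principal() {
  local principal="$1"
  if [ -z "$principal" ]; then
    echo "usage: unlock_principal <principal>" >&2
    return 2
  fi
  kadmin -p admin -q "modprinc -unlock $principal" || return 1
  # getprinc output includes a "Failed password attempts" line
  kadmin -p admin -q "getprinc $principal" | grep -i 'failed password attempts'
}

# Usage, on the FreeIPA node:
#   unlock_principal hdfs
```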

If this does not work because the admin user is not allowed to modify Kerberos principals, you can grant it permission in the /var/kerberos/krb5kdc/kadm5.acl file, e.g.:

more /var/kerberos/krb5kdc/kadm5.acl

*/admin@KAVE.IO *

admin@KAVE.IO *

admin@KAVE.IO a *

admin@KAVE.IO i *

admin@KAVE.IO x *

admin@KAVE.IO m *

...

service ipa restart

During the Kerberization wizard, Ambari asks you to confirm "I confirm that I have installed JCE across every node in the cluster". This is a very important step. If the latest Java Cryptography Extension (JCE) policy files are not installed in the Java version used by the service you are running, the Kerberos ticket will not be readable, among other issues. You will see the following error:

safemode: Failed on local exception: java.io.IOException: javax.security.sasl.SaslException: GSS initiate failed [Caused by GSSException: No valid credentials provided (Mechanism level: Failed to find any Kerberos tgt)]; Host Details :

The solution is to install JCE correctly into every Java version in use. FreeIPA client installation should take care of this, but if you encounter problems, a manual re-installation may be required.
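To check whether a given Java installation already has unlimited-strength JCE, you can ask it for the maximum AES key length it permits: with the limited default policy this is 128, with the unlimited policy files it is much larger. A sketch, assuming a JDK that ships jrunscript (as JDK 7 and 8 do); jce_unlimited is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Sketch: succeed (exit 0) if the Java installation at $1 allows AES keys > 128 bits.
# Returns 2 if jrunscript is missing or fails. jce_unlimited is hypothetical.
jce_unlimited() {
  local java_dir="$1" max
  max=$("$java_dir/bin/jrunscript" -e 'print(javax.crypto.Cipher.getMaxAllowedKeyLength("AES"))') || return 2
  # the limited policy caps AES at 128 bits
  [ "$max" -gt 128 ]
}

# Usage:
#   for j in /usr/lib/jvm/*/; do
#     if jce_unlimited "$j"; then echo "$j: unlimited"; else echo "$j: limited or unknown"; fi
#   done
```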

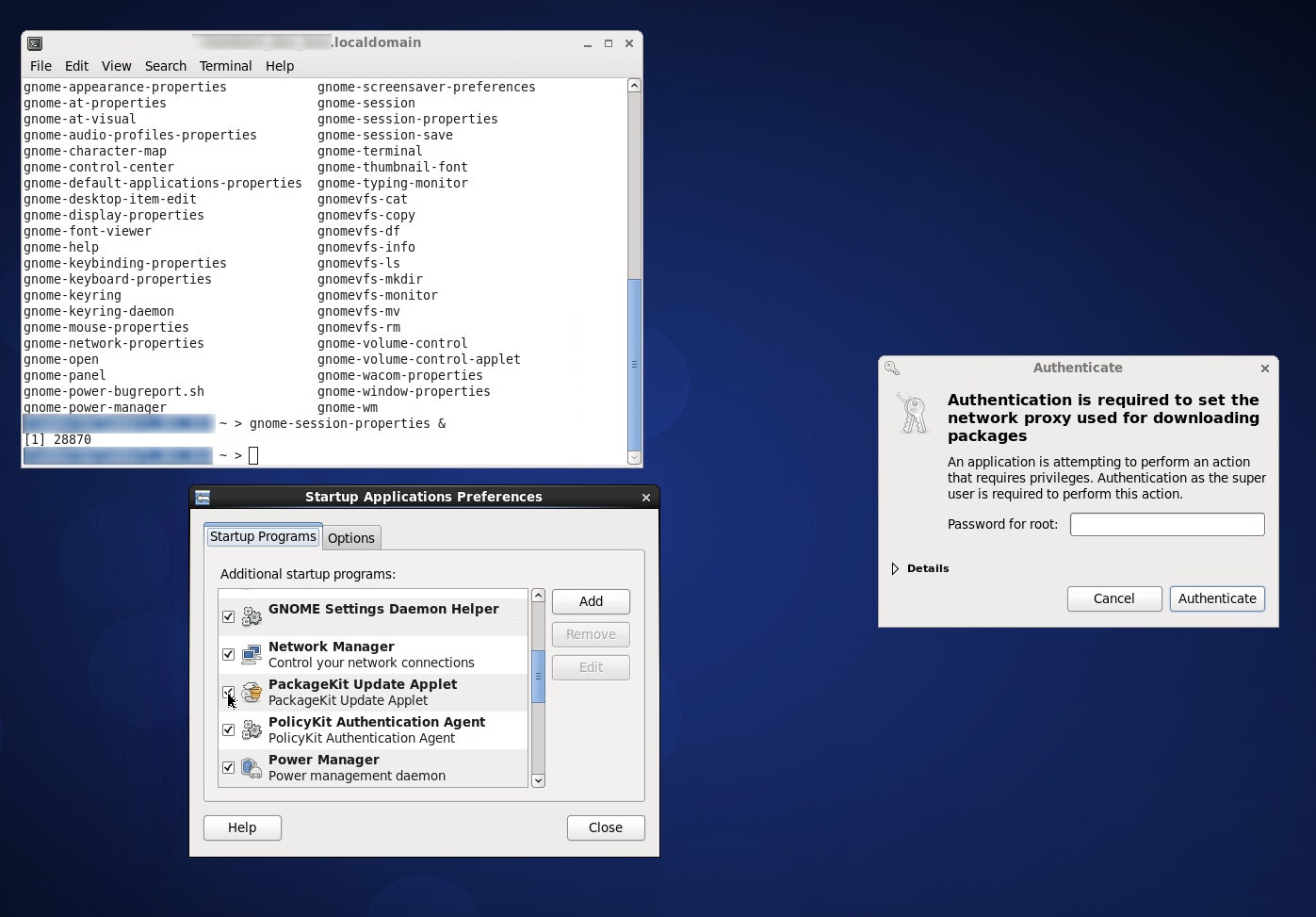

"Authorisation is required to set the network proxy used for downloading packages"

The PackageKit of gnome is trying to check for updates periodically, but this doesn't make sense for most users. Turn off the package kit in your gnome-session-properties as shown:

After you add a new user in FreeIPA, it often takes time for the user caches on all nodes to be updated. For example, new sudo rules can take up to an hour to propagate across the cluster, and although new users may already be visible through LDAP and even be able to log in, they may not appear in TWiki, Hue or GitLab for a long time.

Caching by FreeIPA is done in several places, including on the client nodes, where LDAP group lookups and logins may be cached separately.

You can invalidate the caches, and switch off credential caching, if you need quicker feedback while testing. Go to the node in question as a sudoer and run the following:

sss_cache -U

sss_cache -E

sed -i 's/cache_credentials = True/cache_credentials = False/g' /etc/sssd/sssd.conf

service sssd restart

Ambari agents keep a cached copy of service control scripts in the /var/lib/ambari-agent/cache directory. If you edit the master copy of a script on the server (for example, to apply a hotfix or patch), the agent cache is not updated except on a fresh install of the service. You can force an update by overwriting the cache manually on the affected machine.

If you have pdsh installed on your Ambari node to help manage the cluster (it is added automatically by deploy_from_blueprint.py), you can overwrite the cache manually with a 'group' pdcp command issued from the root account on the Ambari node.

Replace/fill entire cache:

pdcp -R ssh -g <name_of_group_or_cluster> -r /var/lib/ambari-server/resources/stacks /var/lib/ambari-agent/cache

Where <name_of_group_or_cluster> is a predefined pdsh group. deploy_from_blueprint.py creates some predefined groups for you, named after the cluster and the host_group definitions.

Replace only one service:

pdcp -R ssh -g <name_of_group_or_cluster> -r /var/lib/ambari-server/resources/stacks/<stack>/services/<service> /var/lib/ambari-agent/cache/stacks/<stack>/services/
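A small wrapper can guard the single-service copy against typos in the stack or service name, which pdcp would otherwise only report per node. A sketch that assumes, as with the full copy above, that the agent cache mirrors the server's stacks directory under /var/lib/ambari-agent/cache/stacks; push_service, STACKS_DIR and AGENT_CACHE are hypothetical names.

```shell
#!/usr/bin/env bash
# Sketch: push one service's scripts from the server's stack definitions
# into the agent caches of a pdsh group. push_service is hypothetical.
STACKS_DIR="${STACKS_DIR:-/var/lib/ambari-server/resources/stacks}"
AGENT_CACHE="${AGENT_CACHE:-/var/lib/ambari-agent/cache/stacks}"

push_service() {
  local group="$1" stack="$2" service="$3"
  local src="$STACKS_DIR/$stack/services/$service"
  # refuse to run if the source directory does not exist on the server
  if [ ! -d "$src" ]; then
    echo "no such service directory: $src" >&2
    return 1
  fi
  pdcp -R ssh -g "$group" -r "$src" "$AGENT_CACHE/$stack/services/"
}

# Usage (group, stack and service names are placeholders):
#   push_service <name_of_group_or_cluster> <stack> <service>
```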

If plots (for example from mpld3 or vincent) do not appear in an IPython notebook, this is usually because your local browser is blocking them:

- it is not allowed to run unsafe/unverified scripts (look for the tell-tale icon in the browser toolbar)

- it is not allowed to display mixed content (if the notebook is served over https, look for the tell-tale icon in the browser toolbar)

In the first case, you can simply permit the scripts to run by clicking on the icon and choosing the appropriate option.

In the second case, there are two solutions. The best is to restart/modify mpld3 with the options that switch its JavaScript part to https as well. The other is to allow mixed content in your browser for IPython notebooks.

http://stackoverflow.com/questions/21089935/unable-plot-with-vincent-in-ipython https://mpld3.github.io/modules/API.html https://mpld3.github.io/modules/API.html#mpld3.enable_notebook

If /var/log keeps filling up with messages containing:

Dec 23 18:51:02 node-001 pulseaudio[23475]: main.c: Module load failed.

Dec 23 18:51:02 node-001 pulseaudio[23475]: main.c: Failed to initialize daemon.

Dec 23 18:51:02 node-001 pulseaudio[23473]: main.c: Daemon startup failed.

This is caused by the pulseaudio daemon attempting to connect to a non-existent sound card. You can reconfigure it, or even turn it off, using the instructions given here: http://www.linuxplanet.com/linuxplanet/tutorials/7130/2

You can also turn off pulseaudio in the gnome-session-properties dialogue, as described above for the PackageKit problem.

Once both steps are done, find any remaining pulseaudio processes with ps faux | grep pulse and kill them.

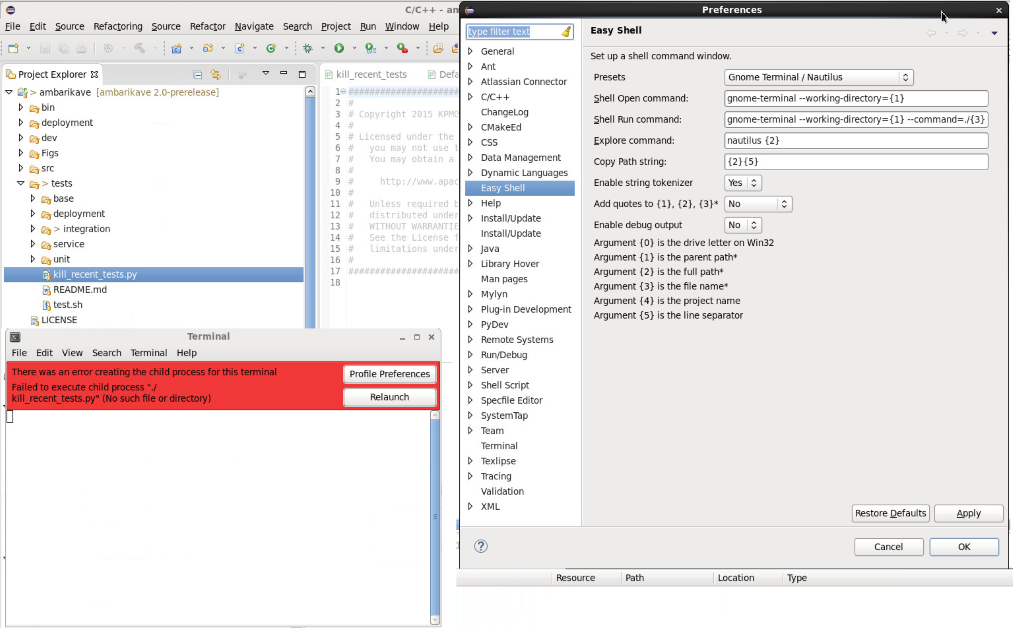

If, when trying to run a script with EasyShell in Eclipse, you see an error about "Creating the child process", this is caused by a misconfiguration in the EasyShell default preferences.

Go to the preferences (Window->Preferences), find EasyShell, remove the double quotes around the placeholders {2} {1} {3}, and click Apply/OK. It will then work as expected the next time.

To disable SELinux and iptables permanently, you can do:

echo 0 >/selinux/enforce

service iptables stop

sed -i 's/SELINUX=enforcing/SELINUX=permissive/g' /etc/selinux/config

chkconfig iptables off
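After running the commands above, you can check which SELinux mode will apply on the next boot (the current runtime state is shown by getenforce). A minimal sketch; selinux_boot_mode is a hypothetical helper that only reads the uncommented SELINUX= line of the config file.

```shell
#!/usr/bin/env bash
# Sketch: print the SELinux mode configured for the next boot.
# selinux_boot_mode is a hypothetical helper name.
selinux_boot_mode() {
  local conf="${1:-/etc/selinux/config}"
  # match SELINUX=<mode> but not comments or the SELINUXTYPE= line
  sed -n 's/^[[:space:]]*SELINUX=\([a-z]*\).*/\1/p' "$conf" | tail -n 1
}

# Usage:
#   getenforce                  # runtime mode
#   selinux_boot_mode           # mode after reboot
#   chkconfig --list iptables   # iptables should be off in all runlevels
```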

If ZooKeeper fails to start with an error such as:

resource_management.core.exceptions.Fail: Execution of 'source /usr/hdp/current/zookeeper-server/conf/zookeeper-env.sh ; env ZOOCFGDIR=/usr/hdp/current/zookeeper-server/conf ZOOCFG=zoo.cfg /usr/hdp/current/zookeeper-server/bin/zkServer.sh start' returned 127. env: /usr/hdp/current/zookeeper-server/bin/zkServer.sh: No such file or directory

The HDP 2.3 installation on Ambari 2.2.1 sometimes creates the softlinks incorrectly, or in the wrong order, so that /usr/hdp/current ends up with the wrong content.

The correct content should look like:

ls -l /usr/hdp/current

lrwxrwxrwx. 1 root root 31 Mar 3 16:28 zookeeper-client -> /usr/hdp/2.3.0.0-2557/zookeeper

lrwxrwxrwx. 1 root root 31 Mar 3 16:55 zookeeper-server -> /usr/hdp/2.3.0.0-2557/zookeeper

If one of the softlinks does not exist, or if HDP has accidentally created a real directory instead of a softlink, copy all the contents of this directory to the correct place in /usr/hdp/2.3.0.0-2557/zookeeper, move the directory out of the way (otherwise ln -s creates the link inside it), and then recreate the correct softlink with:

ln -s /usr/hdp/2.3.0.0-2557/zookeeper /usr/hdp/current/zookeeper-server
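The repair above can be sketched as a function that handles all three cases: a correct softlink, a missing entry, and a real directory. It moves the directory aside rather than deleting it, so nothing is lost. This is an illustration only; fix_current_link is a hypothetical name, it should be run as root with the affected service stopped, and the paths in the usage line are the ones from the text.

```shell
#!/usr/bin/env bash
# Sketch: make LINK a softlink to TARGET, rescuing the contents of LINK first
# if HDP created a real directory there. fix_current_link is hypothetical.
fix_current_link() {
  local link="$1" target="$2"
  if [ -L "$link" ]; then
    # already a softlink: succeed only if it points at the expected target
    [ "$(readlink "$link")" = "$target" ] && return 0
    echo "$link points to $(readlink "$link"), expected $target" >&2
    return 1
  fi
  mkdir -p "$target" || return 1
  if [ -d "$link" ]; then
    # copy the contents (including hidden files) into the versioned directory
    cp -a "$link/." "$target/" || return 1
    # move the directory aside so ln -s does not create the link inside it
    mv "$link" "$link.bak.$$" || return 1
  fi
  ln -s "$target" "$link"
}

# Usage:
#   fix_current_link /usr/hdp/current/zookeeper-server /usr/hdp/2.3.0.0-2557/zookeeper
```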

In AmbariKAVE 2.1-Beta we attempted to move all services to Java 1.8. This was not successful for the JBOSS services, due to compatibility issues and discontinued support for JBOSS. While we migrate to WildFly/Docker combinations as a long-term solution, the following work-around is possible.

- To install a different Java version (here 1.7):

yum -y install java-1.7.0-openjdk java-1.7.0-openjdk-devel

- Recommended: To configure this java version as the system default:

jdir=`ls -dt /usr/lib/jvm/java-1.7*-openjdk* | head -n 1`

alternatives --install /usr/bin/java java ${jdir}/jre/bin/java 20000

alternatives --install /usr/bin/javac javac ${jdir}/bin/javac 20000

alternatives --install /usr/bin/javaws javaws ${jdir}/jre/bin/javaws 20000

alternatives --set java ${jdir}/jre/bin/java

alternatives --set javac ${jdir}/bin/javac

alternatives --set javaws ${jdir}/jre/bin/javaws

- Alternative, if you do not want to change the system default: set JAVA_HOME for JBOSS manually. Find the line exporting JAVA_HOME in /etc/init.d/jboss and change it to:

vi /etc/init.d/jboss

...

export JAVA_HOME=`ls -dt /usr/lib/jvm/java-1.7*-openjdk* | head -n 1`

- then restart jboss service

service jboss stop

service jboss start

A similar procedure can fix other Java issues where a different system version of Java is required.

If you are installing away from a fast network or outside the US/EU region, you are likely to experience timeouts from slow yum mirrors. You can overcome this by creating local yum mirrors, as described elsewhere, or by making yum more robust.

Installing the fastestmirror package will slow down the first installation a lot, but it then caches the mirror pings and so speeds up all subsequent installations. In /etc/yum.conf you can add ip_resolve=4 to force IPv4 usage, and retries=0 to make yum retry downloads indefinitely.
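The yum.conf change can be applied idempotently on each node. A sketch, assuming GNU sed and a yum.conf with a [main] section; tune_yum_conf is a hypothetical name, and options that are already set (to any value) are left untouched for you to review. On CentOS the fastestmirror plugin itself is, to our knowledge, packaged as yum-plugin-fastestmirror.

```shell
#!/usr/bin/env bash
# Sketch: add ip_resolve=4 and retries=0 to the [main] section of yum.conf,
# skipping options that are already set. tune_yum_conf is hypothetical.
tune_yum_conf() {
  local conf="${1:-/etc/yum.conf}" opt
  for opt in ip_resolve=4 retries=0; do
    # only add the option if no line already sets it
    if ! grep -q "^${opt%%=*}=" "$conf"; then
      # GNU sed: append the option on the line after [main]
      sed -i "/^\[main\]/a $opt" "$conf"
    fi
  done
}

# Usage, as root:
#   cp /etc/yum.conf /etc/yum.conf.orig
#   tune_yum_conf
```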
