Neural Networks: An Introduction to TensorFlow

Word Count: 941

The advent of neural networks has transformed the way numerous data-driven industries process and analyze data. TensorFlow, an open-source machine learning framework created by Google, enables programmers to define and train these networks for business and personal use. We will discuss how to use TensorFlow to create and test simple networks with labeled data, to show the broader applications of the technology.

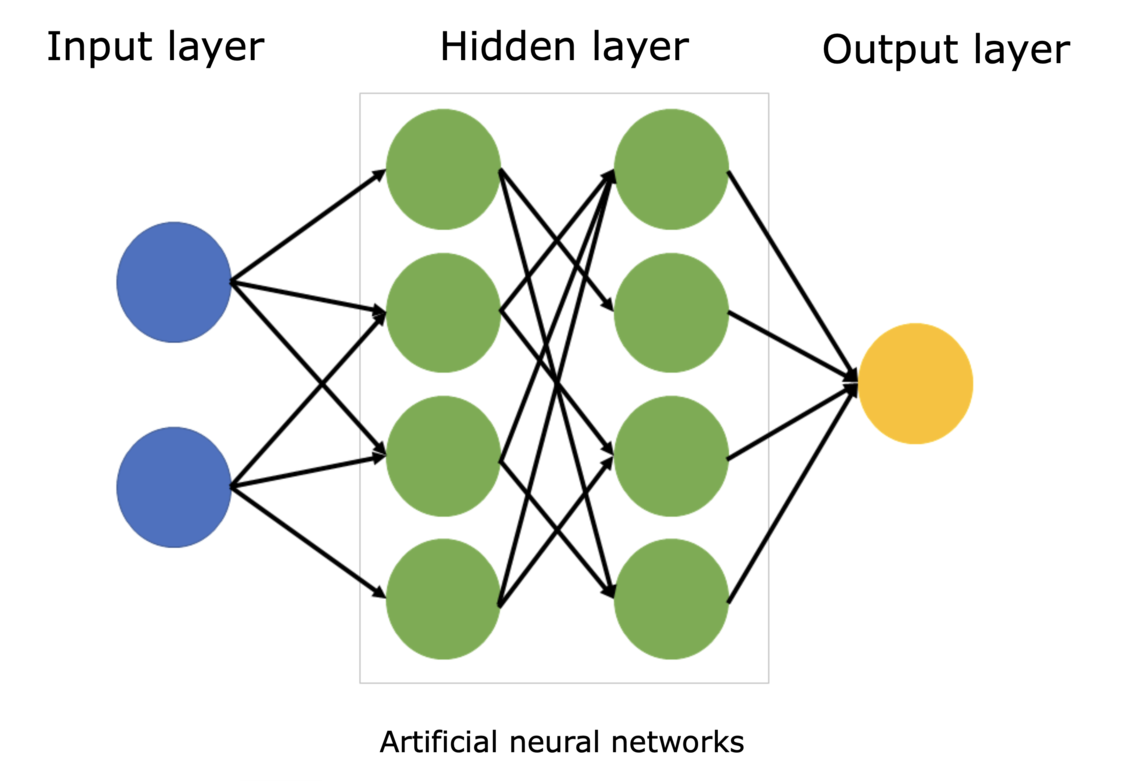

The central inspiration behind neural networks lies in the intricacies of the human brain. The brain consists of a highly connected network of cells called neurons, which communicate by passing electrical signals to one another across their connections. Depending on the combined signals it receives, a neuron either fires an action potential, which it sends on to other neurons, or remains dormant. Similarly, a neural network passes values through artificial neurons (perceptrons) arranged in layers. Each perceptron combines its inputs, typically as a weighted sum plus a bias, and depending on the result forwards a response to the perceptrons in the next layer. As the network is trained, these weights are adjusted so that the layers learn to detect useful features in the input data and pass them on for further analysis, which spares developers from hand-engineering those features. Each layer can also be configured differently, so that the network as a whole learns the features it needs for classification.
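
To make the analogy concrete, here is a minimal NumPy sketch of a single perceptron and of a layer of perceptrons. The weights and biases are hand-picked for illustration (an assumption; in a real network they are learned during training), and a step activation plays the role of the "fire or stay dormant" decision.

```python
import numpy as np

def perceptron(inputs, weights, bias):
    """Fire (1) if the weighted sum of the inputs plus the bias is positive, else stay dormant (0)."""
    z = np.dot(inputs, weights) + bias  # combined "signal" reaching the neuron
    return 1 if z > 0 else 0

def layer(inputs, weights, biases):
    """A layer of perceptrons: column j of `weights` holds perceptron j's weights."""
    z = inputs @ weights + biases       # one weighted sum per perceptron
    return (z > 0).astype(int)          # step activation applied element-wise

# Hand-picked weights: this single perceptron behaves like a logical AND gate.
and_weights, and_bias = np.array([1.0, 1.0]), -1.5
for a in (0, 1):
    for b in (0, 1):
        print(a, b, "->", perceptron(np.array([a, b]), and_weights, and_bias))

# A two-perceptron layer (AND and OR side by side); its outputs would feed the next layer.
W = np.array([[1.0, 1.0],
              [1.0, 1.0]])
biases = np.array([-1.5, -0.5])
print(layer(np.array([1.0, 0.0]), W, biases))  # AND -> 0, OR -> 1
```

Stacking several such layers, with each layer's outputs used as the next layer's inputs, gives the layered structure described above.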

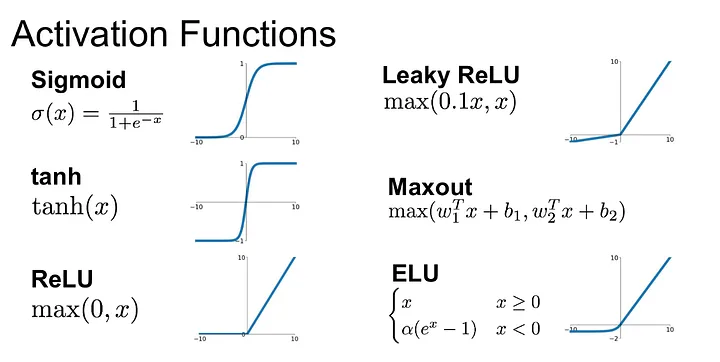

Another key component of most neural networks is the activation function. Activation functions can be thought of as the decision makers in a network that decide whether a given neuron should "fire" or not, similar to the action potential scenario. Well-known functions such as the step, sigmoid, and ReLU (rectified linear unit) functions are applied to a neuron's combined input to produce its output, and the choice can change the overall behavior of a network. For example, with ReLU, a neuron outputs its input unchanged when the input is positive and outputs zero otherwise. Because these functions are nonlinear, they allow the network to learn patterns that a purely linear model could not. Many modern networks use ReLU in their hidden layers because its gradient is 1 for any positive input, which makes it far less prone to the "vanishing gradient" problem of functions such as sigmoid, whose gradient is at most 0.25 and approaches zero for large positive or negative inputs.
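
The three activation functions named above can be written in a few lines of NumPy. This sketch also computes the sigmoid and ReLU derivatives, which shows numerically why sigmoid gradients vanish for large inputs while ReLU's gradient stays at 1 for any positive input. In TensorFlow you would normally pass a name such as `activation="relu"` or `activation="sigmoid"` to a layer rather than writing these functions yourself.

```python
import numpy as np

def step(x):
    """Outputs 1 where x > 0 and 0 elsewhere (the classic perceptron threshold)."""
    return np.where(x > 0, 1.0, 0.0)

def sigmoid(x):
    """Squashes any input into the range (0, 1)."""
    return 1.0 / (1.0 + np.exp(-x))

def relu(x):
    """Rectified linear unit: passes positive inputs through unchanged, zeroes the rest."""
    return np.maximum(0.0, x)

def sigmoid_grad(x):
    s = sigmoid(x)
    return s * (1.0 - s)  # at most 0.25 (at x = 0), close to 0 for large |x|

def relu_grad(x):
    return np.where(x > 0, 1.0, 0.0)  # 1 for positive inputs, 0 otherwise (0 chosen at x = 0)

x = np.array([-10.0, -1.0, 0.0, 1.0, 10.0])
for name, fn in [("step", step), ("sigmoid", sigmoid), ("relu", relu),
                 ("sigmoid'", sigmoid_grad), ("relu'", relu_grad)]:
    print(f"{name:>9}: {np.round(fn(x), 5)}")
```

During backpropagation these derivatives are multiplied together layer by layer, so repeated factors well below 0.25 (sigmoid) shrink the gradient quickly, whereas factors of 1 (ReLU on positive inputs) do not.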

To build a neural network in practice, we must first install the TensorFlow and NumPy Python packages, for example with `pip install tensorflow numpy`. Next, we need labeled data that can be loaded and processed in Python. This data can be preprocessed with augmentations or filters (for example, scaling pixel values or randomly flipping images) that can improve the model's performance. The data must also be split into training, validation, and test sets. The training set is used to teach the model, the validation set is used to tune the model's settings and detect overfitting, and the test set evaluates the model's performance on unseen data. TensorFlow provides utilities for these data preparation steps, which are described in its documentation.
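
A train/validation/test split can be sketched with NumPy alone. The 70/15/15 proportions, the helper name `train_val_test_split`, and the random placeholder images are assumptions for illustration; the pixel-scaling line stands in for the kind of preprocessing mentioned above.

```python
import numpy as np

def train_val_test_split(x, y, val_frac=0.15, test_frac=0.15, seed=0):
    """Hypothetical helper: shuffle once, then carve out test, validation, and training subsets."""
    rng = np.random.default_rng(seed)
    idx = rng.permutation(len(x))        # shuffle so every split is representative
    n_test = round(len(x) * test_frac)
    n_val = round(len(x) * val_frac)
    test_idx = idx[:n_test]
    val_idx = idx[n_test:n_test + n_val]
    train_idx = idx[n_test + n_val:]     # everything left over is used for training
    return ((x[train_idx], y[train_idx]),
            (x[val_idx], y[val_idx]),
            (x[test_idx], y[test_idx]))

# Hypothetical labeled data: 100 grayscale 28x28 images with labels 0-9.
rng = np.random.default_rng(1)
raw_images = rng.integers(0, 256, size=(100, 28, 28)).astype(np.uint8)
labels = np.arange(100) % 10

# Simple preprocessing: scale pixel values from 0-255 to 0-1.
images = raw_images.astype("float32") / 255.0

(train_x, train_y), (val_x, val_y), (test_x, test_y) = train_val_test_split(images, labels)
print(len(train_x), len(val_x), len(test_x))  # 70 15 15
```

Shuffling before splitting matters when the data is stored in some order (for example, sorted by label); otherwise the test set might contain only a few classes.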

Now that the data is ready, we can construct a network suited to it. Consider a simple feed-forward network for image classification. The input layer has one neuron for each pixel in the image. The hidden layers transform the values they receive from the previous layer, learning specific features and forwarding them to the next layer. Finally, the output layer produces a value between 0 and 1 for each possible label (commonly using a softmax activation, so that the values sum to 1), and the model predicts the label with the highest value. The number of neurons in each layer, the choice of activation functions, and the network's depth are critical design decisions that affect how well the network can learn from the data. TensorFlow offers several ways to define a network; for a simple stack of layers like this one, the `Sequential` class (`tf.keras.Sequential`) is typically used to link the layers together.
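
Here is a minimal `tf.keras.Sequential` sketch of the feed-forward classifier described above. The input size (28x28 grayscale images), the ten output classes, and the hidden-layer widths of 128 and 64 are assumptions chosen for illustration, not recommendations. The final `softmax` layer produces one value between 0 and 1 per label, and `argmax` picks the most likely one.

```python
import numpy as np
import tensorflow as tf

NUM_CLASSES = 10  # assumption: ten possible labels

model = tf.keras.Sequential([
    tf.keras.Input(shape=(28, 28)),                 # one input value per pixel
    tf.keras.layers.Flatten(),                      # 28x28 grid -> vector of 784 values
    tf.keras.layers.Dense(128, activation="relu"),  # hidden layer
    tf.keras.layers.Dense(64, activation="relu"),   # hidden layer
    tf.keras.layers.Dense(NUM_CLASSES, activation="softmax"),  # one probability per label
])
model.summary()

# Run two random placeholder "images" through the untrained network.
images = np.random.rand(2, 28, 28).astype("float32")
probs = model.predict(images, verbose=0)  # shape (2, NUM_CLASSES); each row sums to 1
predicted_labels = np.argmax(probs, axis=1)
print(probs.shape, predicted_labels)
```

Because the weights are still random at this point, the predicted labels are meaningless until the model has been compiled and trained.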

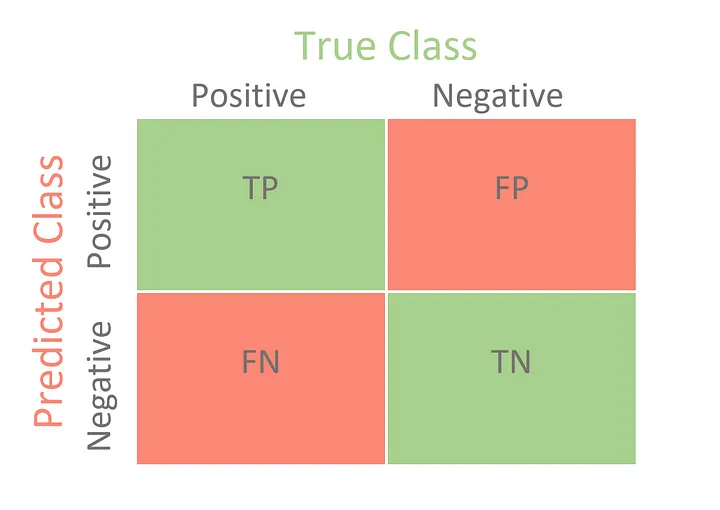

After the architecture is defined, the next step is to compile the model. The three main components of this step are the loss function, the optimizer, and the evaluation metrics. The loss function measures how far the network's predictions are from the labels. For instance, mean squared error is commonly used as the loss for regression, whereas cross-entropy loss is typically used for classification. The optimizer adjusts the weights and biases in the network to minimize the loss function, and the choice of optimizer can greatly affect both the training speed and the final performance of the model. Lastly, evaluation metrics such as accuracy, F1-score, precision, and recall quantify the performance of the model. In TensorFlow, these choices are passed to the model's `compile` method through its `loss`, `optimizer`, and `metrics` arguments.
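
Below is a sketch of `compile` for a small classifier. The model shape (4 input features, 3 classes) is hypothetical. Sparse categorical cross-entropy is used because the labels are assumed to be integers such as 0, 1, 2 (with one-hot labels, `CategoricalCrossentropy` would be the counterpart), and Adam is just one common optimizer choice. For a regression model, `loss="mse"` would take the place of the cross-entropy loss.

```python
import numpy as np
import tensorflow as tf

# Hypothetical classifier: 4 input features, 3 classes.
model = tf.keras.Sequential([
    tf.keras.Input(shape=(4,)),
    tf.keras.layers.Dense(16, activation="relu"),
    tf.keras.layers.Dense(3, activation="softmax"),
])

model.compile(
    # Loss: cross-entropy for classification with integer labels.
    loss=tf.keras.losses.SparseCategoricalCrossentropy(),
    # Optimizer: updates the weights and biases to reduce the loss.
    optimizer=tf.keras.optimizers.Adam(learning_rate=1e-3),
    # Metrics: reported during training and evaluation, but not optimized directly.
    metrics=["accuracy"],
)

# Quick check on random placeholder data: evaluate() reports the loss and each metric.
x = np.random.rand(8, 4).astype("float32")
y = np.random.randint(0, 3, size=8)
results = model.evaluate(x, y, verbose=0, return_dict=True)
print(results)
```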

Training a network requires multiple iterations over the dataset; each full pass is called an epoch. Each training step forward-propagates the inputs through the network, computes the loss, and then backpropagates to obtain the gradient of the loss with respect to every weight and bias, which the optimizer uses to update them. This process is repeated, typically for a fixed number of epochs or until the loss on the validation set stops improving. In TensorFlow, the model's `fit` method runs this loop; it takes the training data and, optionally, the validation data.
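
The loop described above can be spelled out with `tf.GradientTape`, which is roughly what `fit` automates. This is a sketch under assumptions: the synthetic dataset (label 1 when the four features sum to more than zero), the layer sizes, plain SGD with a learning rate of 0.1, and 50 full-batch steps are all illustrative choices.

```python
import numpy as np
import tensorflow as tf

tf.keras.utils.set_random_seed(0)  # make weight initialization repeatable

# Synthetic labeled data (assumption): label is 1 when the features sum to more than 0.
rng = np.random.default_rng(0)
x = rng.normal(size=(256, 4)).astype("float32")
y = (x.sum(axis=1) > 0).astype("int32")

model = tf.keras.Sequential([
    tf.keras.Input(shape=(4,)),
    tf.keras.layers.Dense(16, activation="relu"),
    tf.keras.layers.Dense(2, activation="softmax"),
])
loss_fn = tf.keras.losses.SparseCategoricalCrossentropy()
optimizer = tf.keras.optimizers.SGD(learning_rate=0.1)

losses = []
for epoch in range(50):  # full-batch: each iteration is one pass (epoch) over the data
    with tf.GradientTape() as tape:
        predictions = model(x, training=True)  # forward propagation
        loss = loss_fn(y, predictions)         # compare predictions with the labels
    grads = tape.gradient(loss, model.trainable_variables)            # backpropagation
    optimizer.apply_gradients(zip(grads, model.trainable_variables))  # update weights and biases
    losses.append(float(loss))

print(f"first loss {losses[0]:.3f}, last loss {losses[-1]:.3f}")
```

In everyday use, the same procedure is a single call after `compile`, such as `model.fit(x_train, y_train, epochs=..., validation_data=(x_val, y_val))`. `fit` additionally splits the data into mini-batches (its `batch_size` argument) and reports the validation loss after each epoch.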

After training, you'll need to evaluate the model's performance. The validation set can be used to fine-tune the model and select the best version based on its performance, after which the test set is used to assess how well the model generalizes to new, unseen data. TensorFlow provides tools such as the model's `evaluate` and `predict` methods for this, from which you can calculate accuracy, precision, recall, and other performance indicators like the confusion matrix, a table that counts how often each true label was predicted as each possible label.
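
As a sketch of what those indicators mean, the code below builds a confusion matrix and per-class precision, recall, and F1-score from a handful of hypothetical true and predicted labels using NumPy. With a trained Keras model, the predicted labels would typically come from `np.argmax(model.predict(x_test), axis=1)`, and `tf.math.confusion_matrix(labels, predictions)` computes the same matrix inside TensorFlow.

```python
import numpy as np

def confusion_matrix(y_true, y_pred, num_classes):
    """Rows are true labels, columns are predicted labels."""
    cm = np.zeros((num_classes, num_classes), dtype=int)
    np.add.at(cm, (y_true, y_pred), 1)  # count each (true, predicted) pair
    return cm

def safe_divide(num, den):
    """Element-wise num / den, returning 0 where den is 0 (e.g. a class never predicted)."""
    return np.divide(num, den, out=np.zeros(len(num)), where=den > 0)

# Hypothetical test-set labels and model predictions for 3 classes.
y_true = np.array([0, 0, 1, 1, 2, 2, 2, 1])
y_pred = np.array([0, 1, 1, 1, 2, 0, 2, 1])

cm = confusion_matrix(y_true, y_pred, num_classes=3)
tp = np.diag(cm)                             # correct predictions per class
accuracy = tp.sum() / cm.sum()
precision = safe_divide(tp, cm.sum(axis=0))  # of everything predicted as class k, the share that was k
recall = safe_divide(tp, cm.sum(axis=1))     # of everything truly class k, the share that was found
f1 = safe_divide(2 * precision * recall, precision + recall)

print(cm)        # rows = true labels, columns = predicted labels
print(accuracy)  # 0.75
print(precision, recall, f1)
```

The diagonal of the matrix holds the correct predictions; off-diagonal cells show which classes the model confuses with each other (here, one class-2 example was predicted as class 0).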

To conclude, TensorFlow is a powerful tool for creating neural networks for many kinds of classification tasks. With a solid understanding of these fundamentals, developers can make full use of the technology across a wide range of problems.

References:

https://www.sciencelearn.org.nz/images/5156-neural-network-diagram

https://medium.com/@shrutijadon/survey-on-activation-functions-for-deep-learning-9689331ba092

https://towardsdatascience.com/confusion-matrix-for-your-multi-class-machine-learning-model-ff9aa3bf7826