-

Notifications

You must be signed in to change notification settings - Fork 563

New issue

Have a question about this project? Sign up for a free GitHub account to open an issue and contact its maintainers and the community.

By clicking “Sign up for GitHub”, you agree to our terms of service and privacy statement. We’ll occasionally send you account related emails.

Already on GitHub? Sign in to your account

repremult: Fix subtle edge case in color conversion with unpremult=1 #2447

Conversation

Troy Sobotka pointed out to me that the implementation of colorconvert where unpremult=1 (i.e., unpremult, then transform, then premult) was wrong: the unpremult operation was careful to preserve the color value of pixels where alpha==0, but the premult we did at the end was just a straight multiply of the color by the alpha. This would have the effect of crushing those "glow" (A==0, RGB > 0) pixels to black in their round trip, whereas we want the original glow to be preserved. So really, the kind of premult we want for a one-time conversion of unpremultiplied to premultiplied is subtly different from the "re-premult" that we want as part of a round-trip operation. The fix in this patch is to add IBA::repremult() to do this modified operation (multiply color by alpha, unless alpha is 0), and also that is the correct operation to use in the unpremult-transform-repremult round trip that we do as `colorconvert(unpremult=true)`. Also noticed that the recent CMake overhaul inadvertently left testsuite/oiiotool-color un-tested, so re-enable that (which also lets us test this fix).

|

I’m hoping I haven’t exploded everything by bringing this up. |

|

Not at all. This was a pretty localized change and should even be safe to backport to the 2.1 release. |

|

Good catch. It's probably worth mentioning that Nuke makes this same error (when using the "(un)premult" knob, available on most nodes, but disabled by default). So this fix will diverge Nuke/OIIO results for a matching color convert operation. I'm not putting that forward as an argument against this fix, which LGTM, but good to be aware of. |

|

OMG, does anybody ever get color science right? |

|

So what should I do? Do the color conversion operations in oiio all need a third option to do it incorrectly to match Nuke? |

|

Please no. Please no. Be a beacon on how to do it properly. It's also plausible that folks will look to OIIO as a reference standard, and push it up the hill to software like Nuke. |

|

I'm inclined to merge this into master right away. I think there's little question that it's the right behavior, and that the round trip through colorconvert should never have crushed pure glows to black, that's definitely wrong and undesired behavior. @sobotka , how quickly do you need this fix in a release branch? I tend to do tagged releases on the 1st of each month, which is very soon. I can therefore squeeze it in if people are running into this as a problem in the release, people who can't use master. But if this is not time critical, or you can use the fix in master, it feels like maybe it's prudent to do the backport to 2.1 after this week's release, so that we can get comfortable with it in master and find any problem cases before it becomes the official supported release. Opinions? |

|

@lgritz No rush here; I happened across it after a discussion of appropriate handling and realizing that it was plausible not many pieces of software handle it correctly. |

|

Then I will stick with the aforementioned plan -- since Mike blessed it as well as it following your suggestion in the first place, I will merge into master in the short term, maybe wait just a couple more days in case anybody else has comments. I'll backport to 2.1 after January's release has gone out the door (so the fix will make it into the next 2.1 patch a month later, giving us some padding to find any problems using it in practice by people who work from master). |

|

I'll put in a request to Foundry to investigate the same issue, to at least put it on their radar. Maybe they would consider it as an option. |

|

I was just looking this issue because @johnhaddon pointed me at it, because I recently added the same sort of unpremultiplied toggle to some nodes in Gaffer's image compositing tools. I thought maybe I should mention that after playing with it a bit, my conclusion is that Nuke's current approach may actually be the best one, at least for an interactive compositing tool. My arguments: Because OIIO is more likely to be used in automated processes, I can certainly see why it's more attractive to try and automagically do the right thing where possible. But I'm not sure there's a good reason for Foundry to switch - and it's not clear to me that this new code is any more "correct color science" than the old code. The only technically correct thing to say is that doing unpremultiplied processing of pixels with zero alpha has an undefined result - it's a question of what is more likely to be convenient for users, which then depends on who your users are and what sort of images they have. I'm open to being persuaded though - if there is a strong argument that the Foundry should switch how this works in Nuke, that argument would likely apply to Gaffer as well. |

|

A pixel could have most of its color come from an additive element, but

have a tiny bit of alpha from a tiny bit of coverage of an opaque element.

A tiny bit of dust in the middle of your glowing cloud will produce

completely different results. A pixel with an alpha of 0 can obviously be

treated as purely additive, but what about an alpha of 1e-30? Or 1e-10? Or

1e-3? Any decision about where to draw this line will produce horrible

edges somewhere.

Correct me if I'm wrong here, but I believe the basic emission / occlusion

nature of associated alpha still applies here? Zero is zero, representing

zero occlusion, and corresponding emission encoded in the RGB. The small

value would only be an issue at the quantisation threshold of the current

bit depth, but for proper colourimetric transforms, it is still required.

C) Because trying to magically do the right thing

I don't understand where the code is trying to do anything magically? This

is more or less following the emission / occlusion model appropriately?

and it's not clear to me that this new code is any more "correct color

science" than the old code.

The disassociation is required for proper colourimetric conversions. That's

not the question here.

The question was whether or not blindly multiplying without care and

attention to potential emissions stored in the RGB channels was appropriate

behaviour?

With respect,

TJS

|

|

It's a good point to raise that in the context of interactive compositing (which will likely contain a chaos of different inputs and operations, that may not all be mathematically ideal), it's a judgement call by the artist to do what looks right. If Nuke or another compositing engine where to consider this change, I would suggest it be implemented as an option only (a "preserve glow" checkbox in-line with the (un)premult knob for example). That gives control to the artist, with a helpful tooltip to explain why they should consider using it. I did write Foundry and pointed them to this PR, and suggested they investigate it as an option to consider. Nuke would likely never be able to turn this on all the time since it would have potential to change image output for any op utilizing the unpremult feature of NukeWrapper. @danieldresser-ie it would be great to have a test image that would illustrate your point about broken edges as a result of this change as a reference, if you have something from your testing. |

|

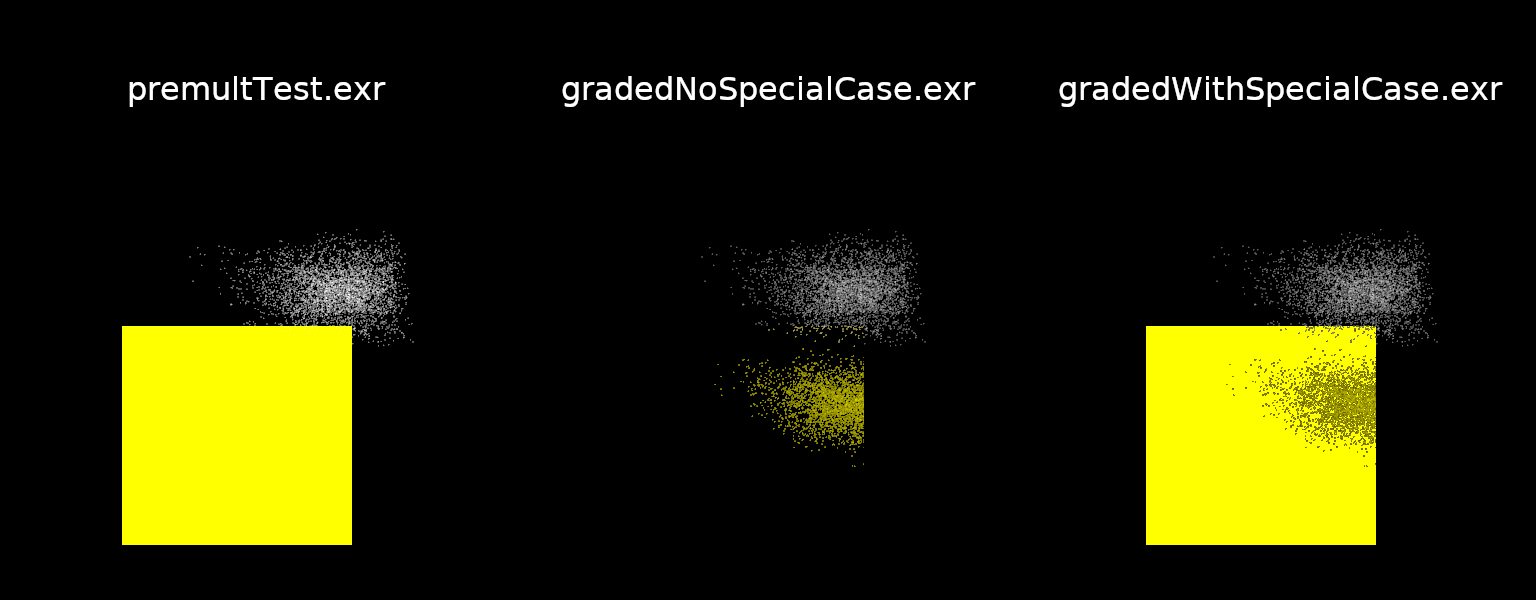

Hey, @michdolan, I realize that was probably a kinda confusing way to try and explain it - I was just looking into putting up an image, lets see if this works: Sample images here: https://github.com/danieldresser-ie/gaffer/tree/premultTestImages/testResults This shows a simple scene with 3 elements: a bright cloud of tiny opaque particles ( color <3,3,3> ), a black cloud of opaque particles, and in front, an additive yellow square with an alpha of 0. The next two images show the result of applying a gamma of 2 to the image, with unpremultiplying and repremultiplying. The first result is naive, blindly multiplying by 0 alpha afterwards, the second result adds a special case like in this PR, skipping the remultiply by 0. In this example, both results are terrible. Without the special case, the additive square gets crushed to black anywhere it doesn't overlap the particles. With the special case, the pixel where the alpha is actually 0 are fine, but the pixels where the square overlaps a particle with a non-zero alpha get treated completely differently ( even if this alpha is close to zero ). The desired result of an "unpremultiplied" color grade would be that the yellow square would be unaffected by the black particles behind it, as if you'd changed it's color in the rendered scene, but this is impossible to achieve. Just looking at the pixel data, we can't tell the difference between the white particles at the top of the image, and yellow overlapping pixels at the bottom. Now, this test case isn't a strong argument either way - neither approach produces good results. But it seems like a lot of practical cases would be messy like this - if there are no pixels with non-zero alpha, why would the image even have an alpha channel. Before I encountered this issue, I was thinking that this fix is an obvious win - but now I'm thinking if it's impossible to reliably get it 100% right, it may be more important that it be understandable for compositors ( mostly for the interactive case, this applies much less to OIIO ). But it is worth talking about now for us - I'm just adding the unpremult option to Gaffer, so this would be our one chance to get it right from start, without the baggage that you point out Nuke has. I'm just not entirely convinced it actually is the right option. |

Can you tell me what you are doing with the grade in the demo? Is it only the power function? I know that having mucked around this for a long time, the largest issue is quantisation, as hitting The primary workaround that works most ideally at the risk of a slight loss of precision is to temporarily flip to a log encoding for both occlusion and emission, grade with a careful eye to how the math on log translates, then revert back when complete. This should save the I'd be interested to see if the issue you are hitting is due to hitting the quantisation wall. I would expect the yellow + darker particles to grade darker where the alpha indicates occluding. That would seem to be a reasonable the goal of such an exponent based grading operation? Unless I'm completely misreading you, you seem to want to be able to maintain geometry based on a single alpha value, and not impact the composited result but rather the pre-composited result? Given adjusting the contrast has no real physically plausible context, that seems like the correct output? I may just be completely missing your vantage though. |

Yeah. I just needed something that is non-linear to demonstrate. A lot of color space transforms would actually be a lot worse, since often color space transforms totally crush values that get too high. The weird edge is because a pure additive pixel just directly uses |

|

The contrast feels exactly what I’d expect; it doesn’t have any physically plausible correlation and all we have is the alpha encoded image. As such, we expect exactly what we get; those pixels are semi-occluding the background by a degree, hence the non-physically plausible contrast adjustment is changing the contrast on the occluding pixels. With physically plausible manipulations such as exposure, etc. the result however, is correct. More correct than accidentally torching emissive pixels that are unoccluding. I don’t see a problem here?

They “have”? It doesn’t mean literally “premultiplied”, it means emissive yet unoccluding, so the scale seems correct? Everything appears to be behaving exactly as expected, given the limited and somewhat “lost” information? |

|

I may be a bit out of my depth here, but I have an interest in getting this right first time in Gaffer, so let me pretend I know what's going on and you can all tell if if I'm understanding correctly... This isn't about very small alpha and hitting

I'm not sure we can tell compositors to limit themselves to physically plausible adjustments, so we do need to factor non-linear operations into our thinking somehow. Correct me if I'm wrong, but if the operations are linear, isn't the whole unpremult-grade-premult process unnecessary anyway? The way I'm thinking of it is this. As a compositor, I could start out with two separate elements - one emissive and one opaque (the square and particles in Daniel's example). With the new "repremult" behaviour I could apply the same non-linear grade to each separately, and be totally satisfied with the results. Then I could composite them together with an Over and also be satisfied with the results. I'd chalk this up as a definite win for "repremult". Alternatively, I could first Over the elements together and then apply the same non-linear grade, again with the new "repremult" behaviour. Now I get the unintuitive results from Daniel's example. Sure, they're the expected results if you think about the maths, but that's not the same as intuitive. The problem is that the Over has thrown away the information about what proportion of each pixel is "emissive and unoccluding" and what proportion is "literally premultiplied". We simply don't have enough information to match the results of the grade-then-composite operation for such "mixed" pixels. Sure, I should grade before merging, but maybe I was given the two elements in a pre-merged form. Or maybe I'm just trying to make a nice picture and hit a deadline and it's not clear to me why order of operations should be relevant. Or maybe I'm comping on a frame where the issue isn't apparent, and I won't notice problems on other frames until next morning in dailies. It's this latter case where we're unsure if "repremult" is a win in an interactive compositing scenario. We know we can't match the grade-then-composite results, so we have a choice between two imperfect options. Either we go with the old behaviour, where the purely emissive pixels disappear entirely, or we go with the new behaviour, where purely emissive pixels are OK, but "mixed" pixels come out darker than wanted. The argument in favour of the old behaviour is that it is so obviously wrong that it forces you to back up and reevaluate. The "repremult" behaviour is more subtly wrong, and hence has some potential to be overlooked. Assuming you don't tell me I've completely misunderstood everything, I think I'm personally erring towards adopting the "repremult" behaviour for Gaffer. I'd like to get things right automatically where we can. |

I believe we are in agreement then; the result is as correct as it can be given the reduced information provided. I may be very wrong here, but the missing information I believe is depth, and I think deep compositing might have a more valid result? |

I believe so too. Daniel and I are just musing about whether being obviously inaccurate might be more pragmatic than being subtly inaccurate in Gaffer's interactive compositing context. In case we haven't been clear enough, we're not objecting to this PR in any way or trying to influence OIIO's direction. We're merely hoping to pick your brains...

Yep, with deep, a single pixel could have different samples for the emissive and opaque components, so they could be graded independently before flattening. When merging two deep images, all input samples are retained in the output image. We now have deep support in Gaffer, so this seems to add further weight to following OIIO's lead. |

Deep math makes my head explode, so I may be wrong here. Isn’t the geometry of coverage lower with deep, meaning Daniel’s case results in some of the geometry being compiled in, with the near emissive pixel now retaining some of the emissive “size”? That is, the rear pixels end up taking up less area of the pixel window. It seems to me that this might be a case of the actual merging operation that happens before the grade. It seems very similar to the side cases of uncorrelated / disjoint / conjoint over, where the calculation of alpha is what the issue is here?

It’s the exact same nuanced question that Gritz brought up. The answer is that the assumptions are that the RGB values are radiometric. This is false as best as I can see. Uniform values are fine where R=G=B, but other adjustments break I believe. Which brings up an interesting point if the disassociation is required for a power? Perhaps it isn’t? No radiometric expert here, so the following is to be consumed with caution. If we change a hue for example, via a grade, we are not operating on purely radiometric spectral components, and as such, the math goes wildly wrong. We don’t have access to the underlying radiometric spectral components, and only the pseudo values in RGB. So if we shift a hue from a greenish to a reddish in some arbitrary RGB rendering space, the weighting of the resulting RGB goes sideways breaking the alpha association, and the glowing / darkened edges pop up as a result. The same problems should appear on generic colour space transforms I believe. TL;DR: In changing a hue the underlying spectral radiometry is changing in a nonlinear and unknown fashion with respect to the RGB baked output. I think that with spectral this isn’t the case, as it comes in before the CMFs. I haven’t rolled it through Colour to verify, but it seems logical standing in the idiot corner. |

|

I'm no color scientist and am scratching my head over all of this as much as you guys. I have no particular agenda about which computations OIIO does when color correcting --- I will do whatever you guys think is the consensus right way. To be honest, what I would really like to do is outsource the problem entirely by having OCIO provide API functions that do color transformations on RGBA pixels (not just RGB), implemented as best as they can and with as many options or varieties as necessary, and OIIO will just straightforwardly call that rather than feeling like it has to "prepare/disassociate" before the OCIO step and then undo/reassociate after. (To this day, I cannot articulate with any real emotional conviction why you need the disassociate/reassociate step surrounding the color conversion, sorry Jeremy and Troy who have tried so hard to explain it to me.) When Troy suggested this change, it made some intuitive sense to me, in the sense that it was clearly wrong that we lost the (r,g,b,0) pixels -> (0,0,0,0) in the round trip, this fix addresses that special case without changing the processing of any other pixel values (right or wrong as they may already have been). I figured that has to be a (small) step forward -- right? -- even while acknowledging that the whole exercise of trying to color correct pixels with non-1 alpha is a house of cards to begin with. Like I said to Troy in an off-list conversation, I'm a rendering guy at heart and so I always try to reason about these things in terms of light transport. If this were about detecting light with a camera, all you have physically is how much light hits the sensor -- there is not really such a thing as alpha, everything is inherently "premultiplied" (I hate that term), and the color channels represent spectral components. Ideally with a linear scene-referred encoding of the channels as well. That's the only principled approach, and everything else piles on ambiguity, confusion, or incomplete analogies. There are several concepts in CG that I'm inherently leery of: alpha (nonphysical, and conflates "coverage" with transparency), any nonlinear values along with non-1 alpha, color spaces that are not spectral non-cross-talking channels, and the very idea of unassociated alpha (which means... "the light you would get in that pixel if the object covered the whole pixel, but actually it doesn't, it only covers half the pixel" -- ick). The very situation we are worried about combines every one of those muddled concepts, so I'm not the least bit surprised that there is no one way to handle the color conversion in a principled way that "works" in all situations. We are adjudicating which of several clearly wrong approaches is the most tolerable. :-) |

|

This has ended up being fairly interesting - particularly bringing up deep images, which I hadn't considered. I don't think I'm misrepresenting things too much to say we can basically classify the images where we might want to do an unpremultiplied color transform into 4 types: A) Everything is occluding. Everything works fine, the fix is not relevant So, I think in the end, my conclusion is that for Gaffer we should match the same behaviour as this PR, because I find the deep case compelling, and it helps for B), and C) is pretty inconclusive. Thanks for indulging me in this conversation - I guess I have reached the same conclusion you folks did, though the route was a bit different. It is interesting to see how people think about this stuff. As Larry says, it is all a bit of a mess, though I feel like it generally possible to define a correct result based on what you would get if you went back to a render and made the change there - though we're usually taking shortcuts that mean we don't get anywhere near matching that correct result, and defining what error is reasonable is certainly tricky. |

|

Foundry agreed this would be a useful option to have in Nuke in the future and logged it as a feature request: |

|

I wanted to thank everyone in this thread. It's always a terrific way to feel small when wizened minds focus and tackle a problem. Plenty to think about. |

Troy Sobotka pointed out to me that the implementation of colorconvert

where unpremult=1 (i.e., unpremult, then transform, then premult) was

wrong: the unpremult operation was careful to preserve the color value

of pixels where alpha==0, but the premult we did at the end was just a

straight multiply of the color by the alpha. This would have the

effect of crushing those "glow" (A==0, RGB > 0) pixels to black in

their round trip, whereas we want the original glow to be preserved.

So really, the kind of premult we want for a one-time conversion of

unpremultiplied to premultiplied is subtly different from the "re-premult"

that we want as part of a round-trip operation.

The fix in this patch is to add IBA::repremult() to do this modified

operation (multiply color by alpha, unless alpha is 0), and also that is

the correct operation to use in the unpremult-transform-repremult round

trip that we do as

colorconvert(unpremult=true).Also noticed that the recent CMake overhaul inadvertently left

testsuite/oiiotool-color un-tested, so re-enable that (which also lets

us test this fix).