- Description

- Goals

- Software design

- Code quality

- Installation

- Third-party dependencies

NetworkService is an easy-to-use C++17 project that aims to execute network-related commands more securely by relying on tools such as ip, iptables, ebtables, nftables, a custom network tool, etc. It won't change your everyday life, but for developers and enterprises working on systems that need to communicate with the rest of the world through a network, a service like this can be very useful.

By carefully studying the source code, you might think this project is just many lines of code that take a configuration file as input, then perform several fork+exec calls and write to some network-related files. That's indeed roughly what it does, but one must remember that the goal of professional software development is not just to make software work.

Thus, NetworkService has been designed and developed with best practices in software design, object-oriented programming, code quality and secure programming in mind. At least, I tried :-). Let me know if something could be improved; one never stops learning.

Why create a native service for that purpose instead of directly using the needed tools? There are other means, but none met my objectives because of licensing or security issues, the need for a proper architecture, etc. Here is a non-exhaustive overview:

- Calling commands one by one in a bash script

- Using successive system() calls

- Statically or dynamically linking with used tools' libraries (when available)
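Unlike system(), which hands its whole string to /bin/sh -c (so any untrusted part of a command line can smuggle in extra shell commands), fork+exec passes each argument verbatim to the new program. The sketch below illustrates that approach; runCommand() is a hypothetical helper written for this illustration, not the project's actual Command executor API.

```cpp
#include <cassert>
#include <cerrno>
#include <string>
#include <sys/types.h>
#include <sys/wait.h>
#include <unistd.h>
#include <vector>

// Hypothetical helper (not the project's API): runs a command without any shell.
// Returns the command's exit status, 127 if it could not be executed,
// or -1 if the arguments are empty, fork() fails or the child did not exit normally.
int runCommand(const std::vector<std::string>& args)
{
    if (args.empty()) {
        return -1;
    }

    // Build the NULL-terminated argv array before forking.
    std::vector<char*> argv;
    argv.reserve(args.size() + 1);
    for (const auto& arg : args) {
        argv.push_back(const_cast<char*>(arg.c_str())); // execvp() does not modify them
    }
    argv.push_back(nullptr);

    const pid_t pid = fork();
    if (pid < 0) {
        return -1;
    }
    if (pid == 0) {
        // Child: each argument reaches the program verbatim, so characters such
        // as ';', '|' or '$(...)' are never interpreted by a shell.
        execvp(argv[0], argv.data());
        _exit(127); // Only reached if execvp() failed
    }

    // Parent: wait for the child, retrying if interrupted by a signal.
    int status = 0;
    while (waitpid(pid, &status, 0) < 0) {
        if (errno != EINTR) {
            return -1;
        }
    }
    return WIFEXITED(status) ? WEXITSTATUS(status) : -1;
}
```

For example, runCommand({"ip", "link", "set", "eth0", "up"}) runs ip directly, and an argument such as "eth0; reboot" would merely be treated by ip as an (invalid) interface name instead of being executed by a shell. Note that execvp() searches PATH; a hardened service would rather use execv() with absolute paths.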

Usually, I develop all my personal software in C, partly because it is one of the programming languages I'm most comfortable with. This time, I wanted to practice a recent version of C++, a widely used programming language that I have not used much, even though I understand it quite well.

However, using C++17 is not the only purpose of this project. Since I had time, I decided to set up many of the steps that I consider essential in a software development process, always starting with the software design.

I do not consider the final software, useful as it may be, to be the most important part of this project, but rather everything that made it possible to produce it: design, tools, continuous integration, automatic checks for programming errors, applied good practices, etc.

The above flowchart is a simplified graphical view of what the service should do. Once a valid configuration file is provided, it is parsed and loaded into memory to get the full list of network and firewall commands to execute. Firewall commands are optional: the service can be used to only configure network interfaces (set IP addresses, add network interfaces, update routing tables, etc.).

Firewall commands make it possible to monitor incoming and outgoing traffic on a network interface and to filter it according to user-defined rules. A rule can be as simple as: "Reject UDP packets coming from IP address 10.0.0.1/8".

Note that, when firewall rules are provided, network commands have to be executed first so that network interfaces are correctly configured before use.
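A minimal sketch of that ordering constraint, assuming a hypothetical ServiceConfig structure holding argv-style commands and an injected execute callback (neither is the project's real API). The firewall rule quoted above could then be expressed as an iptables command.

```cpp
#include <cassert>
#include <functional>
#include <string>
#include <vector>

// Hypothetical types for illustration: an argv-style command and the
// in-memory result of parsing a configuration file.
using Command = std::vector<std::string>;

struct ServiceConfig {
    std::vector<Command> networkCommands;  // interfaces, addresses, routes...
    std::vector<Command> firewallCommands; // optional: may be left empty
};

// Runs all network commands first, then the firewall commands, so that
// interfaces are configured before any rule that uses them.
// Stops at the first command returning a non-zero status.
bool applyConfig(const ServiceConfig& config,
                 const std::function<int(const Command&)>& execute)
{
    for (const auto* group : {&config.networkCommands, &config.firewallCommands}) {
        for (const auto& command : *group) {
            if (execute(command) != 0) {
                return false;
            }
        }
    }
    return true;
}
```

For instance, with networkCommands containing {"ip", "link", "add", "br0", "type", "bridge"} and firewallCommands containing {"iptables", "-A", "INPUT", "-i", "br0", "-p", "udp", "-s", "10.0.0.1/8", "-j", "REJECT"}, the bridge is created before the rule referring to it is installed, and an empty firewall list simply skips the second pass.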

From the flowchart, one can at least extract three components:

- Configuration: To load the configuration file

- Network: To check that physical interfaces to be used exist and execute network commands

- Firewall: To configure firewall by executing rules

A Logger component has been added to log messages that help debug the service when necessary, report interesting operations it is performing, etc. The Network service core orchestrates how the components work together to fulfill the objectives.

The core service only depends on (stable) abstractions. It is not expected to change much since it has no knowledge of low-level details. These are handled by other components, which act as plugins from the service's point of view. Reader, Writer and Command executor are underlying helper classes that improve maintainability, ... and make executing commands easier.

Note that extending the service is easy: it consists of adding new code (each XXX can be an extension); no change to existing code should be necessary.
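To illustrate what "depending only on abstractions" means, here is a minimal sketch in which the core receives its plugins as interfaces. All class names are hypothetical and only loosely inspired by the components described above (Configuration, Command executor, Logger); the actual project code may be organized differently.

```cpp
#include <cassert>
#include <memory>
#include <string>
#include <utility>
#include <vector>

// Stable abstractions the core depends on (hypothetical names).
class ILogger {
public:
    virtual ~ILogger() = default;
    virtual void info(const std::string& message) = 0;
};

class IConfigLoader {
public:
    virtual ~IConfigLoader() = default;
    // Returns argv-style commands to execute, in order.
    virtual std::vector<std::vector<std::string>> load() = 0;
};

class ICommandExecutor {
public:
    virtual ~ICommandExecutor() = default;
    virtual bool execute(const std::vector<std::string>& argv) = 0;
};

// The core only orchestrates; it knows nothing about JSON, fork/exec or
// where logs go. Concrete plugins are injected by whoever builds it.
class NetworkServiceCore {
public:
    NetworkServiceCore(std::unique_ptr<IConfigLoader> loader,
                       std::unique_ptr<ICommandExecutor> executor,
                       std::unique_ptr<ILogger> logger)
        : m_loader(std::move(loader)),
          m_executor(std::move(executor)),
          m_logger(std::move(logger))
    {
    }

    bool run()
    {
        for (const auto& argv : m_loader->load()) {
            if (argv.empty()) {
                continue; // nothing to execute
            }
            m_logger->info("Executing " + argv.front());
            if (!m_executor->execute(argv)) {
                return false;
            }
        }
        return true;
    }

private:
    std::unique_ptr<IConfigLoader> m_loader;
    std::unique_ptr<ICommandExecutor> m_executor;
    std::unique_ptr<ILogger> m_logger;
};

// One possible plugin: a fake loader returning hard-coded commands.
// Adding a JSON/YAML/SQL loader means adding a class like this one;
// NetworkServiceCore itself stays untouched.
class FakeConfigLoader : public IConfigLoader {
public:
    std::vector<std::vector<std::string>> load() override
    {
        return {{"ip", "link", "set", "lo", "up"}};
    }
};
```

Supporting a new configuration format then means writing another IConfigLoader implementation and wiring it where the core is constructed, which is the kind of extension described above.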

Concretely, several tools have been used to reach the level of quality expected in this project. Most of them are automatically enabled when generating a debug version of the software.

It's easy to forget to manually run the tools and build targets that check conformance with the expected code quality. Automating these checks is therefore a no-brainer, and that's what is done in this project (see .travis.yml). When new pull requests are proposed, the following checks are performed by the continuous integration tool:

- Checks to ensure that there is no trailing whitespace

- Checks to ensure that doxygen comments are correctly written

- Checks to ensure that code formatting is correct

- Checks to ensure that static analysis/linting returns no error

- Checks to ensure that the software compiles in all configurations

- etc.

Automating what is better handled by tools is a good habit, because developers can then focus on what really brings added value to the enterprise.

Doxygen has been used to document classes and, more generally, all header files that make up the project, and to generate the documentation. The resulting documentation, generated from the Doxyfile, can be browsed online.
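For instance, a function documented in the Doxygen style could look like the following. isValidInterfaceName() is a hypothetical helper (not taken from the project); its checks are modelled on the Linux kernel's rules for interface names (non-empty, at most 15 characters, not "." or "..", no '/', ':' or whitespace).

```cpp
#include <cassert>
#include <cctype>
#include <cstddef>
#include <string>

/**
 * @brief Checks whether a string is an acceptable Linux network interface name.
 *
 * The name must be non-empty, fit in IFNAMSIZ (16) bytes including the
 * terminating NUL, differ from "." and "..", and contain no '/', ':',
 * whitespace or embedded NUL character.
 *
 * @param name Candidate interface name, e.g. "eth0".
 * @return true if @p name is acceptable, false otherwise.
 */
bool isValidInterfaceName(const std::string& name)
{
    constexpr std::size_t kMaxLength = 15; // IFNAMSIZ - 1
    if (name.empty() || name.size() > kMaxLength || name == "." || name == "..") {
        return false;
    }
    for (const char c : name) {
        if (c == '\0' || c == '/' || c == ':' || std::isspace(static_cast<unsigned char>(c)) != 0) {
            return false;
        }
    }
    return true;
}
```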

The GCC compiler comes with several interesting compilation flags. See compilation-options.cmake for those used in this project, and the GCC options documentation to understand their meanings.

AddressSanitizer, aka ASAN, is a memory error detector for C/C++. As described in its documentation, it can detect errors such as buffer overflows, use-after-free, etc.

For more details, see ENABLE_ASAN in compilation-options.cmake.
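As an illustration, the function below contains a deliberate off-by-one read, enabled only when offByOne is true, so that it can be compiled with ASAN flags (e.g. -fsanitize=address -fno-omit-frame-pointer -g) to see a report. This is a hypothetical demo, not code from the project; the flags actually used by the project are those in compilation-options.cmake.

```cpp
#include <cassert>
#include <cstddef>
#include <memory>

// Sums a freshly allocated array holding 0, 1, ..., count - 1.
// DEMO ONLY: when 'offByOne' is true, the second loop deliberately reads one
// element past the end of the heap allocation. Such a read often goes
// unnoticed in a normal build, whereas ASAN stops the program with a
// "heap-buffer-overflow" report pointing at the faulty line.
long sumWithOptionalOverflow(std::size_t count, bool offByOne)
{
    auto values = std::make_unique<int[]>(count);
    for (std::size_t i = 0; i < count; ++i) {
        values[i] = static_cast<int>(i);
    }

    const std::size_t end = offByOne ? count + 1 : count; // deliberate bug when true
    long sum = 0;
    for (std::size_t i = 0; i < end; ++i) {
        sum += values[i]; // out of bounds when i == count
    }
    return sum;
}
```

Calling sumWithOptionalOverflow(5, true) in an ASAN-instrumented build should trigger the report, while sumWithOptionalOverflow(5, false) is well-defined and returns 10.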

Several compilation options can be used to help harden the resulting binary against memory corruption attacks, or to provide additional warnings during compilation. This helps produce software protected against many known classes of security vulnerabilities at little cost.

For more details, see ENABLE_SECU in compilation-options.cmake.

Include What You Use aka IWYU is a tool that checks all header and source files to make sure "#include"-ed files are really used. It may be particularly useful when, for example, you've reworked some source files and would like to know if all "#include" inside are still necessary.

However, note that IWYU can be a bit verbose because it expects each source file to explicitly include all the header files it uses. Consider A.h, which includes B.h: if Main.cpp includes A.h but also uses functions declared in B.h, IWYU will warn because #include "B.h" is missing from Main.cpp. Also, the tool sometimes recommends using a system's internal headers instead of their public versions. Besides, it often tries to enforce the use of forward declarations, which can certainly speed up build times but must be used wisely (see "Forward declarations: pros vs cons").

For all the reasons above, I decided not to apply all of its recommendations and not to enable it by default, even in debug mode. For more details, see ENABLE_IWYU in compilation-options.cmake.
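The following single-file sketch shows the two IWYU habits discussed above: including every standard header whose symbols are used directly, and forward-declaring a class that is only handled through a pointer. Interface, Logger, namesOf() and notify() are hypothetical names used for illustration.

```cpp
#include <cassert>
#include <string> // std::string is used directly below, so include it directly...
#include <vector> // ...even if another header already pulls it in transitively

// Forward declaration: the code up to notify() only stores and passes
// Logger pointers, so Logger's full definition is not needed there.
class Logger;

struct Interface {
    std::string name;
    Logger* logger = nullptr; // a pointer to an incomplete type is fine
};

std::vector<std::string> namesOf(const std::vector<Interface>& interfaces)
{
    std::vector<std::string> names;
    names.reserve(interfaces.size());
    for (const auto& itf : interfaces) {
        names.push_back(itf.name);
    }
    return names;
}

// Full definition: required wherever Logger members are actually used.
class Logger {
public:
    void log(const std::string& message)
    {
        m_last = message;
        ++m_count;
    }
    int count() const { return m_count; }
    const std::string& last() const { return m_last; }

private:
    int m_count = 0;
    std::string m_last;
};

void notify(const Interface& itf)
{
    if (itf.logger != nullptr) {
        itf.logger->log("interface " + itf.name + " is ready");
    }
}
```

The forward declaration is enough as long as only the pointer is stored or passed around; as soon as a member of Logger is used (as in notify()), the full definition must be visible, which is one reason forward declarations must be used wisely.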

Link What You Use, aka LWYU, means what the name suggests: ensuring that the only linked libraries are those really used by the software. It consists of adding the linker flag "-Wl,--no-as-needed" and running the command "ldd -r -u" on the generated executable.

From ld manual page: "Normally the linker will add a DT_NEEDED tag for each dynamic library mentioned on the command line, regardless of whether the library is actually needed or not. --as-needed causes a DT_NEEDED tag to only be emitted for a library that satisfies an undefined symbol reference from a regular object file or, if the library is not found in the DT_NEEDED lists of other libraries linked up to that point, an undefined symbol reference from another dynamic library. --no-as-needed restores the default behaviour.".

For more details, see ENABLE_LWYU in compilation-options.cmake.

Code formatting is a useful way to keep the look and feel of source code consistent. Developers who have already performed code reviews on Gerrit, for example, will probably agree that there's nothing worse than being forced to focus on formatting/styling issues instead of spending that time on what really matters: the content, the architecture. Clearly, that should never happen.

In this project, code formatting can be done automatically thanks to a Git hook, or manually using the make clang-format command. Formatting is also automatically checked by the continuous integration tool when a new commit is submitted.

For more details, see Clang Format and the .clang-format configuration file.

Static analysis is a set of processes for finding source code defects and vulnerabilities. In static analysis, the code under examination is not executed.

On the other hand, code linters are usually defined as style checkers, but they can also behave as basic static code analyzers.

There is a wide variety of static analysis tools (Clang-Tidy, Cppcheck, Coverity Scan, etc.). Some, like Clang-Tidy, can be configured to play both roles: linter and static analyzer.

Which one is better? I believe each can report errors the others did not find, so ideally more than one should be used. This project started with Clang-Tidy, configured by the .clang-tidy file. Coverity Scan and possibly others will be added afterward.

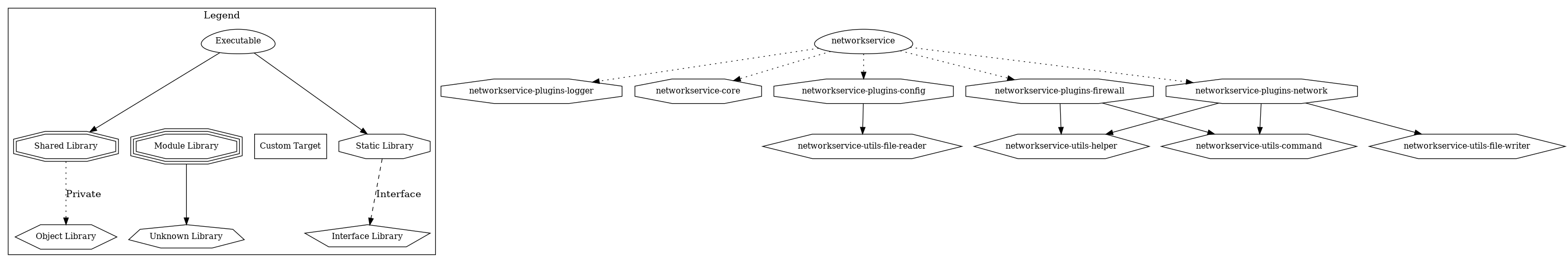

Wikipedia defines a dependency graph as "a directed graph representing dependencies of several objects towards each other". I use Graphviz to generate the dependency graph, which shows how the different CMake build targets I've defined are related to each other. Here is the result:

A dependency graph is extremely important when it comes to finding out whether or not there are dependency cycles in the software design. A dependency cycle occurs, for example, when software components depend on each other as follows: A → B → C → D → B (→ meaning "depends on"). Suppose that each software component is under a specific team's responsibility. In this example, Team A can't release a new version of its component without ensuring that B, the component it directly depends on, is compatible. As B depends on C, Team A must do the same for C, then for D, then for B again, which must be compatible with D, etc. Thus, simply testing component A requires far too many checks.

It should always be possible for a team to work on its own side, publishing new releases and integrating other teams' releases whenever it wants, without being much impacted by changes in components it should not depend on. In the previous example, a change in component B may force D to make some updates, which in turn require changes in C, then in B, and so on. Imagine nightly builds that keep failing in a loop just because each team is at the mercy of the others.

I hope it's now clear that there must never be cycles in a software architecture, whether at component level (developer's responsibility) or at system level (architect's responsibility).
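Graphviz only draws the graph; detecting a cycle automatically is a classic depth-first search problem in which reaching a node that is still being explored reveals a back edge. The sketch below is not part of the project's build; DependencyGraph and hasDependencyCycle() are hypothetical names, and the test data reuses the A → B → C → D → B example.

```cpp
#include <cassert>
#include <map>
#include <string>
#include <vector>

// Hypothetical representation: component -> components it directly depends on.
using DependencyGraph = std::map<std::string, std::vector<std::string>>;

namespace {

enum class Mark { Unvisited, InProgress, Done };

// Depth-first search from 'node'. Meeting a component that is still
// InProgress means we came back to it through its own dependencies: a cycle.
bool visit(const DependencyGraph& graph, const std::string& node,
           std::map<std::string, Mark>& marks)
{
    marks[node] = Mark::InProgress;
    const auto it = graph.find(node);
    if (it != graph.end()) {
        for (const auto& dependency : it->second) {
            const Mark mark = marks[dependency]; // new entries start as Unvisited
            if (mark == Mark::InProgress) {
                return true;
            }
            if (mark == Mark::Unvisited && visit(graph, dependency, marks)) {
                return true;
            }
        }
    }
    marks[node] = Mark::Done;
    return false;
}

} // namespace

// Returns true if at least one dependency cycle exists in 'graph'.
bool hasDependencyCycle(const DependencyGraph& graph)
{
    std::map<std::string, Mark> marks;
    for (const auto& entry : graph) {
        if (marks[entry.first] == Mark::Unvisited && visit(graph, entry.first, marks)) {
            return true;
        }
    }
    return false;
}
```

A check like this could run in CI on the extracted target dependencies, failing the build as soon as a cycle appears rather than relying on someone inspecting the generated picture.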

Unit testing is a level of software testing whose purpose is to validate that each small piece of the software, aka the System Under Test, performs as designed. It absolutely does not guarantee that the software is free of bugs, only that it does what one expected it to do.

There is no need to go back over the properties a good unit test should have. I would just insist on one important point: do not wait until you write unit tests to ask yourself whether your system is testable. Testability has to be taken into account when designing the system.
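As an example of designing for testability, consider the Network component's check that the physical interfaces to use exist. If the check queried the system directly, unit tests would depend on the machine they run on; injecting the interface lister avoids that. In this sketch, findMissingInterfaces(), listSystemInterfaces() and InterfaceLister are hypothetical names, while getifaddrs()/freeifaddrs() are the real functions from <ifaddrs.h>.

```cpp
#include <cassert>
#include <functional>
#include <ifaddrs.h>
#include <set>
#include <string>
#include <vector>

// Hypothetical abstraction: anything able to list interface names.
using InterfaceLister = std::function<std::set<std::string>()>;

// Production implementation based on getifaddrs().
std::set<std::string> listSystemInterfaces()
{
    std::set<std::string> names;
    ifaddrs* list = nullptr;
    if (getifaddrs(&list) != 0) {
        return names; // on error, report no interface (everything looks missing)
    }
    for (const ifaddrs* entry = list; entry != nullptr; entry = entry->ifa_next) {
        if (entry->ifa_name != nullptr) {
            names.insert(entry->ifa_name);
        }
    }
    freeifaddrs(list);
    return names;
}

// Returns the required interfaces that do not exist. Because the lister is
// injected, unit tests can replace the real system with a fake one.
std::vector<std::string> findMissingInterfaces(const std::vector<std::string>& required,
                                               const InterfaceLister& lister = listSystemInterfaces)
{
    const std::set<std::string> available = lister();
    std::vector<std::string> missing;
    for (const auto& name : required) {
        if (available.count(name) == 0) {
            missing.push_back(name);
        }
    }
    return missing;
}
```

In production, findMissingInterfaces({"eth0"}) queries the system through the default lister; in a unit test, a lambda returning a fixed set of names plays the role of a fake, with no real network interface involved.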

Who writes the unit tests for a specific component? The usual answer is the developer who wrote that component. However, developers sometimes tend to write tests that simply work with the code they have already written: when they made mistakes in their code, they can unintentionally write wrong unit tests instead of tests that reveal those mistakes. For this reason, an expert in functional safety once told me that the developer who wrote the unit under test should not be responsible for writing the corresponding unit tests. I think it makes sense.

GoogleTest and GoogleMock frameworks have been used to write unit tests in this project. You can find tests here.

Wikipedia defines it quite well: "In computer science, test coverage is a measure used to describe the degree to which the source code of a program is executed when a particular test suite runs. A program with high test coverage, measured as a percentage, has had more of its source code executed during testing, which suggests it has a lower chance of containing undetected software bugs compared to a program with low test coverage.".

Code coverage results are available online. Unit tests are automatically built and launched by the continuous integration tool, which then sends the coverage to coveralls.io.

It may be interesting to also generate test coverage locally on development machine so that one can get an idea before submitting any change. A make coverage command has been added to generate the report. After a successful make install, open "out/share/coverage/html/index.html" with your favorite browser to see the HTML report.

Note that a Cobertura XML report is also generated (see "out/share/coverage/xml/cobertura.xml"). It is useful when the coverage report needs to be displayed by your CI/CD tool (e.g. Jenkins).

Git hooks are scripts that Git executes before or after events such as commit or push. They can be very useful for identifying certain issues before a new change is submitted to code review. Pointing these issues out before code review has the huge advantage of allowing code reviewers to focus on the content and the architecture of the change, instead of wasting time on issues related to style, formatting, trailing whitespace, etc.

Performing quality-related checks on the whole software (static analysis, code formatting, linting, ...) takes time, mainly because of Clang's analyzer. Also, it's not a good idea to rely on developers to manually run those checks before every commit: manual actions can be unintentionally forgotten.

For these reasons, a pre-commit hook has been added to this project to perform some checks on changed files only. It is automatically installed into the hooks directory; no manual action is required.

The pre-commit hook is executed on "git commit". It checks for trailing whitespace, fixes code formatting and runs the static analyzer on staged files. As it currently requires version 10 of clang-format and clang-tidy, you have to install these tools on your host machine to fully benefit from the hook.
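Conceptually, the trailing-whitespace part of the hook boils down to the check below. The hook itself is a script shipped with the repository; this C++ function (a hypothetical linesWithTrailingWhitespace()) is only a sketch of the logic applied to one file's content. For staged changes, Git itself also offers git diff --cached --check, which reports whitespace errors.

```cpp
#include <cassert>
#include <cstddef>
#include <istream>
#include <sstream>
#include <string>
#include <vector>

// Returns the 1-based numbers of the lines ending with a space or a tab.
std::vector<std::size_t> linesWithTrailingWhitespace(std::istream& input)
{
    std::vector<std::size_t> offending;
    std::string line;
    std::size_t number = 0;
    while (std::getline(input, line)) {
        ++number;
        if (!line.empty() && (line.back() == ' ' || line.back() == '\t')) {
            offending.push_back(number);
        }
    }
    return offending;
}
```

A hook built on this idea would reject the commit whenever the returned list is non-empty for any staged file, printing the offending line numbers.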

Everything needed to build and test this project is provided in the Dockerfile. Before moving on to the next sections, first build the Docker image:

```shell
git clone https://github.com/BoubacarDiene/NetworkService.git [-b <version>] && cd NetworkService
docker build -t networkservice-image ci/
```

All commands in the next sections have to be run inside the Docker container. Here is how to start it:

```shell
docker run --privileged -it -u $(id -u) --rm -v $(pwd):/workdir networkservice-image:latest
```

| Name | Options | Default | Description |
|---|---|---|---|
| CONFIG_LOADER | json, fake | json | Where to retrieve the network configuration from? |
| LOGS_OUTPUT | std | std | Which logger to use? (standard streams, ...) |
| ENABLE_UNIT_TESTING | ON, OFF | OFF | Enable/disable unit testing |
| EXECUTABLE_NAME | Any valid executable name | networkservice | Name of the generated executable |

| Short option | Long option | Values | Description |
|---|---|---|---|
| -c | --config | e.g. /etc/myconfig.json | Path to the configuration file |
| -s | --secure | true or false | true: secure mode / false: non-secure mode |

The above runtime options are required to run the service. The configuration file contains the commands to execute, while the secure mode (more or less) determines which features are used when executing them. Running the service securely means "sanitize files", "drop privileges" and "reseed PRNG" before executing commands.

Since secure mode performs these extra steps, it might be worth testing both modes and then choosing one according to your execution time constraints.
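For reference, here is a dependency-free sketch of how these two options could be parsed and validated. The project actually relies on CLI11 for this; Options and parseOptions() are hypothetical names, and the std::filesystem check only approximates the kind of "existing file" validation a command-line library can perform.

```cpp
#include <cassert>
#include <cstddef>
#include <filesystem>
#include <optional>
#include <string>
#include <system_error>
#include <vector>

// Hypothetical result of parsing "-c/--config <path>" and "-s/--secure <true|false>".
struct Options {
    std::string configPath;
    bool secure = false;
};

// Both options are required and each takes exactly one value.
// Returns std::nullopt on any error (unknown option, missing value,
// invalid boolean, missing option or configuration file not found).
std::optional<Options> parseOptions(const std::vector<std::string>& args)
{
    Options options;
    bool hasConfig = false;
    bool hasSecure = false;

    for (std::size_t i = 0; i < args.size(); i += 2) {
        if (i + 1 >= args.size()) {
            return std::nullopt; // option without value
        }
        const std::string& name = args[i];
        const std::string& value = args[i + 1];

        if (name == "-c" || name == "--config") {
            options.configPath = value;
            hasConfig = true;
        } else if (name == "-s" || name == "--secure") {
            if (value != "true" && value != "false") {
                return std::nullopt;
            }
            options.secure = (value == "true");
            hasSecure = true;
        } else {
            return std::nullopt; // unknown option
        }
    }

    if (!hasConfig || !hasSecure) {
        return std::nullopt;
    }

    // The configuration file must exist and be a regular file
    // (symbolic links are followed).
    std::error_code error;
    if (!std::filesystem::is_regular_file(options.configPath, error)) {
        return std::nullopt;
    }
    return options;
}
```

For example, parseOptions({"--config", "../res/example.json", "--secure", "true"}) returns an Options value with secure set to true if the file exists, and std::nullopt if either option is missing or --secure receives anything other than true or false.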

```shell
mkdir -p build && cd build
cmake .. -DCMAKE_INSTALL_PREFIX=./out -DCMAKE_BUILD_TYPE=Debug -DENABLE_UNIT_TESTING=ON
make && make install
```

Note: You can add -DCONFIG_LOADER=fake to CMake options if you want to use the fake version of the config loader. I added it to develop the software without worrying about how the configuration would be provided to the service (JSON, YAML, SQL, XML, etc.).

```shell
ctest -V
```

```shell
make coverage && make install
```

Notes:

- Make sure to run the coverage command after the unit tests (ctest -V command) and before starting the application

- The index.html file can then be found at "out/share/coverage/html/index.html"

```shell
make dependency-graph && make install
```

Note: The resulting graph can then be found at "out/share/dependency-graph"

```shell
make doc && make install
```

Notes:

- The index.html file can then be found at "out/share/doc/html/index.html"

- If the command fails, more details can be found at "doc/doxygen_warnings.txt"

```shell
make clang-format
```

```shell
make clang-tidy
```

```shell
make analysis
```

Notes:

- This command takes time due to Clang's analyzer

- It automatically performs the following actions:

```shell
out/bin/networkservice --config ../res/example.json --secure true
```

or

```shell
valgrind out/bin/networkservice --config ../res/example.json --secure true
```

Note: res/example.json is just a sample configuration file to test the software and show how to write configuration files.

```shell
mkdir -p build && cd build
cmake .. -DCMAKE_INSTALL_PREFIX=./out -DCMAKE_BUILD_TYPE=Release -DENABLE_UNIT_TESTING=OFF
make && make install
```

```shell
out/bin/networkservice --config ../res/example.json --secure true
```

The NDK (Native Development Kit) can be used to build the software. That's not the only option but that's the preferred one. To do so, make sure to respect the following requirements when configuring the project:

- Android API Level >= 24: at least Android 7.0 Nougat is required (due to the use of "ifaddrs.h")

- Android NDK >= r22: CLI11 uses "std::filesystem" which is supported from r22

First, start the Docker container by mounting the Android NDK as a volume:

```shell
docker run --privileged -it -u $(id -u) --rm \
    -v $(pwd):/workdir \
    -v <path_to_ndk_on_host_machine>/android-ndk-r22:/opt/ndk \
    networkservice-image:latest
```

Then, configure the project and compile it:

```shell
mkdir -p build && cd build
cmake .. -DCMAKE_INSTALL_PREFIX=./out -DCMAKE_BUILD_TYPE=Release \
    -DCMAKE_SYSTEM_NAME=Android -DCMAKE_ANDROID_NDK=/opt/ndk \
    -DCMAKE_SYSTEM_VERSION=24 -DCMAKE_ANDROID_ARCH_ABI=arm64-v8a
make && make install
```

The output should start with something like:

```
-- Android: Targeting API '24' with architecture 'arm64', ABI 'arm64-v8a', and processor 'aarch64'
-- Android: Selected unified Clang toolchain
-- The CXX compiler identification is Clang 11.0.5
-- Detecting CXX compiler ABI info
-- Detecting CXX compiler ABI info - done
-- Check for working CXX compiler: /opt/ndk/toolchains/llvm/prebuilt/linux-x86_64/bin/clang++ - skipped
-- Detecting CXX compile features
-- Detecting CXX compile features - done
-- Release build
-- Found Doxygen: /usr/bin/doxygen (found version "1.8.13") found components: doxygen dot
-- Configuring done
-- Generating done
-- Build files have been written to: /workdir/build
```

The above commands generate a non-stripped executable. It can be stripped using:

```shell
/opt/ndk/toolchains/llvm/prebuilt/linux-x86_64/bin/aarch64-linux-android-strip out/bin/networkservice
```

Results:

BEFORE:

```console
$ du -sh build/out/bin/networkservice
6,4M build/out/bin/networkservice
$ file build/out/bin/networkservice
build/out/bin/networkservice: ELF 64-bit LSB shared object, ARM aarch64, version 1 (SYSV), dynamically linked, interpreter /system/bin/linker64, not stripped
```

AFTER:

```console
$ du -sh build/out/bin/networkservice
744K build/out/bin/networkservice
$ file build/out/bin/networkservice
build/out/bin/networkservice: ELF 64-bit LSB shared object, ARM aarch64, version 1 (SYSV), dynamically linked, interpreter /system/bin/linker64, stripped
```

For more details:

- https://developer.android.com/ndk/downloads

- https://developer.android.com/guide/topics/manifest/uses-sdk-element#ApiLevels

- https://developer.android.com/ndk/guides/abis

- https://cmake.org/cmake/help/v3.19/manual/cmake-toolchains.7.html#cross-compiling-for-android-with-the-ndk

First, start the docker container by mounting your toolchain as a volume:

```shell
docker run --privileged -it -u $(id -u) --rm \
    -v $(pwd):/workdir \
    -v <path_to_your_toolchain_on_host_machine>:/opt/my-toolchain \
    networkservice-image:latest
```

Then, configure the project and compile it:

```shell
mkdir -p build && cd build
cmake .. -DCMAKE_INSTALL_PREFIX=./out -DCMAKE_BUILD_TYPE=Release \
    -DCMAKE_SYSTEM_NAME=Linux -DCMAKE_SYSTEM_PROCESSOR=arm \
    -DCMAKE_CXX_COMPILER=/opt/my-toolchain/aarch64-linux-gnu_.../bin/aarch64-linux-gnu-g++
make && make install
```

Notes:

- "aarch64-linux-gnu_.../bin/aarch64-linux-gnu-g++" is only an example. Please provide the path to your own compiler instead.

- As for Android, the above commands generate a non-stripped executable. It can be stripped using e.g. "aarch64-linux-gnu_.../bin/aarch64-linux-gnu-strip"

- For more details, please see https://cmake.org/cmake/help/v3.19/manual/cmake-toolchains.7.html#cross-compiling-for-linux

- CMake v3.18.2: Build the source code

- CLI11 v1.9.0: Parse command line options (Header-only)

- nlohmann/json v3.7.3: Parse configuration file (Header-only)

- GTest/GMock v1.10.0: Unit testing and mocking