Interfaces

The Web Audio Evaluation Tool comes with a number of interface styles, each of which can be customised extensively, either by configuring them differently using the many optional features, or by modifying the JavaScript files.

To set the interface style for the whole test, set the interface attribute of the setup node, for example interface="APE", where "APE" is one of the interface names below.

This section describes the different templates available in the Interfaces folder (./interfaces).

- Blank Use this template to start building your own custom interface (JavaScript and CSS).

-

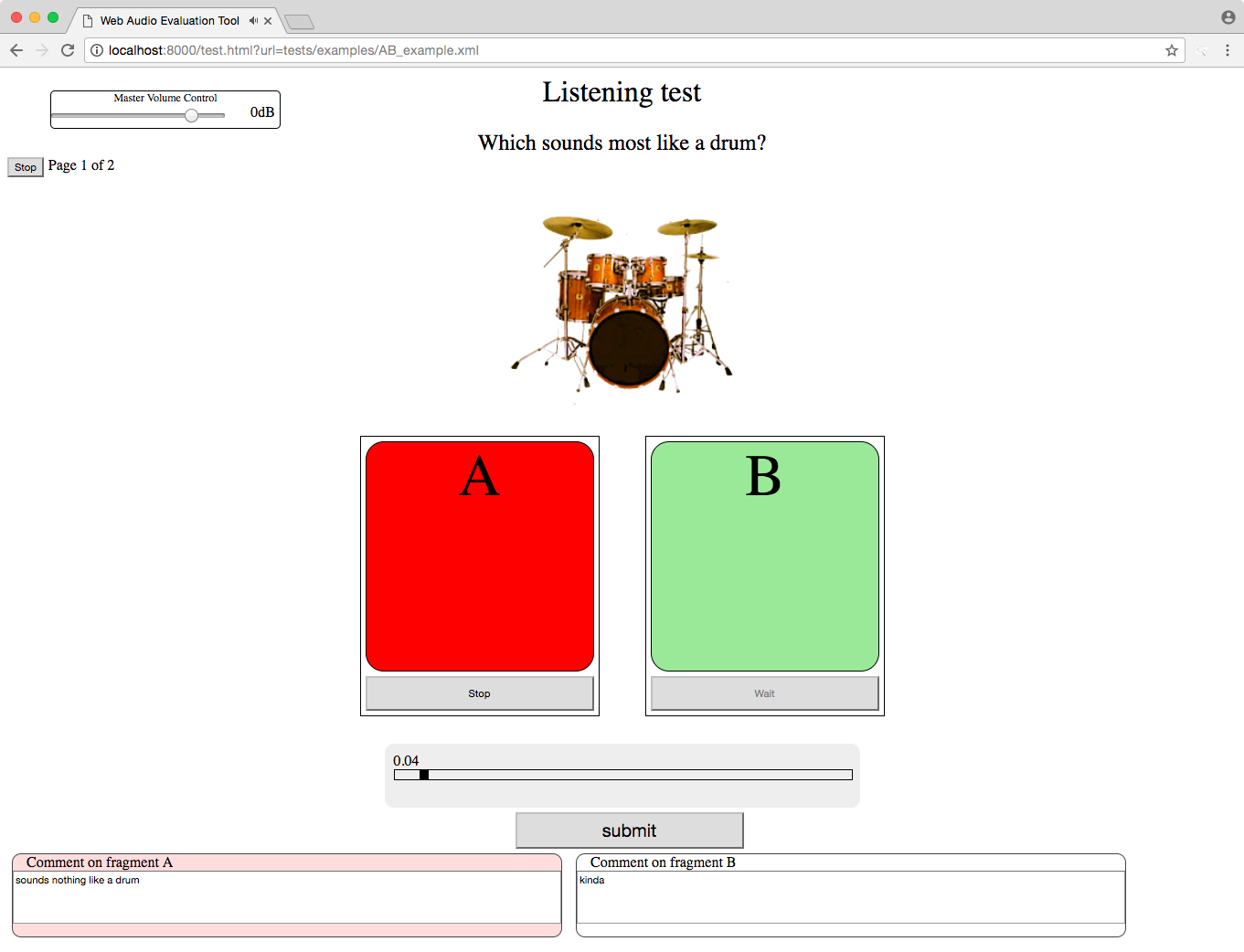

AB Performs a pairwise comparison, but supports n-way comparison (in the example we demonstrate it performing a 7-way comparison).

-

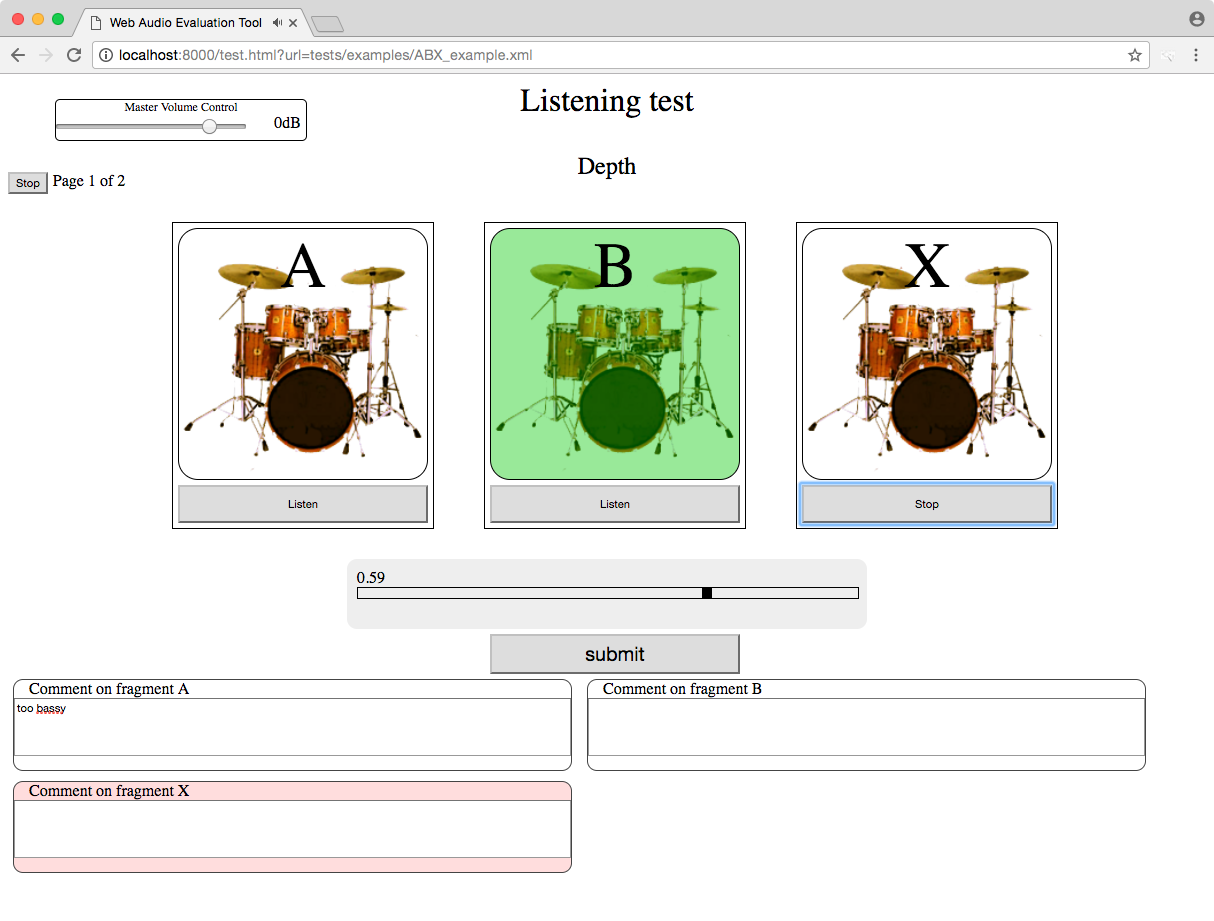

ABX Like AB, but with an unknown sample X which has to be identified as being either A or B.

-

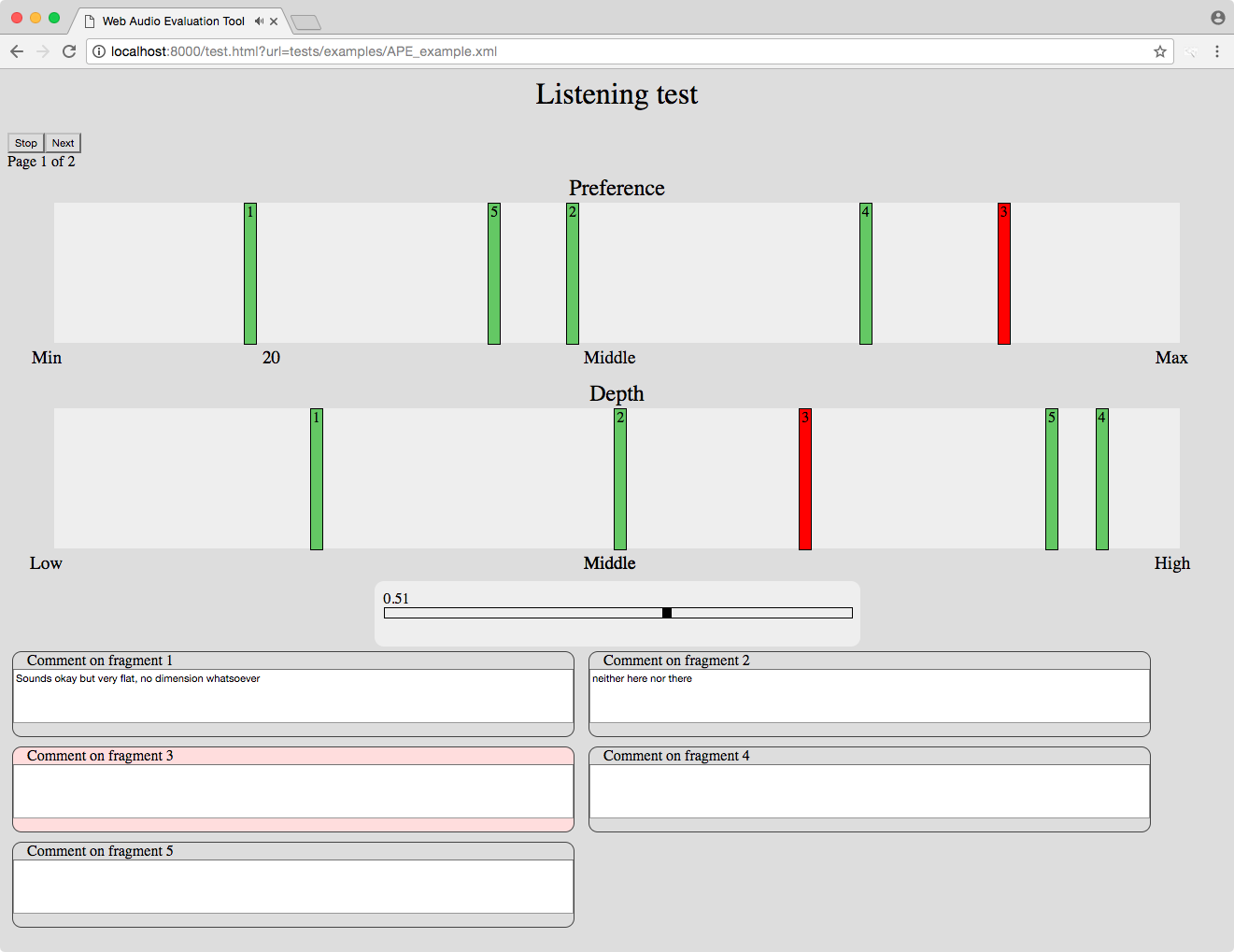

APE The APE interface is based on [1], and consists of one or more axes, each corresponding with an attribute to be rated, on which markers are placed. As such, it is a multiple stimulus interface where (for each dimension or attribute) all elements are on one axis so that they can be maximally compared against each other, as opposed to rated individually or with regards to a single reference. It also contains an optional text box for each element, to allow for clarification by the subject, tagging, and so on.

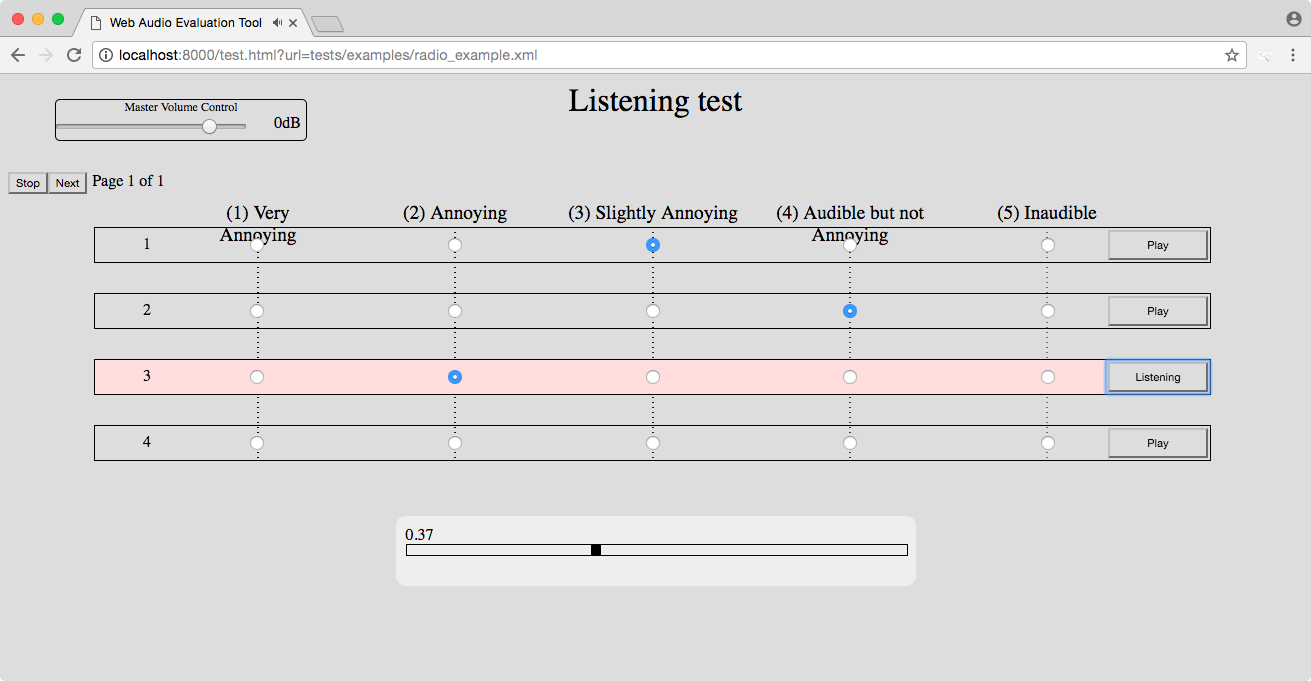

- Discrete Each audio element is given a discrete set of values based on the number of slider options specified. For instance, Likert specifies 5 values and therefore each audio element must be one of those 5 values.

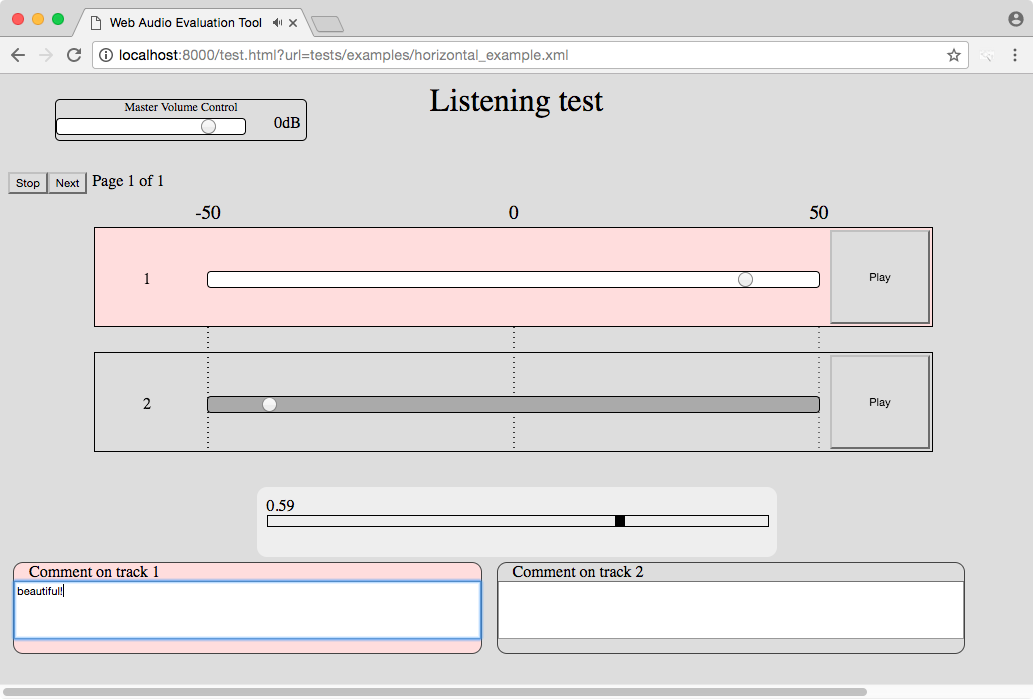

- Horizontal sliders Creates the same interfaces as MUSHRA except the sliders are horizontal, not vertical.

- MUSHRA-style This allows a straightforward implementation of [3], especially common for the rating of audio quality, for instance for the evaluation of audio codecs. This can also operate any vertical slider style test and does not necessarily have to match the MUSHRA specification.

- Ordinal Drag and drop the different stimuli in some order.

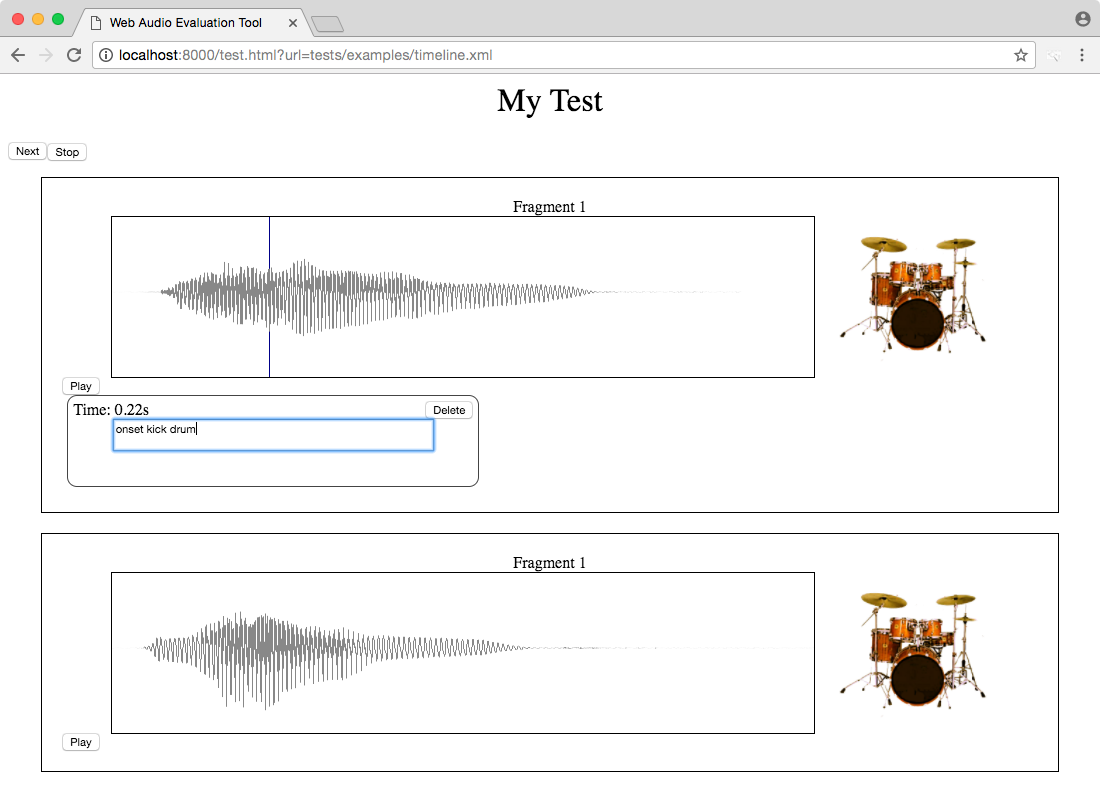

- Timeline Each audio element is presented with a clickable waveform of itself. When the waveform is clicked, a comment box is generated and tied to the clicked position in the audio stream, so that comments refer directly to a position in the audio. No unit or value is returned for this interface, but it can be used for classification tasks, annotation or even precision/recall style tests.

Below are a number of established interface types, taken from [2], all of which are supported using the templates from the previous section.

- AB Test / Pairwise comparison [4, 5]: Two stimuli presented simultaneously, participant selects a preferred stimulus. (AB)

- ABC/HR (ITU-R BS. 1116) (Mean Opinion Score: MOS) [6]: each stimulus has a continuous scale (5-1), labeled as Imperceptible, Perceptible but not annoying, Slightly annoying, Annoying, Very annoying.

- -50 to 50 Bipolar with reference: each stimulus has a continuous scale -50 to 50 with default values as 0 in middle and a reference.

- Absolute Category Rating (ACR) Scale [7]: Like Likert, but the labels are Bad, Poor, Fair, Good, Excellent.

- ABX Test [8]: Two stimuli are presented along with a reference and the participant has to select a preferred stimulus, often the closest to the reference. (ABX)

- APE [1]: Multiple stimuli on one or more axes for inter-sample rating. (APE)

- Comparison Category Rating (CCR) Scale [7]: ACR & DCR but 7 point scale, with reference: Much better, Better, Slightly better, About the same, Slightly worse, Worse, Much worse.

- Degradation Category Rating (DCR) Scale [7]: ABC & Likert but labels are (5) Inaudible, (4) Audible but not annoying, (3) Slightly annoying, (2) Annoying, (1) Very annoying.

- ITU-R 5 Point Continuous Impairment Scale [9]: Same as ABC/HR but with a reference.

- Likert scale [10]: each stimulus has a five point scale with values: Strongly agree, Agree, Neutral, Disagree and Strongly disagree.

- MUSHRA (ITU-R BS. 1534) [3]: Multiple stimuli are presented and rated on a continuous scale, which includes a reference, hidden reference and hidden anchors. (MUSHRA)

- Pairwise Comparison (Better/Worse) [5]: every stimulus is rated as being either better or worse than the reference.

- Rank Scale [12]: stimuli ranked on single horizontal scale, where they are ordered in preference order.

- 9 Point Hedonic Category Rating Scale [13]: each stimulus has a nine point scale with values: Like extremely, Like very much, Like moderately, Like slightly, Neither like nor dislike, Dislike slightly, Dislike moderately, Dislike very much, Dislike extremely. There is also a provided reference.

core.js handles several very important nodes which you should become familiar with. The first is the Audio Engine, initialised and stored in the variable audioEngineContext. This handles the playback of the web audio nodes as well as storing the AudioObjects. The AudioObjects are custom nodes which hold the audio fragments for playback. Each of these nodes also has a link to two interface objects: the comment box (if enabled) and the interface providing the ranking. On creation of an AudioObject the interface link is set to null; it is up to the interface to link these correctly.

The specification document will be decoded and parsed into an object called `specification`. This will hold all of the specification's various nodes. The test pages and any pre/post test objects are processed by a test state, which proceeds through the test when called to by the interface. Any checks (such as playback or movement checks) are to be completed by the interface before instructing the test state to proceed. The test state will call the interface on each page load with the page specification node.

Very little code is actually needed, but you do need to instruct core.js to load your interface file when a specification node calls for it. The function loadProjectSpecCallback handles the decoding of the specification and the setting of any external items (such as metric collection). At the very end of this function there is an if statement; add your interface string to this list to link it to your source file. Examples for both the APE and MUSHRA tests are already included. Note: Any future updates to core.js will most likely overwrite your changes to this file, so remember to check that your interface is still there after any update that touches core.js.

Any further files can be loaded here as well, such as css styling files. jQuery is already included.
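The registration step described above might look roughly like the following. This is a minimal sketch and not the actual contents of core.js: the helper names `interfaceSourceFor` and `loadInterfaceScript`, the file paths and the "myInterface" entry are all assumptions made for illustration.

```javascript
// Hypothetical lookup from the setup node's interface attribute to a source
// file. The paths and the "myInterface" entry are illustrative assumptions.
function interfaceSourceFor(interfaceName) {
    if (interfaceName === "APE") {
        return "interfaces/ape.js";
    } else if (interfaceName === "MUSHRA") {
        return "interfaces/mushra.js";
    } else if (interfaceName === "myInterface") {
        // Your addition to the list: link your interface string to its source.
        return "interfaces/myInterface.js";
    }
    return null; // unknown interface name
}

// Append a <script> element for the chosen interface (browser only).
function loadInterfaceScript(interfaceName) {
    var src = interfaceSourceFor(interfaceName);
    if (src === null) {
        throw new Error("Unknown interface: " + interfaceName);
    }
    var script = document.createElement("script");
    script.setAttribute("type", "text/javascript");
    script.setAttribute("src", src);
    document.getElementsByTagName("head")[0].appendChild(script);
    return script;
}
```

Keeping the name-to-file lookup separate from the DOM code makes it easy to re-apply your one extra branch after an update to core.js.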

Your interface file will get loaded automatically when the interface attribute of the setup node matches the string in the loadProjectSpecCallback function. The following functions must be defined in your interface file. A template file is provided in interfaces/blank.js.

- loadInterface: Called once when the document is parsed. This creates any necessary bindings, such as to the metric collection classes and any check commands. Here you can also start the structure for your test, such as placing any common nodes (the title and empty divs to drop content into later).

- loadTest(audioHolderObject): Called for each page load. The audioHolderObject contains a specification node holding effectively one of the audioHolder nodes.

- resizeWindow(event): Handler for any window resizing. Simply scale your interface accordingly. This function must be present, but can be an empty function.

loadInterface is called by the interface file itself once the document has been parsed, since some browsers may parse files asynchronously. The simplest method is to put `loadInterface()` at the top of your interface file, so that the function is called as soon as the JavaScript engine is ready.

By default the HTML file has an element with id "topLevelBody" where you can build your interface. Make sure you blank the contents of that object. loadInterface is the perfect time to build any fixed items, such as the page title, session titles, interface buttons (Start, Stop, Submit) and any holding and structure elements for later on.

At the end of loadInterface, insert these two function calls: testState.initialise(); and testState.advanceState();. This will actually begin the test sequence, including the pre-test options (if any are included in the specification document).
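Put together, a loadInterface function could be sketched as below. It assumes a browser context with the `testState` global from core.js and the default "topLevelBody" element; the other element ids and the title text are hypothetical.

```javascript
// Sketch of loadInterface() for a custom interface file (browser context).
// Assumes the testState global provided by core.js and the default HTML page.
// All element ids other than "topLevelBody" are illustrative assumptions.
function loadInterface() {
    var body = document.getElementById("topLevelBody");
    body.innerHTML = ""; // blank any existing contents

    // Fixed item: page title
    var title = document.createElement("div");
    title.id = "pageTitle";
    title.textContent = "Listening test";
    body.appendChild(title);

    // Fixed item: submit button, wired up later by the interface
    var submit = document.createElement("button");
    submit.id = "submit-button";
    submit.textContent = "Submit";
    body.appendChild(submit);

    // Holding element that loadTest() can fill on every page
    var holder = document.createElement("div");
    holder.id = "interface-holder";
    body.appendChild(holder);

    // Begin the test sequence, including any pre-test options
    testState.initialise();
    testState.advanceState();
}
```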

loadTest is called on each new test page. It is this function's job to clear out the previous test and set up the new page. Use the function audioEngineContext.newTestPage(); to instruct the audio engine to prepare for a new page. audioEngineContext.audioObjects = []; will delete any audioObjects, interfaceContext.deleteCommentBoxes(); will delete any comment boxes and interfaceContext.deleteCommentQuestions(); will delete any extra comment boxes specified by commentQuestion nodes.

loadTest will also need to instruct the audio engine to build each fragment. Passing each audio element from the audioHolderObject to audioEngineContext.newTrack(element) builds the track and returns a reference to the constructed audioObject. Decoding of the audio will happen asynchronously.
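The clean-up and track-building steps can be sketched as follows. The calls on `audioEngineContext` and `interfaceContext` are the ones named in the text; the `audioElements` property name and the returned array are assumptions made so the sketch can be inspected.

```javascript
// Sketch of loadTest() (browser context). Assumes the core.js globals
// audioEngineContext and interfaceContext. The audioElements property name
// on audioHolderObject is an assumption for illustration.
function loadTest(audioHolderObject) {
    // Clear out the previous page
    audioEngineContext.newTestPage();
    audioEngineContext.audioObjects = [];
    interfaceContext.deleteCommentBoxes();
    interfaceContext.deleteCommentQuestions();

    // Build one track per audio element; decoding happens asynchronously
    var built = [];
    audioHolderObject.audioElements.forEach(function (element) {
        var audioObject = audioEngineContext.newTrack(element);
        built.push(audioObject);
    });

    // Returned only for convenience in this sketch
    return built;
}
```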

You also need to link audioObject.interfaceDOM with your interface object for that audioObject. The interfaceDOM object has a few required methods. Firstly, it must start disabled and become enabled once the audioObject has decoded the audio (function call: enable()). Next, it must have a function exportXMLDOM(), which returns the XML node for your interface; the default is to return a value node with textContent equal to the normalised value. You can return something else, but our scripts may not work if you do, as it will breach our results specification. Finally, it must also have a method getValue(), which returns the normalised value.
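As an illustration of these requirements, here is a minimal sketch of an interfaceDOM object built around a range slider. The constructor name `SliderInterfaceDOM` and the 0 to 100 slider range are assumptions; only enable(), exportXMLDOM() and getValue() come from the text. The real tool may create the value node in its results document rather than in the page document.

```javascript
// Sketch of an interfaceDOM object for one audioObject (browser context).
// SliderInterfaceDOM and the 0..100 range are hypothetical choices.
function SliderInterfaceDOM(audioObject) {
    this.parent = audioObject;
    this.slider = document.createElement("input");
    this.slider.setAttribute("type", "range");
    this.slider.min = 0;
    this.slider.max = 100;
    this.slider.value = 50;
    this.slider.disabled = true; // start disabled until the audio has decoded

    // Called once the audioObject has decoded its audio
    this.enable = function () {
        this.slider.disabled = false;
    };

    // Normalised value in the range 0..1
    this.getValue = function () {
        return Number(this.slider.value) / 100;
    };

    // Default export: a value node holding the normalised value
    this.exportXMLDOM = function () {
        var node = document.createElement("value");
        node.textContent = String(this.getValue());
        return node;
    };
}
```

Inside loadTest, the link would then be made with something like `audioObject.interfaceDOM = new SliderInterfaceDOM(audioObject);` right after the call to newTrack.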

It is also the job of the interfaceDOM to call any metric collection functions necessary, although some calls may be better placed outside it. For example, the APE interface uses drag and drop, so the best approach there was to call the metric functions from the dragEnd function, which is called when the interface object is dropped. Metrics based upon listening are handled by the audioObject. The interfaceDOM object must manage any movement metrics. For a list of valid metrics and their behaviours, look at the project specification document included in the repository/docs location. The same goes for any checks required when pressing the submit button, or any other method of advancing the test state.

[1] Brecht De Man and Joshua D. Reiss, “APE: Audio perceptual evaluation toolbox for MATLAB,” 136th Convention of the Audio Engineering Society, April 2014.

[2] Nicholas Jillings, Brecht De Man, David Moffat, Joshua D. Reiss and Ryan Stables, “Web Audio Evaluation Tool: A framework for subjective assessment of audio,” 2nd Web Audio Conference, April 2016.

[3] “Recommendation ITU-R BS.1534-1: Method for the subjective assessment of intermediate quality level of coding systems,” International Telecommunication Union, 2003.

[4] S. P. Lipshitz and J. Vanderkooy, “The Great Debate: Subjective evaluation,” Journal of the Audio Engineering Society, vol. 29, no. 7/8, pp. 482–491, 1981.

[5] H. A. David, The method of paired comparisons, vol. 12. DTIC Document, 1963.

[6] “Recommendation ITU-R BS. 1116-1: Methods for the subjective assessment of small impairments in audio systems including multichannel sound systems,” International Telecommunication Union, 1997.

[7] “Recommendation ITU-T P. 800: Methods for subjective determination of transmission quality,” International Telecommunication Union, 1996.

[8] D. Clark, “High-resolution subjective testing using a double-blind comparator,” Journal of the Audio Engineering Society, vol. 30, no. 5, pp. 330–338, 1982.

[9] “Recommendation ITU-R BS. 562-3: Subjective assessment of sound quality,” International Telecommunication Union, 1997.

[10] R. Likert, “A technique for the measurement of attitudes,” Archives of Psychology, 1932.

[11] “Recommendation ITU-R BS.1534-1: Method for the subjective assessment of intermediate quality levels of coding systems,” International Telecommunication Union, 2003.

[12] G. C. Pascoe and C. C. Attkisson, “The evaluation ranking scale: A new methodology for assessing satisfaction,” Evaluation and program planning, vol. 6, no. 3, pp. 335–347, 1983.

[13] D. R. Peryam and N. F. Girardot, “Advanced taste-test method,” Food Engineering, vol. 24, no. 7, pp. 58–61, 1952.

Anything unclear or inaccurate? Please let us know at mail@brechtdeman.com