-

Notifications

You must be signed in to change notification settings - Fork 6

Add option to export GraphQL results to CSV #130

Conversation

All other endpoints return a wrapper object, not a raw list. Wrapping the response in an object is more future proof (client code doesn't have to change if we want to add an extra field) and makes it possible to treat this endpoint like all others in decorators.

To make it easy for clients to consume the CSV, the CSV files are written to a container on blob and made accessible via an access-token embedded in the URL.

This is a change required for the following pull request: CatalystCode/project-fortis-services#130

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

LGTM - but what about the possibility of duplicate CSVs?

| /** | ||

| * @param {{limit: Int!, fromDate: String!, periodType: String!, toDate: String!, externalsourceid: String!, pipelinekeys: [String]!, bbox: [Float]}} args | ||

| * @returns {Promise.<{runTime: string, edges: Array<{name: string, mentions: number, placeid: string, avgsentiment: float}>}>} | ||

| */ |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

It would probably be good to update the comment so everyone can be more clear on the types that this function accepts and returns.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

There's too much flux in the interfaces so it's not worth it currently.

src/resolvers-cassandra/shared.js

Outdated

| @@ -96,6 +102,33 @@ function parseLimit(limit) { | |||

| return limit > 0 ? limit : DEFAULT_LIMIT; | |||

| } | |||

|

|

|||

| function asCsvExporter(promiseFunc, exportPropertyName, container, expiryMinutes) { | |||

| container = container || 'csv-export'; | |||

| expiryMinutes = expiryMinutes || 2 * 60; | |||

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Could make 2 * 60 a constant.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Done in a8cc80a.

|

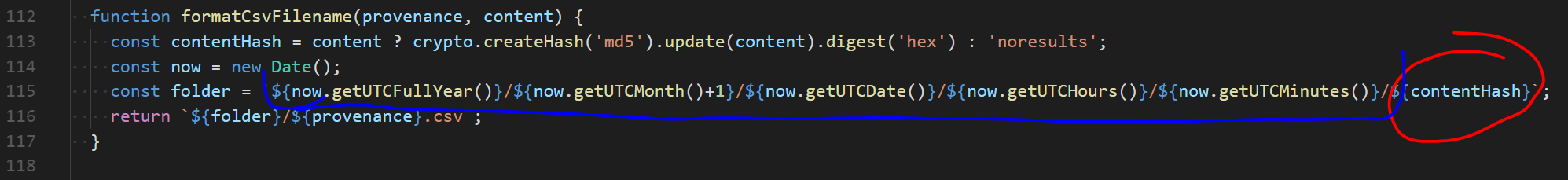

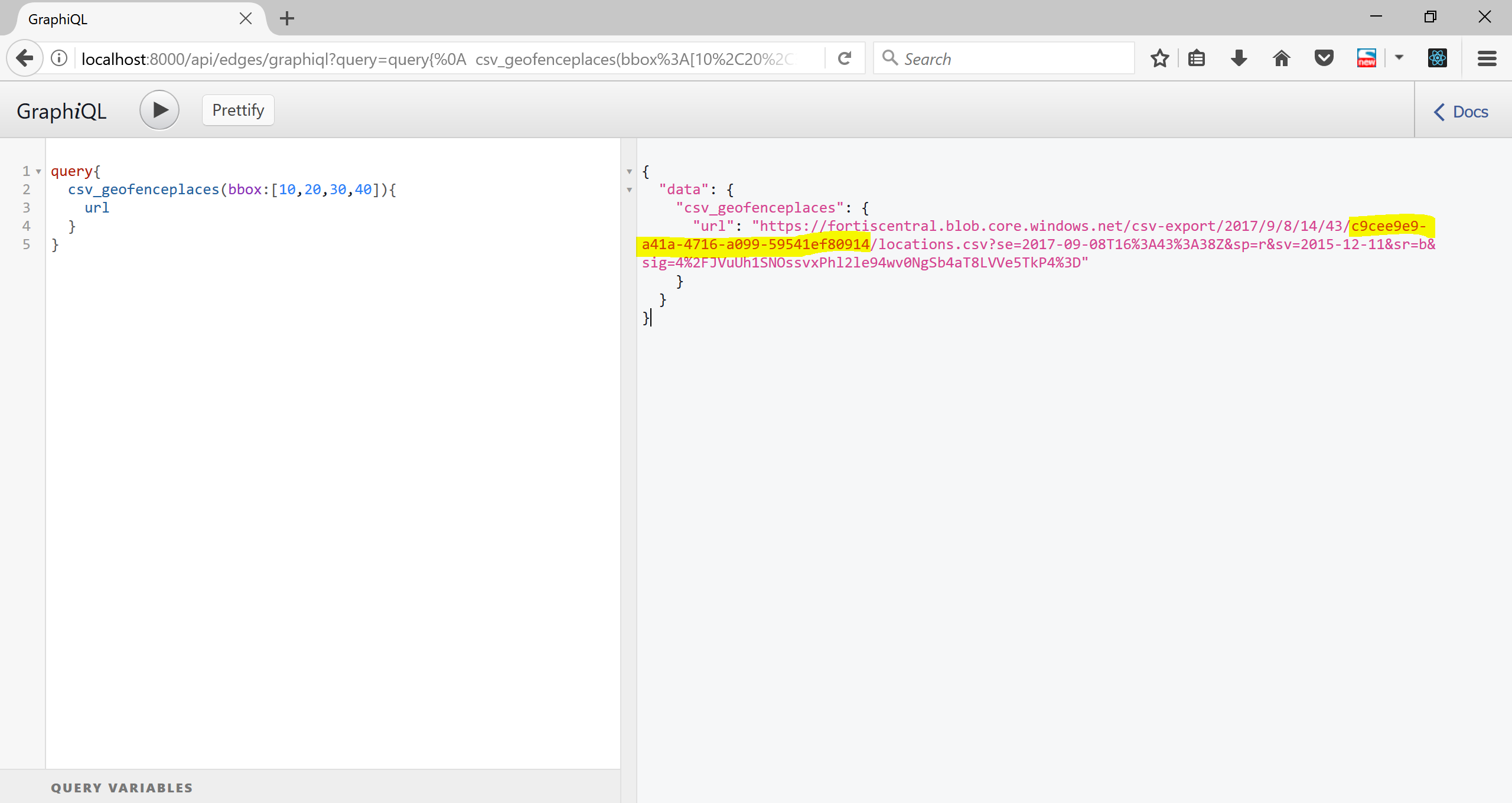

@Smarker Could you clarify what you mean by duplicate CSVs? Every file is namespaced by its content hash (red in screenshot below) and creation time (blue in screenshot below) so if two people request the exact same report at the same time, they'll simply get URLs to the same file with slightly different SAS tokens which will both be valid. The reason why the content hash of the file is in the folder structure and not the filename is to be able to give the user a nice-looking filename when downloading the report, like In any case, given that this confused a developer, it's worth changing. In 6db29d4 we're now including a UUID in the folder name (see yellow highlight in screenshot below) to make it explicit that the download location will be unique. |

|

What I meant about duplicates was not in the filename, but in the content of the csv. Wouldn't there be a chance that the contents could be the same if a user requests a csv twice in a short time? But after thinking about this further any timestamps will be updated, so I don't think there would be any duplicates. I think this change is fine. |

This is a change required for the following pull request: CatalystCode/project-fortis-services#130

What's this?

To deploy Fortis in Latin America, UN OCHA would like the ability to export data from Fortis so that it can be aggregated with other systems. CSV is a common data exchange format, so Fortis should be able to export all query results as CSV.

This pull requests adds additional endpoints to the GraphQL Edges schema that vend the same data as the normal endpoints but formatted as CSV. When the new endpoint is queried, the GraphQL response gets converted to CSV, stored on Blob and a read-only access link to the file is returned to the client. This link can then be used to embed the file or download it in the fortis-interfaces project.

Implementation notes

GraphQL vs REST

It would also have been possible to implement this outside of GraphQL so that we can return straight away a file response to the client. However, this would make integration in the frontend harder as we'd now have to talk to the server in two ways: GraphQL and REST. It's desirable to keep everything in one paradigm so that we can leverage smart clients like Apollo.

Extensibility

The CSV-exporter is implemented as a simple decorator. As such, we can easily add the export functionality to more endpoints than just the Edges queries for which it is implemented now.

Long-term operations

Azure blob storage currently does not support automatic deletion of files after a certain period of time. As such, the CSV files are stored in a time-slice folder structure which will make it easy for us to add automation in the future to delete old files if required.

API surface changes

This pull request introduces a non-backwards-compatible change to the geofenceplaces query in the Edges schema: we're now wrapping the returned place array in an object, i.e., instead of returning

[placeX]we now return{places: [placeX]}. There is currently no usage of geofenceplaces in the fortis-interfaces project (search link) so no client code needs to be updated.Environment changes

This pull request introduces additional environment variables so a change in fortis-pipeline is required. The companion change is submitted as CatalystCode/project-fortis-pipeline#141.

Sample usage

GraphQL request and response

Accessing the signed URL

Viewing the exported results