
Very Slow Workflow Tasks Page when logged in EPerson is member of many Groups #9053

Comments

Workflow task page (slower): after calling the REST API above with the entry log_statement = 'all' in postgresql.conf, 8 MB of log are generated (attached).

@aroldorique : Thanks for creating this ticket and sharing the logs! I've pulled this over to our 7.6.x maintenance board for more analysis. Could you describe your Group/EPerson structure? You mentioned there being only 14 reviewers / 10 editors, but that they are shared among many Collections. I'm assuming that each Collection must have at least 2-3 groups? Are you assigning the EPersons directly into each Group? Are you using nested groups (i.e. groups within groups)? Does each group have just a small number of members, or are there any that are very large? Based on the logs you shared, I'm trying to understand your Group structure (and how many groups exist in total), as it appears that PostgreSQL is first running a very large number of queries against the "epersongroup" and "group2groupcache" tables. After that, I see it is also building some very large queries, possibly per object (against the

Hi Tim. You can learn about the communities and collections of our repository at this link: https://biblioteca.mpf.mp.br/repositorio/community-list

We are currently submitting new items in DSpace 6 only, and at the end of the day, those new items make their way into the new DSpace 7.5. We have 270 collections spread across communities and subcommunities. We created 3 groups to manage these collections. Repository Administrators Group (10 people). These groups have, respectively, the roles of Administrators, Submitters and Editors of each collection, as shown in the image below of one of the collections: There are no groups within the 3 groups mentioned above.

All collections have only 3 groups (which are the same for all of them): administrators, submitters and editors.

I guess that this is related to that line, or a similar one from another Solr query plugin. The problem is that all the groups the current EPerson is a member of are retrieved from the database with all their eager relations. My suggestion is to introduce a method on the DAO that, instead of returning the list of Group entities, returns just the list of plain UUIDs. Even with this solution, it could still be problematic, because we are going to create a very massive Solr filter query with potentially thousands of UUIDs. We should check what Solr's limit is for handling something like that.
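Here is a minimal sketch of the UUID-only idea in plain Java, with no DSpace or SolrJ dependencies. It assumes the IDs have already been fetched as bare UUIDs, for example by a hypothetical DAO method that selects only group IDs. It also assumes that read permissions are indexed in a field called `read`, with `e`/`g` prefixes for EPerson and Group IDs. Check both assumptions against your own index.

The filter is built two ways:
- An OR-joined boolean clause. Each ID is a separate clause, so it counts against Solr's `maxBooleanClauses` limit (1024 by default).
- Solr's `{!terms}` query parser, which takes one comma-separated list.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.UUID;

final class ReadFilterSketch {

    // Assumed term format: "e" + EPerson UUID, "g" + Group UUID.
    static List<String> readTerms(UUID epersonId, List<UUID> groupIds) {
        List<String> terms = new ArrayList<>(groupIds.size() + 1);
        terms.add("e" + epersonId);
        for (UUID groupId : groupIds) {
            terms.add("g" + groupId);
        }
        return terms;
    }

    // Boolean form: one clause per term, so thousands of groups mean thousands of clauses.
    static String booleanFilter(UUID epersonId, List<UUID> groupIds) {
        return "read:(" + String.join(" OR ", readTerms(epersonId, groupIds)) + ")";
    }

    // Terms-parser form: a single comma-separated list of terms for the "read" field.
    static String termsFilter(UUID epersonId, List<UUID> groupIds) {
        return "{!terms f=read}" + String.join(",", readTerms(epersonId, groupIds));
    }

    public static void main(String[] args) {
        UUID eperson = UUID.fromString("00000000-0000-0000-0000-000000000001");
        List<UUID> groups = List.of(UUID.fromString("00000000-0000-0000-0000-00000000000a"));
        // read:(e00000000-0000-0000-0000-000000000001 OR g00000000-0000-0000-0000-00000000000a)
        System.out.println(booleanFilter(eperson, groups));
        // {!terms f=read}e00000000-0000-0000-0000-000000000001,g00000000-0000-0000-0000-00000000000a
        System.out.println(termsFilter(eperson, groups));
    }
}
```

As far as we know, the terms form keeps the query compact and is not subject to the per-clause limit. The UUID list still has to be produced, though, and that step is where returning IDs instead of full Group entities would help.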

Based on discussion in today's Developer meeting, we believe the core issue here is that the logged-in EPerson is a member of potentially hundreds or thousands of Groups. In this scenario, the EPerson is a member of just one major group (e.g. Administrator), and that major Group is used as a sub-group of 100s of other groups. So, via Group inheritance, the EPerson is considered a member of those 100s of Groups. As @abollini notes above, the core problem seems to be that while determining the logged-in EPerson's permissions, every group they are a member of is appended into a very large Solr query. Not only that, but every group they are a member of is first loaded into memory from the database just to get the Group's UUID. Both of these are likely causing the extreme slowness. I'll update the ticket title to clarify where the problem seems to reside. We are still looking for a volunteer to investigate possible solutions.
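The sketch below shows why one membership turns into hundreds. It resolves Group inheritance by walking up from the EPerson's direct groups to every group that contains them, directly or transitively. The in-memory parent-to-subgroups map and the group names are hypothetical. As we understand it, DSpace precomputes a similar closure in the group2groupcache table mentioned earlier. This only illustrates the fan-out, not how DSpace evaluates it.

```java
import java.util.ArrayDeque;
import java.util.ArrayList;
import java.util.Deque;
import java.util.HashMap;
import java.util.HashSet;
import java.util.List;
import java.util.Map;
import java.util.Set;

final class GroupInheritanceSketch {

    /**
     * Returns every group the EPerson is effectively a member of.
     *
     * @param directGroups groups the EPerson was added to directly
     * @param subgroupsOf  parent group name -> names of groups nested inside it
     */
    static Set<String> effectiveGroups(Set<String> directGroups, Map<String, List<String>> subgroupsOf) {
        // Invert parent -> child edges so we can walk upwards from a child group.
        Map<String, List<String>> parentsOf = new HashMap<>();
        subgroupsOf.forEach((parent, children) -> {
            for (String child : children) {
                parentsOf.computeIfAbsent(child, k -> new ArrayList<>()).add(parent);
            }
        });

        // Breadth-first walk; Set.add returns false for groups already seen, which also stops cycles.
        Set<String> result = new HashSet<>(directGroups);
        Deque<String> queue = new ArrayDeque<>(directGroups);
        while (!queue.isEmpty()) {
            String group = queue.poll();
            for (String parent : parentsOf.getOrDefault(group, List.of())) {
                if (result.add(parent)) {
                    queue.add(parent);
                }
            }
        }
        return result;
    }

    public static void main(String[] args) {
        // Hypothetical setup: one shared "Editors" group nested into a per-Collection
        // role group for each of 270 Collections.
        Map<String, List<String>> subgroupsOf = new HashMap<>();
        for (int i = 1; i <= 270; i++) {
            subgroupsOf.put("collection-" + i + "-editors", List.of("Editors"));
        }
        Set<String> groups = effectiveGroups(Set.of("Editors"), subgroupsOf);
        System.out.println(groups.size()); // 271: the direct group plus 270 inherited ones
    }
}
```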

Tim, currently, as our group of 10 editors can edit all collections in the repository, we have to add them to all 270 collections. Perhaps it would be better if we could create editing, submission and administration groups per community, or even for the entire repository; this would significantly reduce the number of groups in cases similar to mine. Database queries about which items an editor can change would also be much faster. I found some indexes that are not being used in PostgreSQL. I'll send the list later, but I don't think that helps much with our current problem.

@aroldorique : Please create a new ticket for any additional issues you've found. I would not recommend adding "fixing null indexes" as a requirement to solve this ticket, as it may slow things down. We should have a separate ticket to analyze that; otherwise developers will get confused. (I also admit I don't understand the file you attached, so it needs more explanation in a separate ticket.)

@tdonohue The first part of our analysis has been completed. We've identified that the slowness is not related to Solr, but rather to the REST conversion.
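A split like this can be measured by timing each phase of a request separately and comparing their shares. The minimal sketch below does that. The `PhaseTimer` class and the phase names are hypothetical and only illustrate the idea; they are not the logging used in this investigation.

```java
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.function.Supplier;

final class PhaseTimer {

    private final Map<String, Long> nanosByPhase = new LinkedHashMap<>();

    // Runs the work, adds its elapsed wall-clock time to the named phase, and returns its result.
    <T> T time(String phase, Supplier<T> work) {
        long start = System.nanoTime();
        try {
            return work.get();
        } finally {
            record(phase, System.nanoTime() - start);
        }
    }

    // Adds an already-measured duration (in nanoseconds) to a phase.
    void record(String phase, long nanos) {
        nanosByPhase.merge(phase, nanos, Long::sum);
    }

    // Fraction (0.0 to 1.0) of all recorded time that was spent in the given phase.
    double share(String phase) {
        long total = 0;
        for (long n : nanosByPhase.values()) {
            total += n;
        }
        return total == 0 ? 0.0 : (double) nanosByPhase.getOrDefault(phase, 0L) / total;
    }

    public static void main(String[] args) {
        PhaseTimer timer = new PhaseTimer();
        // Placeholder work standing in for the real Solr query and REST conversion steps.
        Integer hits = timer.time("solr-query", () -> 10);
        String json = timer.time("rest-conversion", () -> "x".repeat(hits * 1000));
        System.out.println("converted " + json.length() + " chars");
        System.out.printf("rest-conversion share: %.0f%%%n", 100 * timer.share("rest-conversion"));
    }
}
```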

Thank you for the update @benbosman! Just so you are aware, we are waiting on this ticket before we release 7.6.1, as this seems to be a very high priority fix. So, please keep me posted on how the investigation goes. I'm still hoping we can get 7.6.1 released sometime in early to mid-November, but that is highly dependent on what you discover here. Therefore, I'd appreciate it if you could prioritize this highly within your team, if possible. Thanks again!

@aroldorique is the slowness in your case happening for people who are also admin?

Hi.

Yes, all participants in the editors group are also part of the collections administrators group.

We only have 3 groups:

- Repository administrators
- National editors
- National submitters

The Administrators, Editors, and Submitters groups are the same for all collections.

People are also complaining about the slowness in finalizing the submission of items. The national Submitters group has 10 participants in common with the national Editors group, but has 5 more participants.

On Mon, Oct 23, 2023 at 13:17, benbosman wrote:

@aroldorique is the slowness in your case happening for people who are also admin?

Our further testing has revealed that in the REST conversion, roughly 80% of the load is coming from isAdmin checks.
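One common way to cut repeated permission checks is to memoize them for the duration of a single request. The sketch below is a hypothetical illustration of that idea, using a stand-in `BiPredicate` for the expensive check. It is not DSpace's actual implementation. A real version would also have to be discarded at the end of every request, and on any group or policy change, to stay safe. That is exactly the reliability concern with changing the isAdmin checks.

```java
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.Objects;
import java.util.UUID;
import java.util.concurrent.atomic.AtomicInteger;
import java.util.function.BiPredicate;

final class RequestScopedAdminCache {

    private final BiPredicate<UUID, UUID> expensiveCheck;
    // Key is (EPerson ID, object ID); List.of(...) gives value-based equals/hashCode.
    private final Map<List<UUID>, Boolean> results = new HashMap<>();

    RequestScopedAdminCache(BiPredicate<UUID, UUID> expensiveCheck) {
        this.expensiveCheck = Objects.requireNonNull(expensiveCheck);
    }

    // Runs the expensive check at most once per (EPerson, object) pair for this cache's lifetime.
    boolean isAdmin(UUID epersonId, UUID objectId) {
        return results.computeIfAbsent(List.of(epersonId, objectId),
                key -> expensiveCheck.test(key.get(0), key.get(1)));
    }

    public static void main(String[] args) {
        AtomicInteger calls = new AtomicInteger();
        // Stand-in for a check that would walk the EPerson's groups and the object's policies.
        RequestScopedAdminCache cache = new RequestScopedAdminCache((eperson, object) -> {
            calls.incrementAndGet();
            return true;
        });
        UUID eperson = UUID.randomUUID();
        UUID collection = UUID.randomUUID();
        for (int i = 0; i < 10; i++) {
            cache.isAdmin(eperson, collection);
        }
        System.out.println(calls.get()); // 1
    }
}
```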


@tdonohue This is, however, not code we can contribute, as it breaks tests and may create security issues. Our testing still points at E.g. the It would make much more sense if this data were only embedded when explicitly requested, instead of always being embedded. All timings are of course dependent on infrastructure, database, …. This is why we picked a single test setup for all tests, which is not very fast (making it easier to compare data).

@benbosman : It's unclear to me what you are recommending as next steps (if you have any), but the analysis you've posted is definitely useful feedback. I agree it'd be nice to improve when data is embedded to ensure we are not embedding/loading more data than is necessary. Along these lines, did you also review the suggestions / guess that @abollini had above regarding the behavior of the The reason I ask is that I'm wondering if there are any "quick fixes" we could make to 7.6.1 to at least improve the current behavior. I definitely agree that there may be a need to more closely analyze when data is embedded. However, I worry that could be a detailed / complex effort that may not easily fit into 7.6.1. In the simple scenario above of the If there are other simple/quick fixes you can see, I'd welcome them. I'm just concerned about holding up 7.6.1 for too long, and it'd be good to find some quick improvements while we dig deeper on larger speed improvements.

Hi @tdonohue

Because of this ratio, I didn't spend much time analyzing the solr part. Assuming that it's similar, and the solr part is rather limited relative to the REST conversion, I would want some feedback on what to invest in next:

Given the timeline for 7.6.1, what would you want us to work on next?

I'm going to try to run the tests this week, but I don't know if I'll be able to. Did you change both backend and frontend files?

On Wed, Oct 25, 2023 at 08:22, benbosman wrote:

Hi @tdonohue

From our tests, the part which:

- Retrieves all data to build the Solr query (the SolrServiceResourceRestrictionPlugin part)
- Performs the Solr query
- Creates the Discovery Results

was consuming less than 10% of the time taken by the part which converted the Discovery Results to REST objects.

Because of this ratio, I didn't spend much time analyzing the Solr part. Of course, this may also depend on the actual database (I did work with a database which had characteristics similar to those described here, but we don't have this exact database).

It may be useful for @aroldorique to merge https://github.com/atmire/DSpace/tree/test-github-9053 into their code, perform the

server/api/discover/search/objects?sort=score,DESC&page=0&size=10&configuration=workflow&embed=thumbnail&embed=item%2Fthumbnail

call, and share the output, to confirm that the load happens in the same place.

Assuming that it's similar, and the Solr part is rather limited relative to the REST conversion, I would want some feedback on what to invest in next:

- We can check whether we can improve the isAdmin checks in a way that's reliable, safe and doesn't break tests. The very hacky change we initially checked did show a substantial improvement: not enough to solve the slowness completely, but enough to improve the responsiveness in a way you can clearly notice.
- We can improve data embedding in the workflow. I think this should happen independently of this performance ticket, to make it compliant with the standards for data embedding in the rest of DSpace. But this will be a larger code change, with an impact on the REST response, and it also requires Angular to be aware of which embeds are relevant for which page. Another very hacky change (which simply stopped populating this data in REST) showed that this also didn't solve the slowness completely, but it clearly improved the performance as well.
- The combination of the above halved the response time in our tests (with our test database). And even after those two changes, the Solr part was only roughly 15% of the processing time.

Given the timeline for 7.6.1, what would you want us to work on next?
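The figures above are consistent with each other. Here is a worked check under two assumptions: the Solr-side time $S$ is unchanged by the two hacky changes, which only shrink the REST conversion time $C$; and $S + C$ is the whole request:

$$
\frac{S}{C} < 0.10, \qquad S + C_{\text{after}} = \tfrac{1}{2}\,(S + C)
\;\Longrightarrow\;
\frac{S}{S + C_{\text{after}}} = \frac{2S}{S + C}
$$

With $S = 0.08\,C$ this gives $0.16 / 1.08 \approx 0.148$, or roughly 15%. Solving $2S/(S + C) = 0.15$ gives $S \approx 0.081\,C$, just inside the "less than 10%" bound. So a Solr share of about 15% after the changes is what halving the total alone would predict; it does not suggest the Solr part got slower.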


@aroldorique : From what @benbosman notes above, he only changed the backend files in this branch: https://github.com/atmire/DSpace/tree/test-github-9053

He's asking you if you can try to install that backend branch and run a specific REST API query to see if the performance is improved.

@benbosman : With regards to next steps, I think we need to find a "quick fix" which can solve at least part of the performance issues, while also working on a longer-term fix. From what you describe, it's difficult for me to know which solution is that "quick fix", but it sounds like possibly the The reason I'm hoping for a "quick fix" is simply that we need to get 7.6.1 out the door soon and shift concentration to 8.0. Ideally, 7.6.1 is released by mid-November (at the latest), which gives you only about one more week (or less) to work on a "quick" solution before we need to review/test it quickly. It does sound like this work will need a "follow-up" ticket with more fixes. Those can be assigned to your team as well, but perhaps the follow-up improvements will be scheduled for a 7.6.2 release (whenever that comes out) and/or aligned with 8.0. So, in summary, I'd appreciate it if we can break this down into two "tasks":

Hopefully that helps, but please let me know if you need more advice.

Thanks for the feedback @tdonohue. The feedback from @aroldorique would still be useful (it's just some logging that gets added in REST). I have some holidays coming up, but I will work with our developers to try to build a reliable and robust solution for the

@aroldorique : A possible performance improvement is now available in this small backend PR #9161. It would only require installing on the backend; no changes are needed on the frontend. If you can find time to test this to see if it improves the performance on your end, that'd be very helpful in getting this code added to DSpace. Keep in mind, it may not fix everything, but you should see an improvement in the performance of the MyDSpace page (in the UI) and the "Workflow tasks" REST API call that you mentioned in the description (

After testing #9161 today and looking at the responses, I see exactly what @benbosman correctly noted in his comment above. The response from the As you can clearly see...

Much of the embedded information is unnecessary and unused in the User Interface. Where needed, it should instead be requested via

Sorry, guys.

My team is very short-staffed, and I wasn't able to set up a test scenario exactly like the one in production last week. This week I'm on vacation, but I've already spoken to my boss, and next week these tests will be a priority.

On Wed, Nov 1, 2023 at 18:14, Tim Donohue wrote:

After testing #9161 today and looking at the responses, I see exactly what @benbosman correctly noted in his comment above. The response from the /server/api/discover/search/objects?configuration=workflow search is *embedding more information than is necessary*. This is most easily visible by looking at the JSON response from that endpoint, whose structure looks like this (with all details removed):

```
// Example ClaimedTask
"indexableObject" : {
  "type" : "claimedtask",
  "_embedded": {
    "owner" : { ... eperson metadata ... },
    "action": { ... workflow action metadata ... },
    "workflowitem": {
      "_embedded": {
        "submitter": { ... eperson metadata .. },
        "item" : { ...item metadata... },
        "submissionDefinition": { ...submission details...},
        "collection": { ... collection metadata ... },
      },
    },
  },
},

// Example PoolTask
"indexableObject" : {
  "type" : "pooltask",
  "_embedded": {
    "eperson" : { ... eperson metadata ... },
    "group": { ... group metadata ... },
    "workflowitem": {
      "_embedded": {
        "submitter": { ... eperson metadata .. },
        "item" : { ...item metadata... },
        "submissionDefinition": { ...submission details...},
        "collection": { ... collection metadata ... },
      },
    },
  },
},
```

As you can clearly see...

- Every PoolTask embeds:
  - EPerson object who can claim it (usually null, as a PoolTask is usually matched with a Group)
  - Group object who can claim it
  - WorkflowItem object, which then also embeds:
    - Submitter who submitted the Item
    - Item object
    - Submission form details
    - Collection it was submitted to
- Every ClaimedTask embeds:
  - EPerson object who has claimed it
  - Actions available
  - WorkflowItem object, which then also embeds:
    - Submitter who submitted the Item
    - Item object
    - Submission form details
    - Collection it was submitted to

Much of the embedded information is unnecessary and unused in the User Interface. Where needed, it should instead be requested via embed params (instead of preloading it for every object).
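Here is a minimal sketch of an "embed only when requested" rule. It assumes embed parameters are slash-separated relation paths, as in `embed=item/thumbnail`. A relation is embedded only if some requested path names it exactly or continues below it. The relation paths and the `shouldEmbed` helper are hypothetical; this is an illustration, not DSpace's implementation.

```java
import java.util.List;
import java.util.Set;

final class EmbedFilterSketch {

    /**
     * True if relPath (e.g. "workflowitem/item") was requested directly, or is the parent
     * of a deeper requested path (e.g. "workflowitem/item/thumbnail").
     */
    static boolean shouldEmbed(String relPath, Set<String> requestedEmbeds) {
        for (String requested : requestedEmbeds) {
            // The "/" check stops "work" from matching "workflowitem".
            if (requested.equals(relPath) || requested.startsWith(relPath + "/")) {
                return true;
            }
        }
        return false;
    }

    public static void main(String[] args) {
        Set<String> requested = Set.of("workflowitem/item/thumbnail");
        List<String> relations = List.of("owner", "action", "workflowitem", "workflowitem/item",
                "workflowitem/submitter", "workflowitem/submissionDefinition", "workflowitem/collection");
        // Only "workflowitem" and "workflowitem/item" print true.
        for (String rel : relations) {
            System.out.println(rel + " -> " + shouldEmbed(rel, requested));
        }
    }
}
```

With only `workflowitem/item/thumbnail` requested, the submitter, submission definition and collection would no longer be converted for every task on the page.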


@benbosman : After some further testing, I've found that there seems to be a MASSIVE speed increase if we simply don't embed the entire HOWEVER, the side-effect here is that this In other words, this issue might be semi-related to #8791, which I see is also assigned to Atmire but hasn't been updated in some time. As a possible "quick fix", if we could find a way to conditionally embed this information only for WorkspaceItems (for the Submission form), then this might provide a massive speed improvement for the "Workflow Tasks" page. I have not figured out a way to do that, but I wanted to share notes here in case it brings up ideas. I'll also mention this in tomorrow's DevMtg in case others have ideas.
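Here is a minimal sketch of that conditional, using hypothetical stand-in types rather than DSpace's real model or converter classes. The expensive submission-definition conversion runs only for items still in the workspace (the submission form). It is skipped for items that have already entered a workflow.

```java
import java.util.LinkedHashMap;
import java.util.Map;

final class ConditionalEmbedSketch {

    /** Hypothetical stand-in for an in-progress submission. */
    static final class InProgressItem {
        final String id;
        final boolean inWorkflow; // true once the item has been deposited into a workflow

        InProgressItem(String id, boolean inWorkflow) {
            this.id = id;
            this.inWorkflow = inWorkflow;
        }
    }

    /** Builds a simplified REST-like view; the submission definition is embedded only for workspace items. */
    static Map<String, Object> toRest(InProgressItem item) {
        Map<String, Object> rest = new LinkedHashMap<>();
        rest.put("id", item.id);
        if (!item.inWorkflow) {
            // Only the submission form needs the full definition.
            rest.put("submissionDefinition", loadSubmissionDefinition(item));
        }
        return rest;
    }

    /** Placeholder for the expensive conversion that the quick fix would skip. */
    static String loadSubmissionDefinition(InProgressItem item) {
        return "definition-for-" + item.id;
    }

    public static void main(String[] args) {
        System.out.println(toRest(new InProgressItem("ws-1", false))); // {id=ws-1, submissionDefinition=definition-for-ws-1}
        System.out.println(toRest(new InProgressItem("wf-1", true)));  // {id=wf-1}
    }
}
```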

@tdonohue I agree that the embeds are indeed problematic, but as I pointed out earlier, this part is trickier to adjust as a quick fix. E.g. the I did run tests to exclude all of that before; this also excluded the I still believe the best way forward is to adjust the whole workflow embedding to be in line with our standards, ensuring the data is only embedded when the embed is requested. But this will take time.

@benbosman : Thanks for your feedback. We discussed this in today's DevMtg in more detail, and I agree with your recommendations overall. As noted in today's meeting, I also prefer a fix of the workflow/workspace embedding logic (to allow the UI control over what is embedded & minimize what is embedded). That work will definitely need to wait for a 7.6.2 release, but development can begin immediately. (My hope is that this work can occur in a bug-fix release like 7.6.2, because I worry this embedding issue will cause additional reports of performance problems on the MyDSpace pages. So, I want to ensure it is fixed in some manner in 7.6.x. But, if that expectation is incorrect, then I'm OK with major work going into 8.0 while also trying to backport "as much as reasonable" to 7.6.x.) All that said, I also noted that if anyone can find a quick fix to embed less information (e.g. the SubmissionDefinitionRest fix I mentioned above), I'm still open to that sort of fix being included in 7.6.1. I feel this performance issue is severe enough that even temporary fixes should be welcome, as a temporary fix could alleviate MyDSpace performance frustrations for many production sites. But, I honestly don't know that anyone will find such a quick fix, so we won't delay 7.6.1 any longer to wait for such work. Finally, I also did stress that we should include #9161 in 7.6.1. I feel this small PR is beneficial for the exact scenario described in this ticket. It's just not a complete fix for the embedding issues, so I'll make sure the embedding issues are described in a separate ticket and assigned back to you for work in 7.6.2. If there are any questions, let me know!

|

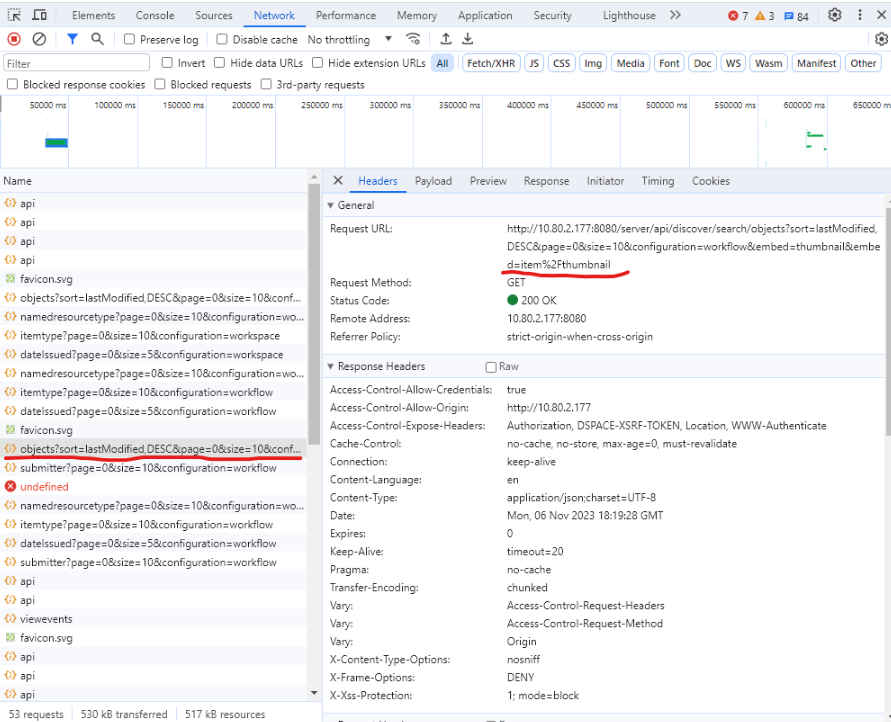

Hy everybody.

I installed the version 7.6 backend and repeated the test below.

Rest

API: server/api/discover/search/objects?sort=lastModified,DESC&page=0&size=10&configuration=workflow&embed=thumbnail&embed=item%2Fthumbnail

I'm sending the postgre.sql log of this rest call. Are you sure you made

any changes?

[new log.zip](https://github.com/DSpace/DSpace/files/13271724/new.log.zip)

On Thu, Nov 2, 2023 at 13:42, Tim Donohue wrote:

@benbosman : Thanks for your feedback. We discussed this in today's DevMtg in more detail, and I agree with your recommendations overall. As noted in today's meeting, I also prefer a fix of the workflow/workspace embedding logic (to allow the UI control over what is embedded & minimize what is embedded). That work will definitely need to wait for a 7.6.2 release, but development can begin immediately. (My hope is that this work can occur in a bug-fix release like 7.6.2, because I worry this embedding issue will cause additional reports of performance problems on the MyDSpace pages. So, I want to ensure it is fixed in some manner in 7.6.x. But, if that expectation is incorrect, then I'm OK with major work going into 8.0 while also trying to backport "as much as reasonable" to 7.6.x.)

All that said, I also noted that if anyone can find a *quick fix* to embed *less information* (e.g. the SubmissionDefinitionRest fix I mentioned above), I'm still open to that sort of fix being included in 7.6.1. I feel this performance issue is severe enough that even *temporary fixes* should be welcome, as a temporary fix could alleviate MyDSpace performance frustrations for many production sites. But, I honestly don't know that anyone will find such a quick fix, so we won't delay 7.6.1 any longer to wait for such work.

Finally, I also did stress that we should include #9161 in 7.6.1. I feel this small PR is beneficial for the exact scenario described in this ticket. It's just not a complete fix for the embedding issues, so I'll make sure the embedding issues are described in a separate ticket and assigned back to you for work in 7.6.2.

If there are any questions, let me know!


@aroldorique : Your message came across garbled. It appears you tried to include some images which we cannot see in GitHub. It's unclear if you simply tried the 7.6 backend again, or if you installed the Pull Request code in #9161. To install the proposed fix, you'd need to pull down the code from that PR and install it. One way of doing this is via our guide at https://wiki.lyrasis.org/display/DSPACE/Testing+DSpace+7+Pull+Requests (However, you don't need to install the PR via Docker. You could instead check it out directly on any system and rebuild the backend using "mvn -U clean package" and reinstall via "ant update".) If that doesn't make sense or I've misunderstood what you've tried, please do ask questions. We'll do our best to help, as we'd appreciate any feedback you can provide.

Tim, I downloaded the backend from this link: https://github.com/atmire/DSpace/tree/test-github-9053

New log:

@aroldorique : You did everything correctly, except we have an updated backend. The one you downloaded has no changes but just had extra logging to help @benbosman debug what was taking a long time. However, that should have no changes to performance. The performance fixes we are reviewing are instead in this branch: https://github.com/atmire/DSpace/tree/w2p-107891_fix-isAdmin-check-performance If you download that branch & perform the same steps you did above, that should install the new code added in #9161. It should have some performance benefits, but it may not be perfect yet. The larger performance fixes are still being worked on, so this won't be the final performance improvement.

OK Tim, I didn't know about the backend at that link, sorry. I will repeat the test with the backend from this link.

On Mon, Nov 6, 2023 at 16:55, Tim Donohue wrote:

@aroldorique : You did everything correctly, except we have an updated backend. The one you downloaded has *no changes* but just had extra logging to help @benbosman debug what was taking a long time. However, that should have *no changes* to performance.

The performance fixes we are reviewing are instead in this branch: https://github.com/atmire/DSpace/tree/w2p-107891_fix-isAdmin-check-performance

If you download that branch & perform the same steps you did above, that should install the new code added in #9161. It should have some performance benefits, but it may not be perfect yet. The larger performance fixes are still being worked on, so this won't be the final performance improvement.


Tim, I'm sending the new log, with the correction. Indeed, the query was much faster:

server/api/discover/search/objects?sort=lastModified,DESC&page=0&size=10&configuration=workspace&embed=thumbnail&embed=item%2Fthumbnail

But completing a submission is very slow, about 2 minutes. I don't know if you're also looking into this right now.

@aroldorique : This ticket is specific to the Workflow Tasks Page, so we have not looked at the Submission form. Good to hear though that the fix is "much faster" based on your testing. Regarding any slowness in the Submission form, we'd need to look at that separately. It may or may not be related to this ticket, so I'd recommend we log a separate ticket with details of how to reproduce that issue (as best as can be determined). It's best not to assume that issue is the same as this one... there's no telling until we can gather further details. Thanks for your testing. It sounds like the immediate fix in #9161 (which is the code in https://github.com/atmire/DSpace/tree/w2p-107891_fix-isAdmin-check-performance) is successful!

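This is very similar to what we did as a customization. We created a "MyDSpace" projection, which basically does that:

```java
// In the custom "MyDSpace" projection: Collections are transformed to null, so they are not converted.
@Override
public <T> T transformModel(T modelObject) {
    if (modelObject instanceof Collection) { // org.dspace.content.Collection
        return null;
    }
    return modelObject;
}
```

We also modified the converter code:

```java
witem.setItem(converter.toRest(item, projection));
// Under the MyDSpace projection, the Collection converts to null.
CollectionRest collectionRest = collection != null ? converter.toRest(collection, projection) : null;
witem.setCollection(collectionRest);
if (submitter != null) {
    witem.setSubmitter(converter.toRest(submitter, projection));
}
// Without a Collection, skip the rest of the conversion, which depends on it.
if (collectionRest == null) {
    return;
}
```

With the projection,

Finally, we modified our frontend to request that projection in MyDSpace.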
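The sketch below shows the mechanism in isolation, using self-contained stand-in types rather than DSpace's real projection, converter or model classes. A projection hook maps Collections to null. The converter treats a null result as "don't convert", so the Collection, and anything built from it, is never created.

```java
final class ProjectionSketch {

    // Stand-in model classes; not DSpace's org.dspace.content types.
    static final class Item { }
    static final class Collection { }

    // Stand-in for a projection's model hook.
    interface ModelProjection {
        <T> T transformModel(T modelObject);
    }

    // Passes every model through unchanged.
    static final ModelProjection DEFAULT = new ModelProjection() {
        @Override
        public <T> T transformModel(T modelObject) {
            return modelObject;
        }
    };

    // Mirrors the "MyDSpace" customization: Collections are dropped, everything else passes through.
    static final ModelProjection MY_DSPACE = new ModelProjection() {
        @Override
        public <T> T transformModel(T modelObject) {
            return modelObject instanceof Collection ? null : modelObject;
        }
    };

    // Stand-in converter: returns null when the projection hid the model, otherwise a "Rest" label.
    static String toRest(Object model, ModelProjection projection) {
        Object transformed = model == null ? null : projection.transformModel(model);
        return transformed == null ? null : transformed.getClass().getSimpleName() + "Rest";
    }

    public static void main(String[] args) {
        System.out.println(toRest(new Collection(), DEFAULT));   // CollectionRest
        System.out.println(toRest(new Collection(), MY_DSPACE)); // null
        System.out.println(toRest(new Item(), MY_DSPACE));       // ItemRest
    }
}
```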

Hello everybody. Our Workflow tasks page is taking a long time to load, over a minute. Something similar also happens on the new item submission page.

We currently have over 150,000 items in 270 collections, spread across various communities and subcommunities.

We created 3 groups of people, a group of Administrators, another one of Submitters and another one of Editors. These 3 groups are administrators, submitters and editors of all collections.

We've already increased the memory of Solr, Tomcat and the machine (SLES 15), but the slowness (and user complaints) continues...

The 2 REST API requests that take the most time are these:

Workflow task page (slower):

server/api/discover/search/objects?sort=score,DESC&page=0&size=10&configuration=workflow&embed=thumbnail&embed=item%2Fthumbnail

We have a team of 14 submitters who can submit new items in any of the 270 collections. We also have another team of 10 editors, who can accept or reject submitted items in all collections. When we had 100 items submitted in the queue for editing, the above rest call took 2 minutes to complete.

Deposit a new item (the delay here doesn't bother us that much):

server/api/workflow/workflowitems?projection=full
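For anyone reproducing these timings, here is a minimal sketch that assembles the workflow search request from its parts. It shows that each embed is sent as a separate, URL-encoded `embed` parameter. The `searchUrl` helper is hypothetical. `URLEncoder` also percent-encodes the comma in `score,DESC`, which the server should decode back to the same value.

```java
import java.net.URLEncoder;
import java.nio.charset.StandardCharsets;
import java.util.ArrayList;
import java.util.List;

final class DiscoverUrlSketch {

    private static String enc(String value) {
        return URLEncoder.encode(value, StandardCharsets.UTF_8);
    }

    // Builds a discover search URL; every embed value becomes its own "embed=" parameter.
    static String searchUrl(String base, String configuration, String sort, int page, int size,
                            List<String> embeds) {
        List<String> params = new ArrayList<>();
        params.add("sort=" + enc(sort));
        params.add("page=" + page);
        params.add("size=" + size);
        params.add("configuration=" + enc(configuration));
        for (String embed : embeds) {
            params.add("embed=" + enc(embed));
        }
        return base + "?" + String.join("&", params);
    }

    public static void main(String[] args) {
        String url = searchUrl("server/api/discover/search/objects", "workflow", "score,DESC", 0, 10,
                List.of("thumbnail", "item/thumbnail"));
        // server/api/discover/search/objects?sort=score%2CDESC&page=0&size=10&configuration=workflow&embed=thumbnail&embed=item%2Fthumbnail
        System.out.println(url);
    }
}
```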
