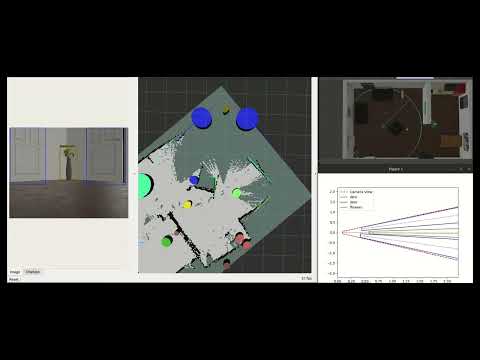

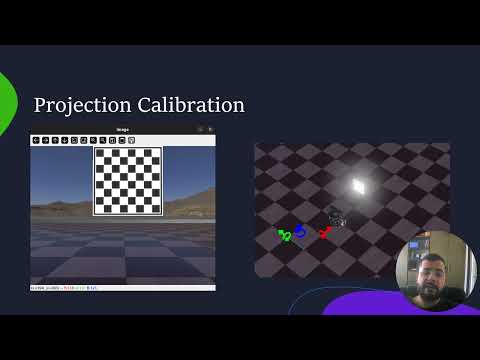

Simultaneous Localization and Mapping (SLAM) lets a robot estimate where it is within an environment while simultaneously building a map of its surroundings. Object-Conscious SLAM (OCSLAM) adds a further layer of knowledge by letting the robot locate and classify the objects within that map. I propose a simple method that combines the LIDAR data already used for standard SLAM with the You Only Look Once (YOLO) network to locate, classify, and track objects.
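
The paragraph above does not spell out how LIDAR returns and YOLO detections are combined. The sketch below shows one plausible way to do it and should not be read as this project's implementation. It assumes a 2D scan whose 0-rad beam points along the camera's optical axis, an ideal pinhole camera with a known horizontal field of view, and a SLAM pose given as `(x, y, yaw)`. A box's horizontal pixel extent is converted into a bearing window, the median LIDAR range inside that window gives the object's distance, and the SLAM pose moves the point into the map frame. Tracking is handled by a greedy same-class nearest-neighbour tracker, a deliberately simple stand-in technique. All names (`Detection`, `object_position`, `NearestNeighbourTracker`, `gate`) are hypothetical.

```python
import math
from dataclasses import dataclass
from statistics import median


@dataclass
class Detection:
    """Hypothetical YOLO-style 2D detection: class label plus horizontal pixel extent."""
    label: str
    x_min: float  # left edge of the bounding box, in pixels
    x_max: float  # right edge of the bounding box, in pixels


def pixel_to_bearing(px, image_width, hfov):
    """Bearing (radians, left positive) of an image column, assuming an ideal pinhole camera."""
    f = (image_width / 2) / math.tan(hfov / 2)  # focal length in pixels
    return math.atan2(image_width / 2 - px, f)  # right of centre -> negative bearing


def object_position(det, ranges, angle_min, angle_inc, image_width, hfov, pose):
    """Estimate a detected object's (x, y) in the map frame, or None if no LIDAR hits.

    Assumes the scan's 0-rad beam is aligned with the camera's optical axis.
    """
    lo = pixel_to_bearing(det.x_max, image_width, hfov)  # right edge of the box
    hi = pixel_to_bearing(det.x_min, image_width, hfov)  # left edge of the box
    hits = [
        r for i, r in enumerate(ranges)
        if lo <= angle_min + i * angle_inc <= hi and math.isfinite(r)
    ]
    if not hits:
        return None
    r = median(hits)  # the median is robust to a few background returns
    theta = pixel_to_bearing((det.x_min + det.x_max) / 2, image_width, hfov)
    px, py = r * math.cos(theta), r * math.sin(theta)  # point in the robot frame
    x, y, yaw = pose  # robot pose from SLAM, in the map frame
    return (x + px * math.cos(yaw) - py * math.sin(yaw),
            y + px * math.sin(yaw) + py * math.cos(yaw))


@dataclass
class Track:
    label: str
    x: float
    y: float
    hits: int = 1


class NearestNeighbourTracker:
    """Greedy nearest-neighbour association of same-class observations in the map frame."""

    def __init__(self, gate=0.5):
        self.gate = gate  # metres; observations farther than this start a new track
        self.tracks = []

    def update(self, label, pos):
        best, best_d = None, self.gate
        for t in self.tracks:
            if t.label != label:
                continue
            d = math.hypot(t.x - pos[0], t.y - pos[1])
            if d <= best_d:
                best, best_d = t, d
        if best is None:
            self.tracks.append(Track(label, *pos))
            return self.tracks[-1]
        # Running mean of every observation assigned to this track.
        best.hits += 1
        best.x += (pos[0] - best.x) / best.hits
        best.y += (pos[1] - best.y) / best.hits
        return best
```

For example, with a 640-pixel-wide image, a 90° field of view, and a 360-beam scan starting at `-math.pi`, a box spanning columns 300 to 340 selects the beams within roughly ±3.6° of straight ahead. Using the median range rather than the minimum keeps a single stray return from pulling the estimate toward the robot.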

This project aims to leverage existing LIDAR data used in SLAM alongside the You Only Look Once (YOLO) network for efficient object localization, classification, and tracking.

Esteb37/object-conscious-slam
