
Download timeout after 15 minutes #6927

Comments

|

You could try adjusting the Apache Proxy timeout? It's a bit buried in the guide, on the Shibboleth page : If your files are in S3 there's a download-direct JVM option for Dataverse which may work around Apache entirely. |

|

I changed it for a test run.

This changes nothing. I would have been surprised, because the default for this timeout is 600 seconds and not 900 seconds. |

Hi @lmaylein and @donsizemore. We have the same problem at e-cienciaDatos and have not been able to solve it yet. As a workaround, we provide an alternative download address for large files that avoids Glassfish. |

|

Is it possible to prioritize this issue higher? This is a big problem for our customers. |

|

@lmaylein larger data support is certainly one of the goals of the project, but it will be challenging to prioritize specific work here in the short term. It may be helpful to get some information about the infrastructure that you're running so that we can suggest workarounds. To discuss future plans in this area, I encourage you to attend the "Remote Storage/Large Datasets" session on June 19th at #dataverse2020: https://projects.iq.harvard.edu/dcm2020/agenda @juancorr, can you provide some more information about the alternate address that avoids Glassfish, and how you provide that address to users? |

|

Also, @qqmyers, do you have any thoughts in this area, related to that work you're doing for TDL w/r/t increasing upload size limits? Is there parallel work on the download side, or is there less issue in that setup because of redirecting to S3? |

|

@djbrooke , we have created a symbolic link under the Apache www path which points to the real file. It is not a clean solution, it is only a workaround. You can see an example in the notes related to the WordEmbeddings.zip file here: https://doi.org/10.21950/AQ1CVX https://doi.org/10.21950/wordembeddings.zip points to the link in the Apache server. You can see it in the disk too: $ ls -l /var/www/html/redirects/AQ1CVX/WordEmbeddings.zip |

|

We discussed this a bit in Slack the other day: https://iqss.slack.com/archives/C010LA04BCG/p1590504250002200 "To answer the user above - 15 min. does sound like one of the server timeouts. We had to adjust timeouts on multiple levels in our prod. - both inside Glassfish and Apache... if it's not already documented in the guides, we should add it." "The answer to what that person was asking was "all of the above" - the timeouts need to be increased everywhere - Apache, Glassfish and ajpproxy... But I was hoping we were already explaining it in the guide." I hope this helps a little. Obviously, as we're saying above, we should document these settings. Someone else who might know is @Venki18 who got 20 GB upload working: #4439 (comment) |

|

We have changed every timeout we could find: glassfish/domains/domain1/config/domain.xml: <thread-pool idle-thread-timeout-seconds="3600" name="http-thread-pool"></thread-pool> libapache2-mod-jk/workers.properties:worker.worker1.cache_timeout=3600 But we have still not been able to download a file through Glassfish for longer than 15 minutes. The download works with Apache. |

|

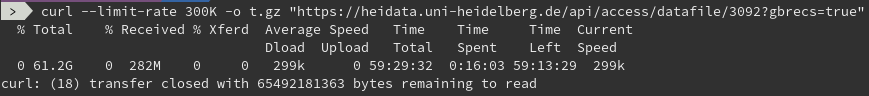

More thoughts later, but I thought I'd report some findings. I've tried using curl with --limit-rate 1K to get the file from Heidelberg and seen the connection die after 28, 36, and 72 minutes. Similarly, trying to get the WordEmbeddings.zip file above, I have a slow curl connection that has been downloading for 34+ minutes. So I don't think we're seeing timeouts on the overall download time. Perhaps they are related to server load, e.g. Dataverse gets so busy it stops sending packets for longer than some timeout. |

|

Most of the timeouts that I'm aware of are concerned with the time to wait for a first response from Dataverse, or the time between subsequent increments to the response, rather than the overall time a response takes. For example, one of the things I helped TDL with was related to uploads where Dataverse was taking too long unzipping a file and storing the individual files before responding to the uploader. There's really no equivalent during download, so I think the only things that would stop a download via Dataverse (i.e. not redirecting to S3) would be the disk or Glassfish server becoming so busy that they don't respond for a while. (I guess it's still possible that there's some overall timeout happening, but that would contradict my findings on both servers reporting problems, where I have curl downloads lasting for an hour+.)

One direction this suggests for debugging would be to watch server and disk load when downloads are happening/failing. If it is Glassfish load, the only thing I've done much of is to increase memory, but others probably know more about the best ways to optimize/speed things up. In some sense, if it's a timeout or resource issue, it isn't really something that can be fixed in the Dataverse code (aside from trying to make Dataverse more efficient/use less memory overall).

The switch to allowing a redirect to S3 for downloads (and uploads) is one way that Dataverse has been adapted to help with this. There have been suggestions that the redirect mechanism could also be added for file stores (not sure if the two servers reporting issues here are on files or S3 - if the latter, turning on the S3 redirect would be useful).

There's also discussion of implementing the ability to handle range requests for downloads. This is the thing needed on the server side to allow smart clients to restart downloads that fail somewhere in the middle (you just ask the server for bytes starting where you last got to). This is probably a good thing to do in general, but it is essentially a way to mask the problem that downloads are failing rather than a fix for the underlying issue. (But if that issue is something else, like a flaky network that's not under your control, the workaround is all you can do.) If these issues can't be resolved by system configuration changes, having IQSS/GDCC prioritize implementing the range requests might be the fastest way to help. (I think supporting file redirects would be more work, but I could be wrong, and, if the issue is not Glassfish, using a file redirect might not solve the problem.)

Another note - with upload issues, changes that were made to report HTTP errors in the upload UI helped in debugging the timeout errors, and we could see in the browser console/developer tools which piece of software was triggering the timeout - it would be reported as the Server: in the Response Headers (so we saw something like 'AWS LB' instead of 'Apache' when it was a timeout on a load balancer, etc.). The downloads work differently and return a 200 response up front (at least in my tests here), and the failure occurs as the data streams. I don't know how one can debug the type of thing being seen with downloads except by checking the logs of any proxy/load balancer/httpd service etc. between Glassfish and the user. |
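To make the range-request idea above concrete, here is a minimal client-side sketch. It assumes a server that honors the HTTP `Range` header and answers with `206 Partial Content`; at the time of this thread that was only under discussion for Dataverse downloads, so treat this as an illustration of the mechanism, not of current behavior. The names `range_header` and `resume_download` are made up for this example.

```python
import os
import urllib.request


def range_header(bytes_already_received: int) -> dict:
    """Build a Range header asking for everything from a byte offset onward."""
    if bytes_already_received < 0:
        raise ValueError("offset must be non-negative")
    return {"Range": f"bytes={bytes_already_received}-"}


def resume_download(url: str, dest_path: str, chunk_size: int = 1 << 20) -> None:
    """Fetch the missing tail of a partially downloaded file (hypothetical helper).

    Assumption: the server supports Range requests. If it does not, it
    answers 200 with the full body and we start over from byte 0.
    """
    offset = os.path.getsize(dest_path) if os.path.exists(dest_path) else 0
    request = urllib.request.Request(url, headers=range_header(offset))
    with urllib.request.urlopen(request) as response:
        # 206: only the requested tail was sent, so append to the file.
        # 200: the Range header was ignored, so overwrite from scratch.
        mode = "ab" if response.status == 206 else "wb"
        with open(dest_path, mode) as out:
            while True:
                chunk = response.read(chunk_size)
                if not chunk:
                    break
                out.write(chunk)
```

Calling `resume_download` again after each dropped connection continues from the bytes already on disk. If the local file is already complete, a range-aware server would typically answer 416, which `urlopen` raises as an `HTTPError`; a real client would need to handle that case.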

Is it possible to get access here? |

|

Here are my test results with "limit-rate": Without limit-rate: the connection is terminated at 15:06. With limit-rate 1M: the connection is terminated at 15:18. With limit-rate 300K: I still think a timeout is striking here. The extra seconds beyond the 15 minutes may be due to overhead in the connection between the client and the Apache server. The load on the server was very low in both attempts. |

|

And here are my test results when downloading directly over the Glassfish (via localhost): Without limit-rate Download takes less than 15 minutes - no problem With limit-rate 1M: So the problem is definitely not the connection apache - glassfish. |

|

I think that the problem is the Glassfish keep-alive timeout, but I have not been able to change it yet. I still need to test it in Payara, but I haven't had time yet. HTTP/1.1 200 OK |

|

@juancorr I don't think so - the Keep-Alive is in seconds, not minutes. And it is only used for idle connections... https://developer.mozilla.org/en-US/docs/Web/HTTP/Headers/Keep-Alive |

|

FWIW: Hmm - My rate limit was much more limiting - 1K, but the time-spent reported from curl was 1:12:08 from HeiDATA (same 61GB file) for the longest run. |

|

To make sure that the problem is not caused by the underlying storage infrastructure, I once used a "scp -l(imit)". Here the file access does not abort. |

|

I'm out of good ideas... doing a search on "java.lang.InterruptedException at org.glassfish.grizzly.http.io.OutputBuffer.blockAfterWriteIfNeeded" - which is in the log above - led me to https://stackoverflow.com/questions/26990616/connection-timeout-glassfish-java-ee-application which has some discussion of circumstances where load could cause timeouts. The only parameter mentioned in the answers there that I've ever tweaked is the max-thread-pool-size for the http-thread-pool in domain.xml. I've increased that to 50 on some instances where we were debugging other memory/slow response issues. If you have a low number for that, you could try an increase. The answer there also points to some timeout and buffer-size config options at the TCP transport layer that are also configurable in domain.xml. The timeouts are again not global timeouts but timeouts for how long to wait for more bytes, so they are consistent with failures at different times. (The issue links things together - few threads and small buffers lead to situations where a download thread could be blocked and end up waiting for longer than the timeout, so workarounds could be a combination of more threads, bigger buffers, and longer timeouts.) Making changes at this level is beyond my experience - perhaps others know more - and I hesitate to suggest trying things without knowing much about them. That said, if you're stuck, these are things you can try and then remove from your config if they don't work. |

|

I tried:

and

That didn't help. |

|

@lmaylein I've been meaning to ask you what you think about the symlink hack/workaround @juancorr mentioned earlier: #6927 (comment) My understanding is that you'd be using Apache (or similar) to serve up the file. Not a long term solution, of course, but perhaps it could give you some relief. |

|

@pdurbin Our workaround was to split up the file. The Apache symlink would require a change of the rights configuration on our server, because only the glassfish user has access to the file, but not the Apache user. In case of need, however, we would change that. |

|

Okay, very interesting: instead of And this is the result: The connection aborts after 30 minutes, instead of 15 minutes as before. |

|

Apparently the default is 15 minutes after all: |

|

Hmm - as far as I know you're correct that all these timeouts are related to idle time not accumulated transfer times, so the coincidence with when you're seeing the connection drop is odd (but I did see a really slow connection stay up for an hour already). The other timeouts I've seen are at the transport level - I've only seen it in the glassfish v3 docs so far: https://docs.oracle.com/cd/E19798-01/821-1753/girmh/index.html - the buffer size is in that doc too. The timeouts here are all ~ 30 seconds, so they could be involved if there is some random time when threads block etc. |

|

@pdurbin we achieved the large file upload and downloads with the following settings, we are using Apache as the frontend to Glassfish

I hope this helps. Sorry for the delay in replying. |

|

@Venki18 Thank you very much. This confirms my tests. @qqmyers Just to rule out any coincidence, I have now also increased request-timeout-seconds to 3600. The result is as expected: Abortion after 1:00:52 I think the Apache ProxyTimeout parameter is not necessary. This is probably really an idle timeout. Maybe the I think we can close this issue? |
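Since the tests above suggest that request-timeout-seconds caps the total duration of a download, a rough way to choose a value is to divide the largest file size by the slowest transfer rate you want to support. The sketch below does that arithmetic. The function names are made up for this example, and it assumes that the byte count in the Apache log line of the original report (36631117824) is what had been sent when the connection was cut at 900 seconds.

```python
def min_request_timeout_seconds(file_size_bytes: int, throughput_bytes_per_s: float) -> float:
    """Shortest total-duration timeout that lets the whole file through."""
    if throughput_bytes_per_s <= 0:
        raise ValueError("throughput must be positive")
    return file_size_bytes / throughput_bytes_per_s


def max_bytes_within_timeout(timeout_seconds: float, throughput_bytes_per_s: float) -> float:
    """Largest transfer that completes before a total-duration timeout fires."""
    return timeout_seconds * throughput_bytes_per_s


# Assumption: 36631117824 bytes were delivered in the 900 s before the abort.
observed_rate = 36631117824 / 900  # bytes per second

# At that rate, how much data fits into a 3600 s timeout?
bytes_in_one_hour = max_bytes_within_timeout(3600, observed_rate)

# A client limited to 1 MiB/s would need this long for the same 36631117824 bytes:
slow_client_seconds = min_request_timeout_seconds(36631117824, 1024 * 1024)
```

The point of the second calculation is that slow clients, not large files alone, drive the required timeout: the same file needs a much longer window on a throttled connection.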

|

@djbrooke if you’ll leave the issue open i’ll submit a PR for the docs. |

|

@Venki18 thank you! @lmaylein @juancorr glad it works! @donsizemore yes, yes, docs please! |

…s per landreev and pdurbin

|

I still have a question about this closed issue: |

This became "GLASSFISH_REQUEST_TIMEOUT" for new installations, which now defaults to 30 minutes: If you have an existing installation, you may want to bump this above the default 15 minutes: |

|

I had hoped that there would be a parameter by now that defines the timeout between single packets and not for the whole duration of the download. |

@lmaylein would that mean opening an issue upstream with Payara? Here is their issue tracker: https://github.com/payara/Payara/issues |

|

I'm just not sure if I've got it all right. Probably there is a timeout on packet level and the timeout http.request-timeout-seconds is only additional. Then maybe it would not be harmful to set it as high as possible. But I don't know if setting a very high value might cause a vulnerability against DOS attacks. |
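The difference between a per-packet (idle) timeout and a timeout on the whole request can be illustrated with a small simulation. This is only a model of the two behaviors discussed in this thread, not of how Glassfish or Payara implement them; `first_timeout` and its parameters are hypothetical names.

```python
from typing import Iterable, Optional


def first_timeout(chunk_times: Iterable[float],
                  idle_timeout: float,
                  total_timeout: float) -> Optional[str]:
    """Report which timeout would cut a transfer first, if any.

    chunk_times are seconds since the request started at which each chunk
    is written, in ascending order. Returns "idle", "total", or None.
    """
    previous = 0.0
    for t in chunk_times:
        # The idle timer restarts after every chunk; the total timer never does.
        idle_deadline = previous + idle_timeout
        if t > min(idle_deadline, total_timeout):
            return "idle" if idle_deadline <= total_timeout else "total"
        previous = t
    return None


# A steady stream, one chunk per second for 1000 s, never goes idle,
# but it still runs into a 900 s limit on the whole request.
steady = range(1, 1001)
steady_short_limit = first_timeout(steady, idle_timeout=30, total_timeout=900)
steady_long_limit = first_timeout(steady, idle_timeout=30, total_timeout=3600)

# A stream that stalls for 97 s trips the idle timeout long before 900 s.
stalled = first_timeout([1, 2, 3, 100], idle_timeout=30, total_timeout=900)
```

In this model, raising the total limit only affects healthy long transfers. A stalled connection is still caught by the idle timeout, which is the behavior hoped for above.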

We have a problem with downloading large files from a Dataverse instance (v. 4.18.1 build 267-a91d370). The download of the large file in https://heidata.uni-heidelberg.de/dataset.xhtml?persistentId=doi:10.11588/data/TMEDTX aborts after exactly 15 minutes. But I still don't understand whether this is a timeout of the Apache web server or of the Glassfish server.

The problem was reported by a user and I can reproduce it.

Can you help me? Where do I have to look for the corresponding timeout?

Apache log:

147.142.***.*** - - [22/May/2020:14:38:01 +0200] "GET /api/access/datafile/3092?gbrecs=true HTTP/1.1" 200 36631117824 "https://heidata.uni-heidelberg.de/dataset.xhtml?persistentId=doi:10.11588/data/TMEDTX" "Mozilla/5.0 (X11; Ubuntu; Linux x86_64; rv:76.0) Gecko/20100101 Firefox/76.0"

Glassfish log:

[2020-05-22T14:53:01.451+0200] [glassfish 4.1] [SEVERE] [] [org.glassfish.jersey.server.ServerRuntime$Responder] [tid: _ThreadID=52 _ThreadName=jk-connector(5)] [timeMillis: 1590151981451] [levelValue: 1000] [[ An I/O error has occurred while writing a response message entity to the container output stream. org.glassfish.jersey.server.internal.process.MappableException: java.io.IOException: java.lang.InterruptedException at org.glassfish.jersey.server.internal.MappableExceptionWrapperInterceptor.aroundWriteTo(MappableExceptionWrapperInterceptor.java:97) at org.glassfish.jersey.message.internal.WriterInterceptorExecutor.proceed(WriterInterceptorExecutor.java:162) at org.glassfish.jersey.message.internal.MessageBodyFactory.writeTo(MessageBodyFactory.java:1154) at org.glassfish.jersey.server.ServerRuntime$Responder.writeResponse(ServerRuntime.java:621) at org.glassfish.jersey.server.ServerRuntime$Responder.processResponse(ServerRuntime.java:377) at org.glassfish.jersey.server.ServerRuntime$Responder.process(ServerRuntime.java:367) at org.glassfish.jersey.server.ServerRuntime$1.run(ServerRuntime.java:274) at org.glassfish.jersey.internal.Errors$1.call(Errors.java:271) at org.glassfish.jersey.internal.Errors$1.call(Errors.java:267) at org.glassfish.jersey.internal.Errors.process(Errors.java:315) at org.glassfish.jersey.internal.Errors.process(Errors.java:297) at org.glassfish.jersey.internal.Errors.process(Errors.java:267) at org.glassfish.jersey.process.internal.RequestScope.runInScope(RequestScope.java:297) at org.glassfish.jersey.server.ServerRuntime.process(ServerRuntime.java:254) at org.glassfish.jersey.server.ApplicationHandler.handle(ApplicationHandler.java:1028) at org.glassfish.jersey.servlet.WebComponent.service(WebComponent.java:372) at org.glassfish.jersey.servlet.ServletContainer.service(ServletContainer.java:381) at org.glassfish.jersey.servlet.ServletContainer.service(ServletContainer.java:344) at 
org.glassfish.jersey.servlet.ServletContainer.service(ServletContainer.java:221) at org.apache.catalina.core.StandardWrapper.service(StandardWrapper.java:1682) at org.apache.catalina.core.ApplicationFilterChain.internalDoFilter(ApplicationFilterChain.java:344) at org.apache.catalina.core.ApplicationFilterChain.doFilter(ApplicationFilterChain.java:214) at org.ocpsoft.rewrite.servlet.RewriteFilter.doFilter(RewriteFilter.java:226) at org.apache.catalina.core.ApplicationFilterChain.internalDoFilter(ApplicationFilterChain.java:256) at org.apache.catalina.core.ApplicationFilterChain.doFilter(ApplicationFilterChain.java:214) at edu.harvard.iq.dataverse.api.ApiBlockingFilter.doFilter(ApiBlockingFilter.java:168) at org.apache.catalina.core.ApplicationFilterChain.internalDoFilter(ApplicationFilterChain.java:256) at org.apache.catalina.core.ApplicationFilterChain.doFilter(ApplicationFilterChain.java:214) at edu.harvard.iq.dataverse.api.ApiRouter.doFilter(ApiRouter.java:30) at org.apache.catalina.core.ApplicationFilterChain.internalDoFilter(ApplicationFilterChain.java:256) at org.apache.catalina.core.ApplicationFilterChain.doFilter(ApplicationFilterChain.java:214) at org.apache.catalina.core.ApplicationDispatcher.doInvoke(ApplicationDispatcher.java:873) at org.apache.catalina.core.ApplicationDispatcher.invoke(ApplicationDispatcher.java:739) at org.apache.catalina.core.ApplicationDispatcher.processRequest(ApplicationDispatcher.java:575) at org.apache.catalina.core.ApplicationDispatcher.doDispatch(ApplicationDispatcher.java:546) at org.apache.catalina.core.ApplicationDispatcher.dispatch(ApplicationDispatcher.java:428) at org.apache.catalina.core.ApplicationDispatcher.forward(ApplicationDispatcher.java:378) at edu.harvard.iq.dataverse.api.ApiRouter.doFilter(ApiRouter.java:34) at org.apache.catalina.core.ApplicationFilterChain.internalDoFilter(ApplicationFilterChain.java:256) at org.apache.catalina.core.ApplicationFilterChain.doFilter(ApplicationFilterChain.java:214) at 
org.apache.catalina.core.StandardWrapperValve.invoke(StandardWrapperValve.java:316) at org.apache.catalina.core.StandardContextValve.invoke(StandardContextValve.java:160) at org.apache.catalina.core.StandardPipeline.doInvoke(StandardPipeline.java:734) at org.apache.catalina.core.StandardPipeline.invoke(StandardPipeline.java:673) at com.sun.enterprise.web.WebPipeline.invoke(WebPipeline.java:99) at org.apache.catalina.core.StandardHostValve.invoke(StandardHostValve.java:174) at org.apache.catalina.connector.CoyoteAdapter.doService(CoyoteAdapter.java:415) at org.apache.catalina.connector.CoyoteAdapter.service(CoyoteAdapter.java:282) at com.sun.enterprise.v3.services.impl.ContainerMapper$HttpHandlerCallable.call(ContainerMapper.java:459) at com.sun.enterprise.v3.services.impl.ContainerMapper.service(ContainerMapper.java:167) at org.glassfish.grizzly.http.server.HttpHandler.runService(HttpHandler.java:201) at org.glassfish.grizzly.http.server.HttpHandler.doHandle(HttpHandler.java:175) at org.glassfish.grizzly.http.server.HttpServerFilter.handleRead(HttpServerFilter.java:235) at org.glassfish.grizzly.filterchain.ExecutorResolver$9.execute(ExecutorResolver.java:119) at org.glassfish.grizzly.filterchain.DefaultFilterChain.executeFilter(DefaultFilterChain.java:284) at org.glassfish.grizzly.filterchain.DefaultFilterChain.executeChainPart(DefaultFilterChain.java:201) at org.glassfish.grizzly.filterchain.DefaultFilterChain.execute(DefaultFilterChain.java:133) at org.glassfish.grizzly.filterchain.DefaultFilterChain.process(DefaultFilterChain.java:112) at org.glassfish.grizzly.ProcessorExecutor.execute(ProcessorExecutor.java:77) at org.glassfish.grizzly.nio.transport.TCPNIOTransport.fireIOEvent(TCPNIOTransport.java:561) at org.glassfish.grizzly.strategies.AbstractIOStrategy.fireIOEvent(AbstractIOStrategy.java:112) at org.glassfish.grizzly.strategies.WorkerThreadIOStrategy.run0(WorkerThreadIOStrategy.java:117) at 
org.glassfish.grizzly.strategies.WorkerThreadIOStrategy.access$100(WorkerThreadIOStrategy.java:56) at org.glassfish.grizzly.strategies.WorkerThreadIOStrategy$WorkerThreadRunnable.run(WorkerThreadIOStrategy.java:137) at org.glassfish.grizzly.threadpool.AbstractThreadPool$Worker.doWork(AbstractThreadPool.java:565) at org.glassfish.grizzly.threadpool.AbstractThreadPool$Worker.run(AbstractThreadPool.java:545) at java.lang.Thread.run(Thread.java:748) Caused by: java.io.IOException: java.lang.InterruptedException at org.glassfish.grizzly.http.io.OutputBuffer.blockAfterWriteIfNeeded(OutputBuffer.java:973) at org.glassfish.grizzly.http.io.OutputBuffer.write(OutputBuffer.java:686) at org.apache.catalina.connector.OutputBuffer.writeBytes(OutputBuffer.java:355) at org.apache.catalina.connector.OutputBuffer.write(OutputBuffer.java:342) at org.apache.catalina.connector.CoyoteOutputStream.write(CoyoteOutputStream.java:161) at org.glassfish.jersey.servlet.internal.ResponseWriter$NonCloseableOutputStreamWrapper.write(ResponseWriter.java:298) at org.glassfish.jersey.message.internal.CommittingOutputStream.write(CommittingOutputStream.java:229) at edu.harvard.iq.dataverse.api.DownloadInstanceWriter.writeTo(DownloadInstanceWriter.java:334) at edu.harvard.iq.dataverse.api.DownloadInstanceWriter.writeTo(DownloadInstanceWriter.java:49) at org.glassfish.jersey.message.internal.WriterInterceptorExecutor$TerminalWriterInterceptor.invokeWriteTo(WriterInterceptorExecutor.java:263) at org.glassfish.jersey.message.internal.WriterInterceptorExecutor$TerminalWriterInterceptor.aroundWriteTo(WriterInterceptorExecutor.java:250) at org.glassfish.jersey.message.internal.WriterInterceptorExecutor.proceed(WriterInterceptorExecutor.java:162) at org.glassfish.jersey.server.internal.JsonWithPaddingInterceptor.aroundWriteTo(JsonWithPaddingInterceptor.java:106) at org.glassfish.jersey.message.internal.WriterInterceptorExecutor.proceed(WriterInterceptorExecutor.java:162) at 
org.glassfish.jersey.server.internal.MappableExceptionWrapperInterceptor.aroundWriteTo(MappableExceptionWrapperInterceptor.java:89) ... 66 more Caused by: java.lang.InterruptedException at java.util.concurrent.locks.AbstractQueuedSynchronizer.doAcquireSharedNanos(AbstractQueuedSynchronizer.java:1039) at java.util.concurrent.locks.AbstractQueuedSynchronizer.tryAcquireSharedNanos(AbstractQueuedSynchronizer.java:1328) at org.glassfish.grizzly.impl.SafeFutureImpl$Sync.innerGet(SafeFutureImpl.java:356) at org.glassfish.grizzly.impl.SafeFutureImpl.get(SafeFutureImpl.java:264) at org.glassfish.grizzly.http.io.OutputBuffer.blockAfterWriteIfNeeded(OutputBuffer.java:962) ... 80 more ]]
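The "exactly 15 minutes" can be checked directly from the two log excerpts above by subtracting the Apache access-log timestamp from the Glassfish error timestamp. The sketch below assumes that Apache logs the time the request was received (its default for the bracketed timestamp field) and that both servers share a clock. The constant and function names are made up for this example.

```python
from datetime import datetime

# Timestamps copied from the Apache and Glassfish log excerpts in this report.
APACHE_TS = "22/May/2020:14:38:01 +0200"
GLASSFISH_TS = "2020-05-22T14:53:01.451+0200"


def elapsed_seconds(apache_ts: str, glassfish_ts: str) -> float:
    """Seconds between the request start (Apache) and the I/O error (Glassfish)."""
    # %b parses the English month abbreviation under the default C/English locale.
    start = datetime.strptime(apache_ts, "%d/%b/%Y:%H:%M:%S %z")
    end = datetime.strptime(glassfish_ts, "%Y-%m-%dT%H:%M:%S.%f%z")
    return (end - start).total_seconds()


elapsed = elapsed_seconds(APACHE_TS, GLASSFISH_TS)
```

For these two timestamps the difference is about 900.45 seconds, i.e. 15 minutes to within half a second, which fits a fixed timeout on the whole request better than a load-dependent stall.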