Detailed tutorial for grasping with MoveIt

Under construction: only the first part of this tutorial has been completed.

This tutorial guides you through executing a simple grasp with the example Jaco hand in Gazebo. The full testing pipeline is described so that errors in the node connections can be identified and corrected early on.

It is recommended that you first check whether the object information pipeline is set up and working. For more information about the object information pipeline, please refer to this wiki page.

First, set the ROS parameters required for loading the Gazebo object information service and the fake object recognition. These parameters are needed as soon as Gazebo is launched, so run this step before starting Gazebo. The same launch file also loads the MoveIt! collision object generator:

roslaunch moveit_object_handling gazebo_object_recognition.launch

Next, start jaco_on_table with joint controllers in Gazebo:

roslaunch jaco_on_table jaco_on_table_gazebo_controlled.launch

Then, in another terminal, start MoveIt! and RViz:

roslaunch jaco_on_table_moveit jaco_on_table_moveit_rviz.launch
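If you prefer to start the three launch files from one script instead of separate terminals, the required ordering can be sketched in Python with the standard `subprocess` module. This is a minimal sketch, not part of the tutorial's packages: `launch_all` is a hypothetical helper name, and the fixed `STARTUP_DELAY_S` pause is an assumption standing in for properly waiting until each step is up.

```python
import subprocess
import time
from typing import List

# The three launch steps from this tutorial, in the order they must be started:
# the parameters must exist before Gazebo comes up, and MoveIt!/RViz come last.
LAUNCH_SEQUENCE: List[List[str]] = [
    ["roslaunch", "moveit_object_handling", "gazebo_object_recognition.launch"],
    ["roslaunch", "jaco_on_table", "jaco_on_table_gazebo_controlled.launch"],
    ["roslaunch", "jaco_on_table_moveit", "jaco_on_table_moveit_rviz.launch"],
]

# Assumption: a fixed pause is a crude stand-in for "wait until the previous step is up".
STARTUP_DELAY_S = 5.0


def launch_all(delay: float = STARTUP_DELAY_S) -> List[subprocess.Popen]:
    """Start each launch file in the background, in order (requires a sourced ROS setup)."""
    procs: List[subprocess.Popen] = []
    for cmd in LAUNCH_SEQUENCE:
        procs.append(subprocess.Popen(cmd))  # background process instead of a new terminal
        time.sleep(delay)
    return procs
```

Calling `launch_all()` starts the steps in order; call `terminate()` on the returned processes when you are done. The output of all three launches is interleaved in one terminal, which is one reason separate terminals are often easier to debug.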

Spawn a cube named "cube1" into the Gazebo world and drop it from height z=1 so that it falls onto the table. If the robot has moved away from the origin, adjust the x and y coordinates slightly.

In a new terminal:

rosrun gazebo_test_tools cube_spawner cube1 0 0 1

This test spawner takes the following arguments:

- The name of the cube, which you will use later to refer to it

- The x, y, z coordinates at which to spawn the cube
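When spawning several cubes from a script, it can help to build the spawner's argument list in one place. The sketch below relies only on the argument order listed above (name, then x, y, z); `cube_spawner_cmd` and `spawn_cube` are hypothetical helper names.

```python
import subprocess
from typing import List


def cube_spawner_cmd(name: str, x: float, y: float, z: float) -> List[str]:
    """Argument list for: rosrun gazebo_test_tools cube_spawner <name> <x> <y> <z>."""
    # format(v, "g") turns 0 into "0" and 0.25 into "0.25", matching the command above.
    coords = [format(v, "g") for v in (x, y, z)]
    return ["rosrun", "gazebo_test_tools", "cube_spawner", name] + coords


def spawn_cube(name: str, x: float, y: float, z: float = 1.0) -> None:
    """Run the spawner (requires a sourced ROS environment and a running Gazebo)."""
    subprocess.run(cube_spawner_cmd(name, x, y, z), check=True)
```

For example, `cube_spawner_cmd("cube1", 0, 0, 1)` reproduces the command shown above. Keep track of the name you pass, since the fake object recognition later refers to the cube by it.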

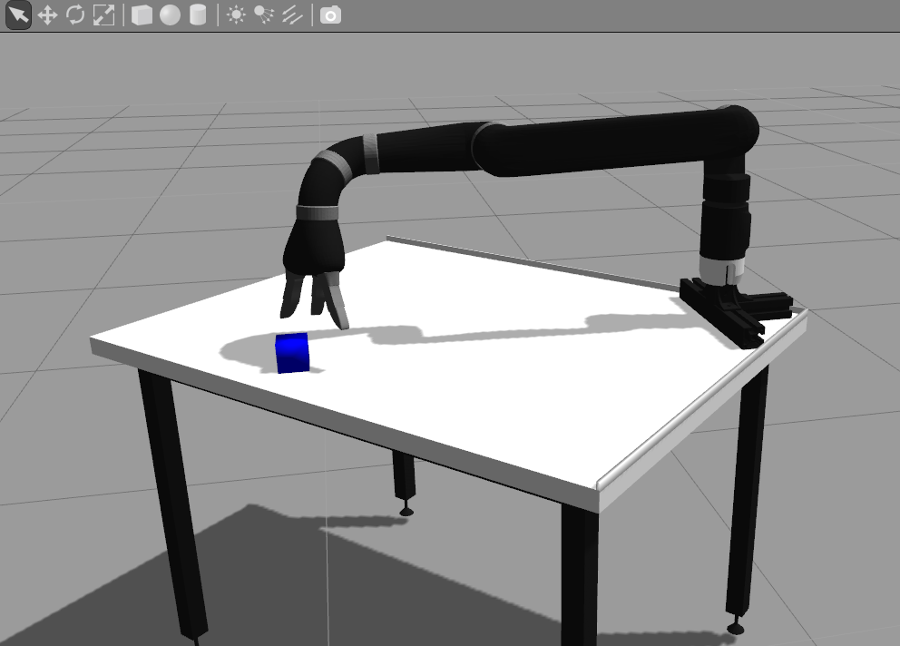

The cube should now have ended up on the table:

Before the robot can grasp the cube, it first has to "recognize" it. Because our example set-up has no sensors and no real object recognition running, we do this manually, using a "fake" object recognition.

The "fake object recognizer" (see also the background section below, subject "object information pipeline") is a node which continuously recognizes the specified objects in the scene and publishes their current details to MoveIt!.
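To make the idea of "continuously recognizing" concrete, here is a ROS-free Python sketch of what such a node does on each cycle. It is not the `gazebo_test_tools` implementation: `FakeRecognizerSketch` is a hypothetical class, `get_pose` is a hypothetical stand-in for querying the Gazebo object information service, `publish` is a hypothetical stand-in for sending the object to MoveIt!, and poses are reduced to positions for brevity. In a real node, `step()` would be called periodically, for example from a timer.

```python
from dataclasses import dataclass, field
from typing import Callable, Optional, Set, Tuple

Position = Tuple[float, float, float]  # simplification: orientation omitted


@dataclass
class FakeRecognizerSketch:
    # Hypothetical hook standing in for the Gazebo object information service.
    get_pose: Callable[[str], Optional[Position]]
    # Hypothetical hook standing in for publishing the object to MoveIt!.
    publish: Callable[[str, Position], None]
    tracked: Set[str] = field(default_factory=set)

    def start(self, name: str) -> None:
        """Begin "recognizing" the named object on every cycle."""
        self.tracked.add(name)

    def step(self) -> None:
        """One cycle: look up every tracked object and publish its current pose."""
        for name in sorted(self.tracked):
            pose = self.get_pose(name)
            if pose is not None:  # skip names that do not exist in the simulated world
                self.publish(name, pose)
```

In this sketch the pose is re-read on every cycle, so if the cube moves in the simulation, the next published pose follows it.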

You can start the fake object recognition for "cube1" with the following command. You may use the same terminal you used to spawn the cube:

rosrun gazebo_test_tools fake_object_recognizer_cmd cube1 1

For more information about this subject, please also refer to the background section ("object information pipeline") below.

Once the object has been "recognized", the cube should appear in RViz in bright green, at exactly the same pose as in Gazebo.

If the cube does not appear in RViz, the publishers/subscribers and servers/clients are probably not connected properly. Refer to the background section below ("object information pipeline") for more details.
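If the cube does appear but seems slightly off, a numeric comparison can be more reliable than judging by eye. This sketch assumes you have already read the cube pose from Gazebo and from the MoveIt! planning scene (how you obtain them is left open here), with positions as (x, y, z) in metres and orientations as unit quaternions (x, y, z, w). Both poses must be expressed in the same frame: if the MoveIt! planning frame is not the Gazebo world frame, transform one of them first. `poses_match` is a hypothetical helper and the tolerances are assumed values.

```python
import math
from typing import Sequence


def poses_match(pos_a: Sequence[float], quat_a: Sequence[float],
                pos_b: Sequence[float], quat_b: Sequence[float],
                pos_tol: float = 1e-3, ang_tol: float = 1e-2) -> bool:
    """True if two poses agree within pos_tol metres and ang_tol radians."""
    if math.dist(pos_a, pos_b) > pos_tol:
        return False
    # For unit quaternions, |q_a . q_b| = cos(theta / 2); abs() because q and -q are the same rotation.
    dot = abs(sum(a * b for a, b in zip(quat_a, quat_b)))
    angle = 2.0 * math.acos(min(1.0, dot))  # min() guards against rounding slightly above 1
    return angle <= ang_tol
```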

By dragging the interactive marker in RViz, check whether the cube is actually within reach of the arm. Because the robot was spawned into the world, it has probably moved slightly while settling and is not exactly at the origin.

In this example, it is actually hard to reach the cube with a specific grasp pose:

If this is the case, try to spawn the cube a bit closer to the arm.
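A crude planar check can suggest where to respawn the cube. The sketch below moves a candidate (x, y) spawn point towards the robot base until it lies within an assumed reach radius. `pull_towards_base` is a hypothetical helper, `ASSUMED_REACH_M` and the margin are placeholders rather than Jaco specifications, and the base position should be read from Gazebo because the robot has probably drifted from the origin. A radius test ignores height and grasp orientation, so it only filters out clearly unreachable spots; confirm reachability with the interactive marker in RViz.

```python
import math
from typing import Tuple

Point2D = Tuple[float, float]

# Placeholder value, NOT a Jaco specification: replace with a reach you have verified.
ASSUMED_REACH_M = 0.7


def pull_towards_base(target: Point2D, base: Point2D,
                      reach: float = ASSUMED_REACH_M, margin: float = 0.1) -> Point2D:
    """Move target along the base->target line so it lies within (reach - margin) of base."""
    limit = reach - margin
    if limit <= 0:
        raise ValueError("margin must be smaller than reach")
    dx, dy = target[0] - base[0], target[1] - base[1]
    dist = math.hypot(dx, dy)
    if dist <= limit:
        return target  # already inside the assumed workspace radius
    scale = limit / dist
    return (base[0] + dx * scale, base[1] + dy * scale)
```

The returned x and y can then be passed to `cube_spawner` together with a drop height such as z=1.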