-

Notifications

You must be signed in to change notification settings - Fork 21

New issue

Have a question about this project? Sign up for a free GitHub account to open an issue and contact its maintainers and the community.

By clicking “Sign up for GitHub”, you agree to our terms of service and privacy statement. We’ll occasionally send you account related emails.

Already on GitHub? Sign in to your account

Improve performance for FD multiplication: allow LLVM to optimize away the division by a constant. #43

Conversation

This allows LLVM to generate cheaper operations for dividing by a

constant power-of-ten.

On my machine, this drops the time for multiplying two

FixedDecimal{Int32,2} numbers from 10.30ns to 2.92ns, or around a 70%

improvement.

|

Here's some more info on the optimization LLVM ends up performing: The main idea is that it can replace the expensive division-by-a-constant with a multiplication by its inverse! That is:

which is cheap and fast! :D Hooray! Here's LLVM in action: julia> f(x) = x ÷ 100

f (generic function with 1 method)

julia> @code_native f(3)

.section __TEXT,__text,regular,pure_instructions

; Function f {

; Location: REPL[1]:1

; Function div; {

; Location: REPL[1]:1

movabsq $-6640827866535438581, %rcx ## imm = 0xA3D70A3D70A3D70B

movq %rdi, %rax

imulq %rcx

addq %rdi, %rdx

movq %rdx, %rax

shrq $63, %rax

sarq $6, %rdx

leaq (%rdx,%rax), %rax

;}

retq

nopw %cs:(%rax,%rax)

;}So by inlining |

|

As I mentioned above, this improvement doesn't help for This lets us avoid BigInts for FD{Int128}, which makes a big difference for performance. I'm cleaning up that code now and i'll send a PR when it's done. |

Codecov Report

@@ Coverage Diff @@

## master #43 +/- ##

==========================================

+ Coverage 98.82% 98.83% +<.01%

==========================================

Files 1 1

Lines 170 171 +1

==========================================

+ Hits 168 169 +1

Misses 2 2

Continue to review full report at Codecov.

|

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

LGTM. Can you file a bug on julia for fldmod not being inlined? I think this is an example of a function that definitely should be.

|

Thank you for your contributions, @NHDaly! They are well appreciated. |

Thanks @TotalVerb. :) It's been a team effort over here at RelationalAI -- especially much thanks to @tveldhui who originally had a lot of the ideas I've sent here, and reviewed all my PRs. Sorry i haven't been crediting you directly, Todd! |

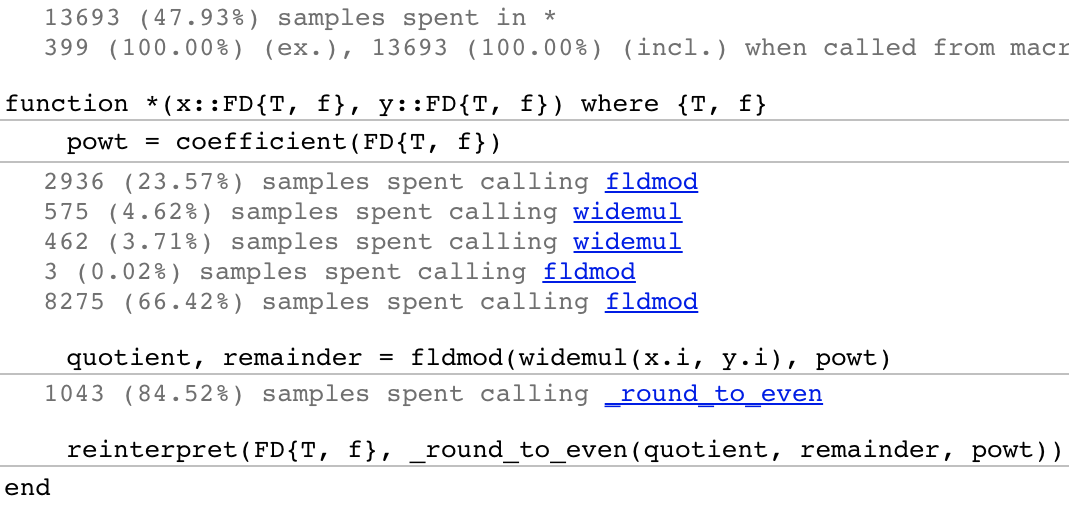

A large part of the runtime cost of

*(x::FD, y::FD)comes from dividing the result ofwidemul(x,y)by the coefficient (10^f).However, that coefficient is a known, compile-time static constant, and so we should be able to replace that expensive division-by-a-constant with cheaper alternatives. LLVM can normally do this optimization, but in this case, it's prevented from doing so in our code because

fldmodis acting as a function barrier. Iffldmodisn't inlined, it's compiled without any knowledge that one of its arguments is a compile-time constant.This PR simply changes our multiplication (and round and ceil) to call a version of fldmod marked

inline, which allows LLVM to optimize the division-by-constant into a cheaper operation.Before this PR, the call to

fldmodtakes around 80% of the time of*according to this profile:(

(2936+3+8275)/(13693+399) == 79.6%)The above is generated from:

After this PR, that number drops to 64%, which doesn't seem like that much, but in my overall benchmark suite, this change consistently improves the time for

*(::FD{Int32,2}, ::FD{Int32,2})by ~70%! So I might be missing something in my profiling.Here are my benchmark results after this change (and the

@evalformaxexp10(Int128)change, in #41):* note: The times in each column are truncated to a minimum of 0.345ns, which is the estimated time for a single clock cycle on my machine.Comparisons are relative to benchmarks run on

master. Those results are here: #37 (comment)For the small numbers, it's fairly noisy (that 8% improvement in

*,Int128is noise, for example) but for the larger numbers it seems fairly consistent between runs.(Almost all of the improvements on the

FD{Int128,2}line come from #41, since inliningfldmoddoesn't seem to help any when you're doingBigIntmath.)