Evaluation

The evaluation function of Koivisto makes use of a neural network. As of Koivisto 5.9, we use a neural network with 2 layers. The architecture has changed from time to time. As of Koivisto 7.11, we employ a king-relative half-network style. It consists of two layers: the input layer shares its weights between both perspectives and evaluates the board from both points of view. The two activations from the first layer are concatenated in an order that depends on the side to move. This is followed by a simple dense layer which produces the centipawn evaluation of the given position.

A schematic image of the evaluation is shown:

Each step of the network is explained in detail below.

In order for the network to understand a tempo bonus, the board has to be mirrored for Black's pieces. A basic understanding of tempo can be achieved by having two dense layers which use the same weights but look at the board from different sides (one side is mirrored). This results in the half-architecture, where the upper "half" is a dense layer applied to what the white player sees, whereas the lower "half" is applied to the board from Black's perspective.

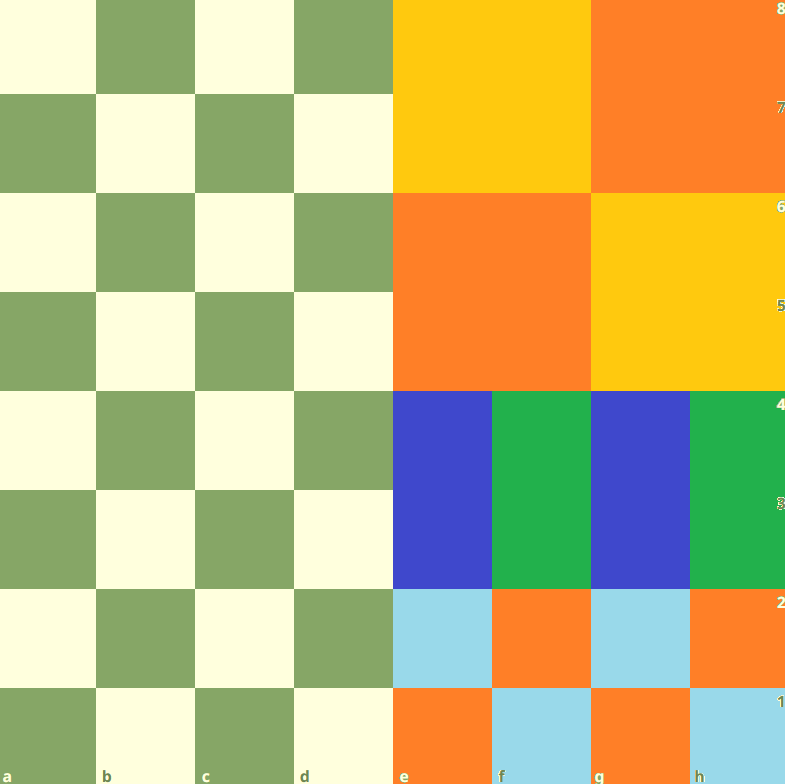
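A minimal sketch of the half-architecture idea, not Koivisto's actual code: both perspectives share one set of weights, Black's view sees a mirrored board, and the two hidden halves are ordered by the side to move. The names `Half`, `mirrorRank`, `relativeSquare` and `concatBySideToMove`, the tiny `HIDDEN` size, the square numbering (a1 = 0, h8 = 63) and the choice to put the side to move first are all assumptions made for illustration.

```cpp
#include <array>
#include <cassert>
#include <cstdint>

constexpr int HIDDEN = 4;                    // tiny size, for illustration only
using Half = std::array<int16_t, HIDDEN>;    // hidden activations of one perspective

enum Color { WHITE = 0, BLACK = 1 };

// Flip the rank of a square (a1 <-> a8), assuming a1 = 0 ... h8 = 63.
inline int mirrorRank(int square) {
    assert(square >= 0 && square < 64);
    return square ^ 56;
}

// Square as seen from `view`. Black looks at a mirrored board, so the same
// weights can serve both perspectives.
inline int relativeSquare(int square, Color view) {
    return view == WHITE ? square : mirrorRank(square);
}

// Concatenate both halves, side to move first (assumed order). The dense
// output layer can then tell "my pieces" from "their pieces", which is what
// gives it a notion of tempo.
inline std::array<int16_t, 2 * HIDDEN> concatBySideToMove(const Half& white,
                                                          const Half& black,
                                                          Color       sideToMove) {
    const Half& first  = sideToMove == WHITE ? white : black;
    const Half& second = sideToMove == WHITE ? black : white;

    std::array<int16_t, 2 * HIDDEN> out{};
    for (int i = 0; i < HIDDEN; i++) {
        out[i]          = first[i];
        out[HIDDEN + i] = second[i];
    }
    return out;
}
```

Swapping the side to move swaps the two halves of the concatenated vector, so the same position is evaluated differently depending on who is to play.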

Koivisto uses 12288 inputs per side, which equals 16 x 768. The 768 corresponds to 12 x 64, which is required to have a single input for each piece on each square of the board. The point of doing this 16 times is that we choose a different subset of inputs based on where our own king stands. In other words, the 12288 inputs of one side can be broken down into 16 chunks, of which exactly one is chosen based on the king's position. In order to avoid overfitting during training, the chunks are laid out as given by the `indices` table in `kingSquareIndex` further below.

The chunks are mirrored across the vertical axis of the board, so files a to d share their chunks with files h to e. Near the base rank every king square in one half of the board has its own chunk, which allows the network to get a good feeling for king safety and piece-king relations. If the king moves up the board, several squares share one chunk, so some generalization happens.
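The input count can be checked with a few lines of arithmetic. The sketch below is only an illustration: the constant names and the helper `chunkRange` are hypothetical and do not appear in Koivisto. It relies on the chunk index being the highest-order term of the input index, as in the `index` function listed later.

```cpp
#include <cassert>
#include <utility>

constexpr int SQUARES      = 64;
constexpr int PIECE_TYPES  = 6;
constexpr int PIECE_COLORS = 2;
constexpr int KING_CHUNKS  = 16;

constexpr int CHUNK_SIZE      = SQUARES * PIECE_TYPES * PIECE_COLORS;    // 12 x 64
constexpr int INPUTS_PER_SIDE = CHUNK_SIZE * KING_CHUNKS;                // 16 x 768

static_assert(CHUNK_SIZE == 768, "one input per piece and square");
static_assert(INPUTS_PER_SIDE == 12288, "16 king chunks of 768 inputs each");

// Half-open range [first, last) of the input indices that belong to one chunk.
inline std::pair<int, int> chunkRange(int chunk) {
    assert(chunk >= 0 && chunk < KING_CHUNKS);
    return {chunk * CHUNK_SIZE, (chunk + 1) * CHUNK_SIZE};
}
```

For example, the `indices` table maps a white king on e1 to chunk 3 (assuming a1 = 0), so `chunkRange(3)` gives the range 2304 to 3072: only inputs in that 768-wide window can be active for White's perspective while the king stays there.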

It's also worth mentioning that the author of ScorpioNN, Daniel Shawul, independently came up with a similar idea for his engine. He kindly pointed this out in the TCEC chat and we felt the need to mention it. The mirroring technique itself has been part of Koivisto since the early stages of its hand-crafted evaluation.

The entire indexing for a given piece on a certain square viewed from a specific side looks like this:

    int nn::Evaluator::index(bb::PieceType pieceType,
                             bb::Color     pieceColor,
                             bb::Square    square,
                             bb::Color     view,
                             bb::Square    kingSquare) {
        constexpr int pieceTypeFactor  = 64;
        constexpr int pieceColorFactor = 64 * 6;
        constexpr int kingSquareFactor = 64 * 6 * 2;

        const bool kingSide = fileIndex(kingSquare) > 3;
        const int  ksIndex  = kingSquareIndex(kingSquare, view);

        Square relativeSquare = view == WHITE ? square : mirrorVertically(square);

        if (kingSide) {
            relativeSquare = mirrorHorizontally(relativeSquare);
        }

        return relativeSquare
               + pieceType * pieceTypeFactor
               + (pieceColor == view) * pieceColorFactor
               + ksIndex * kingSquareFactor;
    }
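To make the indexing concrete, here is a standalone sketch that mirrors the logic of `index` with plain integers. It is not the engine's code: the helper names (`fileOf`, `flipRank`, `flipFile`, `kingChunk`, `inputIndex`), the square numbering (a1 = 0, h8 = 63), the XOR-based mirroring and the piece numbering (pawn = 0 ... king = 5) are assumptions. The chunk table is copied from `kingSquareIndex`.

```cpp
#include <cassert>

enum Color { WHITE = 0, BLACK = 1 };

// Assumed square layout: a1 = 0, b1 = 1, ..., h8 = 63.
inline int fileOf(int sq)   { return sq & 7; }
inline int flipRank(int sq) { return sq ^ 56; }   // a1 <-> a8
inline int flipFile(int sq) { return sq ^ 7; }    // a1 <-> h1

// King chunk for the viewing side, using the table from kingSquareIndex.
inline int kingChunk(int kingSquare, Color view) {
    static constexpr int indices[64] = {
        0,  1,  2,  3,  3,  2,  1,  0,
        4,  5,  6,  7,  7,  6,  5,  4,
        8,  9,  10, 11, 11, 10, 9,  8,
        8,  9,  10, 11, 11, 10, 9,  8,
        12, 12, 13, 13, 13, 13, 12, 12,
        12, 12, 13, 13, 13, 13, 12, 12,
        14, 14, 15, 15, 15, 15, 14, 14,
        14, 14, 15, 15, 15, 15, 14, 14,
    };
    return indices[view == WHITE ? kingSquare : flipRank(kingSquare)];
}

// Input index of one piece as seen from `view`, whose king is on `kingSquare`.
inline int inputIndex(int pieceType, Color pieceColor, int square,
                      Color view, int kingSquare) {
    assert(pieceType >= 0 && pieceType < 6);
    assert(square >= 0 && square < 64);
    assert(kingSquare >= 0 && kingSquare < 64);

    int relativeSquare = view == WHITE ? square : flipRank(square);
    if (fileOf(kingSquare) > 3) {
        // king on files e-h: mirror so that it appears on files a-d
        relativeSquare = flipFile(relativeSquare);
    }
    return relativeSquare
           + pieceType * 64
           + (pieceColor == view) * 64 * 6
           + kingChunk(kingSquare, view) * 64 * 6 * 2;
}
```

Under these assumptions, a white knight on g1 with the white king on e1 gets the same index (2753) as a white knight on b1 with the king on d1, and as a black knight on b8 with the black king on d8 when seen from Black's view. Positions that are mirror images of each other therefore train the same weights.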

    int nn::Evaluator::kingSquareIndex(bb::Square relativeKingSquare, bb::Color kingColor) {
        // return zero if there is no king on the board yet ->
        // requires manual reset
        if (relativeKingSquare > 63) return 0;

        constexpr int indices[N_SQUARES]{
            0,  1,  2,  3,  3,  2,  1,  0,
            4,  5,  6,  7,  7,  6,  5,  4,
            8,  9,  10, 11, 11, 10, 9,  8,
            8,  9,  10, 11, 11, 10, 9,  8,
            12, 12, 13, 13, 13, 13, 12, 12,
            12, 12, 13, 13, 13, 13, 12, 12,
            14, 14, 15, 15, 15, 15, 14, 14,
            14, 14, 15, 15, 15, 15, 14, 14,
        };

        if (kingColor == BLACK) {
            relativeKingSquare = mirrorVertically(relativeKingSquare);
        }
        return indices[relativeKingSquare];
    }

Since the hidden activation depends on the side to move, we keep track of two accumulators at a time. With every move, we update both accumulators. Final evaluation only requires the computation of the last layer instead of all layers inside the network. For setting/unsetting a piece on the board, we use the following function:

    template<bool value>
    void nn::Evaluator::setPieceOnSquare(bb::PieceType pieceType, bb::Color pieceColor,
                                         bb::Square square) {
        // note: index() as listed above also takes the king square of the
        // viewing side; that argument is not shown in this snippet
        int idxWhite = index(pieceType, pieceColor, square, WHITE);

        // nothing to do if the input already has the requested state
        if (inputMap[idxWhite] == value)
            return;
        inputMap[idxWhite] = value;

        int idxBlack = index(pieceType, pieceColor, square, BLACK);
        int idx[N_COLORS]{idxWhite, idxBlack};

        // update the accumulator of each perspective
        for (Color c : {WHITE, BLACK}) {
            auto wgt = (__m256i*) (inputWeights[idx[c]]);
            auto sum = (__m256i*) (summation[c]);
            if constexpr (value) {
                // set: add the weights of this input
                for (int i = 0; i < HIDDEN_SIZE / STRIDE_16_BIT; i++) {
                    sum[i] = _mm256_add_epi16(sum[i], wgt[i]);
                }
            } else {
                // unset: subtract the weights of this input
                for (int i = 0; i < HIDDEN_SIZE / STRIDE_16_BIT; i++) {
                    sum[i] = _mm256_sub_epi16(sum[i], wgt[i]);
                }
            }
        }
    }

An input map (`inputMap`) records which inputs are currently set, which prevents us from setting or unsetting the same input twice.

When setting an input, we add the weights connecting that input to the hidden layer to the accumulator (`summation`). Since we use integers, this operation is exact, and unsetting an input reverts the setting without any loss of accuracy.
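The same idea without SIMD, as a hedged scalar sketch rather than the engine's implementation: the struct `TinyAccumulator`, its member names and the small `INPUTS` and `HIDDEN` sizes are made up for illustration, and the values are assumed to stay within the 16-bit range.

```cpp
#include <array>
#include <cassert>
#include <cstdint>

constexpr int INPUTS = 8;    // tiny sizes, for illustration only
constexpr int HIDDEN = 4;

struct TinyAccumulator {
    std::array<std::array<int16_t, HIDDEN>, INPUTS> weights{};    // one row per input
    std::array<bool, INPUTS>                        active{};     // plays the role of the input map
    std::array<int16_t, HIDDEN>                     acc{};        // running sum

    // Set (Set = true) or unset (Set = false) a single input.
    template<bool Set>
    void setInput(int input) {
        assert(input >= 0 && input < INPUTS);

        // guard: an input that already has the requested state is skipped,
        // so its weights are never added or subtracted twice
        if (active[input] == Set)
            return;
        active[input] = Set;

        for (int i = 0; i < HIDDEN; i++) {
            const int delta = Set ? weights[input][i] : -weights[input][i];
            acc[i]          = static_cast<int16_t>(acc[i] + delta);
        }
    }
};
```

Because only integer additions and subtractions are involved, setting an input and unsetting it again restores the accumulator bit for bit, which would not be guaranteed with floating-point sums.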

Since network training uses floating-point operations, the weights need to be converted to integers first. For the input layer, we use 16-bit integer weights and a 16-bit integer bias. Updating the accumulator incrementally means that the accumulator is also 16-bit. To prevent overflow, the last layer uses 16-bit weights but performs a fused multiply-add into a 32-bit value. The output bias is also 32-bit. The conversion from floating-point values to integers happens when the network is loaded at the start of the program.
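A scalar sketch of this quantization scheme may help. It is an illustration under assumptions, not the engine's code: the helper names `quantize16` and `outputLayer` are hypothetical, both multipliers are taken as 256 to match the defines in the loading code, the hidden activation is assumed to be a ReLU, and the final result is assumed to be scaled back by dividing by both multipliers.

```cpp
#include <cassert>
#include <cmath>
#include <cstdint>

constexpr int INPUT_MULT  = 256;    // scale of first-layer weights and bias
constexpr int HIDDEN_MULT = 256;    // scale of output-layer weights

// Convert a trained float value to a 16-bit integer at the given scale.
inline int16_t quantize16(float value, int multiplier) {
    assert(std::abs(value) < 32768.0f / multiplier);    // must fit into 16 bits
    return static_cast<int16_t>(std::lround(value * multiplier));
}

// Output layer: 16-bit activations times 16-bit weights, summed in 32 bits.
// `bias` is expected at scale INPUT_MULT * HIDDEN_MULT, the same scale as
// the products, so everything can be added before scaling back.
inline int32_t outputLayer(const int16_t* acc, const int16_t* weights, int n, int32_t bias) {
    int32_t sum = bias;
    for (int i = 0; i < n; i++) {
        const int32_t activation = acc[i] > 0 ? acc[i] : 0;    // assumed ReLU
        sum += activation * static_cast<int32_t>(weights[i]);  // 32-bit product
    }
    return sum / (INPUT_MULT * HIDDEN_MULT);
}
```

As a worked example, a hidden value of 2.0 is stored as 512 and a weight of 1.5 as 384. With a bias of 1.0 (stored as 65536) the sum is 65536 + 512 * 384 = 262144, and dividing by 256 * 256 gives 4, which matches 2.0 * 1.5 + 1.0 in floating point. The product 512 * 384 already exceeds the 16-bit range, which is why the sum has to be kept in 32 bits.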

We use separate multipliers for the weights/bias of the first layer and the weights/bias of the second layer:

    #define INPUT_WEIGHT_MULTIPLIER  (256)
    #define HIDDEN_WEIGHT_MULTIPLIER (256)

    // read input-layer weights (16 bit)
    for (int i = 0; i < INPUT_SIZE; i++) {
        for (int o = 0; o < HIDDEN_SIZE; o++) {
            float value = data[memoryIndex++];
            UCI_ASSERT(std::abs(value) < ((1ull << 15) / INPUT_WEIGHT_MULTIPLIER));
            inputWeights[i][o] = round(value * INPUT_WEIGHT_MULTIPLIER);
        }
    }

    // read input-layer bias (16 bit)
    for (int o = 0; o < HIDDEN_SIZE; o++) {
        float value = data[memoryIndex++];
        UCI_ASSERT(std::abs(value) < ((1ull << 15) / INPUT_WEIGHT_MULTIPLIER));
        inputBias[o] = round(value * INPUT_WEIGHT_MULTIPLIER);
    }

    // read output-layer weights (16 bit)
    for (int o = 0; o < OUTPUT_SIZE; o++) {
        for (int i = 0; i < HIDDEN_SIZE; i++) {
            float value = data[memoryIndex++];
            UCI_ASSERT(std::abs(value) < ((1ull << 15) / HIDDEN_WEIGHT_MULTIPLIER));
            hiddenWeights[o][i] = round(value * HIDDEN_WEIGHT_MULTIPLIER);
        }
    }

    // read output-layer bias (32 bit, scaled by both multipliers)
    for (int o = 0; o < OUTPUT_SIZE; o++) {
        float value = data[memoryIndex++];
        UCI_ASSERT(std::abs(value) < ((1ull << 31) / HIDDEN_WEIGHT_MULTIPLIER / INPUT_WEIGHT_MULTIPLIER));
        hiddenBias[o] = round(value
                              * HIDDEN_WEIGHT_MULTIPLIER
                              * INPUT_WEIGHT_MULTIPLIER);