New issue

Have a question about this project? Sign up for a free GitHub account to open an issue and contact its maintainers and the community.

By clicking “Sign up for GitHub”, you agree to our terms of service and privacy statement. We’ll occasionally send you account related emails.

Already on GitHub? Sign in to your account

compute: use drop_dataflow

#18442

base: main

Are you sure you want to change the base?

compute: use drop_dataflow

#18442

Conversation

050232f

to

9f2be75

Compare

|

My initial test on staging had two flaws:

Because of these issues, I ran a second test with the following changes:

Apart from that I also increased the number of replica processes to increase the chance of finding issues with the inter-process communication. This is the new replica configuration:

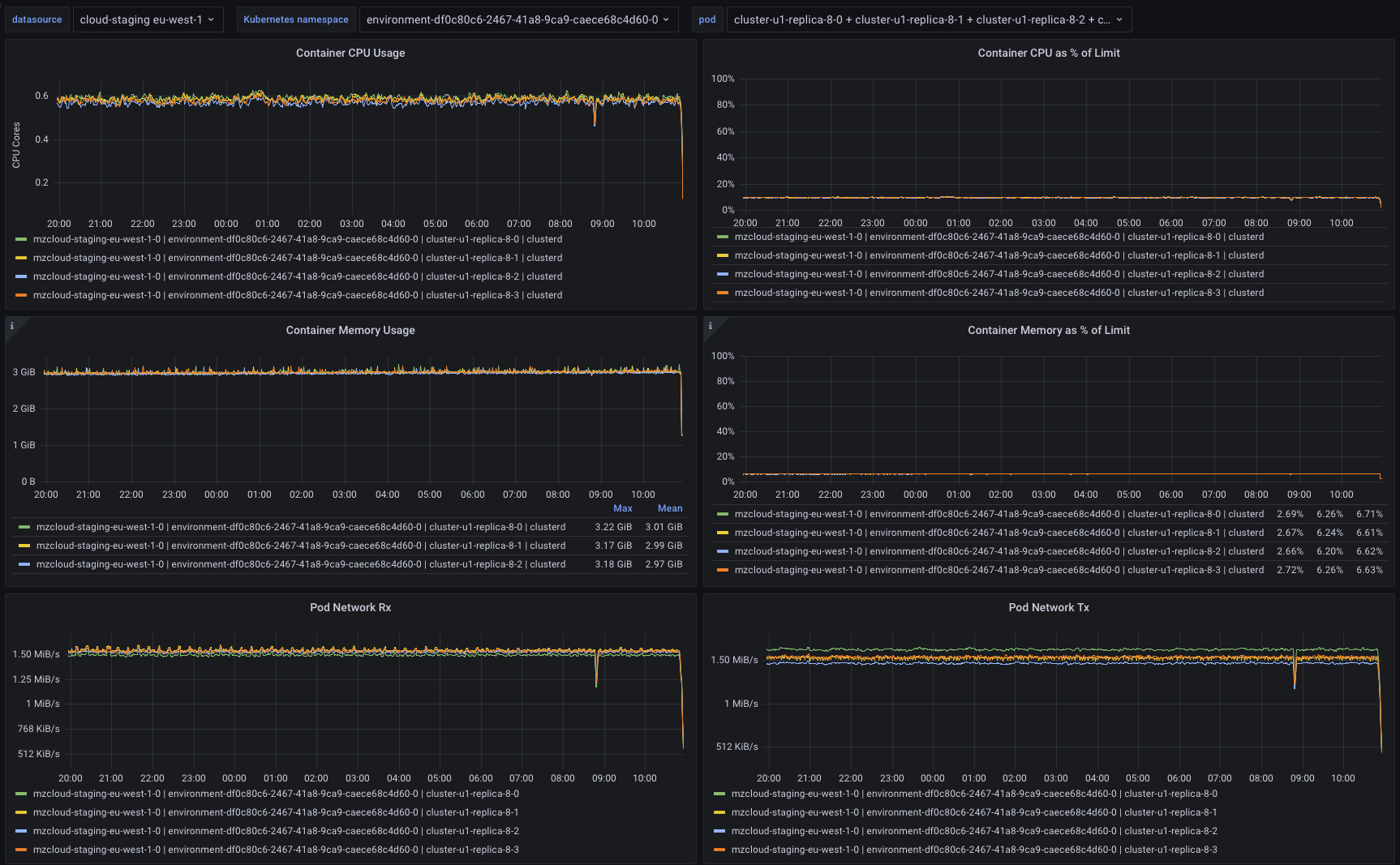

After 18 hours, 123000 dataflows have been created and dropped. As with the first test, none of the replica pods crashed or produced errors, and resource usage looks stable throughout the entire time frame: (The stray green line is me switching on memory profiling.) |

Between 2673e8c and ff516c8, drop_dataflow appears to be working well, based on testing in Materialize (MaterializeInc/materialize#18442). So remove the "public beta" warning from the docstring. Fix TimelyDataflow#306.

f40e78b

to

a9420f1

Compare

This commit moves some code around in preparation for adding support for active dataflow cancellation. These changes are not required, but they slighlty improve readability. * Add a `ComputeState` constructor method. This allows us to make some of the `ComputeState` fields immediately private. * Factor out collection dropping code from `handle_allow_compaction` into a `drop_collection` method.

This commit patches timely to get drop-safetly for reachability log events (TimelyDataflow/timely-dataflow#517). We need to revert this before we can merge.

This commit implements active dataflow cancellation in compute, by invoking timely's `drop_dataflow` method when a dataflow is allowed to compact to the empty frontier.

This commit adds a test verifying that active dataflow cancellation actually works. It does so by installing a divergent dataflow, dropping it and then checking the introspection sources to ensure it doesn't exist anymore.

|

I would like to stress test this with the RQG. @teskje please let me know when the time will be right to do this. |

|

@philip-stoev The time is right now, please go ahead! Note that you have to set the |

|

Item No 1. This situation does not produce a cancellation:

|

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

I pushed extensions to your testdrive test, please take them along for the ride to main.

As a separate effort, in order to complement your test, I created a stress test around a divergent WMR dataflow plus INSERT ... SELECT and SUBSCRIBE cursors. Unfortunately:

INSERT ... SELECTs run only one statement at a time- cursors cause CRDB to just consume CPU like crazy

- there must be some other bottleneck in the adapter because even

SETqueries are slow under load.

A size 8-8 cluster was used.

So I do not think I was able to drive as much concurrency as one would want. Either way, there were dozens of active subscribe dataflows in the system (and all of them would be subject to forcible cancellation) There were no panics , deadlocks or obvious memory leaks.

I'm using this PR to track testing of

drop_dataflowfor dropping dataflows in COMPUTE.Testing

ComputeLogDataflowCurrent(retracted inhandle_allow_compaction)DataflowDependency(retracted inhandle_allow_compaction)FrontierCurrent(retracted inhandle_allow_compaction)ImportFrontierCurrent(fixed by compute: make import frontier logging drop-safe #18531)FrontierDelay(retracted inhandle_allow_compaction)PeekCurrent(retracted when peek is served or canceled)PeekDuration(never retracted)TimelyLogOperates(shutdown event emitted inDropimpls for operators and dataflows)Channels(retracted on dataflow shutdown)Elapsed(retracted on operator retraction)Histogram(retracted on operator retraction)Addresses(retracted on operator or channel retraction)Parks(never retracted)MessagesSent(retracted on channel retraction)MessagesReceived(retracted on channel retraction)Reachability(fixed by Drop implementation for Tracker TimelyDataflow/timely-dataflow#517)DifferentialLogArrangementBatches(DD emits drop events in theSpineDropimpl)ArrangementRecords(DD emits drop events in theSpineDropimpl)Sharing(DD emits retraction events in theTraceAgentDropimpl)drop_dataflow#18442 (comment)drop_dataflow#18442 (comment)Motivation

Advances #2392.

Tips for reviewer

Checklist

$T ⇔ Proto$Tmapping (possibly in a backwards-incompatible way) and therefore is tagged with aT-protolabel.