
BufferingLatencyAndTimingImplementationGuidelines

UNDER CONSTRUCTION

DRAFT

Contributors: Ross Bencina, Phil Burk, Dmitry Kostjuchenko

These guidelines are draft.

- Input latency implementation details aren't addressed yet

- No explicit consideration of blocking i/o latency

- Everything is subject to change following feedback from the community

- People familiar with individual native audio APIs and host API implementations need to provide feedback on the last section that lists details of each host API.

This document is primarily intended for PortAudio host API implementors. It provides guidance for implementing and interpreting PortAudio latency, timing and buffering parameters and relating these to various host API constructs. Developers using PortAudio may also find the material useful, especially the first section of the document describing the latency and timing API from the user's perspective, and the final section, describing attributes of individual host APIs.

PortAudio latency and timing parameters can be considered from either the perspective of the user or the implementor. We first consider the user model as context for understanding the implementation model. We then consider the implementation model, which supports the user model and unifies PortAudio's behavior across a diverse range of Host API buffering and latency schemes.

PortAudio uses a high-level latency model designed to be usable across all host APIs. It is based on the principle of specifying latencies in seconds (using double precision floating point values).

The user model employs three types of latency values:

- PortAudio-reported default high and low latency values, returned by Pa_GetDeviceInfo() in a PaDeviceInfo struct.

- User-specified suggested latency parameters, passed to Pa_OpenStream() in PaStreamParameters structs.

- PortAudio-reported actual known latency values, available after a stream has been opened and returned by Pa_GetStreamInfo() in a PaStreamInfo struct.

(1) Default latency values are provided as hints from the host API to the user about appropriate Pa_OpenStream() suggestedLatency parameters for particular use cases. "Default" here means an appropriate starting value for a user interface slider that the end user may then tune for best performance on their particular system. The definition of PortAudio-supplied defaults is necessarily vague, since the final latency may depend on Pa_OpenStream() parameters such as framesPerBuffer and sampleRate. Nevertheless, PortAudio seeks to report workable default values that should result in glitch-free operation. Both "high" and "low" latency defaults are provided to support different use cases. These are described in more detail in the next section.

A user opening a half-duplex stream specifying a default*Latency parameter, default sample rate and paFramesPerBufferUnspecified as the framesPerBuffer parameter should reasonably expect the suggested latency to be honoured. In other situations the final latency may differ from the suggested latency, as explained later.

(2) Suggested latency parameters to Pa_OpenStream() are the latencies requested by the user when a stream is opened. These may range from 0 to infinity. PortAudio will limit the actual latency to within implementable, although not necessarily workable, bounds. For example, PortAudio allows the user to request latencies down to zero, in which case PortAudio will seek to reduce latency as much as technically possible, even if this leads to unreliable audio. If there are internal constraints that limit the available latency values, such as restrictions on buffer granularity, PortAudio will round up from the suggested latency to the next valid buffer size value. PortAudio accommodates constraints or limits enforced by the host API (e.g. ASIO or JACK may require a fixed buffer size that limits the latency to a single value). Such limits may affect actual latency; however, they don't constrain the user's buffer size, because PortAudio will adapt between different host and user buffer sizes where necessary.
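The rounding and clamping described above can be sketched as follows. This is a minimal illustration, not PortAudio code: it assumes a hypothetical host that accepts buffer sizes only in multiples of a fixed granularity and within a minimum/maximum range. `HostBufferConstraints`, `secondsToFramesCeil()` and `chooseHostBufferFrames()` are names invented for this example.

```c
#include <assert.h>

/* Hypothetical host constraints, in sample frames. minFrames and
   maxFrames are assumed to be multiples of granularityFrames. */
typedef struct {
    long granularityFrames; /* host buffers must be a multiple of this */
    long minFrames;         /* smallest buffer the host accepts */
    long maxFrames;         /* largest buffer the host accepts */
} HostBufferConstraints;

/* Convert seconds to frames, rounding up. The 1e-6 frame tolerance
   stops a value that lands a hair above a whole number because of
   floating-point error from gaining an extra frame. */
long secondsToFramesCeil(double seconds, double sampleRate)
{
    double frames;
    long whole;

    assert(sampleRate > 0.0);
    if (seconds <= 0.0)
        return 0;
    frames = seconds * sampleRate;
    whole = (long)frames;              /* truncates; frames is positive */
    if (frames - (double)whole > 1e-6)
        whole += 1;
    return whole;
}

/* Round the suggested latency up to the next valid buffer size, then
   clamp it to the host's limits. A request of 0 yields the minimum
   buffer; a request above maxFrames yields less latency than asked. */
long chooseHostBufferFrames(double suggestedLatency, double sampleRate,
                            const HostBufferConstraints *c)
{
    long g = c->granularityFrames;
    long frames;

    assert(g > 0);
    frames = secondsToFramesCeil(suggestedLatency, sampleRate);
    frames = ((frames + g - 1) / g) * g;   /* round up to granularity */
    if (frames < c->minFrames)
        frames = c->minFrames;
    if (frames > c->maxFrames)
        frames = c->maxFrames;
    return frames;
}
```

With a 64-frame granularity at 48000 Hz, a suggested latency of 0.005 s (240 frames) rounds up to 256 frames, a suggestion of 0 yields the 64-frame minimum, and a suggestion of 1 s is clamped to a 2048-frame maximum, i.e. less latency than was requested.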

(3) After a stream is opened PortAudio reports the actual latency of the stream via Pa_GetStreamInfo(), PaStreamInfo::inputLatency and PaStreamInfo::outputLatency. As discussed below, for input PortAudio reports the minimum input latency (callback invocation may be delayed, increasing effective latency), whereas for output, PortAudio reports the maximum output latency (callbacks may be delayed, reducing effective latency). Some PortAudio host API implementations are not able to determine the total latency of a stream. In such cases PortAudio reports the known latencies. (In future PA may seek to model unknown latencies but at present it does not.)

PortAudio reports two default latencies for each direction (input and output) of each device. The defaults are named "low" and "high" and loosely relate to the two use-cases described below.

PaDeviceInfo::defaultLow*Latency: a recommended value for Pa_OpenStream() suggestedLatency for use in interactive low-latency software (audio effects, instruments, etc.)

This is the host API's "preferred" or "default" latency value, if such a value is defined. It is not necessarily the lowest workable latency. For APIs without a preferred or default value this is a value that is generally understood to be stable on most systems for low-latency operation.

It is expected that software using these low latency values should not glitch on the kind of correctly tuned systems commonly used for interactive computer music (i.e. systems with good drivers, no bad driver issues, no overloaded USB buses, no rogue software etc). It is not always possible to predict the breakdown point where the latency is too low to function, therefore on some systems lower latency settings may be possible, while on other systems this value may be too low.

Some host APIs do not provide a true "low latency" mode, for example only offering minimum latencies in excess of 50ms. In these cases the defaultLow*Latency will represent a workable minimum latency rather than a true "low latency" setting.

Low latency operation may also have power-consumption implications due to the high frequency of audio callbacks.

PaDeviceInfo::defaultHigh*Latency: a recommended value for Pa_OpenStream() suggestedLatency for use in software where latency is not critical (e.g. playing sound files). This is a "safe" value that should mask all expected sources of glitch-inducing jitter and transient delay in most systems. Note that if the host API doesn't support a high latency mode, this value may be the same as the low latency default.

Using high latency values may in some cases reduce power-consumption due to a lower frequency of audio callbacks.

PaStreamParameters::suggestedLatency is intended to be a useful user-tunable parameter. From the user's perspective, the final actual output latency of the stream should closely match the suggested output latency parameter to Pa_OpenStream(). Where possible the host API should configure internal buffering as close as possible to, but no lower than, suggestedLatency. In practice this is not always possible. The user should anticipate that in some cases there may be a significant difference between the suggested and configured latency and buffering, due to constraints imposed by individual devices and host APIs. For example, the output latency may be lower than requested if the device or host API limits the maximum host buffer size, or constrains the buffer to a fixed value. Similarly, for input the host may constrain the amount of buffering PortAudio can introduce.

The following user-supplied parameters to Pa_OpenStream() may affect actual latency: input and output suggestedLatency, sampleRate, framesPerBuffer, and host-API-specific flags. The framesPerBuffer user callback buffer size may be set to paFramesPerBufferUnspecified to give the implementation maximum freedom in selecting host buffer parameters. Opening a stream in half-duplex or full-duplex mode may result in different stream latencies, because PA sometimes needs to use extra buffering to ensure synchronisation between input and output.

The details of how the Pa_OpenStream parameters are mapped to host API specific values are private to each host API implementation and should not be relevant to the user. Later sections of this document describe unifying principles to align the behavior of individual implementations where possible.

The user's view of PortAudio buffering is relatively opaque. PortAudio arranges for the host API to invoke a user callback function to process audio in "callback buffers." The callback buffer size is often fixed, in which case the callbacks are (ideally, in principle) periodic. In cases where the callback buffer size may vary (paFramesPerBufferUnspecified) the callbacks may be aperiodic but time aligned to the number of sample frames elapsed since the last callback. (In practice, callback periodicity is often violated due to host API specific constraints, but it is assumed to hold for the purposes of the timing model.)
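The frame-count alignment of aperiodic callbacks can be expressed as a small computation. In this sketch (the function name is hypothetical, and a constant nominal sample rate is assumed) the idealised time of each callback is the time of the first callback plus the frames consumed by all earlier callbacks, divided by the sample rate.

```c
#include <assert.h>
#include <stddef.h>

/* Fill idealTimes[i] with the idealised invocation time of callback i,
   given the frame count of every callback. Callback i is due once all
   frames of the earlier callbacks have elapsed at the nominal rate. */
void idealisedCallbackTimes(double firstCallbackTime, double sampleRate,
                            const long *framesPerCallback, size_t count,
                            double *idealTimes)
{
    long framesElapsed = 0;
    size_t i;

    assert(sampleRate > 0.0);
    for (i = 0; i < count; ++i) {
        idealTimes[i] = firstCallbackTime + (double)framesElapsed / sampleRate;
        framesElapsed += framesPerCallback[i];
    }
}
```

For callbacks of 256, 512 and 256 frames at a deliberately small nominal rate of 1024 Hz (chosen so the arithmetic is exact), starting at t = 10 s, the idealised times are 10, 10.25 and 10.75 s.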

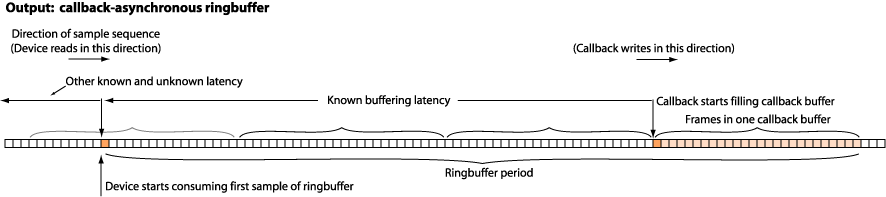

In the output case the user fills the callback buffer which is then passed via the host API to the audio driver and hardware. Some time later the DAC outputs the samples. PortAudio defines the time from the idealised callback time, to when the first sample of the callback buffer appears at the analog output of the DAC as the "actual output latency." The actual output latency corresponds to the distance between the two orange samples highlighted in the diagram below, which shows a snapshot of buffer state at a moment in time. The callback fills the buffer ahead of the location where the DAC reads:

As suggested by the diagram above, some portion of the latency may be unknown. The accuracy of PortAudio's latency reporting is dependent on the host API. PortAudio provides access to the known input and output latencies in the PaStreamInfo::inputLatency and PaStreamInfo::outputLatency fields returned by Pa_GetStreamInfo(). Often we treat the known latency as synonymous with the true actual latency.

When the callback is invoked, a PaStreamCallbackTimeInfo structure containing timing information is passed to the callback. PaStreamCallbackTimeInfo::currentTime indicates the actual callback invocation time. PaStreamCallbackTimeInfo::outputBufferDacTime indicates the time at which the first sample of the callback buffer will reach the DAC (the idealised callback invocation time plus the known actual output latency). Similarly for input, PaStreamCallbackTimeInfo::inputBufferAdcTime indicates the time at which the first sample of the callback buffer was captured by the ADC (the idealised callback invocation time minus the known actual input latency).
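The relationships above can be sketched as a small function, assuming the implementation already knows the idealised callback time and the known latencies in seconds. `TimeInfoSketch` is a stand-in defined here with the same three field names as PaStreamCallbackTimeInfo, so that the example compiles without PortAudio; real host API implementations may derive these values differently.

```c
#include <assert.h>

/* Stand-in with the same field names as PaStreamCallbackTimeInfo. */
typedef struct {
    double inputBufferAdcTime;
    double currentTime;
    double outputBufferDacTime;
} TimeInfoSketch;

TimeInfoSketch makeTimeInfo(double idealisedCallbackTime,
                            double actualCallbackTime,
                            double knownInputLatency,
                            double knownOutputLatency)
{
    TimeInfoSketch ti;

    /* When the callback really runs (may be later than ideal). */
    ti.currentTime = actualCallbackTime;
    /* First input sample entered the ADC this long before the ideal time. */
    ti.inputBufferAdcTime = idealisedCallbackTime - knownInputLatency;
    /* First output sample reaches the DAC this long after the ideal time. */
    ti.outputBufferDacTime = idealisedCallbackTime + knownOutputLatency;
    return ti;
}
```

Note that both buffer timestamps are anchored to the idealised time, not to currentTime, so a late callback does not shift them.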

Keep in mind that the actual output latency is an idealised value based on perfectly scheduled, periodic callbacks. In practice, callback jitter may cause callbacks to be invoked later than the idealised time. The diagram above distinguishes between the idealised and actual callback execution time, showing the actual callback time occurring later (closer to the DAC playback position) than ideal.

Two reasons the actual callback invocation time may deviate from ideal are (1) processing overhead and (2) system scheduling jitter. A third is buffer size adaption mechanisms, which aren't considered here.

With regard to overhead, PortAudio may need to perform some processing prior to invoking the user callback. This is illustrated on the timeline below:

The timeline illustrates idealised callback invocation events and callback execution duration over time. The coloured bar indicates the maximum theoretical timespan of a host callback for a single callback buffer. The light blue/green segment is the portion spent by the PortAudio user callback. The dark blue segments indicate time consumed by PortAudio prior to and following the user callback.

As implied by the previous diagram, PortAudio weakly assumes that internal buffering is configured so that the user callback may consume most of the available CPU time during a callback period. Put another way: the time taken to process N samples is allowed to approach the time taken to play or record N samples. The user should understand that this is a hypothetical limit. PortAudio and system processing and scheduling overhead reduces this number in stream-, device-, and system-dependent ways.

In addition to processing overhead, system scheduling jitter is another major contributor to the difference between idealised and actual callback invocation time.

The diagram above shows how scheduling jitter and processing overhead both delay the callback invocation time and reduce available callback execution duration. In some cases PortAudio or the host API may compensate for scheduling jitter by adding extra buffering allowing the callback to complete later.

Another way to think about idealised callback time and actual output latency is relative to a deadline when the device needs the buffer (marked on the diagram above). The idealised callback time is one buffer period prior to the deadline for delivering the buffer to the device. The actual callback may occur after that time.

Another assumption related to the "100% CPU utilisation" assumption is that when using a specified user callback buffer size, the actual latency of a stream is constrained to be no less than the duration of one user callback buffer period.
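The callback period, the CPU-load ratio implied by the "100% CPU utilisation" assumption, and the one-buffer minimum latency can be written down directly. The function names below are hypothetical; the code illustrates the timing model rather than any PortAudio implementation.

```c
#include <assert.h>

/* Duration of one user callback buffer, in seconds. */
double callbackPeriodSeconds(long framesPerBuffer, double sampleRate)
{
    assert(framesPerBuffer > 0 && sampleRate > 0.0);
    return (double)framesPerBuffer / sampleRate;
}

/* Fraction of the callback period consumed by processing. The model
   allows this to approach 1.0 in principle; real overhead and
   scheduling jitter lower the usable limit. */
double callbackCpuLoad(double processingSeconds, long framesPerBuffer,
                       double sampleRate)
{
    return processingSeconds / callbackPeriodSeconds(framesPerBuffer, sampleRate);
}

/* With a fixed user buffer size, latency is never less than one
   user buffer period. */
double applyMinimumLatencyConstraint(double latency, long framesPerBuffer,
                                     double sampleRate)
{
    double period = callbackPeriodSeconds(framesPerBuffer, sampleRate);
    return latency < period ? period : latency;
}
```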

Strict conformance to CPU utilisation and latency constraint assumptions is not guaranteed. The actual limit on callback CPU utilisation is usually dependent on factors outside PortAudio's control. PortAudio may also be constrained by other conflicting requirements. For example if the user specifies a fixed buffer size and the host API is unable to supply that buffer size, PortAudio may perform synchronous buffer adaption. This may lower the effective available CPU time and introduce scheduling jitter dependent on the ratio of the host and callback buffer periods.
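To see why the ratio of host and callback buffer periods matters, the following sketch counts how many user callbacks a simplified synchronous adapter would issue inside each host callback. It assumes that host frames simply accumulate and that a user callback fires whenever a full user buffer is available; PortAudio's actual buffer processor is more involved, and the function name is hypothetical.

```c
#include <assert.h>
#include <stddef.h>

/* For each of hostCallbackCount host callbacks of hostFrames frames,
   record how many user callbacks of userFrames frames can be issued.
   Leftover frames carry over to the next host callback. */
void userCallbacksPerHostCallback(long hostFrames, long userFrames,
                                  int *counts, size_t hostCallbackCount)
{
    long pending = 0;
    size_t i;

    assert(hostFrames > 0 && userFrames > 0);
    for (i = 0; i < hostCallbackCount; ++i) {
        pending += hostFrames;
        counts[i] = (int)(pending / userFrames);
        pending %= userFrames;
    }
}
```

With 480-frame host buffers and 256-frame user buffers the counts begin 1, 2, 2, 2, so user callbacks arrive in uneven bursts; with 512-frame host buffers every host callback issues exactly two.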

Even ignoring jitter potentially introduced by PortAudio host/user buffer size adaption, different host APIs have radically different scheduling and jitter behavior. This is one reason why it is often preferable to use paFramesPerBufferUnspecified for low latency operation as it gives PortAudio maximum flexibility in scheduling and dimensioning host buffers.

Thus far we have defined output latency as:

**Maximum output latency: The time from the idealised callback time, to when the first sample of the callback buffer appears at the analog output of the DAC.**

It is the maximum in the sense that it represents the idealised amount of buffering between the callback and the DAC: a late callback generates samples that will reach the DAC sooner. This output latency is both the latency we want to specify to increase stability and the latency we need to know to implement synchronisation. Therefore we can say:

**In the case of output latency, (1) the PaDeviceInfo::default*OutputLatency, (2) Pa_OpenStream() suggested output latency and (3) PaStreamInfo::outputLatency all refer to the maximum output latency.**

The situation is different for input. In this case we are interested in receiving samples as soon as they are available. No matter how much buffering we introduce, samples will always become available after some minimum time period.

Thus the PortAudio reported actual input latency represents the earliest or minimum latency:

**Minimum input latency: Travelling backwards in time: the time from the idealised callback time, to when the first sample of the callback buffer entered the ADC as an analog signal.**

Respecting causality you can see that idealised callback time will never be closer to the ADC capture time than this minimum input latency.

In spite of this definition of input latency, extra buffering is often added to account for scheduling delays in the processing of input buffers. This creates an asymmetry between our definition of default and suggested latencies on the one hand, and actual latencies on the other. For stability we are interested in specifying a maximum input latency before overflow, whereas for synchronisation we are interested in knowing the minimum input latency. Therefore the definitions of the PortAudio fields for input latency are:

**In the case of input latency, (1) the PaDeviceInfo::default*InputLatency and (2) Pa_OpenStream() suggested input latency refer to maximum input latency before overflow. (3) PaStreamInfo::inputLatency refers to the minimum input latency.**
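The asymmetry can be made concrete. Given a callback that runs some time after its idealised time, the effective output latency shrinks while the effective input latency grows. A minimal sketch with hypothetical function names:

```c
#include <assert.h>

/* Output: a late callback produces samples closer to the DAC deadline,
   so the effective latency is the reported (maximum) output latency
   minus the lateness. */
double effectiveOutputLatency(double reportedOutputLatency, double callbackLateness)
{
    assert(callbackLateness >= 0.0);
    return reportedOutputLatency - callbackLateness;
}

/* Input: a late callback receives samples that have waited longer,
   so the effective latency is the reported (minimum) input latency
   plus the lateness. */
double effectiveInputLatency(double reportedInputLatency, double callbackLateness)
{
    assert(callbackLateness >= 0.0);
    return reportedInputLatency + callbackLateness;
}
```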

As described in the previous section, PaStreamCallbackTimeInfo fields correlate the first samples in the input and output callback buffers to a monotonic clock time returned by Pa_GetStreamTime(). This provides a means to synchronise non-audio events with the audio sample stream.
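For example, to schedule a non-audio event within the output stream, a callback could convert the event's time into a frame offset relative to outputBufferDacTime. This sketch assumes the event time and outputBufferDacTime come from the same clock (Pa_GetStreamTime()) and that the nominal sample rate is accurate enough over one buffer; the function name is hypothetical.

```c
#include <assert.h>

/* Return the index of the output frame (within a buffer of
   framesPerBuffer frames) that will reach the DAC at eventTime,
   or -1 if the event falls outside this buffer. */
long outputFrameForEvent(double eventTime, double outputBufferDacTime,
                         double sampleRate, long framesPerBuffer)
{
    double offsetSeconds = eventTime - outputBufferDacTime;
    long frame;

    assert(sampleRate > 0.0);
    if (offsetSeconds < 0.0)
        return -1;                     /* belongs to an earlier buffer */
    frame = (long)(offsetSeconds * sampleRate + 0.5);  /* nearest frame */
    return frame < framesPerBuffer ? frame : -1;       /* or a later one */
}
```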

As with other aspects of PortAudio implementation, the quality of timing information returned by PortAudio is dependent on the host API. In the case of PaStreamCallbackTimeInfo, variability in timestamp quality may be due to the following factors:

- Granularity of the time base used by Pa_GetStreamTime()

- Accuracy and granularity of buffer timestamp information available from the host API

- Accuracy of known latency information, as this may be used to offset internal timestamps to DAC and ADC time

- Accuracy and availability of actual sample rate information used to convert latencies expressed in samples to seconds.

In future we may wish to document the actual timing behavior of each native audio API and PortAudio Host-API implementation. For now we note that there is wide variability in timestamp quality across operating systems and native audio APIs. For this reason we recommend that PortAudio clients assess the timing behavior of their target Host-APIs relative to their application requirements, and deploy application-specific clock recovery or time smoothing algorithms as necessary.
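One simple time smoothing option, offered as an illustration rather than a PortAudio recommendation, is to predict each buffer's DAC time from the running frame count and nudge the prediction toward the reported outputBufferDacTime with a one-pole filter. `DacTimeSmoother` and its functions are hypothetical, and the smoothing coefficient is an assumption to be tuned per application.

```c
#include <assert.h>

typedef struct {
    double sampleRate; /* nominal rate used to advance the prediction */
    double alpha;      /* 0 < alpha <= 1: weight given to each new measurement */
    double predicted;  /* smoothed DAC time of the current buffer */
    int initialised;
} DacTimeSmoother;

void smootherInit(DacTimeSmoother *s, double sampleRate, double alpha)
{
    assert(sampleRate > 0.0 && alpha > 0.0 && alpha <= 1.0);
    s->sampleRate = sampleRate;
    s->alpha = alpha;
    s->predicted = 0.0;
    s->initialised = 0;
}

/* Feed the reported DAC time of each callback along with the number
   of frames in the previous buffer; returns the smoothed DAC time. */
double smootherUpdate(DacTimeSmoother *s, double reportedDacTime, long previousFrames)
{
    if (!s->initialised) {
        s->predicted = reportedDacTime;
        s->initialised = 1;
    } else {
        /* Advance by the previous buffer's duration, then correct a
           fraction of the error against the reported timestamp. */
        s->predicted += (double)previousFrames / s->sampleRate;
        s->predicted += s->alpha * (reportedDacTime - s->predicted);
    }
    return s->predicted;
}
```

This filter damps timestamp jitter but does not track drift between the nominal and actual sample rates; an application that needs that might estimate the rate as well (for example with a phase-locked loop or a linear fit over recent timestamps).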

See also: PaStreamCallbackTimeInfo Struct Reference

We now turn our attention to the implementation. Here we consider the meaning of public PortAudio parameters from the perspective of host API implementations. We also consider mechanisms of specific native audio APIs and how they interact with PortAudio parameters.

We are mainly concerned with the implementation of PortAudio default, suggested and actual latency values as described above. Interpretation of these values also requires consideration of sample rates, buffer sizes and native audio API buffering models.

Before considering how to interpret and generate user-visible latency parameters it is important to note that total known latency of a stream is the sum of many smaller latencies, including:

- User-space native audio API buffering latencies

- Reported device latencies (including hardware latencies, driver latencies, etc.)

- Known system component latencies (e.g. "KMixer has latency of 30ms on Windows XP")

- When a polling timer is involved it is sometimes possible or desirable to introduce a safety margin to accommodate timing jitter. Such a safety margin implies adding latency.

In some cases there may also be "adaption latencies" introduced by mechanisms employed by PortAudio:

- PA user to host callback buffer size adaption latency (usually 0). Only needed if PortAudio can't configure the host to use a buffer granularity that's an integer multiple of userFramesPerBuffer. The adaption latency is returned by PaUtil_GetBufferProcessorInputLatencyFrames() for input and PaUtil_GetBufferProcessorOutputLatencyFrames() for output.

- Full-duplex synchronisation/adaption buffer latency. This may be introduced when PA needs to use a ring-buffer to connect separate input and output streams. Not needed when the host API natively supports synchronous full-duplex operation.

- Sample rate conversion latency. E.g. the CoreAudio implementation uses a system component to perform sample rate conversion under some circumstances.

In general there are latencies that are fixed and known up-front, and those that can only be known once Pa_OpenStream() is called, for example:

- Some latencies may be expressed in sample frames, in which case the latency in seconds depends on the sample rate selected when the stream is opened.

- Some latencies defined above may depend on the user framesPerBuffer parameter or on native audio API buffer parameters. E.g. ASIO returns total stream latency only once the buffer size has been configured.

- Some latencies may not be available or known until a stream is opened (some adaption latencies, for example).
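Summing such mixed-unit components is straightforward once the sample rate is known. The sketch below (the `LatencyComponent` type and the function name are hypothetical) converts frame-based components at the stream's rate and adds them to components already expressed in seconds, which is why the same set of components yields different totals at different rates.

```c
#include <assert.h>
#include <stddef.h>

typedef enum { LATENCY_SECONDS, LATENCY_FRAMES } LatencyUnit;

/* One contribution to the total known latency of a stream. */
typedef struct {
    LatencyUnit unit;
    double value;
} LatencyComponent;

double totalKnownLatencySeconds(const LatencyComponent *components,
                                size_t count, double sampleRate)
{
    double total = 0.0;
    size_t i;

    assert(sampleRate > 0.0);
    for (i = 0; i < count; ++i) {
        if (components[i].unit == LATENCY_FRAMES)
            total += components[i].value / sampleRate; /* frames -> seconds */
        else
            total += components[i].value;              /* already seconds */
    }
    return total;
}
```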

As noted earlier, there may also be unknown latencies.

There is one fact that is used as the basis of much of the discussion below:

**Usually only "User-space native audio API buffering latencies" are directly adjustable by the host API implementation.**

Some native audio APIs do not provide a comprehensive latency reporting model. In these cases PortAudio may not know the actual latency. The current PortAudio policy is to underreport these latencies by only reporting known components of the latency. No attempt is made to estimate unknown latencies, although in future it may make sense to do this.

Some native audio APIs or drivers do not directly support synchronised full-duplex streams. In these cases it may be difficult for PortAudio to provide deterministic alignment between input and output streams in full-duplex operation. This may manifest as non-deterministic round-trip latency.

Some latency values are represented in frames, while others are represented in seconds. Conversion between these two units of measure requires multiplication or division by a sampling rate (in Hertz).

PortAudio streams have two explicit sample rates: the requested sample rate, passed to Pa_OpenStream() and the nominal actual sample rate reported in PaStreamInfo::sampleRate. These may differ if the native audio API has an accurate measure of the hardware sample rate available to return in PaStreamInfo::sampleRate. If the nominal actual sample rate is unavailable, the requested rate is used for all calculations. The reported actual sample rate may be "nominal" in the sense that it's a value fixed when the stream is opened and may not exactly match the actual sample rate of the running stream. (In reality there is also an actual time varying sample rate, but this is not reported by PortAudio).

PaDeviceInfo::default*Latency values are reported outside the context of a stream and therefore without an explicit sample rate.

The following rules should be used to determine the correct sample rate to use when converting between frames and seconds:

- For PaDeviceInfo default latencies, PaDeviceInfo::defaultSampleRate should be assumed as the implicit sample rate.

- For Pa_OpenStream() computations such as determining host buffer configuration, the nominal actual sample rate (PaStreamInfo::sampleRate) should be used when available. Otherwise the sampleRate parameter to Pa_OpenStream() should be used. "When available" acknowledges that a host API implementation may need to convert some values before it is able to access the nominal actual sample rate.

- For Pa_GetStreamInfo() computations such as reporting actual latency, the nominal actual sample rate (PaStreamInfo::sampleRate) should always be used.
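The three rules can be captured in a single selection function. This is a hypothetical helper, not a PortAudio API; it treats a nominal actual rate of zero or less as "not yet available".

```c
#include <assert.h>

typedef enum {
    CONTEXT_DEVICE_DEFAULTS, /* computing PaDeviceInfo default latencies */
    CONTEXT_OPEN_STREAM,     /* computations inside Pa_OpenStream() */
    CONTEXT_STREAM_INFO      /* reporting via Pa_GetStreamInfo() */
} ConversionContext;

/* Pick the sample rate to use when converting between frames and
   seconds. nominalActualRate <= 0 means it is not yet available. */
double conversionSampleRate(ConversionContext ctx, double defaultSampleRate,
                            double requestedRate, double nominalActualRate)
{
    switch (ctx) {
    case CONTEXT_DEVICE_DEFAULTS:
        return defaultSampleRate;
    case CONTEXT_OPEN_STREAM:
        return nominalActualRate > 0.0 ? nominalActualRate : requestedRate;
    case CONTEXT_STREAM_INFO:
    default:
        /* Once the stream is open the nominal actual rate is known
           (it falls back to the requested rate if nothing better is). */
        assert(nominalActualRate > 0.0);
        return nominalActualRate;
    }
}
```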

Later we describe the different native audio API buffering models in detail. These include:

- opaque callback buffer

- double buffer

- n-buffer scatter-gather

- callback-aperiodic ringbuffer

All of these models have different parameters for dimensioning the buffer set (for example, respectively: a buffer size, a buffer size with implicit buffer count of 2, a buffer size and count, and a large ringbuffer size that may be subdivided by a periodic timer). For the purpose of the discussion below we call these sizes and counts native audio API buffering parameters or just "buffering parameters."

Native audio APIs may place soft or hard constraints on buffering parameter values. For example they may have preferred, default or required values. They may constrain buffer sizes to powers of two, or multiples of some base granularity. The goal of a host API Pa_OpenStream() implementation is to select the "best" buffer parameters. However, this selection is not necessarily trivial.

For a given native audio API buffering model, native audio API buffering parameters determine the user-space native audio API buffering latency by applying some function:

nativeAudioApiBufferingLatency = computeNativeAudioApiBufferingLatencyFromBufferingParameters(nativeAudioApiBufferingParameters)

Note that in general there is no inverse mapping:

// doesn't exist:

nativeAudioApiBufferingParameters = computeNativeAudioApiBufferingParametersFromBufferingLatency(nativeAudioApiBufferingLatency)

One reason is that buffering parameters may be multi-valued (e.g. a size and a count). As a result, multiple buffering parameter values may represent the same latency. It is not desirable to force an (arbitrary) bijective mapping because buffer sizes interact with user framesPerBuffer, and it is desirable to select the best buffering parameters in consideration of framesPerBuffer (and other constraints). In general the choice of the best native audio API buffer parameters depends on Pa_OpenStream() parameters, particularly framesPerBuffer and sampleRate, but also potentially other parameters such as host-API-specific flags.
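As an illustration of why no inverse exists, suppose a hypothetical n-buffer model whose buffering latency is simply bufferCount * bufferFrames / sampleRate. This formula is an assumption made only for this sketch; the real per-model formulas are a separate matter. Under it, many (size, count) pairs produce the same latency, and an implementation must choose among them using framesPerBuffer and other constraints.

```c
#include <assert.h>
#include <stddef.h>

typedef struct {
    long bufferFrames;
    long bufferCount;
} NBufferParams;

/* Forward mapping under the assumed model. */
double bufferingLatencySeconds(NBufferParams p, double sampleRate)
{
    assert(sampleRate > 0.0);
    return (double)(p.bufferFrames * p.bufferCount) / sampleRate;
}

/* Write every (size, count) pair with count >= minCount whose total
   equals totalFrames; returns the number of pairs written. */
size_t paramsForTotalFrames(long totalFrames, long minCount,
                            NBufferParams *out, size_t maxOut)
{
    size_t n = 0;
    long count;

    assert(minCount >= 1);
    for (count = minCount; count <= totalFrames && n < maxOut; ++count) {
        if (totalFrames % count == 0) {
            out[n].bufferFrames = totalFrames / count;
            out[n].bufferCount = count;
            ++n;
        }
    }
    return n;
}
```

For 1024 total frames with at least two buffers there are ten candidate pairs (512 x 2, 256 x 4, ..., 1 x 1024), all with identical latency under this model.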

In the interests of a logical treatment we now consider PaDeviceInfo::default*Latency values. Note that a full understanding of default latency implementation requires understanding of the way PortAudio interprets suggestedLatency. Therefore you may wish to revisit this section after reading about suggestedLatency below.

PaDeviceInfo::default*Latency values represent hints from the host API to the user about appropriate latency values for different use cases. See the user-level model above for a user-level description of the default latencies. The user level description should be used to guide implementation in cases where the guidelines here are insufficient or inapplicable.

Some native audio APIs expose "default," "preferred," or fixed buffering parameters. The native audio API defaults may arise from the system, the driver, or in some cases, a driver-specific user interface. Where possible PaDeviceInfo::default*Latency values should be derived from these native audio API default parameters.

Individual native audio APIs are considered in more detail below during the discussion of suggestedLatency. For now we can state a general formula:

nativeAudioApiBufferingLatency = computeNativeAudioApiBufferingLatencyFromBufferingParameters(defaultNativeAudioApiBufferingParameters)

defaultLatency = nativeAudioApiBufferingLatency + fixedKnownDeviceAndSystemLatencies

Where defaultNativeAudioApiBufferingParameters are usually provided by the native audio API and fixedKnownDeviceAndSystemLatencies are computed or derived in a host-API specific manner (but see above and later for lists of possible latency sources).
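A minimal sketch of this formula, assuming a native API whose default buffering parameter is a single host buffer size in frames, with buffering latency bufferFrames / sampleRate (an assumed model), and fixed latencies already expressed in seconds. Following the guidance below, it could be called once with a preferred buffer size for the low default and once with a maximum buffer size for the high default. The function name is hypothetical.

```c
#include <assert.h>

double computeDefaultLatency(long defaultHostBufferFrames,
                             double defaultSampleRate,
                             double fixedKnownDeviceAndSystemLatencies)
{
    double nativeAudioApiBufferingLatency;

    assert(defaultHostBufferFrames > 0 && defaultSampleRate > 0.0);
    /* Assumed model: one host buffer of latency. */
    nativeAudioApiBufferingLatency =
        (double)defaultHostBufferFrames / defaultSampleRate;
    return nativeAudioApiBufferingLatency + fixedKnownDeviceAndSystemLatencies;
}
```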

At the time PaDeviceInfo::default*Latency values are computed, PortAudio doesn't know the sample rate or callback framesPerBuffer that the user will choose. Therefore:

- As stated earlier, default*Latency values must be computed under the assumption that the sample rate is PaDeviceInfo::defaultSampleRate.

- default*Latency values should be computed based on Pa_OpenStream() behavior when the user passes paFramesPerBufferUnspecified as the user framesPerBuffer parameter. (A corollary of this is that use of the paFramesPerBufferUnspecified parameter in combination with a default latency should usually result in the host API using the native audio API default or preferred host buffer parameters where they are available. See the next section for more on this.)

- default*Latency values should reflect native audio API default or preferred host buffer parameters. Furthermore, where possible:

  - defaultLowLatency should be derived from the "default" or "preferred" Host API value where available.

  - defaultHighLatency should be derived from an "upper limit" or "max" Host API value, where available.

- defaultLatency values should relate to the host API's "normal mode." This means that the defaultLatency should be valid without passing any special (possibly host-API-specific) flags to Pa_OpenStream(). For example, if a Host API implementation has a special "exclusive" low-latency mode that requires the user to specify a host-API-specific flag, this exclusive low latency value should not be returned as a default latency.

In cases where the native audio API supplies no default or preferred values, a suitable heuristic should be used both for choosing host API buffer parameters, and determining defaults. The heuristic may take into account, for example: anticipated scheduling jitter, known system buffering behavior, documented or experimentally established timing bounds.

As noted above, a number of parameters to Pa_OpenStream() can affect how the suggestedLatency parameter is interpreted. This makes it impossible to define how defaultLatency will be interpreted in all cases (without reference to all internal details and inputs to Pa_OpenStream().) The only strictly testable behavior is:

**Opening a half-duplex callback stream using Pa_OpenStream() parameters: framesPerBuffer=paFramesPerBufferUnspecified, sampleRate=PaDeviceInfo::defaultSampleRate and suggestedLatency=PaDeviceInfo::defaultHigh/Low*Latency with no host API-specific flags should result in a stream with reported actual latency that matches suggestedLatency, modulo any variation between the requested (Pa_OpenStream()) and nominal actual (PaStreamInfo) sample rate.**

Other scenarios such as full-duplex streams, user specified framesPerBuffer, non-default sample rates, or host API-specific modes may result in actual latency values that differ from the supplied suggestedLatency parameter. This may be due to host API implementation or device-specific behavior related to the process of selecting native buffer parameters and/or required adaption latencies.

We now turn to the task of deriving native audio API buffering parameters from user supplied suggestedLatency and other Pa_OpenStream() parameters.

We can consider the interpretation of suggestedLatency at three levels. At the most general level there is commonality in the way that suggestedLatency is interpreted by all host API implementations. At the level of different buffering models we can define specific formulas that should be used by all host API implementations using a particular buffering model. At the lowest level, individual host API implementations may need to employ specific heuristics to resolve buffering parameter constraints, suggested latency and user framesPerBuffer. Here we consider the most general level; later sections consider buffering models and host-API-specific details.

PaStreamParameters::suggestedLatency values represent the latency the user would like. The actual latency will be a combination of latencies that PA can affect and latencies that are dictated by the Host API, platform, and sometimes PA itself. Prior to computing host API buffering parameters such as buffer size and count, implementations should subtract all unchanging or computable minimum latencies from the user's suggestedLatency as follows:

targetNativeAudioApiBufferingLatency = suggestedLatency - fixedDeviceAndSystemLatencies - minimumKnownAdaptionLatencies;

targetNativeAudioApiBufferingLatency is then used to choose native audio API buffering parameters. To compute the actual stream latency, the latency resulting from the chosen buffering parameters is added to the device, system and actual adaption latencies.
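The two steps can be sketched end to end for a hypothetical host that takes a single buffer size in multiples of granularityFrames, with buffering latency bufferFrames / sampleRate (an assumed model). The function names are invented for illustration. A negative target (a suggestion below the fixed latencies) is clamped to zero, which then yields the smallest buffer the host accepts.

```c
#include <assert.h>

/* Seconds to frames, rounding up, ignoring floating-point residue. */
long secondsToFramesCeil(double seconds, double sampleRate)
{
    double frames;
    long whole;

    assert(sampleRate > 0.0);
    if (seconds <= 0.0)
        return 0;
    frames = seconds * sampleRate;
    whole = (long)frames;
    if (frames - (double)whole > 1e-6)
        whole += 1;
    return whole;
}

/* Returns the actual stream latency; the chosen host buffer size is
   written to *hostBufferFramesOut. */
double openAndReportLatency(double suggestedLatency, double sampleRate,
                            long granularityFrames,
                            double fixedDeviceAndSystemLatencies,
                            double minimumKnownAdaptionLatencies,
                            double actualAdaptionLatencies,
                            long *hostBufferFramesOut)
{
    double target = suggestedLatency - fixedDeviceAndSystemLatencies
                    - minimumKnownAdaptionLatencies;
    long frames;

    assert(granularityFrames > 0);
    if (target < 0.0)
        target = 0.0;
    frames = secondsToFramesCeil(target, sampleRate);
    frames = ((frames + granularityFrames - 1) / granularityFrames)
             * granularityFrames;
    if (frames < granularityFrames)
        frames = granularityFrames;            /* at least one buffer */
    *hostBufferFramesOut = frames;
    /* Buffering latency plus device/system and actual adaption latencies. */
    return (double)frames / sampleRate + fixedDeviceAndSystemLatencies
           + actualAdaptionLatencies;
}
```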

fixedDeviceAndSystemLatencies includes latencies mentioned earlier in the discussion of possible latency sources. We identify specific latency sources below in the sections for individual host APIs.

minimumKnownAdaptionLatencies is the lower bound on adaption latencies introduced by PortAudio. In many cases it will be zero. Sometimes it may be possible to compute a lower bound on adaption buffering between input and output callbacks in full duplex streams, or the delay introduced by a required sample rate conversion component.

Some system or adaption latencies may not be computable until after the buffer parameters have been chosen. However, implementations should seek to factor out as many known fixed latencies as possible in the computation of targetNativeAudioApiBufferingLatency. The more accurate this value is, the closer the final actual latency will be to the user's suggestedLatency.
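The subtraction above can be sketched in C as follows. This is a minimal sketch, assuming all latency inputs are held in seconds (as in the PortAudio API) and that the result is converted to frames for buffer sizing. TargetNativeBufferingLatencyFrames is a hypothetical name, and clamping at zero is an assumption: if fixed and adaption latencies already exceed suggestedLatency, there is no room left for native buffering and the buffering model's minimum sizes have to apply.

```c
#include <assert.h>

/* Hypothetical helper: derive targetNativeAudioApiBufferingLatency in frames.
   All latency inputs are in seconds; sampleRate is in Hz. */
static unsigned long TargetNativeBufferingLatencyFrames(
        double suggestedLatency,
        double fixedDeviceAndSystemLatencies,
        double minimumKnownAdaptionLatencies,
        double sampleRate )
{
    assert( sampleRate > 0.0 );

    double targetSeconds = suggestedLatency
            - fixedDeviceAndSystemLatencies
            - minimumKnownAdaptionLatencies;

    /* Assumption: clamp at zero rather than return a negative target. */
    if( targetSeconds < 0.0 )
        targetSeconds = 0.0;

    /* Round to the nearest whole frame (value is non-negative here). */
    return (unsigned long)( targetSeconds * sampleRate + 0.5 );
}
```

For example, a 100 ms suggestedLatency with 10 ms of fixed latency at 48 kHz leaves a target of 4320 frames for native buffering.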

When interpreting targetNativeAudioApiBufferingLatency (as derived from suggestedLatency in the previous section), there are two main cases to consider, depending on the value of framesPerBuffer passed to Pa_OpenStream():

1. paFramesPerBufferUnspecified
2. A user-specified framesPerBuffer value

(1) As noted above, with paFramesPerBufferUnspecified, a half-duplex stream with suggestedLatency of PaDeviceInfo::defaultLatency should result in a stream with actual latency of PaDeviceInfo::defaultLatency, modulo any variation between the requested and nominal actual sample rate. A full-duplex stream may vary from the suggested values due to adaption latencies. In practice this means that the following assertion should hold:

// assuming paFramesPerBufferUnspecified and default sample rate...
targetNativeAudioApiBufferingLatency = defaultLatency - fixedDeviceAndSystemLatencies - minimumKnownAdaptionLatencies;
targetNativeAudioApiBufferParameters = computeBufferParameters( targetNativeAudioApiBufferingLatency );
assert( targetNativeAudioApiBufferParameters == defaultOrPreferredNativeAudioApiBufferParameters );

(2) When a user specifies a framesPerBuffer value, the actual latency depends on the method used to select the native audio API buffering parameters. As we've already seen, this may result in an actual latency that is greater than, equal to or less than the suggested value. The buffering model or the native audio API may constrain buffer sizes in certain ways. At the extreme, when the native API requires a fixed host buffer size, variations in actual latency will be due only to changes in PortAudio buffer adaption latency for different userFramesPerBuffer values.

As implied earlier in the user model section, when userFramesPerBuffer is specified there is an expected lower bound on latency of:

expectedLowestLatency = userFramesPerBuffer + fixedDeviceAndSystemLatencies + minimumKnownAdaptionLatencies

This bound holds except where native audio API buffer constraints dictate otherwise.
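Because the formula above mixes a frame count with latencies in seconds, a worked version needs one conversion step. The sketch below assumes userFramesPerBuffer is converted to seconds by dividing by the sample rate before the other terms are added; ExpectedLowestLatencySeconds is a hypothetical name used only for illustration.

```c
#include <assert.h>

/* Hypothetical helper: expected lower bound on actual latency (seconds)
   when the user specifies framesPerBuffer. userFramesPerBuffer is converted
   from frames to seconds before being added to the other latency terms. */
static double ExpectedLowestLatencySeconds(
        unsigned long userFramesPerBuffer,
        double sampleRate,
        double fixedDeviceAndSystemLatencies,
        double minimumKnownAdaptionLatencies )
{
    assert( sampleRate > 0.0 );

    return (double)userFramesPerBuffer / sampleRate
            + fixedDeviceAndSystemLatencies
            + minimumKnownAdaptionLatencies;
}
```

For example, 480 frames at 48 kHz with 5 ms of fixed latency and no known adaption latency gives a bound of about 15 ms.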

We consider variations due to native audio API buffering models next.

Native audio APIs employ a variety of user-space buffering models to communicate data between client program and the host driver and hardware.

Each buffering model implies different buffering parameters and hence a different way for PortAudio to configure latency. The final native audio API buffering latency is a function of the buffering parameters PortAudio selects.

PortAudio makes decisions about native audio API buffering parameters based on formulas and heuristics that take the following inputs:

- The buffering model

- Native audio API imposed constraints on buffer sizes and/or buffering granularities

- Native audio API preferred/default buffer sizes (especially important when the user doesn't specify a callback framesPerBuffer)

- userFramesPerBuffer a.k.a. Pa_OpenStream() framesPerBuffer parameter, when not paFramesPerBufferUnspecified

- targetNativeAudioApiBufferingLatency derived from PaStreamParameters::suggestedLatency (see above)

- Sample rate (requested or nominal actual depending on availability, as discussed above)

In general, inputs to the buffering calculations should be observed in the following priority:

1. Immutable buffering model and native API constraints
2. Using a native buffer size or period that is a multiple of userFramesPerBuffer
3. targetNativeAudioApiBufferingLatency

We review each buffering model below and state general formulas for computing buffer parameters from targetNativeAudioApiBufferingLatency and userFramesPerBuffer.

(Since this is a draft document, it is possible there are bugs in these formulas. Please report discrepancies or potential errors on the mailing list.)

Host APIs: CoreAudio (but see notes later)

The opaque host managed model exposes very little detail about the actual buffer structure. The buffers themselves are host-managed and passed to the user at each callback. In terms of timing, the host managed callback can be considered a kind of implicit double-buffer, although the host may use a different buffering strategy internally.

In the opaque buffer model, the host callback buffer size is the only degree of freedom with which to tune latency. All other latencies are determined by the system.

We assume here a kind of pseudo-double-buffer latency model, where the size of the host buffer defines the host buffering latency based on the assumption that while one buffer fills, the other plays.

actualNativeAudioApiBufferingLatency = hostFramesPerBuffer

This is illustrated as "known buffering latency" in the figure below.

In practice it is possible that hostFramesPerBuffer latency is pre-factored into fixedDeviceAndSystemLatencies. Therefore it is important to understand the native audio API latency model to determine exactly how to structure the formulas below.

When the user requests paFramesPerBufferUnspecified, choose the closest available host buffer size to targetNativeAudioApiBufferingLatency. Consider breaking ties or snapping to native audio API "default" or "preferred" values.
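One way to choose the closest available host buffer size while snapping ties to a preferred value is sketched below. It assumes the permissible sizes have already been enumerated into an array and the target has been converted to frames. ChooseClosestHostBufferSize is a hypothetical helper, and the tie-breaking rule shown is just one reasonable choice.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical helper: from the host buffer sizes the native API permits,
   choose the one closest to targetFrames. On a tie, prefer the native
   "preferred" size if it is one of the tied candidates; otherwise keep
   whichever appears first (the smaller one if the list is ascending). */
static unsigned long ChooseClosestHostBufferSize(
        const unsigned long *allowedSizes, size_t count,
        unsigned long targetFrames, unsigned long preferredSize )
{
    assert( allowedSizes != NULL && count > 0 );

    unsigned long best = allowedSizes[0];
    unsigned long bestDistance = ( best > targetFrames )
            ? best - targetFrames : targetFrames - best;

    for( size_t i = 1; i < count; ++i ){
        unsigned long size = allowedSizes[i];
        unsigned long distance = ( size > targetFrames )
                ? size - targetFrames : targetFrames - size;

        if( distance < bestDistance
                || ( distance == bestDistance && size == preferredSize ) ){
            best = size;
            bestDistance = distance;
        }
    }
    return best;
}
```

With permissible sizes {64, 128, 256, 512, 1024} and a 192-frame target, 128 and 256 are equally close, so the preferred size decides between them.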

When userFramesPerBuffer is specified, the host callback buffer size should ideally be the smallest integer multiple of userFramesPerBuffer that is equal to or greater than targetNativeAudioApiBufferingLatency. This is computed as follows:

targetNativeAudioApiBufferingLatency = suggestedLatency - fixedDeviceAndSystemLatencies - minimumKnownAdaptionLatencies;

hostFramesPerBuffer = conformToNativeAudioApiBufferingModelConstraints( userFramesPerBuffer, targetNativeAudioApiBufferingLatency )

Where fixedDeviceAndSystemLatencies and minimumKnownAdaptionLatencies are defined above.

The implementation of conformToNativeAudioApiBufferingModelConstraints() is ideally expressed as:

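unsigned long conformToNativeAudioApiBufferingModelConstraints( unsigned long userFramesPerBuffer, unsigned long targetNativeAudioApiBufferingLatency ){
    // both arguments are in frames here; adding (userFramesPerBuffer - 1) before dividing by userFramesPerBuffer rounds up to the next multiple
    unsigned long hostFramesPerBuffer = ( (targetNativeAudioApiBufferingLatency + userFramesPerBuffer - 1) / userFramesPerBuffer ) * userFramesPerBuffer;
    // userFramesPerBuffer imposes a lower bound, so never return less than one user buffer (e.g. when the target is zero)
    return ( hostFramesPerBuffer < userFramesPerBuffer ) ? userFramesPerBuffer : hostFramesPerBuffer;
}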

This method adheres to the general principles that: (1) when there is a trade off, honouring userFramesPerBuffer should be given priority over honouring suggestedLatency, and (2) that userFramesPerBuffer is expected to impose a lower bound on latency.

In cases where hostFramesPerBuffer is constrained to incompatible values by the native audio API (e.g. fixed, enforced range, power of two, etc.) additional heuristics may be required to choose the best hostFramesPerBuffer. These heuristics are discussed under "Additional hostFramesPerBuffer selection heuristics" below. However, all other things being equal, hostFramesPerBuffer should be an integer multiple of userFramesPerBuffer.

When hostFramesPerBuffer is not an integer multiple of userFramesPerBuffer, PortAudio may introduce additional buffer adaption latencies that were not subtracted from suggestedLatency when computing targetNativeAudioApiBufferingLatency. These adaption latencies must be added when computing the final actual latency:

actualLatency = actualNativeAudioApiBufferingLatency + fixedDeviceAndSystemLatencies + totalAdaptionLatencies;

Note that, depending on the host API, there may be additional system or device latencies that only crystallise once hostFramesPerBuffer is chosen. These should also be added when computing the actual latency.

Host APIs: JACK, ASIO with some devices

This is a special case of the previous opaque host managed buffer model where the device enforces a fixed host buffer size. We mention this here as a simplified case:

hostFramesPerBuffer = hostDefinedValue;

actualNativeAudioApiBufferingLatency = hostFramesPerBuffer;

actualLatency = actualNativeAudioApiBufferingLatency + fixedDeviceAndSystemLatencies + totalAdaptionLatencies;

As above, depending on the host API, hostFramesPerBuffer may already be factored into fixedDeviceAndSystemLatencies.

Host APIs: ASIO, WDM/WaveRT

The double buffer model operates in the same way as the opaque host managed callback buffers model described earlier.

Host APIs: WMME, WDM/WaveCyclic, WDM/WavePci

N-buffer scatter gather is an extension of double-buffering with more than two buffers. In the scatter-gather case the buffers are separate but logically organised in a ring. In a callback-synchronous ring buffer the buffer is a single contiguous ringbuffer with periodic notifications/callbacks spaced equally around the ring. For the purpose of this section the two structures are equivalent.

The actual buffering latency is given by:

actualNativeAudioApiBufferingLatency = hostFramesPerBuffer * (hostBufferCount - 1)

As indicated in the figure below:

Calculation of buffering parameters is similar to the ring buffer case. We would like hostFramesPerBuffer * (hostBufferCount - 1) to be the smallest value equal to or greater than targetNativeAudioApiBufferingLatency. When userFramesPerBuffer is specified, hostFramesPerBuffer should always be a multiple of userFramesPerBuffer.

We propose methods for calculating buffer parameters for the N-buffer scatter gather model below.

With paFramesPerBufferUnspecified the host API needs to choose both hostFramesPerBuffer and hostBufferCount.

There is no generic formula for deriving both parameters from targetNativeAudioApiBufferingLatency without fixing one or the other. There are two main approaches:

A. Set hostBufferCount to some small integer and divide targetNativeAudioApiBufferingLatency by (hostBufferCount - 1):

hostFramesPerBuffer = targetNativeAudioApiBufferingLatency / (hostBufferCount-1)

Three scatter-gather buffers are usually preferable to two on Windows. It may be desirable to round hostFramesPerBuffer to a multiple of 4, 8 or 16 frames to ensure good alignment for vectorised acceleration. Other platform-specific factors should also be considered where appropriate.
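Approach A, including the optional alignment rounding, can be sketched as below. This sketch assumes the target is already expressed in frames. Rounding up (both the division and the alignment step) is a choice made here so that hostFramesPerBuffer * (hostBufferCount - 1) never falls below the target. HostFramesPerBufferForFixedCount is a hypothetical name.

```c
#include <assert.h>

/* Hypothetical helper for approach A: with hostBufferCount fixed, spread the
   target latency (frames) over hostBufferCount - 1 buffers, rounding up, then
   round up to a multiple of `alignment` frames (e.g. 16) for vectorisation. */
static unsigned long HostFramesPerBufferForFixedCount(
        unsigned long targetFrames, unsigned long hostBufferCount,
        unsigned long alignment )
{
    assert( hostBufferCount >= 2 && alignment > 0 );

    unsigned long divisor = hostBufferCount - 1;
    unsigned long frames = ( targetFrames + divisor - 1 ) / divisor;     /* ceil */
    frames = ( ( frames + alignment - 1 ) / alignment ) * alignment;     /* align up */

    if( frames == 0 )
        frames = alignment;  /* never return an empty buffer */
    return frames;
}
```

For a 4410-frame target with three buffers and 16-frame alignment, this gives 2208-frame buffers and 4416 frames of buffering latency.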

B. Set hostFramesPerBuffer to a fixed value and then compute hostBufferCount.

hostBufferCount = ((targetNativeAudioApiBufferingLatency + hostFramesPerBuffer - 1) / hostFramesPerBuffer) + 1
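Approach B translates directly into C. The sketch below assumes frame units throughout. The minimum of two buffers is an added assumption: without it, a target of zero would produce a single buffer, which cannot double-buffer. HostBufferCountForFixedSize is a hypothetical name.

```c
#include <assert.h>

/* Hypothetical helper for approach B: with hostFramesPerBuffer fixed, choose
   hostBufferCount so that hostFramesPerBuffer * (hostBufferCount - 1) is the
   smallest value >= targetFrames. Clamped to at least 2 buffers (assumption). */
static unsigned long HostBufferCountForFixedSize(
        unsigned long targetFrames, unsigned long hostFramesPerBuffer )
{
    assert( hostFramesPerBuffer > 0 );

    unsigned long count =
            ( ( targetFrames + hostFramesPerBuffer - 1 ) / hostFramesPerBuffer ) + 1;
    return ( count < 2 ) ? 2 : count;
}
```

A 4410-frame target with 441-frame buffers gives 11 buffers, for exactly 4410 frames of buffering latency. One more frame of target pushes the count to 12.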

Another alternative is to ensure that hostFramesPerBuffer is a power of two and adjust both hostFramesPerBuffer and hostBufferCount to best match targetNativeAudioApiBufferingLatency according to some accuracy requirement.

Windows XP KMixer is said to use 10ms buffers. In this case it may be desirable to match this buffer size to ensure callback periodicity.

As this shows, host-specific considerations and heuristics are needed here.

The simplest method here is to use userFramesPerBuffer as the hostFramesPerBuffer, giving:

hostFramesPerBuffer = userFramesPerBuffer

hostBufferCount = ((targetNativeAudioApiBufferingLatency + hostFramesPerBuffer - 1) / hostFramesPerBuffer) + 1

A more flexible formula to consider is:

hostFramesPerBuffer = userFramesPerBuffer * M

hostBufferCount = ((targetNativeAudioApiBufferingLatency + hostFramesPerBuffer - 1) / hostFramesPerBuffer) + 1

Where M is chosen either to conform hostFramesPerBuffer to a reasonable range (say 5-15ms) or to limit hostBufferCount to a reasonable maximum (say 8 buffers).
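The second criterion for M, limiting hostBufferCount to a maximum, can be sketched as a simple search. It assumes frame units. It takes the smallest M that keeps the count at or below maxBufferCount (the text suggests 8), so buffers stay as small as that limit allows. SelectMultipleOfUserBuffer is a hypothetical name, and the two-buffer minimum is an assumption. The loop always terminates: once userFramesPerBuffer * M reaches the target, the count drops to 2.

```c
#include <assert.h>

/* Hypothetical sketch: hostFramesPerBuffer = userFramesPerBuffer * M, with M
   the smallest multiplier that keeps hostBufferCount <= maxBufferCount.
   Host buffers stay integer multiples of userFramesPerBuffer. */
static void SelectMultipleOfUserBuffer(
        unsigned long targetFrames, unsigned long userFramesPerBuffer,
        unsigned long maxBufferCount,
        unsigned long *hostFramesPerBuffer, unsigned long *hostBufferCount )
{
    assert( userFramesPerBuffer > 0 && maxBufferCount >= 2 );

    for( unsigned long m = 1; ; ++m ){
        unsigned long frames = userFramesPerBuffer * m;
        unsigned long count = ( ( targetFrames + frames - 1 ) / frames ) + 1;
        if( count < 2 )
            count = 2;

        if( count <= maxBufferCount ){
            *hostFramesPerBuffer = frames;
            *hostBufferCount = count;
            return;
        }
    }
}
```

With a 4410-frame target, 64-frame user buffers and at most 8 host buffers, this selects M = 10: eight 640-frame buffers and 4480 frames of buffering latency.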

Finally, in all cases the actual latency is given by:

totalAdaptionLatencies = 0; // should be zero since we're ensuring the host buffers are a multiple of userFramesPerBuffer

actualNativeAudioApiBufferingLatency = hostFramesPerBuffer * (hostBufferCount - 1)

actualLatency = actualNativeAudioApiBufferingLatency + fixedDeviceAndSystemLatencies + totalAdaptionLatencies;

Host APIs: DirectSound, WASAPI

The callback-asynchronous ringbuffer is a large contiguous ring buffer. The host API polls the ringbuffer periodically to determine if samples should be consumed or filled. The callback-asynchronous ringbuffer is different from a scatter-gather or synchronous ringbuffer in that there is no requirement for the callbacks to occur at integer divisions of the buffer, or for the buffer to be a multiple of userFramesPerBuffer. The host API has to determine the total length of the ring buffer and the polling period.

Given hostFramesPerBuffer as the total ringbuffer length, the ringbuffer latency can be defined as:

actualNativeAudioApiBufferingLatency = hostFramesPerBuffer - userFramesPerBuffer - pollingTimerJitterMargin

Where pollingTimerJitterMargin is intended to mask the jitter associated with the timer polling the ring buffer (on the order of a few milliseconds.)

With paFramesPerBufferUnspecified hostFramesPerBuffer can be computed similarly to the specified userFramesPerBuffer below.

pollingPeriod = ? // host API specific, see below

hostFramesPerBuffer = pollingPeriod + max( pollingPeriod + pollingTimerJitter, targetNativeAudioApiBufferingLatency )

pollingPeriod will necessarily be a host-API-specific value based on power consumption requirements and timer granularities. For example, on Windows, high-resolution polling requires calling timeBeginPeriod(1), which alters the scheduling behavior of the entire system; therefore it is not desirable to select a high-frequency polling period when targetNativeAudioApiBufferingLatency is high.

hostFramesPerBuffer = userFramesPerBuffer + max( userFramesPerBuffer + pollingTimerJitter, targetNativeAudioApiBufferingLatency )

pollingPeriod = userFramesPerBuffer
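Both ringbuffer formulas share the same shape, so one sketch can cover them. The sketch assumes frame units throughout. In the user-specified case pollingPeriod is userFramesPerBuffer; with paFramesPerBufferUnspecified the caller supplies a host-API-specific polling period. RingBufferSettings, ComputeRingBufferSettings and RingBufferLatencyFrames are hypothetical names. The latency function applies the actualNativeAudioApiBufferingLatency formula given earlier, with pollingPeriod standing in for userFramesPerBuffer.

```c
#include <assert.h>

/* Hypothetical result type for the callback-asynchronous ringbuffer model. */
typedef struct {
    unsigned long hostFramesPerBuffer;  /* total ring buffer length (frames) */
    unsigned long pollingPeriod;        /* frames between polls */
} RingBufferSettings;

/* hostFramesPerBuffer = pollingPeriod + max( pollingPeriod + jitter, target ) */
static RingBufferSettings ComputeRingBufferSettings(
        unsigned long pollingPeriod,
        unsigned long pollingTimerJitter,
        unsigned long targetFrames )
{
    assert( pollingPeriod > 0 );

    unsigned long minimumSpan = pollingPeriod + pollingTimerJitter;
    RingBufferSettings s;
    s.pollingPeriod = pollingPeriod;
    s.hostFramesPerBuffer = pollingPeriod
            + ( targetFrames > minimumSpan ? targetFrames : minimumSpan );
    return s;
}

/* latency = hostFramesPerBuffer - pollingPeriod - pollingTimerJitterMargin */
static unsigned long RingBufferLatencyFrames(
        RingBufferSettings s, unsigned long pollingTimerJitterMargin )
{
    assert( s.hostFramesPerBuffer >= s.pollingPeriod + pollingTimerJitterMargin );
    return s.hostFramesPerBuffer - s.pollingPeriod - pollingTimerJitterMargin;
}
```

With a 441-frame polling period, 88 frames of jitter and a 4410-frame target, the ring is 4851 frames long. Its buffering latency under these formulas is 4322 frames, because the jitter margin is deducted after sizing.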

As discussed above, there are a number of factors to consider when faced with freedom to choose certain buffering parameters. Some of them are enumerated below.

The priority should be to support a specified userFramesPerBuffer value without additional adaption latency, and secondarily to honour suggestedLatency.

- Native API "default" or "preferred" sizes should be favoured (especially with paFramesPerBufferUnspecified). Depending on the host API it may even be desirable to ignore suggestedLatency in order to honour a "preferred" size. For example, on Mac OS X, increasing the buffer size above the default may have no tangible benefit (this has not been verified).

- It may be desirable for buffer sizes to be powers of two (this was previously assumed in the WMME version for example, however no benefit is currently observed).

- It may be desirable for buffer sizes to meet multi-word alignments for SSE or other vector optimisations. Given a free choice of buffer size this heuristic should be honoured over selecting buffers of arbitrary integer size.

- For larger buffer sizes, cache line and memory page alignment may be desirable

- When host/user buffer size adaption is required, it is desirable to maximise available callback compute time and minimise callback jitter.

- Final actual latency should in general equal or exceed suggestedLatency.

- In n-buffer situations it is usually better to have more than 2 buffers as this keeps more samples between the callback and the DAC. 3 should definitely be preferred unless the only option for meeting suggestedLatency is switching to two buffers.

- In n-buffer situations it may be best not to have too many buffers, to avoid context-switch and buffer relay overhead

- In polling ring-buffer situations the above two points mean that it is usually better to poll a ringbuffer more than twice per total buffer duration.

- Polling frequency should be moderated to avoid over-taxing the system, especially when suggestedLatency is high.

- Where possible the host API should seek to mask polling scheduling jitter by adding additional latency. This is usually only possible in polling callback-aperiodic ringbuffer scenarios.

- Prefer making buffers or polling intervals substantially larger than the system scheduling jitter

- Prefer making system scheduler granularity larger to avoid system load (e.g. timeBeginPeriod(1) should only be used with low-latency streams).

- When native API buffering constraints dictate that callback buffer size adaption is needed, but there are still choices to be made between different host buffer sizes, the best choice should: minimize user callback jitter, maximise available CPU time for each user callback, minimise buffer adaption latency. (When you have an algorithm for that let me know)

Please suggest other considerations or heuristics for discussion on the mailing list.

Currently PortAudio treats all latencies not reported by the native audio API as "unknown." It is possible that PortAudio could model these latencies. A model might include:

- nominal hardware FIFO size of 64 samples (a number mentioned in WDM documentation)

- nominal DAC FIR filter latency 24 samples (a value I read somewhere, check this)

- system mixer components e.g. Windows KMixer latency (On WinXP stated as 30ms, need to validate see [wiki:Win32AudioBackgroundInfo])

- driver buffering (we could guess?)

It may be better to include a conservative estimate of these latencies than to omit them entirely, especially if we can validate the model across a range of hardware.
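A model of this kind could be as simple as summing the nominal figures listed above. The sketch below uses exactly those values, which the list itself marks as unverified. Driver buffering is left out because no estimate is available. EstimateUnknownLatencySeconds and the NOMINAL_* constants are hypothetical names, and nothing here reflects current PortAudio behaviour.

```c
#include <assert.h>

/* Hypothetical, unvalidated model of latencies not reported by the native API.
   Values are the nominal figures listed above and still need checking. */
#define NOMINAL_HARDWARE_FIFO_FRAMES    64     /* mentioned in WDM documentation */
#define NOMINAL_DAC_FILTER_FRAMES       24     /* unverified */
#define NOMINAL_KMIXER_LATENCY_SECONDS  0.030  /* WinXP figure, unverified */

static double EstimateUnknownLatencySeconds( double sampleRate, int includeSystemMixer )
{
    assert( sampleRate > 0.0 );

    /* frame-based components converted to seconds */
    double seconds = ( NOMINAL_HARDWARE_FIFO_FRAMES + NOMINAL_DAC_FILTER_FRAMES ) / sampleRate;

    if( includeSystemMixer )
        seconds += NOMINAL_KMIXER_LATENCY_SECONDS;
    return seconds;
}
```

At 44.1 kHz the frame-based terms contribute about 2 ms. When a KMixer-style system mixer is in the path, it dominates the estimate at roughly 32 ms in total.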

| Buffering model: | ? |
| Buffering latency: | ? |
| Buffer size constraints/restrictions: | ? |
| Buffer size constraints expressed as: | ? |
| Device and system latencies: | N/A |
| Exposes preferred or default latency: | ? |
| Unknown latencies: | Hardware buffering and A/D latencies |
| Provides actual device sample rate: | ? |
| PA callback invoked by: | ? |
| Native full duplex: | ? |
| May use host/user buffer size adaption: | ? |
| May use full-duplex synchronisation buffering: | ? |
| May introduce SRC latency: | ? |

FEED ME

| Buffering model: | double buffer |
| Buffering latency: | ASIOCreateBuffers(bufferSize) |
| Buffer size constraints/restrictions: | device dependent (fixed, range, power of two or multiplier granularity) |
| Buffer size constraints expressed as: | AsioDriverInfo{ bufferMinSize, bufferMaxSize, bufferPreferredSize, bufferGranularity } |
| Device and system latencies: | ASIOGetLatencies() returns latencies in frames once the host buffer size has been set; it can therefore be used for reporting actual latency, but not while interpreting suggested latency. |
| Exposes preferred or default latency: | YES (preferred) |
| Unknown latencies: | NONE (actually, I don't think A/D hardware latencies are modelled) |
| Provides actual device sample rate: | ???? |
| PA callback invoked by: | callback from host |
| Native full duplex: | ALWAYS |
| May use host/user buffer size adaption: | YES (when no permissible host buffer size is an integer multiple of userFramesPerBuffer) |
| May use full-duplex synchronisation buffering: | NO |
| May introduce SRC latency: | NO |

PA/ASIO selects a host buffer size based on the following requirements (in priority order):

1. The host buffer size must be permissible according to the ASIO driverInfo buffer size constraints (min, max, granularity or powers-of-two).

2. If the user specifies a non-zero framesPerBuffer parameter (userFramesPerBuffer here), the host buffer size should be a multiple of it (subject to the constraints in (1) above).

   [NOTE: Where no permissible host buffer size is a multiple of userFramesPerBuffer, we choose a value as if userFramesPerBuffer were zero (i.e. we ignore it). This strategy is open for review; perhaps there are still "more optimal" buffer sizes related to userFramesPerBuffer that we could use.]

3. The host buffer size should be greater than or equal to targetBufferingLatencyFrames, subject to (1) and (2) above. Where it is not possible to select a host buffer size equal to or greater than targetBufferingLatencyFrames, the largest buffer size conforming to (1) and (2) should be chosen.

See the following functions in pa_asio.cpp for details: SelectHostBufferSize, SelectHostBufferSizeForSpecifiedUserFramesPerBuffer, SelectHostBufferSizeForUnspecifiedUserFramesPerBuffer
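The three rules above can be sketched in C. This is not the pa_asio.cpp code, and SelectAsioHostBufferSize and IsPermissibleAsioSize are hypothetical names. The interpretation of granularity is an assumption based on the ASIO SDK's description of ASIOGetBufferSize(): -1 means powers of two, a positive value is a step from the minimum size, and 0 means a single fixed size. It should be checked against the real implementation. The outer loop implements the NOTE: if no permissible size is a multiple of userFramesPerBuffer, the search is repeated as if userFramesPerBuffer were zero.

```c
#include <assert.h>

/* Rule (1): is `size` allowed by the driver's min/max/granularity? */
static int IsPermissibleAsioSize( long size, long minSize, long maxSize, long granularity )
{
    if( size < minSize || size > maxSize )
        return 0;
    if( granularity == -1 )
        return size > 0 && ( size & ( size - 1 ) ) == 0;  /* power of two */
    if( granularity <= 0 )
        return size == minSize;                           /* fixed size */
    return ( size - minSize ) % granularity == 0;         /* stepped range */
}

static long SelectAsioHostBufferSize( long minSize, long maxSize, long granularity,
        long userFramesPerBuffer, long targetBufferingLatencyFrames )
{
    assert( minSize > 0 && minSize <= maxSize );

    /* First pass honours userFramesPerBuffer (rule 2); second pass ignores it. */
    for( int honourUser = ( userFramesPerBuffer > 0 ); honourUser >= 0; --honourUser ){
        long largest = 0;
        for( long size = minSize; size <= maxSize; ++size ){
            if( !IsPermissibleAsioSize( size, minSize, maxSize, granularity ) )
                continue;
            if( honourUser && size % userFramesPerBuffer != 0 )
                continue;
            largest = size;
            if( size >= targetBufferingLatencyFrames )
                return size;  /* rule (3): smallest size reaching the target */
        }
        if( largest != 0 )
            return largest;   /* target unreachable: largest conforming size */
    }
    return minSize;  /* fallback if the reported constraints admit no size at all */
}
```

For a power-of-two driver (64 to 2048 frames), a 300-frame target selects 512. A userFramesPerBuffer of 192 also yields 512, because no power of two is a multiple of 192.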

| Buffering model: | opaque host buffer |
| Buffering latency: | kAudioDevicePropertyBufferFrameSize |
| Buffer size constraints/restrictions: | must be within a range (min, max) |
| Buffer size constraints expressed as: | queryable device parameter kAudioDevicePropertyBufferFrameSizeRange |
| Device and system latencies: | kAudioDevicePropertySafetyOffset, kAudioDevicePropertyLatency, kAudioStreamPropertyLatency |
| Exposes preferred or default latency: | partially: kAudioDevicePropertyBufferFrameSize holds the current/default latency when the device is created |
| Unknown latencies: | unknown |
| Provides actual device sample rate: | YES (unconfirmed) |
| PA callback invoked by: | callback from host |
| Native full duplex: | SOMETIMES (some drivers support full duplex, some don't) |
| May use host/user buffer size adaption: | YES (to be confirmed) |
| May use full-duplex synchronisation buffering: | YES (Stephane says we should be avoiding this with aggregate devices from 10.5 onwards) |
| May introduce SRC latency: | YES |

For default high latency we use the kAudioDevicePropertyBufferFrameSize default that the device comes up with.

For default low latency we use a small constant that seems to work well on many systems: min(64, deviceMinimum).

By default CoreAudio allows the callback to execute for the whole callback period, in which case it has the same timing characteristics as a double-buffer. It also provides a parameter kAudioDevicePropertyIOProcStreamUsage that reduces latency by requiring that the callback complete sooner than one buffer period. PortAudio doesn't currently use this feature.

CoreAudio may not implement the latency model very well at the moment. This needs to be reviewed. See tickets #175, #182 and #95. See also the callback time info tickets #144 and #149.

| Buffering model: | callback asynchronous ringbuffer |
| Buffering latency: | (bufferSize - userFramesPerBuffer) or (bufferSize - pollingPeriod) |
| Buffer size constraints/restrictions: | DSBSIZE_MIN (4) to DSBSIZE_MAX (0xFFFFFFFF) |
| Buffer size constraints expressed as: | Compile time constants DSBSIZE_MIN and DSBSIZE_MAX |
| Device and system latencies: | N/A |
| Exposes preferred or default latency: | NO |
| Unknown latencies: | Everything below DirectSound API (see below) |
| Provides actual device sample rate: | NO |
| PA callback invoked by: | High priority thread with timer polling (waitable timer object or mm timer signalled Event) |
| Native full duplex: | Compile time option (when PAWIN_USE_DIRECTSOUNDFULLDUPLEXCREATE is defined) |
| May use host/user buffer size adaption: | NO |
| May use full-duplex synchronisation buffering: | NO (input buffer acts as adaption buffer) |
| May introduce SRC latency: | NO |

PA/DirectSound computes buffer sizes using the callback-asynchronous ringbuffer calculations described above. The timer polling period is currently decided as the greater of 1/4 of the user buffer period or 1/16 of the target latency. 1/4 of the user buffer period is intended to guarantee 75% CPU availability. It may be that we need to harmonise timer polling heuristics between implementations.
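The polling-period rule just described reduces to a single max(). The sketch below restates that prose rule and is not copied from pa_win_ds.c. It assumes both periods are in seconds, and DirectSoundPollingPeriodSeconds is a hypothetical name.

```c
#include <assert.h>

/* Sketch of the stated rule: poll at the greater of 1/4 of the user buffer
   period and 1/16 of the target latency. Inputs and result in seconds. */
static double DirectSoundPollingPeriodSeconds( double userBufferPeriod, double targetLatency )
{
    assert( userBufferPeriod >= 0.0 && targetLatency >= 0.0 );

    double quarterUserBuffer = userBufferPeriod / 4.0;  /* leaves ~75% CPU per callback */
    double sixteenthTarget = targetLatency / 16.0;      /* avoids over-polling at high latency */
    return ( quarterUserBuffer > sixteenthTarget ) ? quarterUserBuffer : sixteenthTarget;
}
```

For example, a 10 ms user buffer with a 160 ms target polls every 10 ms because the 1/16 term dominates. This keeps high-latency streams from polling more often than they need to.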

See CalculateBufferSettings in pa_win_ds.c for details.

Full duplex streams created without PAWIN_USE_DIRECTSOUNDFULLDUPLEXCREATE have unsynchronised input/output buffers and hence indeterminate loopback latency.

On Windows XP DirectSound can apparently bypass KMixer by talking to a hardware pin if one is available. Otherwise it incurs KMixer latency (30ms?). On later Windows versions other, smaller system mixer latencies are involved. See our notes here: [wiki:Win32AudioBackgroundInfo]

| Buffering model: | Opaque host managed |
| Buffering latency: | bufferSize |
| Buffer size constraints/restrictions: | fixed buffer size |
| Buffer size constraints expressed as: | fixed??? |
| Device and system latencies: | Each node can query for input and output latency (see below) |
| Exposes preferred or default latency: | N/A |
| Unknown latencies: | i/o latency is dependent on correct user calibration |
| Provides actual device sample rate: | fixed sample rate??? |
| PA callback invoked by: | callback |
| Native full duplex: | YES |
| May use host/user buffer size adaption: | YES |
| May use full-duplex synchronisation buffering: | NO |
| May introduce SRC latency: | NO |

The latency at a JACK port is a function of the delay introduced by all nodes connected to that port plus the -I or -O user-configured hardware latencies as illustrated below:

-I and -O are configured by the user after being measured using a physical loopback cable and jack_iodelay(1); see the man page for details: http://trac.jackaudio.org/browser/jack2/trunk/jackmp/man/jack_iodelay.0

FEED ME

| Buffering model: | N-buffer scatter-gather |
| Buffering latency: | (bufferSize * (bufferCount - 1)) |
| Buffer size constraints/restrictions: | PA limits buffers to 32k since there is a comment indicating some drivers crashed with larger buffers |
| Buffer size constraints expressed as: | PA #defines |
| Device and system latencies: | N/A |
| Exposes preferred or default latency: | NO |
| Unknown latencies: | Everything below WMME API |
| Provides actual device sample rate: | NO |
| PA callback invoked by: | High priority thread blocking on Event |
| Native full duplex: | NO |
| May use host/user buffer size adaption: | NO |
| May use full-duplex synchronisation buffering: | NO |
| May introduce SRC latency: | NO |

PA/WMME computes buffer sizes and counts using the following procedure. It begins by assuming a host buffer size of userFramesPerBuffer, or a default granularity of 16 frames when userFramesPerBuffer is unspecified. It then computes a buffer count based on suggested latency using the formulas above, enforcing a minimum buffer count of 2 or 3 depending on whether the buffer ring is for full- or half-duplex use. The algorithm then checks whether the buffer count falls within an acceptable range; if not, it coalesces multiple user buffers into a single host buffer and recomputes the buffer count.

When the userFramesPerBuffer exceeds the 32k host buffer size upper limit we try to choose a host buffer size that is a large integer factor of userFramesPerBuffer so as to evenly distribute computation (and timing jitter) across buffers.
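One possible reading of this rule is sketched below. It is not the ComputeHostBufferSizeGivenHardUpperLimit code from pa_win_wmme.c. The sketch splits the user buffer into the fewest equal host buffers that fit under the limit, and prefers an exact integer factor. If none is found within a small search window (an arbitrary assumption), it falls back to near-equal buffers, which would then need adaption. HostBufferSizeGivenHardUpperLimit is a hypothetical name, and the limit is passed in, so it makes no claim about whether 32k means frames or bytes.

```c
#include <assert.h>

/* Hypothetical sketch: choose a host buffer size <= hostLimitFrames that
   splits userFramesPerBuffer into as few equal pieces as possible. */
static unsigned long HostBufferSizeGivenHardUpperLimit(
        unsigned long userFramesPerBuffer, unsigned long hostLimitFrames )
{
    assert( userFramesPerBuffer > 0 && hostLimitFrames > 0 );

    if( userFramesPerBuffer <= hostLimitFrames )
        return userFramesPerBuffer;

    /* fewest buffers that could fit under the limit: ceil(user / limit) */
    unsigned long minCount = ( userFramesPerBuffer + hostLimitFrames - 1 ) / hostLimitFrames;

    /* look for an exact factor; the 4x search window is an assumption */
    for( unsigned long count = minCount; count <= 4 * minCount; ++count ){
        if( userFramesPerBuffer % count == 0 )
            return userFramesPerBuffer / count;  /* <= limit since count >= minCount */
    }

    /* no exact factor nearby: near-equal split, still <= limit */
    return ( userFramesPerBuffer + minCount - 1 ) / minCount;
}
```

With a 32768-frame limit, a 100000-frame user buffer becomes four 25000-frame host buffers. A prime size such as 65537 has no usable factor, so it falls back to roughly equal 21846-frame buffers.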

See the following functions in pa_win_wmme.c for details: CalculateBufferSettings, SelectHostBufferSizeFramesAndHostBufferCount, ComputeHostBufferSizeGivenHardUpperLimit, ComputeHostBufferCountForFixedBufferSizeFrames

A survey of workable WMME buffer sizes was conducted on the mailing list using the patest_wmme_find_best_latency_params.c test. The results are graphed in the pdf attached to ticket #185. These results have been used to tune the default latency #defines in pa_win_wmme.c

Full-duplex streams have unsynchronised input/output buffers and hence indeterminate loopback latency. The latency is bounded by the size of one host buffer. PA usually sizes input and output buffers identically, and allocates one extra input buffer to accommodate any possible phase relationship between input and output buffering.

| Buffering model: | callback asynchronous ringbuffer |
| Buffering latency: | |
| Buffer size constraints/restrictions: | ??? |
| Buffer size constraints expressed as: | ??? |
| Device and system latencies: | ??? |
| Exposes preferred or default latency: | ??? |
| Unknown latencies: | ??? |
| Provides actual device sample rate: | ??? |
| PA callback invoked by: | High priority thread with timer polling ??? or Event notification? |
| Native full duplex: | ??? |
| May use host/user buffer size adaption: | ??? |
| May use full-duplex synchronisation buffering: | ??? |
| May introduce SRC latency: | ??? |

Dmitry has indicated that PA/WASAPI uses the following formula:

static PaUint32 PaUtil_GetFramesPerHostBuffer(PaUint32 userFramesPerBuffer,
        PaTime suggestedLatency, double sampleRate, PaUint32 TimerJitterMs)
{
    PaUint32 frames = userFramesPerBuffer + max( userFramesPerBuffer,
            (PaUint32)(suggestedLatency * sampleRate) );
    frames += (PaUint32)((sampleRate * 0.001) * TimerJitterMs);
    return frames;
}

Mailing list email July 14 2010

| Buffering model: | N-buffer scatter-gather |
| Buffering latency: | (bufferSize * (bufferCount - 1)) |
| Buffer size constraints/restrictions: | ??? |
| Buffer size constraints expressed as: | ??? |
| Device and system latencies: | ??? |
| Exposes preferred or default latency: | ??? |
| Unknown latencies: | ??? |
| Provides actual device sample rate: | ??? |
| PA callback invoked by: | High priority thread with timer polling ??? or Event notification? |
| Native full duplex: | ??? |
| May use host/user buffer size adaption: | ??? |
| May use full-duplex synchronisation buffering: | ??? |
| May introduce SRC latency: | ??? |

| Buffering model: | double buffer |
| Buffering latency: | buffer size / 2 |
| Buffer size constraints/restrictions: | ??? |
| Buffer size constraints expressed as: | driver can change/reject buffer sizes? |
| Device and system latencies: | ??? |
| Exposes preferred or default latency: | ??? |
| Unknown latencies: | ??? |
| Provides actual device sample rate: | ??? |
| PA callback invoked by: | High priority thread with timer polling or Event notification |
| Native full duplex: | ??? |
| May use host/user buffer size adaption: | ??? |
| May use full-duplex synchronisation buffering: | ??? |
| May introduce SRC latency: | ??? |