
Expanding the Cluster


Deployment Guidelines: Expanding the Cluster

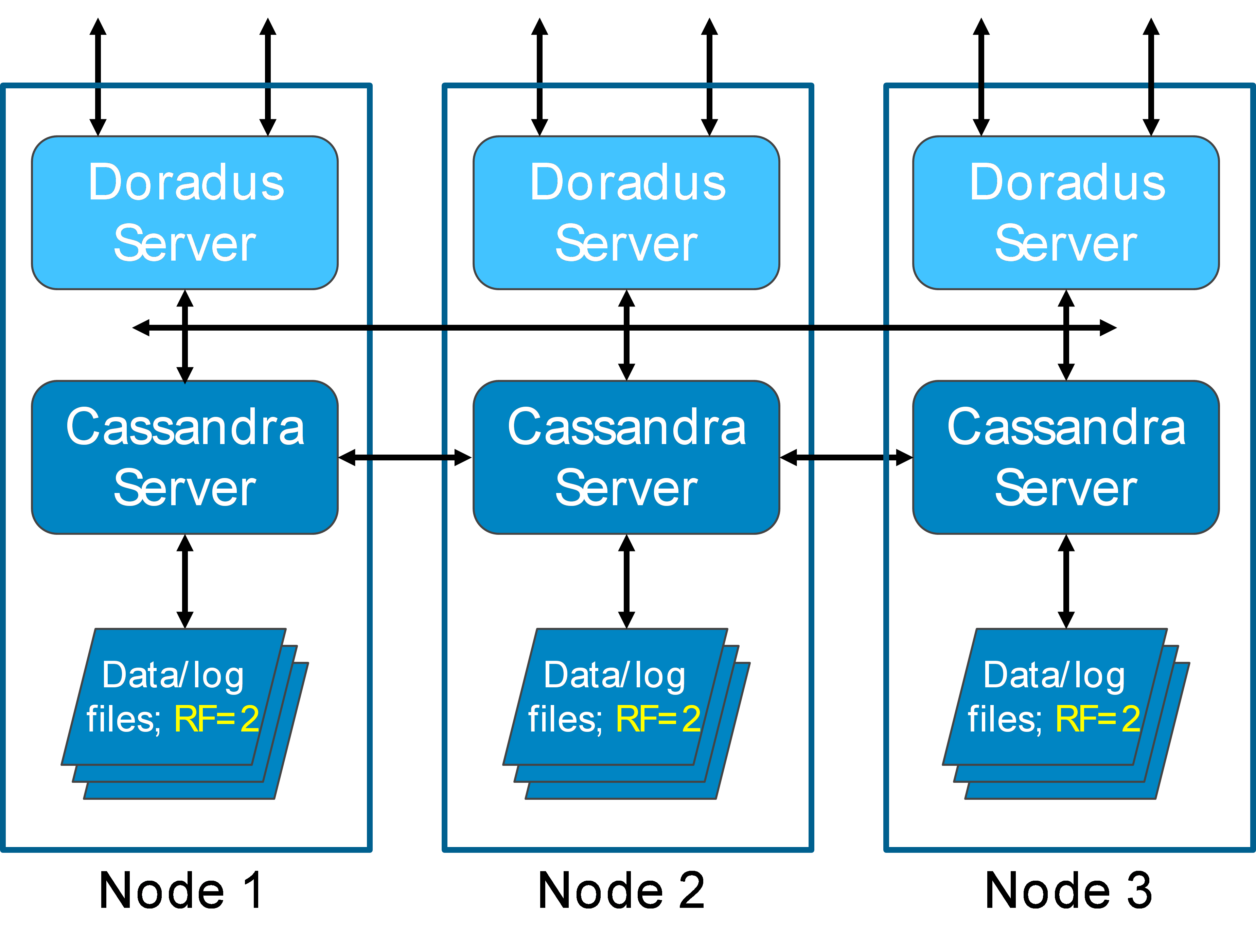

Additional nodes can be added to a cluster to increase processing and storage capacity. For a 3-node cluster, the recommended replication factor (RF) is 2. Cassandra distributes the data evenly across the cluster using a random partitioning scheme. An example 3-node cluster is illustrated below:

Figure 5 – Expanded Cluster: 3 Nodes, RF=2

In this configuration, every record is stored on a primary node according to its key, and RF=2 causes the record to be replicated to one other node. Hence, each node holds roughly two-thirds of the database's total records. Full database services remain available if any single process or node fails. Each Doradus instance is configured to distribute requests among all Cassandra instances.
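The two-thirds figure follows from RF divided by the node count (2/3). The sketch below checks it by simulation. It is only an illustration: the `replicas` and `share_per_node` helpers are hypothetical, and the placement rule (hash the key to a primary node, then copy to the next nodes in order) is a simplified assumption rather than Cassandra's actual token-ring logic.

```python
import hashlib
from collections import Counter


def replicas(key: str, nodes: int, rf: int) -> list[int]:
    """Return the node indices holding `key`: a primary plus the next rf-1 nodes.

    Assumption: the primary is chosen by hashing the key modulo the node count.
    """
    digest = hashlib.md5(key.encode("utf-8")).digest()
    primary = int.from_bytes(digest, "big") % nodes
    return [(primary + i) % nodes for i in range(rf)]


def share_per_node(nodes: int, rf: int, records: int = 30_000) -> list[float]:
    """Return the fraction of all records stored on each node."""
    held = Counter()
    for n in range(records):
        held.update(replicas(f"record-{n}", nodes, rf))
    return [held[node] / records for node in range(nodes)]


shares = share_per_node(nodes=3, rf=2)
# Each value should be close to 2/3; the shares sum to RF.
print([round(s, 2) for s in shares])
```

Because every record is written to exactly RF nodes, the shares always sum to RF, so an even distribution gives each node RF/N of the data.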

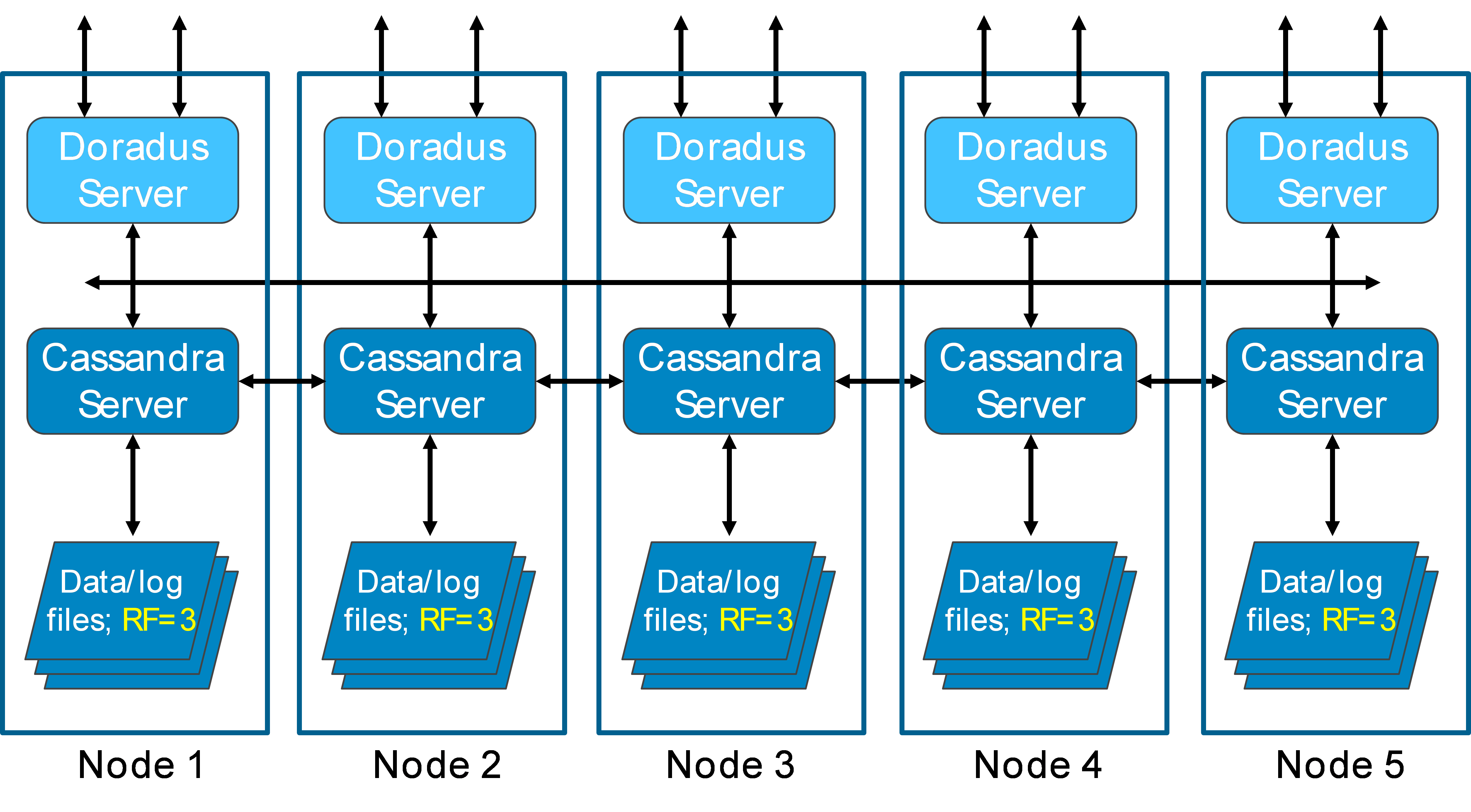

Scaling further, the following is an example of a 5-node configuration with RF=3:

Figure 6 – Expanded Cluster: 5 Nodes, RF=3

In this example, RF=3 means that every record is stored on a primary node and then replicated to two other nodes, so each node holds roughly three-fifths of all data. Up to two nodes can fail and every record still has at least one live replica, so all database services remain available. Compared with the 3-node cluster, this configuration provides greater capacity and fault tolerance.
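The failure-tolerance claims for both configurations can be checked by brute force. The sketch below is a minimal illustration under two assumptions: replicas sit on consecutive nodes (a simplified placement rule), and a record counts as available while at least one replica is on a live node. The function names are hypothetical.

```python
from itertools import combinations


def replica_set(primary: int, nodes: int, rf: int) -> set[int]:
    """Nodes holding records whose primary is `primary` (simplified placement)."""
    return {(primary + i) % nodes for i in range(rf)}


def max_tolerated_failures(nodes: int, rf: int) -> int:
    """Largest f such that ANY f failed nodes leave every record a live replica."""
    sets = [replica_set(p, nodes, rf) for p in range(nodes)]
    tolerated = 0
    for f in range(1, nodes + 1):
        for failed in combinations(range(nodes), f):
            down = set(failed)
            # A record is lost if all of its replicas are on failed nodes.
            if any(s <= down for s in sets):
                return tolerated
        tolerated = f
    return tolerated


print(max_tolerated_failures(nodes=3, rf=2))  # 1
print(max_tolerated_failures(nodes=5, rf=3))  # 2
```

In both cases the result is RF minus 1: a record becomes unavailable only when all of its RF replicas are down at once.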
