
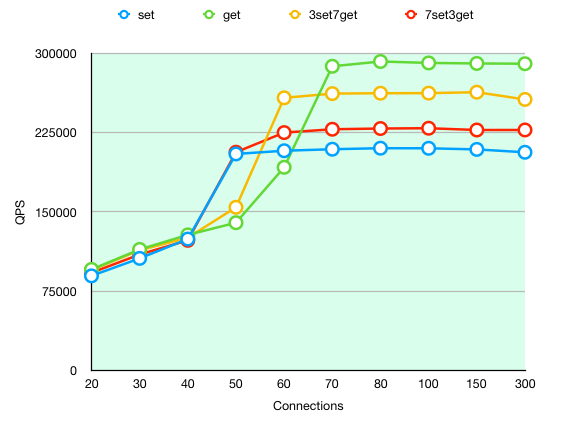

3.2.x Performance

whoiami edited this page Aug 2, 2019


Disk: 2 TB NVMe

CPU: Intel(R) Xeon(R) CPU E5-2630 v4 @ 2.20GHz (40 logical CPUs)

Memory: 256 GB

Network card: 10 Gbit/s

OS: CentOS Linux release 7.4

Data size: 64 bytes

Number of keys: 1,000,000
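The command names in the second table (PING_INLINE, LRANGE_100, MSET (10 keys), and so on) follow redis-benchmark's test naming, so a similar run could plausibly be reproduced with that tool. The sketch below only assembles a redis-benchmark command line in Python. The host, port 9221 (assumed to be Pika's default), client count, and request count are all assumptions, because this page does not state them. Mapping "number of keys" to `-r`, which draws random keys from a keyspace of that size, is also an assumption.

```python
import shlex
import subprocess


def build_benchmark_cmd(host="127.0.0.1", port=9221, data_size=64,
                        keyspace=1_000_000, clients=50, requests=10_000_000,
                        tests="set,get"):
    """Return a redis-benchmark argument list (parameters are hypothetical)."""
    return [
        "redis-benchmark",
        "-h", host,
        "-p", str(port),        # assumed Pika port
        "-c", str(clients),     # parallel connections (assumed)
        "-n", str(requests),    # total requests (assumed)
        "-d", str(data_size),   # value size in bytes ("Data size: 64 bytes")
        "-r", str(keyspace),    # random keys in [0, keyspace) (assumed mapping)
        "-t", tests,            # run only the listed tests
        "-q",                   # quiet mode: print just the QPS per test
    ]


def run_benchmark(cmd):
    """Run the benchmark against a live server and return its output."""
    return subprocess.run(cmd, check=True, capture_output=True, text=True).stdout


if __name__ == "__main__":
    print(shlex.join(build_benchmark_cmd()))
```

Calling `run_benchmark(build_benchmark_cmd())` requires `redis-benchmark` on the PATH and a reachable Pika instance. Passing `tests="ping,incr,lpush"` and similar values selects other rows of the table.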

| Test | QPS |
|---|---|
| SET | 124347 |
| GET | 283849 |

| Command | WithBinlog&Slave QPS | NoBinlog&Slave QPS |
|---|---|---|
| PING_INLINE | 262329 | 272479 |
| PING_BULK | 262467 | 270562 |
| SET | 124953 | 211327 |
| GET | 284900 | 292568 |
| INCR | 120004 | 213766 |
| MSET (10 keys) | 64863 | 111578 |
| MGET (10 keys) | 224416 | 223513 |
| MGET (100 keys) | 29935 | 29550 |
| MGET (200 keys) | 15128 | 14912 |
| LPUSH | 117799 | 205380 |
| RPUSH | 117481 | 205212 |
| LPOP | 112120 | 200320 |
| RPOP | 119932 | 207986 |
| LRANGE_10 (first 10 elements) | 277932 | 284414 |
| LRANGE_100 (first 100 elements) | 165118 | 164355 |
| LRANGE_300 (first 300 elements) | 54907 | 55096 |
| LRANGE_450 (first 450 elements) | 36656 | 36630 |
| LRANGE_600 (first 600 elements) | 27540 | 27510 |
| SADD | 126230 | 208768 |
| SPOP | 103135 | 166555 |
| HSET | 122443 | 214362 |
| HINCRBY | 114757 | 208942 |
| HINCRBYFLOAT | 114377 | 208550 |
| HGET | 284900 | 290951 |
| HMSET (10 fields) | 58937 | 111445 |
| HMGET (10 fields) | 203624 | 205592 |
| HGETALL | 166861 | 160797 |
| ZADD | 106780 | 189178 |
| ZREM | 112866 | 201938 |
| PFADD | 4708 | 4692 |
| PFCOUNT | 27412 | 27345 |
| PFMERGE | 478 | 494 |
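The cost of enabling the binlog and a slave can be read directly from the table above by dividing each WithBinlog&Slave figure by its NoBinlog&Slave counterpart. The sketch below does this for a handful of write and read rows. The numbers are copied from the table, and the row selection is illustrative.

```python
# QPS pairs copied from the table above: (WithBinlog&Slave, NoBinlog&Slave)
RESULTS = {
    "SET": (124953, 211327),
    "INCR": (120004, 213766),
    "MSET (10 keys)": (64863, 111578),
    "LPUSH": (117799, 205380),
    "HSET": (122443, 214362),
    "ZADD": (106780, 189178),
    "GET": (284900, 292568),
    "HGET": (284900, 290951),
}


def retained_fraction(with_binlog: int, no_binlog: int) -> float:
    """Share of the no-binlog throughput kept when binlog + slave are enabled."""
    return with_binlog / no_binlog


if __name__ == "__main__":
    for cmd, (with_b, without_b) in RESULTS.items():
        print(f"{cmd:<16} {retained_fraction(with_b, without_b):6.1%}")
```

By this arithmetic, the write commands listed keep roughly 56% to 59% of their no-binlog throughput. GET and HGET stay above 97%, which is consistent with reads not touching the binlog.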

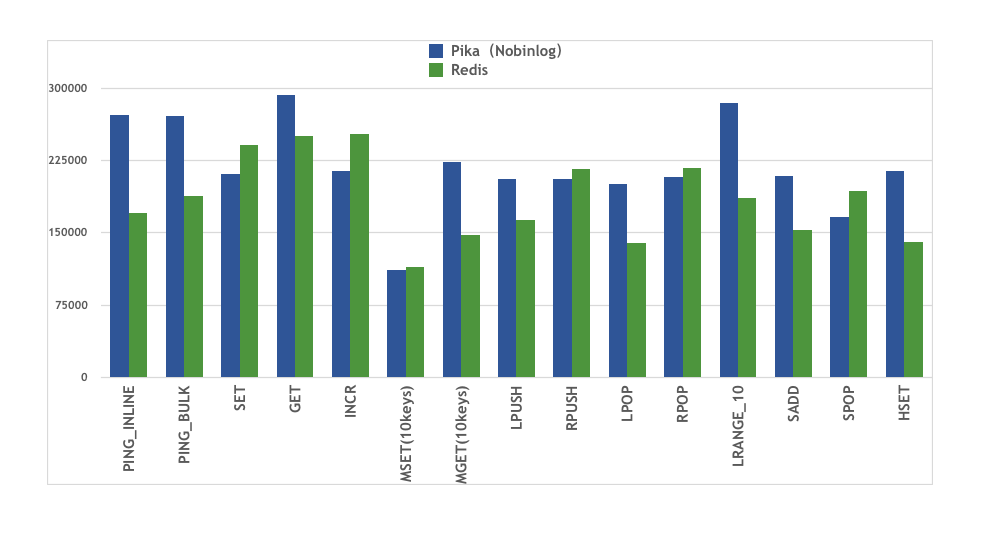

With the Redis AOF setting `appendfsync everysec`, Redis essentially writes data to memory and fsyncs the AOF to disk only about once per second. Pika, by contrast, uses RocksDB, which writes its WAL to the SSD on every write batch. The comparison therefore pits multiple threads writing sequentially to an SSD against a single thread writing to memory.
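The difference described above comes down to how often data is forced onto the device. The toy model below is plain Python, not Redis or RocksDB code. It contrasts an fsync after every batch with a policy that fsyncs at most once per simulated second, which is the idea behind Redis's `appendfsync everysec`. Treating Pika's per-batch WAL write as an fsync is the strictest reading and is an assumption here: whether RocksDB actually syncs each batch depends on its `WriteOptions::sync` setting. The class and function names are hypothetical.

```python
import os
import tempfile


class ToyLog:
    """Append-only log that counts how often it forces data to disk.

    policy="always":   fsync after every batch (strict per-batch durability).
    policy="everysec": fsync only when >= 1000 ms have passed since the last
                       fsync, the idea behind Redis's `appendfsync everysec`.
    The clock is injected (integer milliseconds) so runs are deterministic.
    """

    def __init__(self, path, policy, clock_ms):
        self.f = open(path, "ab")
        self.policy = policy
        self.clock_ms = clock_ms
        self.last_sync_ms = clock_ms()
        self.fsyncs = 0

    def append(self, batch: bytes) -> None:
        self.f.write(batch)  # buffered inside Python
        self.f.flush()       # hand the bytes to the OS page cache
        now = self.clock_ms()
        if self.policy == "always" or now - self.last_sync_ms >= 1000:
            os.fsync(self.f.fileno())  # force the data onto the device
            self.last_sync_ms = now
            self.fsyncs += 1

    def close(self) -> None:
        self.f.close()


def simulate(policy, batches=200, ms_per_batch=10):
    """Write `batches` 64-byte batches, advancing a fake clock each time."""
    now = [0]
    with tempfile.TemporaryDirectory() as d:
        log = ToyLog(os.path.join(d, "toy.log"), policy, lambda: now[0])
        for _ in range(batches):
            now[0] += ms_per_batch
            log.append(b"x" * 64)
        log.close()
    return log.fsyncs


if __name__ == "__main__":
    print("always:  ", simulate("always"))
    print("everysec:", simulate("everysec"))
```

With 200 batches spread over two simulated seconds, the per-batch policy issues 200 fsyncs and the once-per-second policy issues 2. This is the gap in device round-trips that the comparison above is pointing at.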

Setting aside whether that comparison is fair, here is how the two perform:

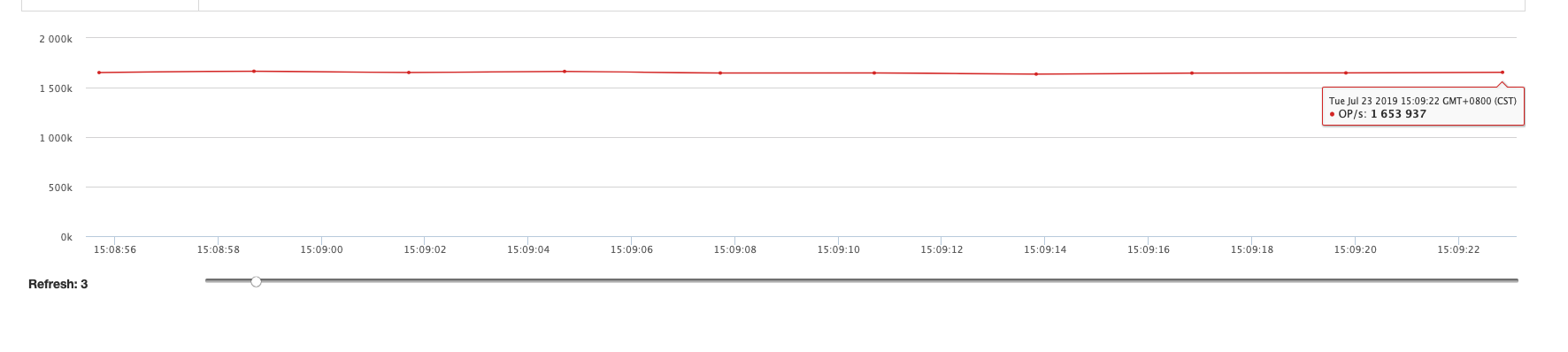

WithBinlog&Slave

4 machines × 2 Pika instances (masters)

4 machines × 2 Pika instances (slaves)

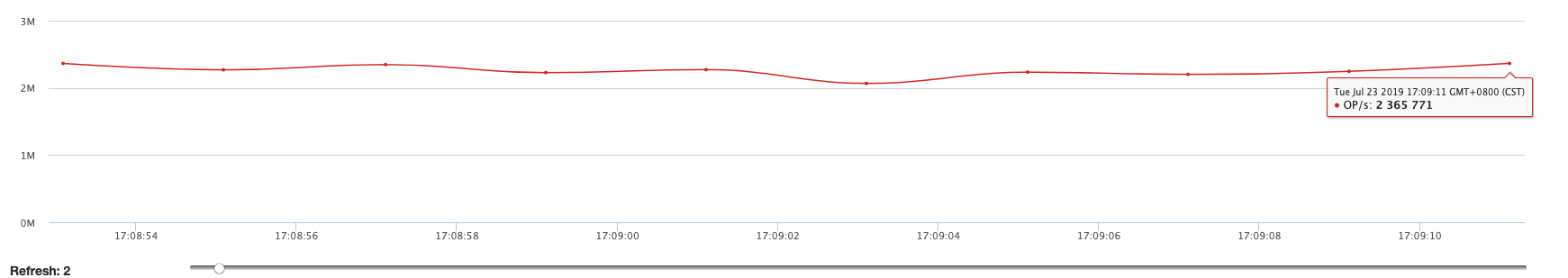

NoBinlog&Slave

4 machines × 2 Pika instances (masters)

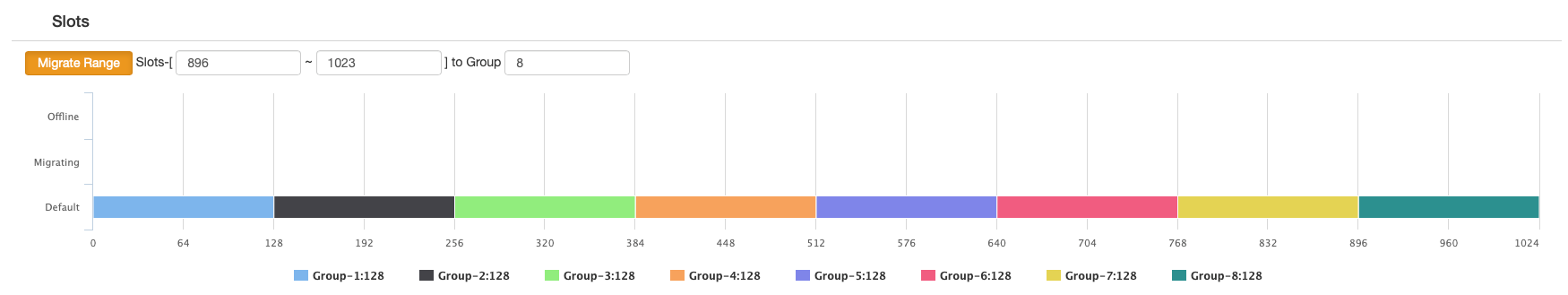

Slot distribution:

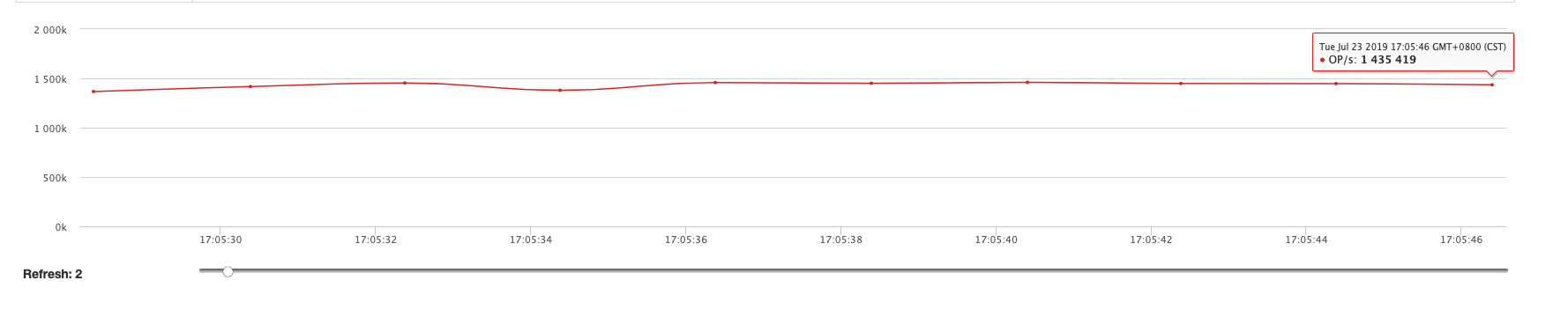

| Command | QPS |
|---|---|
| Set | 1,400,000+ |

| Command | QPS |
|---|---|
| Set | 1,600,000+ |

| Command | QPS |
|---|---|
| Get | 2,300,000+ |

With or without the binlog, Get QPS is approximately the same.
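Both cluster layouts above run 4 machines × 2 Pika master instances, so each aggregate figure can be turned into an average per-master rate. The sketch below assumes the slot distribution spreads load evenly across the 8 masters. It treats the "1,400,000+" style figures as lower bounds and uses them as exact numbers only for the division.

```python
MASTERS = 4 * 2  # 4 machines x 2 Pika master instances, as listed above

# Aggregate cluster QPS from the tables above (lower bounds, e.g. "1,400,000+")
CLUSTER_QPS = {
    "Set (1,400,000+)": 1_400_000,
    "Set (1,600,000+)": 1_600_000,
    "Get (2,300,000+)": 2_300_000,
}


def per_master(aggregate_qps: int, masters: int = MASTERS) -> float:
    """Average QPS per master, assuming slots spread load evenly."""
    return aggregate_qps / masters


if __name__ == "__main__":
    for label, qps in CLUSTER_QPS.items():
        print(f"{label}: ~{per_master(qps):,.0f} QPS per master instance")
```

The results are 175,000, 200,000 and 287,500 QPS per master. The Get figure is close to the single-instance GET results in the redis-benchmark table (284,900 with binlog and 292,568 without), which fits the observation that the binlog barely affects reads.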
