[rplidar users] Potential errors with drivers + 360 lidar support #198

Comments

|

First off, I recommend the Melodic branch. It has a bunch more features and is better structured. It will work in Kinetic. Second, it would be helpful to provide me with a bag file I can use to reproduce. My steps to reproduce:

on the bag file, to remove the transformations you recorded from your SLAM/localization runs, so I was just left with the raw data to provide my own.

So make sure you see this error when following those steps on your machines. Third, how did you install Ceres? I've heard of similar issues that were the result of a dirty Ceres install that comes with Google Cartographer. If you've installed Google Cartographer, you will need to remove that Ceres install and install it again via rosdep. Ceres that ships with Cartographer is only suitable for cartographer. This thread #167 may be helpful. |
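As a rough illustration of the bag conditioning described above (dropping the recorded SLAM/localization transform so that SLAM Toolbox can publish its own), the filtering logic might look like the sketch below. The exact command used in this thread is not shown, so the frame names `map` and `odom`, the `Transform` type, and the helper `strip_slam_tf` are all assumptions made for illustration only.

```python
from typing import List, NamedTuple


class Transform(NamedTuple):
    """Minimal stand-in for one TF edge (hypothetical type, not a ROS message)."""
    parent: str
    child: str


def strip_slam_tf(transforms: List[Transform],
                  parent: str = "map",
                  child: str = "odom") -> List[Transform]:
    """Drop the parent->child edge that a previous SLAM/localization run published.

    The frame names are assumptions; use whatever your recorded stack published.
    """
    return [t for t in transforms
            if not (t.parent == parent and t.child == child)]


recorded = [
    Transform("map", "odom"),             # published by the old SLAM run
    Transform("odom", "base_footprint"),  # wheel odometry, must stay
    Transform("base_footprint", "laser"), # static mount, must stay
]
cleaned = strip_slam_tf(recorded)
print(cleaned)
```

Only the edge from the previous SLAM run is removed; odometry and static transforms stay in the bag so the raw data is still usable.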

|

Thanks for the quick response.

|

|

Update: Nothing wrong with the laser frame. I did some further testing. All with the same rosbag and the steps you described above. I tested both the sync/ async nodes with the default configs (adapted laser frame and range).

The error occurred in any case. |

|

I have Please post a bag file and I can take a look. Include your tf and tf static and laser scan. Specify how your laser is mounted on the robot. Also specify how you've installed this. |

|

This is the robot: This is my launch file for the lidar: This is the complete rosbag (previously cut to fit on github): |

|

What is Do you see any logging / errors from SLAM Toolbox or anything else when running? Your TF tree also looks weird, why is your laser oriented backwards in frame to the robot? Your wheel encoder TF also updates insanely slow, looks like only 2-3 hz (non-scientifically from looking). I don't know if that's related, but that's far too slow for quality autonomous navigation. You should be getting it at 50hz with the kobuki if memory serves. I'm getting past where you had issues in your first example images, no issue. Also made it past your second issue example, no problem. I see a good loop closure here. I got through the loop closure of the main corridor and a bit more and finally had it happen around 610 seconds into the bag. And I get that pretty deterministically from the 3 times I ran in a row.

Where do you see a laser_frame param? That's ancient. You shouldn't even know about that - are you using Melodic devel like I suggested? You shouldn't need to set any laser frame. Do you reset odometry or otherwise do anything to affect TF / laser scans in your stack? IMU off nuts? Anything? I've mapped spaces 10x larger than this on a regular basis and never had an issue. This is unique and the other users that have reported similarly (if you check the closed tickets) are all results of messed up URDFs or inverted lasers or something wacky. I also don't have any robots with 360 lasers so perhaps there's something there to look at, there were a couple of PRs to enable 360 lidars but maybe something got messed up. My recommendation would be to first critically analyze your URDF, frames, odom, and transformations since that seems to fix all the users so far that have reported similar things. My second recommendation would be to use laser_filters to transition your 360 lidar into a 270 lidar and feed that scan into SLAM Toolbox as a test. If no issues, then it's something 360 lidar related. If it still happens, then it's robot related. I ran 2 much larger bags when I went out for dinner that I had laying around and I confirmed that mapping a much larger space over the course of several hours is still working fine as a sanity check. Both of these bags were collected with different robots and different lasers from different manufacturers and exceeded 2 hours in length each. |
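To make the 360-to-270 test above concrete, here is a minimal sketch of what cropping a scan to an angular window does to the data. It is not the laser_filters implementation; the function `crop_scan`, the one-degree resolution, and the symmetric +/-135 degree window are assumptions chosen for illustration.

```python
import math
from typing import List, Tuple


def crop_scan(ranges: List[float],
              angle_min: float,
              angle_increment: float,
              lower: float,
              upper: float,
              eps: float = 1e-9) -> List[Tuple[float, float]]:
    """Keep only the (angle, range) pairs whose beam angle lies in [lower, upper].

    eps absorbs floating-point error at the window edges.
    """
    kept = []
    for i, r in enumerate(ranges):
        angle = angle_min + i * angle_increment
        if lower - eps <= angle <= upper + eps:
            kept.append((angle, r))
    return kept


# Hypothetical 360 degree scan: 360 beams, one per degree, starting at -180 degrees.
full_ranges = [1.0] * 360
cropped = crop_scan(full_ranges,
                    angle_min=-math.pi,
                    angle_increment=2.0 * math.pi / 360.0,
                    lower=math.radians(-135.0),
                    upper=math.radians(135.0))
print(len(cropped))
```

If the map rotation disappears with the cropped scan, that points toward the 360 degree handling; if it persists, the robot's frames and odometry are the more likely suspects.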

|

I am using vanilla If I use vanilla if I change @saschroeder and @SteveMacenski can you confirm this? |

|

That is really, really interesting. Is that area that you're in also made of glass where the error happens? I could see how this could happen if it gets some scans that look flipped from the reflection from the glass and then when it looks to loop close, it matches those warped scans and when Ceres runs, it has some internal conflicts. A different loss function would help with that fact. I had not included one prior because I felt that each "measurement" was supposed to be "legit" and so we shouldn't remove some outliers. However, with glass or mirrors, you're right in thinking that would totally break my assumptions that they're all "legit". I would be interested to hear if that fixes your issues in other bag files as well. If so, that seems to be a completely valid solution to the problem. I'd appreciate it if you submitted a PR to mention that behavior in the README.md file. That way future folks can learn from our experiences that in environments with glass/mirrors we should consider using a loss function. Thanks for the debugging @tik0. I also believe in trying to give folks a really excellent out of box experience. I would be OK also with that PR changing to the HuberLoss as the new default. It may be useful as well to expose the loss function weight (currently hardcoded to 0.7 which I tested to generally be good for my testing while developing) so that you can tune this as well since that capability seems necessary for your requirements. I can confirm that the loss function fixes it for me too. I stopped around 1000 seconds because it seemed fine and I needed my CPU back. PS: I'm reasonably happy with the performance of the RP lidar in this environment / dataset. That wasn't expected. |
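For readers unfamiliar with why a robust loss helps with outliers such as reflections from glass, the sketch below evaluates the Huber function in the form Ceres documents it (applied to the squared residual `s` with scale `a`). Treating the 0.7 mentioned above as that scale is an assumption, and the function name `huber_rho` is made up for this example.

```python
import math


def huber_rho(s: float, a: float) -> float:
    """Huber loss on a squared residual s with scale a.

    Quadratic (unchanged) for small residuals, linear in the residual
    norm sqrt(s) once s exceeds a^2, so outliers are down-weighted.
    """
    if s <= a * a:
        return s
    return 2.0 * a * math.sqrt(s) - a * a


scale = 0.7  # assumption: the hardcoded value mentioned in the comment above

inlier_cost = huber_rho(0.25, scale)    # squared residual below a^2 = 0.49
outlier_cost = huber_rho(100.0, scale)  # a badly mismatched constraint
print(inlier_cost, outlier_cost)
```

An outlier with squared residual 100 contributes about 13.5 instead of 100, which is why a single bad loop closure has much less pull on the optimized graph.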

|

I'd like to add some more information to keep this topic alive. I managed to get very stable results on Maybe @SteveMacenski can tell a story about the parameters in my empiric observations? |

I think we've talked about this one already so I'll skip

I'm really surprised you had to change that. I don't have a good answer off the cuff on that. Are you sure after changing all the other things that this actually made a beneficial impact?

Like I mentioned above, I really didn't expect this to need to be used. I work with mostly professional long range lidars so this is not much of an issue. As you mention, there could be some benefits to adding this for mirrors/glass/cheap(?) lidars. Can you please submit a PR exposing this parameter (the value to assign to the loss function)? This makes sense to me as something to optimize if you have graph closure problems from outliers. Higher here will mean more rigor applied to each point about whether it really fits the graph.

From the description This lets you have a large window of memory to match against, but also what this does (and what I think you see happening) is that this also affects the minimum chain size for what is considered a loop closure. Ex if this was 5, on scan 6 you could "loop close" against scan 1. If this is 100, you cannot loop close from scans 1-99 on scan 100 since they're all chained together. Making this larger will effectively disable smaller loop closures which could be beneficial to reduce the number of instances of this problem you see. |
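The chain-size behavior described above can be sketched as a simple index rule. This is a simplified model built only from the two examples in the comment, not Karto's actual candidate search, and `loop_closure_candidates` is a hypothetical helper.

```python
from typing import List


def loop_closure_candidates(current_scan: int, chain_size: int) -> List[int]:
    """Return the earlier scan indices that are far enough back to be
    loop-closure candidates, assuming scans are numbered from 1 and a scan
    must be at least chain_size scans behind the current one.
    """
    return [i for i in range(1, current_scan)
            if current_scan - i >= chain_size]


print(loop_closure_candidates(6, 5))      # small chain: scan 1 is eligible
print(loop_closure_candidates(100, 100))  # large chain: nothing eligible yet
```

Under this model a larger value pushes the first possible loop closure further out, which matches the observation that raising it suppresses small, short-range closures.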

|

I tested the parameters proposed by @tik0 on my laptop, which worked well, as long as I played the bags with normal speed, even though the CPU load was temporarily higher than the number of real cores. I already did some testing based on @tik0's first comment and found that the HuberLoss function does have an interesting effect on the mapping behavior:

I uploaded some gifs to illustrate this:

Seeing all these 180 degree turns and considering that I can reproduce this in the simulation, I don't think this is about an unreliable sensor/ reflections, but rather a systematic error. I applied a laser filter to test with a range of 270, 230 and 180 degrees. For 270 I get the same initial rotation, but not with 230 and 180 degrees. I stopped the bag (2020-03-27-...) after several minutes as the map quality got really bad. I used the gazebo sim for some quick testing. I set the lidar frame's z-axis rotation to 0, 90, 135 and 180 degrees compared to base_link and tried to reproduce the loop closures with 180° rotation. I will do some more tests with the lidar frame facing forward (possibly on the robot) to verify that it solves the problem. |

|

I've written a small script to flip the tf by 180° and laserscans by -180° inside the bags. Therefore, I am doing virtually nothing to the data. However, after this, everything worked perfectly even with the standard parameters. I can also push the CPU to even higher loads after the flip and slam_toolbox does its job. There might be a real issue in slam_toolbox if the laser frame is not co-aligned with the base_link frame. This shouldn't actually be an issue if all transforms are defined correctly. But, in fact, it is. |
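The claim that flipping the tf by 180° and the scan by -180° changes nothing geometrically can be checked with a few lines. This sketch ignores the translation of the laser mount and uses made-up values; `scan_point_in_base` is a hypothetical helper, not the script mentioned above.

```python
import math
from typing import Tuple


def scan_point_in_base(beam_angle: float, rng: float,
                       laser_yaw: float) -> Tuple[float, float]:
    """Project one beam into the base frame, given the laser's yaw
    relative to the base (mount translation ignored for simplicity)."""
    a = laser_yaw + beam_angle
    return (rng * math.cos(a), rng * math.sin(a))


beam_angle, rng = 0.3, 2.0
backward_mount_yaw = math.pi  # laser frame rotated 180 degrees vs. base_link

original = scan_point_in_base(beam_angle, rng, backward_mount_yaw)
# Flip the tf by +180 degrees and the scan angles by -180 degrees.
flipped = scan_point_in_base(beam_angle - math.pi, rng,
                             backward_mount_yaw + math.pi)
print(original, flipped)
```

Both calls give the same point in the base frame up to floating-point error, so any difference in mapping behavior after the flip has to come from how the laser frame orientation is used downstream, not from the data.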

I take your bet and raise you a laser frame yaw parameterization https://github.com/SteveMacenski/slam_toolbox/blob/eloquent-devel/src/laser_utils.cpp#L95-L127 |

|

I printed all variables in the parametrization and they look ok in all cases. However, I've found another minor issue regarding the detection, if the scanner is 360°. I don't know if this holds for all 360° lidars, but for the rplidar A2M8 from the bags the following code changes should be considered: Therefore, the condition in the parameterization does always fail because of Furthermore, if the scanner is then correctly detected as a 360° scanner, this condition shows faulty behavior because of the later calculation of the number of scans. The residual should always be @SteveMacenski as the scan matcher always seems to work, I assume that maybe the orientation of the lidar is not respected when ceres does the loop closure. Because faulty behavior only occurs in these cases. @SteveMacenski any ideas on that? |

Please submit a PR and I'll merge that. Looks good to me. That was a user submitted issue with a RPLidar and apparently it didn't totally work out.

I'm fairly certain it does, you can test yourself with the magazino bags here: https://google-cartographer-ros.readthedocs.io/en/latest/data.html#id78 their robot has 2 laser scanners at 90 degree angles from the base link and it builds fine. I used these bags in developing the (still incomplete) multi-robot synchronous distributed mapping features (so... damn... close... just need someone to help me trouble shoot some optimizer errors). |

|

I ended up turning the lidar on my robot as this was the fastest way to fix it. As described by @tik0 everything works fine now. I think the overall performance has improved, too. Out of curiosity I tested the hallway_return.bag from the cartographer docs with scan_front/ scan_rear each and the mapping does look strange to me. Though the map itself is built nicely you can see in rviz that the laser scans are misaligned to the map and I see the same characteristic map rotation, that I described in my previous comments, shortly after the robot turns back in the hallway. To me this is a strong indicator that there might be more issues. I guess the bag is just too short or the map too simple for more issues. |

|

Hi, I renamed the ticket to reflect that (mostly because that original name was nondescript). This is an interesting topic, and maybe there is some issue. @saschroeder @tik0 do either of you see where that laser utils for handling the angle may be wrong? Or tracing back into Karto-land where that may not be properly utilized? I'd love to help fix this, but without dropping everything else to try to debug this, I'm not sure I can. If we can at least identify an area that might be wrong, that's something I can sit down and attack.

Can you qualify that a little? (or quantify, though I think probably not :-) )

I'd also like a description of what the "same" is that you see, particularly pertaining to the behaviors you see in this that you also saw in your setup. We might be able to get at something here. Keep in mind that that bag has TF built into it. That offset I think is because you didn't use the rosbag filter script I showed above to remove the existing TF odom->map transforms. I've seen that as well, but as a result of not conditioning the bag for "raw" data without SLAM or localization TF. |

|

I think this is the same issue I was having in: |

|

So we are using the RPLidar S1 on some of our robots. And for some reason it provides the laser scan rotated by 180 degrees. |

Mhm... that also makes me suspect that maybe the laser scans are backwards (though logic would say flipping both 180 should be identical). There are standards about how the laser scan messages should be formulated (e.g. sweep direction). If flipping it works, maybe that relates? The annoying thing is that this is only reported on RP-Lidars and no-one else. Their ROS driver is really bad. It sounds like this may be the result of multiple issues in the drivers, not just the frame. I'm not sure what I can do here until someone here with a sensor finds what's wrong with either the driver or this package regarding it, or someone with another non-RP-lidar or its derivatives 360 sensor verify it happens as well. This isn't something I can debug without a robot equipped with this sensor in front of me. I'd be more than happy to help whoever is willing to work on it any way I can including explaining things, looking at datasets and seeing if I can find the issue, etc. @tik0 was pretty on point in figuring out the other issues, maybe they're interested? |

|

I will dig in the RP lidar S1 part, will try to fix the frame issue and see if that sorts it. |

|

If you need justification to upstream that fix REP-103 has your back https://www.ros.org/reps/rep-0103.html RP-lidar should be in compliance with it. |

|

I also have an RPLIDAR. I also have the cord facing the back of the robot. I am also seeing this same behavior.

Unfortunately I am somewhat of a novice at this stuff along with this being a hobby project so I don't have the time or skills to dig into the RPLIDAR driver myself. I don't hold out a lot of hope at the moment that Slamtec will fix their driver. The last responses I see from the maintainer to an issue on that repo is from 2018. |

|

Hello, we solved this issue by modifying the rplidar ros package. We made a fork of it, modified how the laser scan is read, and exposed the change via a parameter that can be set in the launch file. For it to do the 'flipping' you need to set the parameter this is how our launch file looks: Copy the repository to your work space, make a |

|

I tried the patch recommended by @joekeo and I think it changed things for me, but didn't fix them. Now, instead of rotating, it just shifts. It always happens though after 10 to 15 minutes of running. Unfortunately I don't have a bag file for you, but I will work on that. What I do have is an image. It is obvious in the image that it is offset in one direction significantly. This was harder to see before when the map would rotate instead of only shift. I may just try rotating the scanner on the robot too, to eliminate needing to perform code tricks to deal with this. I appreciate any input you may have for how I can debug this further, and I understand that I haven't given you enough information to actually diagnose this yet. I wanted to share my findings before I forgot though. Update: It still rotates also. My issue might not even be related to this? Update: What happens at each of these points (pictured above) is that the transform from map to odom suddenly becomes all zeros. So the "offset" is whatever the accumulated odometry drift has been since the start, which is why it seems somewhat "random" between runs and is sometimes highly rotated and other times just offset. Update: It is 100% repeatable and consistent with the bag file. |
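The last update above (the map to odom transform collapsing to all zeros) explains the jump: the pose shown in the map frame is the composition of map->odom with odom->base, so an identity map->odom leaves only the raw, drifting odometry. A minimal 2D sketch with made-up numbers follows; `compose` is a generic SE(2) helper, not slam_toolbox code.

```python
import math
from typing import Tuple

Pose2D = Tuple[float, float, float]  # (x, y, yaw)


def compose(a: Pose2D, b: Pose2D) -> Pose2D:
    """Chain two 2D rigid transforms: apply b in the frame defined by a."""
    ax, ay, ath = a
    bx, by, bth = b
    return (ax + math.cos(ath) * bx - math.sin(ath) * by,
            ay + math.sin(ath) * bx + math.cos(ath) * by,
            ath + bth)


odom_to_base = (5.0, 1.0, 0.3)   # raw odometry, including accumulated drift
map_to_odom = (0.4, -0.2, 0.1)   # hypothetical SLAM correction for that drift

corrected_pose = compose(map_to_odom, odom_to_base)
# If map->odom suddenly becomes identity, the correction is lost:
collapsed_pose = compose((0.0, 0.0, 0.0), odom_to_base)
print(corrected_pose, collapsed_pose)
```

The size of the jump equals whatever correction SLAM had accumulated up to that point, which is consistent with it looking "random" from run to run.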

|

Update with bag file, config, and video showing what happens and when. Here is the bag file: Here is the configuration file used: The RPLIDAR A3 is mounted backwards. This happens with or without using the RPLIDAR driver code that flips the lidar image 180 degrees, but this bag file is using that code, hence the transform for the LIDAR appears facing forward. |

|

1.5.4 was incremented a month ago so yeah, it's pretty new. Overall, this issue is only being reported by people with RP-lidars (or potentially 360 2D lidars, but so far only RP-lidar users have commented on this thread). I have now run this package for hundreds of hours across dozens (if not low hundreds) of facilities of various shapes and sizes using typical 2D laser scanners (sick, hokuyo, & similar) without seeing an issue, so there's so far not much I can do since I cannot reproduce it. I'd love to merge in a change to fix what you're seeing, but that requires someone to do the leg work to identify the issue and potentially resolve it. But even just telling what the specific issue is could be something I may be able to fix and have a user test. That identity transform is interesting. Are you seeing errors like: https://github.com/SteveMacenski/slam_toolbox/blob/noetic-devel/slam_toolbox/src/slam_toolbox_common.cpp#L358? That's the only place an identity transform would be set at runtime after the first iteration. I looked through the If you can deterministically cause this to happen, awesome. I'd print or set break points in |

|

@chrisl8 I am using the RPLIDAR A2M8 and rplidar_ros on this commit. I also had issues with a faulty calculation of the residual scan, which I have explained here in detail. However, PR #257 does not show this effect on my scanner. |

Here are my summarized findings for future RPLIDAR users who may find themselves here

Map catastrophic rotation due to RPLIDAR mounting axis

Sometimes the map rotates itself 180 degrees underneath you. I have had this happen even in Localization mode: I currently have a ROS Answers post open on this issue in case it has anything to do with Slam Toolbox: A similar or possibly the same problem is noted under Issue #281 This appears to be caused by the RPLIDAR being mounted "backwards", that is, with the cord toward the back of the robot.

TL;DR: Ensure the "front" (cord end) of your RPLIDAR is oriented toward the FRONT of your robot. Nobody quite knows why, but it fixes this. NOTE: As of September 2020 there are some alternate solutions if you are using the ROS2 driver provided by allenh1 as of this PR.

Angle Increment Calculation

You may see this error as soon as you start up Slam Toolbox: As of September 2020 this issue is fixed by this PR.

Sweep Direction

The sweep direction of the RPLIDAR was called into question a few times. I used this code to hack the output into a curve starting at the first point in the scan distance output array: and the output is in a counter-clockwise direction as ROS expects: It may not be immediately obvious, but the code actually lays down the data into the Clearly the Based on some .bag files I downloaded this seems to be the same data order that the Hokuyo uses, with the

The sweep direction from the RPLidar driver is fine.

If anybody finds themselves here and needing help with RPLIDAR and Slam Toolbox, feel free to ping me or contact me directly and I will share anything further I've found, or personal help on hacking things up to where I am at. For me, with my RPLIDAR driver edits, Slam Toolbox works very well. |
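The sweep-direction check described above can be approximated without any ROS tooling. The original code is not shown in this thread, so the sketch below is a substitute technique: it converts a scan to Cartesian points and uses the sign of the cross products between consecutive points to decide whether the data is ordered counter-clockwise. The function names and the test scan are hypothetical.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float]


def scan_to_points(ranges: List[float], angle_min: float,
                   angle_increment: float) -> List[Point]:
    """Convert polar scan readings to (x, y) points in the laser frame."""
    return [(r * math.cos(angle_min + i * angle_increment),
             r * math.sin(angle_min + i * angle_increment))
            for i, r in enumerate(ranges)]


def sweep_is_counter_clockwise(points: List[Point]) -> bool:
    """Sum the 2D cross products of consecutive points about the origin.

    A positive total means the array order turns counter-clockwise
    when viewed from above (z up).
    """
    total = sum(x0 * y1 - x1 * y0
                for (x0, y0), (x1, y1) in zip(points, points[1:]))
    return total > 0.0


ranges = [1.0] * 8
ccw = sweep_is_counter_clockwise(scan_to_points(ranges, -math.pi, math.pi / 4))
cw = sweep_is_counter_clockwise(scan_to_points(ranges, math.pi, -math.pi / 4))
print(ccw, cw)
```

Running a check like this on one recorded scan is a quick way to rule the sweep direction in or out before digging into frames.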

|

I don’t think the cable forward is 100% necessary, but if you don’t, you must correct the wrong axes on the rplidar driver since they haven’t patched it themselves. Both the axes and the swapping the readings with the python script above would have a similar output. |

* Fix incompatibilities with slam_toolbox: - Fix angle compensate mode to publish angle compensated values - Fix angle_increment calculation - Add optional flip_x_axis option to deal with issue discussed here: SteveMacenski/slam_toolbox#198. Flip x-axis can be used when laser is mounted with motor behind it as rotated TF laser frame doesn't seem to work with slam_toolbox. * Fix whitespace

…ap 180° if the lidar is mounted backwards Set the edges (constraints) based on the robot base frame (base_footprint) instead of sensor frame (laser).


|

As an update, @WLwind has found something interesting in #325. I need to track through it, but it looks like the reason the rplidar had issues being "backwards" with its origin frame (which by the way still should be fixed by RPlidar to be REP105 compatible!) is that OpenKarto assumed it to be aligned with the base frame. I need to verify that, since I thought I'd checked that and the I'm a little uncertain then why on some of my testing bag files with the robot having lidars at 45 degree angles to the forward that this never came up. They're relatively short bags (15 minutes) so maybe the optimizer is just good at correcting for it? Maybe that is why changing the loss function had an outsized impact at hiding the issue? @chrisl8 can you test this PR? From your last comment it sounded like you wanted to have the lidar mounted the opposing way. This might be a good way to see if this fixes that issue for you once and for all with map flipping! |
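To illustrate why it could matter which frame the graph edges are expressed in, the sketch below computes the same 1 m forward motion as a relative constraint in the base frame and in a laser frame mounted 180° backwards. This is a simplified assumption about the mechanism, not OpenKarto's code; `compose` and `relative` are generic SE(2) helpers and the mount has no translation offset.

```python
import math
from typing import Tuple

Pose2D = Tuple[float, float, float]  # (x, y, yaw)


def compose(a: Pose2D, b: Pose2D) -> Pose2D:
    """Apply transform b in the frame defined by pose a."""
    ax, ay, ath = a
    bx, by, bth = b
    return (ax + math.cos(ath) * bx - math.sin(ath) * by,
            ay + math.sin(ath) * bx + math.cos(ath) * by,
            ath + bth)


def relative(a: Pose2D, b: Pose2D) -> Pose2D:
    """Pose of b expressed in the frame of a (the edge from a to b)."""
    dx, dy = b[0] - a[0], b[1] - a[1]
    c, s = math.cos(a[2]), math.sin(a[2])
    return (c * dx + s * dy, -s * dx + c * dy, b[2] - a[2])


base_0: Pose2D = (0.0, 0.0, 0.0)
base_1: Pose2D = (1.0, 0.0, 0.0)    # robot drove 1 m forward
mount: Pose2D = (0.0, 0.0, math.pi)  # laser mounted backwards on the base

edge_base = relative(base_0, base_1)
edge_laser = relative(compose(base_0, mount), compose(base_1, mount))
print(edge_base, edge_laser)
```

The base-frame edge says "1 m forward" while the laser-frame edge says "1 m backward"; if constraints from one frame were ever applied to nodes stored in the other, a 180° inconsistency of the kind reported here is what one would expect to see.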

|

@SteveMacenski I rotated my RPLIDAR to set the cord toward the front of my robot last year, which solved the spurious map rotation issue for me. I will try running some bag files through the new and old code this weekend to see if I can obtain a consistent positive and negative result with the old/new code. |

|

Noetic #325 appears to entirely fix the reported issue! I ran the original bag file from this issue through the current main branch a few times and got different results every time, none of which looked reasonable: Then I used Noetic #325 many times and obtained this result every time: The fact that Noetic #325 not only creates a map that is reasonable, but that it is also 100% consistent on every run for this bag file is wonderful! |

|

Great to hear! Now we're just waiting to see how localization is impacted, see this comment (#355 (comment)) if you have a few moments to test in localization mode too to see if you get any warnings out of the ordinary. The only thing blocking me from merging and releasing these updates to binaries for all distros is validation from someone on the localization warnings potentially reported. I couldn't get them locally in my sandbox environment, but I don't have a large production space like that above or localization dataset different from the SLAM run. |

|

@SteveMacenski Just to be clear, "localization" is when I load an existing map and send the robot to points on that map, correct? I did save a map, restart ROS, and load the map using Noetic #325 and let my robot randomly navigate to various points on the map for about 6 hours. The orientation of the map stayed true to the scan data the entire time. This is the area I am testing in: It is in my home, so also not an enormous area. However, I did not look at the log at all. I will set it up and run it again this week and look at the log output. Worst case I might not get to it until this weekend again. |

|

We mean the localization mode available via the localization node https://github.com/SteveMacenski/slam_toolbox/blob/noetic-devel/slam_toolbox/launch/localization.launch#L3 rather than the sync/async mapping modes. You can load a pose graph in these modes and continue adding to the map, but that's different than the pure localization mode. I don't know practically speaking if there would be any difference in whether I expect that you'd see any warnings or not, but just to play it safe, the localization mode is where I was told about this potential warning issue introduced in this PR. That test, if you just did continued mapping in the async/sync nodes, is also a good test and a good datapoint that it A) worked and B) you didn't see a terminal flooded with warnings after 6 hours. |

…e lidar is mounted backwards (#326) * fix the issue (#198) that loop closures can rotate the map 180° if the lidar is mounted backwards Set the edges (constraints) based on the robot base frame (base_footprint) instead of sensor frame (laser). * linting things * add function GetCorrectedAt to simplify getting robot pose * add update() when setting corrected pose after optimizing

…e lidar is mounted backwards (#325) * fix the issue (#198) that loop closures can rotate the map 180° if the lidar is mounted backwards Set the edges (constraints) based on the robot base frame (base_footprint) instead of sensor frame (laser). * linting things * add function GetCorrectedAt to simplify getting robot pose * add update() when setting corrected pose after optimizing

…th exception about pthread_mutex_lock (#386) * fix the issue (#198) that loop closures can rotate the map 180° if the lidar is mounted backwards Set the edges (constraints) based on the robot base frame (base_footprint) instead of sensor frame (laser). * linting things * add function GetCorrectedAt to simplify getting robot pose * add update() when setting corrected pose after optimizing * fix issue (#180) that localization mode may terminate unexpectedly with exception about pthread_mutex_lock

…th exception about pthread_mutex_lock (#390) * fix the issue (#198) that loop closures can rotate the map 180° if the lidar is mounted backwards Set the edges (constraints) based on the robot base frame (base_footprint) instead of sensor frame (laser). * linting things * add function GetCorrectedAt to simplify getting robot pose * add update() when setting corrected pose after optimizing * fix issue (#180) that localization mode may terminate unexpectedly with exception about pthread_mutex_lock

Required Info:

Steps to reproduce issue

I created a minimal repo with bags, config and launch files:

https://github.com/saschroeder/turtlebot_slam_testing

The bug always occurs with rosbags and sometimes in simulation, too. Disabling loop closure prevents the bug.

I can upload more rosbags if necessary.

Expected behavior

Record an accurate map and correctly use loop closure to improve the map.

Actual behavior

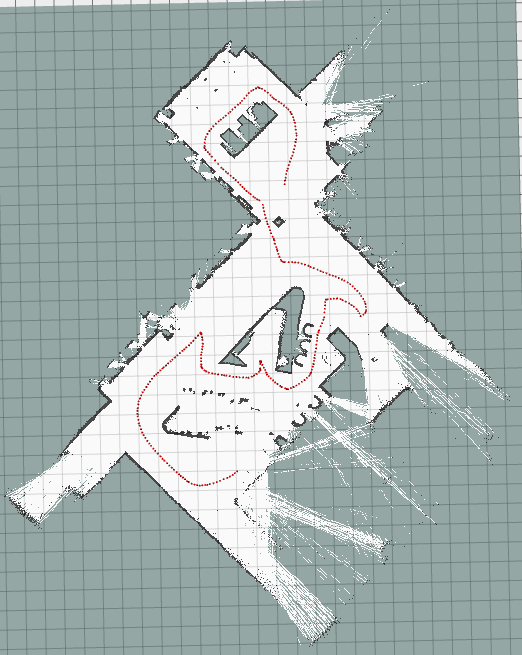

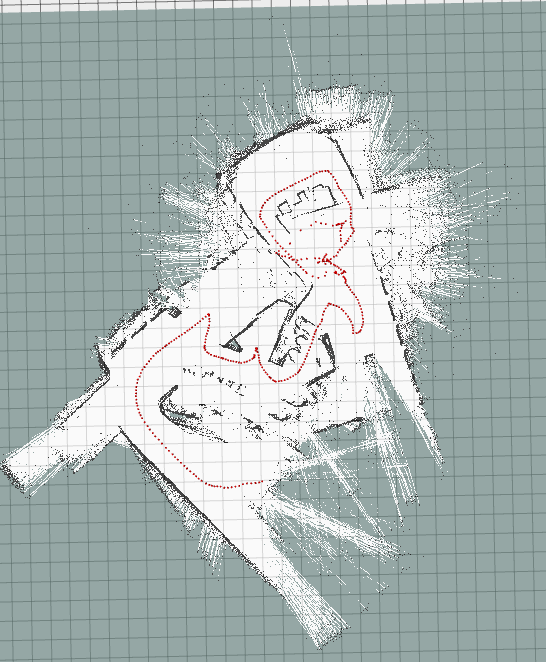

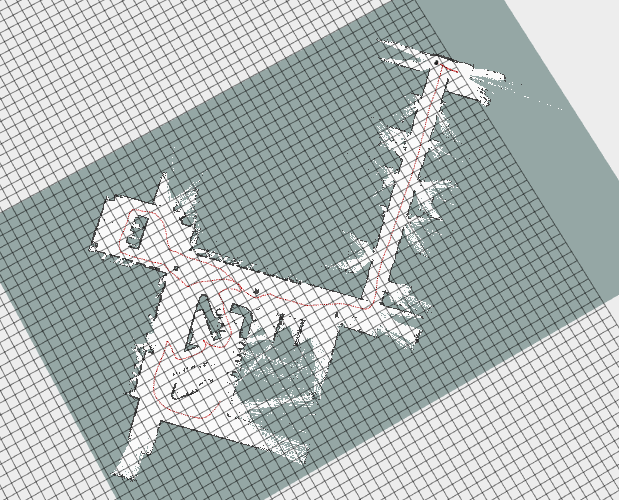

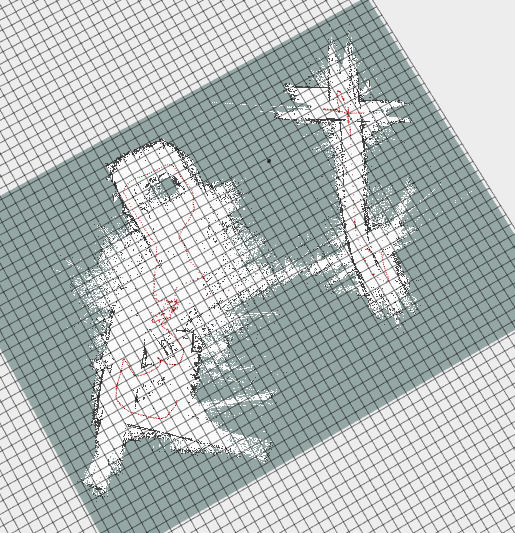

After some time the map is rotated around 180° (may be related or not) and some time after this (seconds to minutes) the pose graph is optimized in a wrong way, causing the map to be broken (see images below).

Additional information

Example 1 (before/after optimization):

Example 2 (before/ after optimization):
