-

Notifications

You must be signed in to change notification settings - Fork 83

New issue

Have a question about this project? Sign up for a free GitHub account to open an issue and contact its maintainers and the community.

By clicking “Sign up for GitHub”, you agree to our terms of service and privacy statement. We’ll occasionally send you account related emails.

Already on GitHub? Sign in to your account

Integrate ensemble methods in AutoML #1253

Conversation

Codecov Report

@@ Coverage Diff @@

## main #1253 +/- ##

==========================================

+ Coverage 99.95% 99.95% +0.01%

==========================================

Files 213 213

Lines 13436 13555 +119

==========================================

+ Hits 13429 13548 +119

Misses 7 7

Continue to review full report at Codecov.

|

|

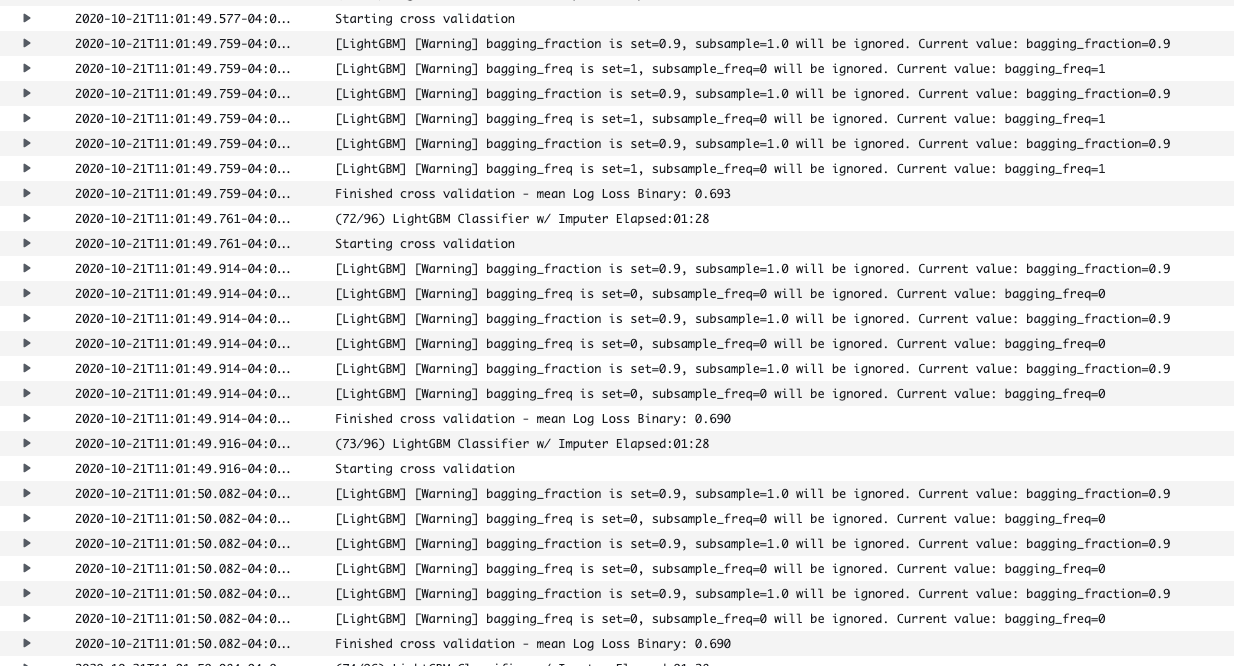

@dsherry Okay! I kicked off a test last night. Seems like there aren't errors now, but I am getting a lot of warnings based on the update: Are we curious and want to wait for tests to complete to see how performance changes with LightGBM? Or is that a nice to have, but not necessary for merging? |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

LGTM on my end - just a couple things to clean up.

…nto 1130_ensemble_in_automl

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

@angela97lin I think this is great! I have some questions (mainly for my understanding lol)

| if self.batch_number == 1: | ||

| self._first_batch_results.append((score_to_minimize, pipeline.__class__)) | ||

|

|

||

| if pipeline.model_family not in self._best_pipeline_params and score_to_minimize is not None: |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

How come we check None and not np.nan (the score that AutoML uses when a pipeline fails)?

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Haha I think this came out of the test_pipelines_in_batch_return_none test which checks what if the pipeline returns None 😅 When None is returned, we get an TypeError: '<' not supported between instances of 'NoneType' and 'int'; np.nan doesn't throw this error since you can compare (though score_to_minimize < current_best_score will always be False so we never update the value to np.nan)

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

@angela97lin so exciting that we're ready to 🚢 this, nice going!

As discussed, let's change the automl default to ensembling=False. Other than that, all that's blocking: one comment in iterative algo, log message in _compute_cv_scores

It would be great to add the missing docstrings as well, and I left a couple other suggestions.

| pipeline_params = pipeline_dict['parameters'] | ||

| input_pipelines.append(pipeline_class(parameters=self._transform_parameters(pipeline_class, pipeline_params))) | ||

| ensemble = _make_stacked_ensemble_pipeline(input_pipelines, input_pipelines[0].problem_type) | ||

| next_batch.append(ensemble) | ||

| else: | ||

| idx = (self._batch_number - 1) % len(self._first_batch_results) |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

I believe this should be updated to (self._batch_number - 1) % (len(self._first_batch_results) + 1), to match the other modular arithmetic you added in the elif above.

This selects which pipeline class we should tune in the current batch, when we're not in the 0th batch or in a stacked ensemble batch.

This is an aside, but in the future I hope we can figure out how to represent the state here in a less confusing way! I think our requirements for this code have grown a little beyond its means, haha.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Ahh, really good catch. We have to update it if self.ensembling is True:

num_pipeline_classes = (len(self._first_batch_results) + 1) if self.ensembling else len(self._first_batch_results)

idx = (self._batch_number - 1) % num_pipeline_classes

| assert any([p != dummy_binary_pipeline_classes[0]({}).parameters for p in all_parameters]) | ||

|

|

||

| for i in range(1, 5): | ||

| for _ in range(len(dummy_binary_pipeline_classes)): |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

This is totally a style nit-pick / me just confusing myself, haha, but why the double-for here?

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Ah, the for i in range(1, 5) is every cycle of batches (goes through every pipeline class) so it tests that the ensemble is called at the end of every cycle. And then the inner for loop is for each pipeline class. Really confusing stuff 😂

…nto 1130_ensemble_in_automl

|

@dsherry @freddyaboulton Thanks for all of the great feedback! I've addressed all of the comments you guys made, so this PR should be good to merge :D If there's anything to follow up on, we can open a separate PR after! |

Closes #1130