ARTEMIS-2676 PageCursorProviderImpl::cleanup can save decoding pages without large messages #3044

|

I'm planning to add another commit to try to save completely reading the @wy96f @clebertsuconic it seems that I cannot assign reviewers, so I'm calling you out directly ;) |

|

Could you please wait for my PR to be merged first? I'm also changing these methods, and I want to have a version closer to what we have now, as I'm cherry-picking to a production branch based on a previous version. |

|

Sure bud, there is no hurry, and this change should be simple enough to be rebased against yours when you are done ;) |

|

I've run the CI tests and this change seems to work fine yay |

|

@clebertsuconic

I see that ddd8ed4 has introduced |

|

@clebertsuconic has confirmed that |

|

@franz1981 there's a conflict with master. can you rebase it please? |

|

@clebertsuconic yep, there have been some new changes around that part recently, so tomorrow I will take care of rebasing ;) |

|

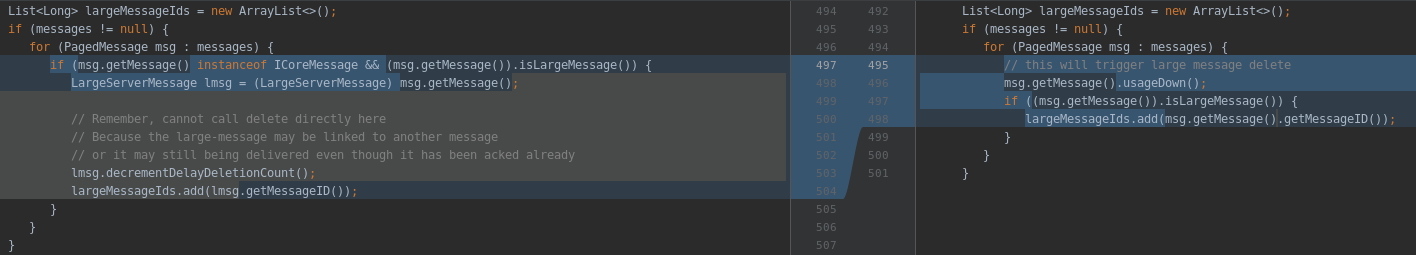

I could fix the rebasing, however your implementation is not valid. Up until CoreMessages, we used a special record type on paging, only used for Large Messages. With AMQP, I didn't use that record at all. Instead, I used the Persister from the AMQP Protocol. So, you would have to check the Persister type at the proper record position. Besides, the following implementation is not self-documenting... if things change this will likely introduce bugs (lack of encapsulation of this rule): You would need to encapsulate this through PagePosition for Core records, or check the Persister, and do the proper encapsulation if you really want to implement such a feature. |

|

Look at the AMQPLargeMessagePersister. You would need to check that record... but please, you would need to have the proper operations on the OO model. This is not so much about OO as about organizing the code in such a way that we can find the operation when we need it. I think it's valid to check if the record is for Paging, but you would have to do it in an organized way. |

|

@clebertsuconic I see on

    public boolean isLargeMessage() {
       return message instanceof ICoreMessage && ((ICoreMessage)message).isLargeMessage();
    }

and

    @Override
    public void encode(final ActiveMQBuffer buffer) {
       buffer.writeLong(transactionID);
       boolean isLargeMessage = isLargeMessage();
       buffer.writeBoolean(isLargeMessage);

why can't we fix check/encode/decode to correctly write the

I've added a new commit that tries to minimize the changes and that is taking this route: I will add some tests to validate this new behaviour. Please let me know how it looks 👍 |

|

@clebertsuconic I've tried to come up with a test to cover AMQP large and standard messages: let me know if the way I create and write |

Force-pushed from 4a08a38 to 996bcdb.

    final PagedMessageImpl msg = new PagedMessageImpl(encodedSize, storageManager);
    msg.decode(fileBufferWrapper);
    assert fileBuffer.get(endPosition) == Page.END_BYTE : "decoding cannot change end byte";
    msg.initMessage(storage);

@clebertsuconic I see that sometimes we use storage and sometimes the member storage manager stored on the Page... any idea which one would be the more correct to use?

|

I've rebased against master: the logic should be correct, but I would like to add some unit tests around the introduced logic too :) |

|

I see that this one is a fairly major perf improvement on paging, but I still want to add some more tests on the binary compatibility of PagedMessage decoding, which I see we currently don't cover in tests |

|

I will try to add the missing tests in the next few days

…without large messages

|

I was thinking about this... I need it as part of this release. The change you made here wouldn't break compatibility if released now, but it would break if released later. Up till now we didn't have any other type of large message. If we released later, we would have to deal with previous versions where you may have an AMQPLargeMessage with byte(1), while this PR is expecting byte(2). So, I am merging this now. Besides, I have a full pass on this PR as well. |
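To illustrate the compatibility concern in the comment above, here is a minimal sketch of a one-byte large-message marker. It is not the Artemis implementation: it uses plain `java.nio.ByteBuffer` instead of `ActiveMQBuffer`, and the class `LargeKindSketch`, the `Kind` enum and the value 0 for "not large" are hypothetical. Only the values 1 and 2 are taken from the comment (byte(1) for the previously existing large-message marker, byte(2) for what this PR expects for an AMQP large message).

```java
import java.nio.ByteBuffer;

// Hypothetical sketch, not the actual PagedMessageImpl code.
final class LargeKindSketch {

   // Assumed marker values: 1 and 2 follow the comment above, 0 is an assumption.
   enum Kind {
      NOT_LARGE((byte) 0), CORE_LARGE((byte) 1), AMQP_LARGE((byte) 2);

      final byte code;

      Kind(byte code) {
         this.code = code;
      }

      static Kind fromCode(byte code) {
         for (Kind kind : values()) {
            if (kind.code == code) {
               return kind;
            }
         }
         throw new IllegalArgumentException("unknown large-message marker: " + code);
      }
   }

   // Writes the transaction id followed by the one-byte marker.
   static void encodeHeader(ByteBuffer buffer, long transactionID, Kind kind) {
      buffer.putLong(transactionID);
      buffer.put(kind.code);
   }

   // Reads the header only and returns the marker, without touching the message body.
   static Kind decodeKind(ByteBuffer buffer) {
      buffer.getLong(); // skip the transaction id
      return Kind.fromCode(buffer.get());
   }
}
```

Under these assumptions, a record written by an intermediate version that marked an AMQP large message with byte 1 would be decoded here as `CORE_LARGE`, which is the mismatch the comment wants to avoid by releasing the change immediately.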

PageCursorProviderImpl::cleanup calls PageCursorProviderImpl::finishCleanup, which fully reads pages (when they are not present in the PageCache) just to trigger the deletion of large messages.

The decoding phase could be skipped, and possibly the page read as well.
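The idea of skipping the decoding phase can be sketched as a scan that looks only at each record's header. This is a rough illustration under an assumed record layout, not the real page format: each record is taken to be a start byte, an `int` payload length, a payload beginning with a `long` transaction id and a one-byte large-message marker, and an end byte. The class `PageScanSketch`, its methods and the delimiter values are hypothetical.

```java
import java.nio.ByteBuffer;

// Hypothetical sketch: the record layout below is an assumption made for illustration.
final class PageScanSketch {

   static final byte START_BYTE = (byte) '{'; // assumed delimiter values
   static final byte END_BYTE = (byte) '}';

   // Appends one record: START_BYTE, payload length, transaction id, marker, body, END_BYTE.
   static void writeRecord(ByteBuffer page, long transactionID, byte largeMarker, byte[] body) {
      page.put(START_BYTE);
      page.putInt(Long.BYTES + 1 + body.length);
      page.putLong(transactionID);
      page.put(largeMarker);
      page.put(body);
      page.put(END_BYTE);
   }

   // Counts records whose marker is non-zero, jumping over message bodies instead of decoding them.
   static int countLargeRecords(ByteBuffer page) {
      int large = 0;
      int pos = page.position();
      final int limit = page.limit();
      while (pos < limit) {
         if (limit - pos < 1 + Integer.BYTES || page.get(pos) != START_BYTE) {
            throw new IllegalStateException("corrupted record header at " + pos);
         }
         final int payloadLength = page.getInt(pos + 1);
         final int payloadStart = pos + 1 + Integer.BYTES;
         final int endPos = payloadStart + payloadLength;
         if (payloadLength < Long.BYTES + 1 || endPos >= limit || page.get(endPos) != END_BYTE) {
            throw new IllegalStateException("corrupted record at " + pos);
         }
         // The marker sits right after the transaction id; the rest of the payload is never read.
         if (page.get(payloadStart + Long.BYTES) != 0) {
            large++;
         }
         pos = endPos + 1;
      }
      return large;
   }
}
```

Only the records flagged by such a scan would then need a full decode to delete their large-message files. A page with no flagged records would need no decoding at all.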