New issue

Have a question about this project? Sign up for a free GitHub account to open an issue and contact its maintainers and the community.

By clicking “Sign up for GitHub”, you agree to our terms of service and privacy statement. We’ll occasionally send you account related emails.

Already on GitHub? Sign in to your account

[AIRFLOW-6544] add log_id to end_of_log mark log record #7141

Conversation

|

Congratulations on your first Pull Request and welcome to the Apache Airflow community! Here are some useful points:

Apache Airflow is a community-driven project and together we are making it better 🚀. In case of doubts contact the developers at: |

Codecov Report

@@ Coverage Diff @@

## master #7141 +/- ##

=========================================

+ Coverage 85.24% 86% +0.76%

=========================================

Files 683 866 +183

Lines 39155 40559 +1404

=========================================

+ Hits 33378 34884 +1506

+ Misses 5777 5675 -102

Continue to review full report at Codecov.

|

| self.handler.stream.write(self.end_of_log_mark) | ||

| if self.write_stdout: | ||

| print() | ||

| self.handler.emit(logging.makeLogRecord({'msg': self.end_of_log_mark})) | ||

|

|

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Doesn't this change where the end of log mark goes?

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

yes, I sent email to them and suggested those changes are bad and copied that message to you. Please see email I sent to you and authors of 5528.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

sorry I meant that I sent the email to Andrii and the two authors of 5528.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

from 5528:

When the end_of_log_mark is wrapped in a log record, the end_of_log_mark can no longer be

able to match the log line in _read:

metadata['end_of_log'] = False if not logs \

else logs[-1].message == self.end_of_log_mark.strip()

It leads to the UI keeps calling backend and generates lots of load to ES.

By removing the log_id from the end-of-log mark, it would make it worse as the ui would continue to try to find the end-of-log mark and it won't ever find it as it searches the end-of-log mark by log_id.

I am not sure what the sentence mean by "When the end_of_log_mark is wrapped in a log record". I also observed that the end-of-log mark might end up within the same line of other log lines and it would prevent us from finding the end-of-log mark in those cases. To address that, I always add an obnoxious print right in front of the end-of-log mark line, to ensure the "end-of-log" mark is always in a separate line when printing to console. This is import for filebeat/logstash on kubernetes to pick up the end-of-log mark log line in a separate document.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

It means the last line would be something like [2020-01-16 07:58:32,712] {es_task_handler.py:XXX} INFO [end_of_log_mark] and thus made the reader unable to understand it.

I'm a bit lost how did this removed the log id from the end_of_log_mark. Isn't the log_id we constructed in this file only for log fetching? My understanding is that the log_id is determined when we upload the log, e.g. when we pipe stdout to logstash or when we upload file through filebeat to logstash.

Maybe I was understanding this wrong and there is indeed a bug. In that case I would agree on spliting this change into two PRs for sanity purpose.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Hi @larryzhu2018 for your description:

It looks like you have write_stdout as true, in fact, my pr: #7199 fixed the always true for write_stdout. could you please test the case when write_stdout is False.

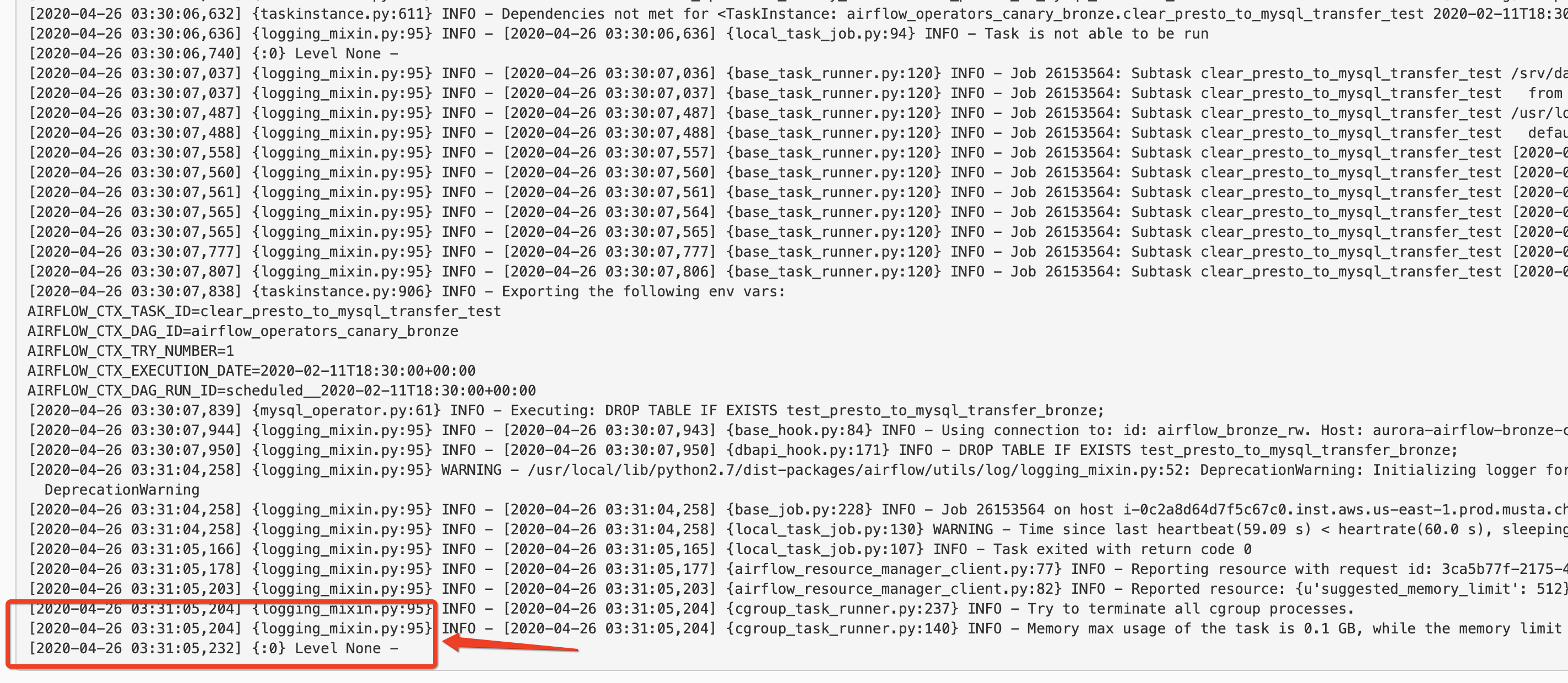

Our set up is:

write_stdout is False

json is False

END_OF_LOG_MARK = u'\u0004\n'

This is what we see in the Kibana

I remember if the end_of_log is wrapped in a logging.makeLogRecord it will start with

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

this works as long as you do not put white spaces into your end_of_log_mark. I think you would just ask for troubles by putting white space characters into the end-of-log mark.

I am not sure why using white space will cause troubles.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Hi @larryzhu2018 for your description:

It looks like you have

write_stdoutastrue, in fact, my pr: #7199 fixed the alwaystrueforwrite_stdout. could you please test the case whenwrite_stdoutisFalse.

[larryzhu2018]: the test case I have in the PR, test_close_with_log_id, is setting write_stdout to False so please check.Our set up is:

write_stdoutisFalse

jsonisFalse

END_OF_LOG_MARK = u'\u0004\n'This is what we see in the Kibana

I remember if the

end_of_logis wrapped in alogging.makeLogRecordit will start with

it is confusing in kibana because you use a white space as the mark. what do you think this would look like if you use "end_of_log_for_airflow_task_instance" as that is what I use in deployment.

There's a lot of disagreement going on here.

@larryzhu2018 Can you please give me DETAILED instructions on how to test this bug and your fix locally, assujming I have no current elasticsearch or kibana set up currently. (Docker would be preferred.)

Please note that I did not change the production code here. I only reverted Ping's recent fix that broke logging using elastic search. I also provided unit test case that tests the log_id logic, show how log_id is used and I also showed here the ingest processor we use so any one who has an elasticsearch can copy and paste and try it out. Is this sufficient? I won't have time to add docker, and elastic ingestion nodes etc as I did not add the elastic-search logging support myself. I only reverted a recent change because the authors did not understand the code logic or how it is supposed to work. Do you see the community service I provided here?

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

this works as long as you do not put white spaces into your end_of_log_mark. I think you would just ask for troubles by putting white space characters into the end-of-log mark.

I am not sure why using white space will cause troubles.

see the .strip() call as I quoted earlier. This basically means if you have empty spaces in your end-of-log mark, it won't work and it will confuse the users.

else logs[-1].message == self.end_of_log_mark.strip()

Does this make sense now?

But please do not remove .strip() call to "fix" this. It is hard to guarantee the event pipelines preserve whitespaces so it is best to not use whitespace in your end-of-log mark.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

else logs[-1].message == self.end_of_log_mark.strip()I think this will not work if the

end_of_log_markis wrapped as a log record, as themessagewill be the format that Kevin mentioned and it also depends on thelog formatter.

I did not change any production code but did add a test case to show how this works.

in short, you will need to deploy an ingest log processor, and the ones we have look like this:

"description" : "cluster json log Pipeline",

"processors" : [

{

"rename" : {

"field" : "message",

"target_field" : "raw_message"

}

},

{

"json" : {

"field" : "raw_message",

"add_to_root" : false,

"target_field" : "json_target"

}

},

{

"grok" : {

"field" : "json_target.message",

"patterns" : [

"Job %{DATA:job_id}: Subtask %{DATA} %{GREEDYDATA:json_msg}",

"%{GREEDYDATA}"

]

}

},

{

"json" : {

"field" : "json_msg",

"add_to_root" : true,

"if" : "ctx.job_id != null"

}

},

{

"json" : {

"field" : "raw_message",

"add_to_root" : true,

"if" : "ctx.job_id == null"

}

},

{

"remove" : {

"field" : "json_msg",

"ignore_missing" : true

}

},

{

"remove" : {

"field" : "json_target"

}

},

{

"set" : {

"field" : "event.kind",

"value" : "tasks",

"if" : "ctx.message != null"

}

},

{

"set" : {

"field" : "event.dataset",

"value" : "airflow",

"if" : "ctx.dag_id != null && ctx.task_id != null"

}

},

{

"set" : {

"field" : "log_id",

"value" : "{{dag_id}}-{{task_id}}-{{execution_date}}-{{try_number}}",

"if" : "ctx.event?.dataset == 'airflow'"

}

},

{

"set" : {

"field" : "offset",

"value" : "{{log.offset}}",

"if" : "ctx.event?.dataset == 'airflow'"

}

}

],

"on_failure" : [

{

"set" : {

"field" : "error.message",

"value" : "{{ _ingest.on_failure_message }}"

}

}

]

|

@ashb IMO better to split 2 unrelated changes into 2 tickets:

|

|

If you can spell @ashb correctly, that would be great. |

sorry my mistake :( |

|

@larryzhu2018 Yup, this is two tickets as Andrii mentions. And this seems to revert the change from #6159 -- so you will need to explain this change in much more detail as to why you think it your version is now right. |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Issue link: AIRFLOW-6544

The “end of log” marker does not include the primary key of the logs: log_id. The issue is then airflow-web does not know when to stop tailing the logs.

We print the end of log marker using handler emit() method that adds the dag_id, task_id, execution_date and try_number into the log records. Previously we do not have tests to validate the log_id look up logic from elastic search and such a test is added here.

You have to add a description above |

is it correct now? |

|

There's a lot of disagreement going on here. @larryzhu2018 Can you please give me DETAILED instructions on how to test this bug and your fix locally, assujming I have no current elasticsearch or kibana set up currently. (Docker would be preferred.) |

Please note that I did not change the production code here. I only reverted Ping's recent fix that broke logging using elastic search. I also provided unit test case that tests the log_id logic, show how log_id is used and I also showed here the ingest processor we use so any one who has an elasticsearch can copy and paste and try it out. Is this sufficient? I won't have time to add docker, and elastic ingestion nodes etc as I did not add the elastic-search logging support myself. I only reverted a recent change because the authors did not understand the code logic or how it is supposed to work. Do you see the community service I provided here? |

The disagreement is wether it's even broken or not. You say it is, Ping says it isn't. If Ping/Kevin don't respond soon then I'll try and follow your ingest instructions and check our the before and after. |

|

Ping has been terse. I have been going out of my way explaining it, writing test case to show how it is expected to work. I hope once Ping reviews my explanations and unit tests, it would resolve the disagreements. Thanks.

Get Outlook for iOS<https://aka.ms/o0ukef>

…________________________________

From: Ash Berlin-Taylor <notifications@github.com>

Sent: Wednesday, January 29, 2020 2:22:36 PM

To: apache/airflow <airflow@noreply.github.com>

Cc: larryzhu2018 <larryzhu@live.com>; Mention <mention@noreply.github.com>

Subject: Re: [apache/airflow] [AIRFLOW-6544] add log_id to end_of_log mark log record (#7141)

I only reverted Ping's recent fix that broke logging using elastic search.

The disagreement is wether it's even broken or not. You say it is, Ping says it isn't.

If Ping/Kevin don't respond soon then I'll try and follow your ingest instructions and check our the before and after.

—

You are receiving this because you were mentioned.

Reply to this email directly, view it on GitHub<https://eur03.safelinks.protection.outlook.com/?url=https%3A%2F%2Fgithub.com%2Fapache%2Fairflow%2Fpull%2F7141%3Femail_source%3Dnotifications%26email_token%3DAKTDZPHJB6JTFQBRABJMQXDRAH6SZA5CNFSM4KFUTS22YY3PNVWWK3TUL52HS4DFVREXG43VMVBW63LNMVXHJKTDN5WW2ZLOORPWSZGOEKI65GI%23issuecomment-579989145&data=02%7C01%7C%7Cb408b76ec8e546fea0bb08d7a509bf67%7C84df9e7fe9f640afb435aaaaaaaaaaaa%7C1%7C0%7C637159333589542743&sdata=EHRK5cN35zxsMFf8RgyUO1zPbSDmabPiDz1t4bj2KKs%3D&reserved=0>, or unsubscribe<https://eur03.safelinks.protection.outlook.com/?url=https%3A%2F%2Fgithub.com%2Fnotifications%2Funsubscribe-auth%2FAKTDZPDRGG225V2BGULDNI3RAH6SZANCNFSM4KFUTS2Q&data=02%7C01%7C%7Cb408b76ec8e546fea0bb08d7a509bf67%7C84df9e7fe9f640afb435aaaaaaaaaaaa%7C1%7C0%7C637159333589552754&sdata=AUalojADZWGighgNd1Dpfa7aCPwvU0iqAmjf64A60MU%3D&reserved=0>.

|

|

Any updates on this? Not sure why, but this seems stuck. Any help wanted? |

can you please help to move this forward? |

|

Can you please help to move this forward? |

testing now |

|

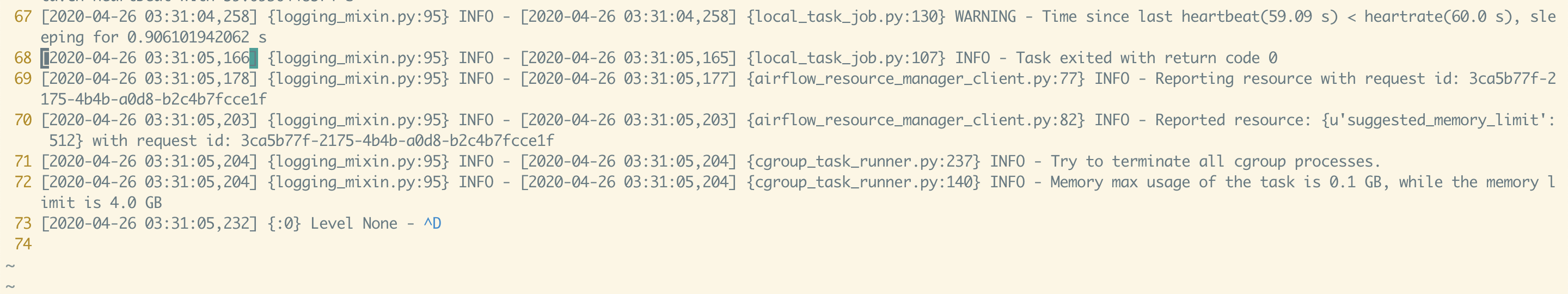

I replicated the change in our internal staging env, the webserver does not stop fetching the log until it timed out. As you can see the last line is Our setting is this is the log from the log file: One thing i also noticed is that in your code, the |

logging pipeline in general does not work with whitespaces. Can you please change this to "end_of_log_for_airflow_task_instance" and also as I mentioned before you will need to turn on json format for the elastic search scenarios to work well because otherwise parsing the dag_id, task_id etc would be harder in elasticsearch. Please see the ingestion pipeline that I shared out earlier. Here are the configurations I use for enabling logging for elasticsearch config:

thanks. I did not change this. this does not impact my scenarios as I deploy airflow in kubernetes and I need to have the write-standout always be true. |

|

This issue has been automatically marked as stale because it has not had recent activity. It will be closed if no further activity occurs. Thank you for your contributions. |

The “end of log” marker does not include the primary key of the logs: log_id. The issue is then airflow-web does not know when to stop tailing the logs.

We print the end of log marker using handler emit() method that adds the dag_id, task_id, execution_date and try_number into the log records. Previously we do not have tests to validate the log_id look up logic from elasticsearch and such a test is added here.

Issue link: AIRFLOW-6544

Description above provides context of the change

Commit message/PR title starts with

[AIRFLOW-NNNN]. AIRFLOW-NNNN = JIRA ID*Unit tests coverage for changes (not needed for documentation changes)

Commits follow "How to write a good git commit message"

Relevant documentation is updated including usage instructions.

I will engage committers as explained in Contribution Workflow Example.

* For document-only changes commit message can start with

[AIRFLOW-XXXX].In case of fundamental code change, Airflow Improvement Proposal (AIP) is needed.

In case of a new dependency, check compliance with the ASF 3rd Party License Policy.

In case of backwards incompatible changes please leave a note in UPDATING.md.

Read the Pull Request Guidelines for more information.