New issue

Have a question about this project? Sign up for a free GitHub account to open an issue and contact its maintainers and the community.

By clicking “Sign up for GitHub”, you agree to our terms of service and privacy statement. We’ll occasionally send you account related emails.

Already on GitHub? Sign in to your account

ARROW-11266: [Rust][DataFusion] Implement vectorized hashing for hash aggregate [WIP] #9213

Closed

Conversation

This file contains bidirectional Unicode text that may be interpreted or compiled differently than what appears below. To review, open the file in an editor that reveals hidden Unicode characters.

Learn more about bidirectional Unicode characters

Use unique_ptr to hold FunctionOptions derived classes instances to fix `invalid-offsetof` python build warnings. ~/arrow/python/build/temp.linux-x86_64-3.6/_compute.cpp:26034:146: warning: offsetof within non-standard-layout type ‘__pyx_obj_7pyarrow_8_compute__CastOptions’ is undefined [-Winvalid-offsetof] x_type_7pyarrow_8_compute__CastOptions.tp_weaklistoffset = offsetof(struct __pyx_obj_7pyarrow_8_compute__CastOptions, __pyx_base.__pyx_base.__weakref__); Closes apache#9274 from cyb70289/offsetof Authored-by: Yibo Cai <yibo.cai@arm.com> Signed-off-by: Benjamin Kietzman <bengilgit@gmail.com>

… -97% for length) # Rational Rust forbids safely accessing uninitialized memory because it is undefined behavior. However, when building `Buffer`s, it is important to be able to _write_ to uninitialized memory regions, thereby avoiding the need to write _something_ to it before using it. Currently, all our initializations are zeroed, which is expensive. apache#9076 modifies our allocator to allocate uninitialized regions. However, by itself, this is not useful if we do not offer any methods to write to those (uninitialized) regions. # This PR This PR is built on top of apache#9076 and introduces methods to extend a `MutableBuffer` from an iterator, thereby offering an API to efficiently grow `MutableBuffer` without having to initialize memory regions with zeros (i.e. without `with_bitset` and the like). This PR also introduces methods to create a `Buffer` from an iterator and a `trusted_len` iterator. The design is inspired in `Vec`, with the catch that we use stable Rust (i.e. no trait specialization, no `TrustedLen`), and thus have to expose a bit more methods than what `Vec` exposes. This means that we can't use that (nicer) API for trustedlen iterators based on `collect()`. Note that, as before, there are still `unsafe` uses of the `MutableBuffer` derived from the fact that it is not a generic over a type `T` (and thus people can mix types and grow the buffer in unsound ways). Special thanks to @mbrubeck for all the help on this, originally discussed [here](https://users.rust-lang.org/t/collect-for-exactsizediterator/54367/6). ```bash git checkout master cargo bench --bench arithmetic_kernels git checkout length_faster cargo bench --bench arithmetic_kernels git checkout 16bc720 cargo bench --bench length_kernel git checkout length_faster ``` ``` Switched to branch 'length_faster' (use "git pull" to merge the remote branch into yours) Compiling arrow v3.0.0-SNAPSHOT (/Users/jorgecarleitao/projects/arrow/rust/arrow) Finished bench [optimized] target(s) in 1m 02s Running /Users/jorgecarleitao/projects/arrow/rust/target/release/deps/arithmetic_kernels-ec2cc20ce07d9b83 Gnuplot not found, using plotters backend add 512 time: [522.24 ns 523.67 ns 525.26 ns] change: [-21.738% -20.960% -20.233%] (p = 0.00 < 0.05) Performance has improved. Found 12 outliers among 100 measurements (12.00%) 9 (9.00%) high mild 3 (3.00%) high severe subtract 512 time: [503.18 ns 504.93 ns 506.81 ns] change: [-21.741% -21.075% -20.308%] (p = 0.00 < 0.05) Performance has improved. Found 4 outliers among 100 measurements (4.00%) 2 (2.00%) high mild 2 (2.00%) high severe multiply 512 time: [508.25 ns 512.04 ns 516.06 ns] change: [-22.569% -21.946% -21.305%] (p = 0.00 < 0.05) Performance has improved. Found 1 outliers among 100 measurements (1.00%) 1 (1.00%) high mild divide 512 time: [1.4711 us 1.4753 us 1.4799 us] change: [-24.783% -23.305% -22.176%] (p = 0.00 < 0.05) Performance has improved. Found 5 outliers among 100 measurements (5.00%) 2 (2.00%) high mild 3 (3.00%) high severe limit 512, 512 time: [373.47 ns 377.76 ns 382.21 ns] change: [+3.3055% +4.4193% +5.5923%] (p = 0.00 < 0.05) Performance has regressed. add_nulls_512 time: [502.94 ns 504.51 ns 506.28 ns] change: [-24.876% -24.299% -23.709%] (p = 0.00 < 0.05) Performance has improved. Found 2 outliers among 100 measurements (2.00%) 2 (2.00%) high severe divide_nulls_512 time: [1.4843 us 1.4931 us 1.5053 us] change: [-22.968% -22.243% -21.420%] (p = 0.00 < 0.05) Performance has improved. Found 24 outliers among 100 measurements (24.00%) 15 (15.00%) low mild 1 (1.00%) high mild 8 (8.00%) high severe ``` Length (against the commit that fixes the bench, `16bc7200f3baa6e526aea7135c60dcc949c9b592`, not master): ``` length time: [1.5379 us 1.5408 us 1.5437 us] change: [-97.311% -97.295% -97.278%] (p = 0.00 < 0.05) Performance has improved. Found 12 outliers among 100 measurements (12.00%) 1 (1.00%) low severe 4 (4.00%) low mild 3 (3.00%) high mild 4 (4.00%) high severe ``` Closes apache#9235 from jorgecarleitao/length_faster Lead-authored-by: Jorge C. Leitao <jorgecarleitao@gmail.com> Co-authored-by: Matt Brubeck <mbrubeck@limpet.net> Signed-off-by: Andrew Lamb <andrew@nerdnetworks.org>

d68f99e

to

c4e7e94

Compare

…atch, remove test::format_batch The `test::format_batch` function does not have wide range of type support (e.g. it doesn't support dictionaries) and its output makes tests hard to read / update, in my opinion. This PR consolidates the datafusion tests to use `arrow::util::pretty::pretty_format_batches` both to reduce code duplication as well as increase type support This PR removes the `test::format_batch(&batch);` function and replaces it with `arrow::util::pretty::pretty_format_batches` and some macros. It has no code changes. This change the following benefits: 1. Better type support (I immediately can compare RecordBatches with `Dictionary` types in tests without having to update `format_batch` and apache#9233 gets simpler) 2. Better readability and error reporting (at least I find the code and diffs easier to understand) 3. Easier test update / review: it is easier to update the diffs (you can copy/paste the test output into the source code) and to review them This is a variant of a strategy that I been using with success in IOx [source link](https://github.com/influxdata/influxdb_iox/blob/main/arrow_deps/src/test_util.rs#L15) and I wanted to contribute it back. An example failure with this PR: ``` ---- physical_plan::hash_join::tests::join_left_one stdout ---- thread 'physical_plan::hash_join::tests::join_left_one' panicked at 'assertion failed: `(left == right)` left: `["+----+----+----+----+", "| a1 | b2 | c1 | c2 |", "+----+----+----+----+", "| 1 | 1 | 7 | 70 |", "| 2 | 2 | 8 | 80 |", "| 2 | 2 | 9 | 80 |", "+----+----+----+----+"]`, right: `["+----+----+----+----+----+", "| a1 | b1 | c1 | a2 | c2 |", "+----+----+----+----+----+", "| 1 | 4 | 7 | 10 | 70 |", "| 2 | 5 | 8 | 20 | 80 |", "| 3 | 7 | 9 | | |", "+----+----+----+----+----+"]`: expected: [ "+----+----+----+----+", "| a1 | b2 | c1 | c2 |", "+----+----+----+----+", "| 1 | 1 | 7 | 70 |", "| 2 | 2 | 8 | 80 |", "| 2 | 2 | 9 | 80 |", "+----+----+----+----+", ] actual: [ "+----+----+----+----+----+", "| a1 | b1 | c1 | a2 | c2 |", "+----+----+----+----+----+", "| 1 | 4 | 7 | 10 | 70 |", "| 2 | 5 | 8 | 20 | 80 |", "| 3 | 7 | 9 | | |", "+----+----+----+----+----+", ] ``` You can copy/paste the output of `actual` directly into the test code for an update. Closes apache#9264 from alamb/remove_test_format_batch Authored-by: Andrew Lamb <andrew@nerdnetworks.org> Signed-off-by: Andrew Lamb <andrew@nerdnetworks.org>

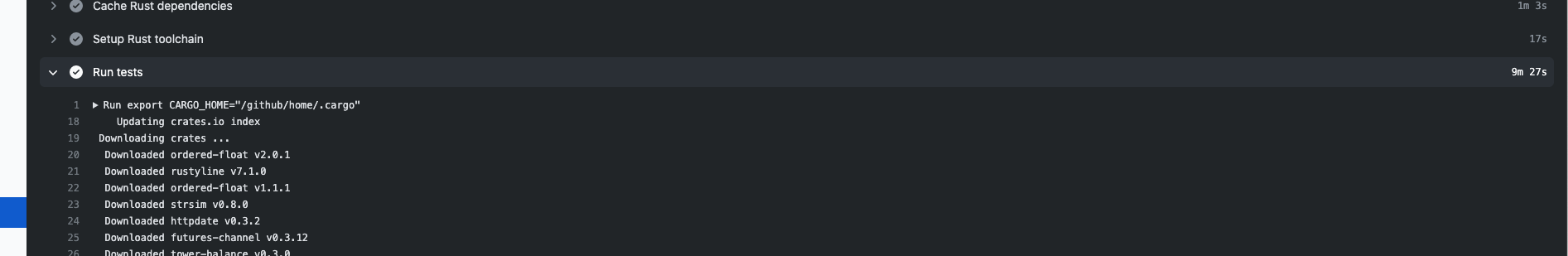

This PR changes the Rust CI job to include the prettyprint feature. (so we have CI coverage of that feature) As @paddyhoran noted, passing in feature flags to the root workspace doesn't do anything, and as it turns out we already invoke `cargo test` for the `arrow` crate separately. Prior to this PR I think that just ran the same tests again with the same features. After this PR, the second run of the arrow tests are run with the `prettyprint` feature Here is evidence that the tests are running twice. I picked a PR (randomly) https://github.com/apache/arrow/pull/9258/checks?check_run_id=1725967358 If you look at the logs for the run-tests action  You can see that the same test is run twice: ``` 2021-01-19T07:02:49.9476111Z test compute::kernels::boolean::tests::test_nonnull_array_is_not_null ... ok 2021-01-19T07:02:49.9476854Z test compute::kernels::boolean::tests::test_nonnull_array_is_null ... ok 2021-01-19T07:02:49.9477616Z test compute::kernels::boolean::tests::test_nonnull_array_with_offset_is_not_null ... ok 2021-01-19T07:02:49.9478427Z test compute::kernels::boolean::tests::test_nonnull_array_with_offset_is_null ... ok 2021-01-19T07:02:49.9479207Z test compute::kernels::boolean::tests::test_nullable_array_is_null ... ok 2021-01-19T07:02:49.9479948Z test compute::kernels::boolean::tests::test_nullable_array_is_not_null ... ok 2021-01-19T07:02:49.9480744Z test compute::kernels::boolean::tests::test_nullable_array_with_offset_is_not_null ... ok 2021-01-19T07:02:49.9481640Z test compute::kernels::boolean::tests::test_nullable_array_with_offset_is_null ... ok 2021-01-19T07:02:49.9487019Z test compute::kernels::boolean::tests::test_nullif_int_array_offset ... ok 2021-01-19T07:02:49.9487773Z test compute::kernels::boolean::tests::test_nullif_int_array ... ok ... 2021-01-19T07:07:23.4568865Z test compute::kernels::boolean::tests::test_nonnull_array_is_not_null ... ok 2021-01-19T07:07:23.4569576Z test compute::kernels::boolean::tests::test_nonnull_array_is_null ... ok 2021-01-19T07:07:23.4570337Z test compute::kernels::boolean::tests::test_nonnull_array_with_offset_is_not_null ... ok 2021-01-19T07:07:23.4571133Z test compute::kernels::boolean::tests::test_nonnull_array_with_offset_is_null ... ok 2021-01-19T07:07:23.4571885Z test compute::kernels::boolean::tests::test_nullable_array_is_not_null ... ok 2021-01-19T07:07:23.4572614Z test compute::kernels::boolean::tests::test_nullable_array_is_null ... ok 2021-01-19T07:07:23.4573383Z test compute::kernels::boolean::tests::test_nullable_array_with_offset_is_not_null ... ok 2021-01-19T07:07:23.4574183Z test compute::kernels::boolean::tests::test_nullable_array_with_offset_is_null ... ok 2021-01-19T07:07:23.4574929Z test compute::kernels::boolean::tests::test_nullif_int_array ... ok 2021-01-19T07:07:23.4575636Z test compute::kernels::boolean::tests::test_nullif_int_array_offset ... ok ``` Closes apache#9262 from alamb/alamb/run_prettyprint_ci Authored-by: Andrew Lamb <andrew@nerdnetworks.org> Signed-off-by: Jorge C. Leitao <jorgecarleitao@gmail.com>

…ull) values The idea of this PR is to have a function `from_iter_values` that (just like `from_iter`) creates an array based on an iterator, but from `T` instead of `Option<T>`. I have seen some places in DataFusion (especially `to_array_of_size`) where an `Array` is generated from a `Vec` of items, which could be replaced by this. The other iterators have some memory / time overhead in both creating and manipulating the null buffer (and in the case of `Vec` for allocating / dropping the Vec) Closes apache#9293 from Dandandan/array_iter_non_null Authored-by: Heres, Daniel <danielheres@gmail.com> Signed-off-by: Jorge C. Leitao <jorgecarleitao@gmail.com>

5e1be03

to

ed6b68a

Compare

d4608a9

to

356c300

Compare

|

@Dandandan I am closing this PR for the time being to clean up the Rust/Arrow PR backlog. Please let me know if this is a mistake |

|

@alamb thanks, probably will open this or a new one if/when I continue with it |

|

Closing this PR for now; We can reopen it if need be |

Sign up for free

to join this conversation on GitHub.

Already have an account?

Sign in to comment

Add this suggestion to a batch that can be applied as a single commit.

This suggestion is invalid because no changes were made to the code.

Suggestions cannot be applied while the pull request is closed.

Suggestions cannot be applied while viewing a subset of changes.

Only one suggestion per line can be applied in a batch.

Add this suggestion to a batch that can be applied as a single commit.

Applying suggestions on deleted lines is not supported.

You must change the existing code in this line in order to create a valid suggestion.

Outdated suggestions cannot be applied.

This suggestion has been applied or marked resolved.

Suggestions cannot be applied from pending reviews.

Suggestions cannot be applied on multi-line comments.

Suggestions cannot be applied while the pull request is queued to merge.

Suggestion cannot be applied right now. Please check back later.

This is a WIP PR for implementing a similar approach to hashing as used in the hash join.

For the hash-aggregate heavy query TCPH query 1 this speeds it up (without collision checking, although that should not significantly impact performance) by ~30%.

TODO:

Benchmark results

PR

Vectorized hashing branch: