
Add register_object_store to SessionContext

#55


It would be nice to figure out a cleaner abstraction for configuring the different providers in the Maybe a 3-variant

It's possible things have changed since I was working on the I believe this is needed because you still need to create the Then you could register files like how you showed with A quick peek at the DataFusion cookbook example for S3 shows how that's done on the Rust side. Take what I'm saying with a grain of salt, though, as I haven't been as involved lately.

@matthewmturner I'm not quite sure what changes you are suggesting; do you mind elaborating? The PR basically does the equivalent of the cookbook example, except it generates the clients using default credential providers (like `::from_env`) and just dispatches based on the URI scheme. This seems like the most straightforward way to do things.
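As a rough illustration of dispatching on the URI scheme, here is a minimal Python sketch. The PR itself does this in Rust and builds real clients with default credential providers; the scheme-to-provider table and the `provider_for` helper below are hypothetical, and the `gs`/`az` scheme names are assumptions.

```python
from urllib.parse import urlparse

# Hypothetical mapping from URI scheme to provider name. The real PR builds
# Rust object_store clients; these strings only stand in for those clients.
# The "gs" and "az" scheme names are assumptions for illustration.
PROVIDERS_BY_SCHEME = {
    "s3": "AmazonS3",
    "gs": "GoogleCloudStorage",
    "az": "MicrosoftAzure",
}


def provider_for(uri: str) -> str:
    """Return the (hypothetical) provider name for a URI based on its scheme."""
    scheme = urlparse(uri).scheme
    try:
        return PROVIDERS_BY_SCHEME[scheme]
    except KeyError:
        # Unknown schemes are rejected instead of silently falling back.
        raise ValueError(f"unsupported object store scheme: {scheme!r}") from None
```

With this shape, adding a provider means adding one table entry, which is the appeal of scheme-based dispatch over a separate registration call per provider type.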

@wseaton I thought the function signature would be similar to the Rust API. That being said, I'm not sure how closely the existing Python function signatures match their equivalents on the Rust side, so maybe this isn't a design goal of the Python bindings. I also think that it would be good to allow users to create

Yeah, the builder pattern doesn't really map well to Python. I'll try to do some research to see how it's done elsewhere.

MinIO and Ceph (which I need as well) should be supported by setting

Agree on the builder pattern not mapping as well to Python. I was focusing more on the signature for If I recall correctly, some of the read options and other configs that are used with the session context also use the builder pattern, and it's possible some of those are already exposed in our Python bindings somewhere. Maybe their implementation could be used as inspiration for how to approach creating the object store.
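One way to see why the builder pattern translates poorly: in Rust, the `object_store` crate configures S3 with chained calls such as `AmazonS3Builder::new().with_bucket_name(...).with_region(...).build()`, while idiomatic Python would use keyword arguments with defaults. A minimal sketch, assuming a hypothetical `S3Options` class (not part of the bindings) and assuming `AWS_REGION` and `AWS_ENDPOINT` as the environment variables to fall back on:

```python
import os
from dataclasses import dataclass
from typing import Mapping, Optional


@dataclass(frozen=True)
class S3Options:
    """Hypothetical keyword-argument config standing in for a Rust builder."""

    bucket_name: str
    region: Optional[str] = None
    endpoint: Optional[str] = None  # e.g. a MinIO or Ceph endpoint

    @classmethod
    def from_env(
        cls, bucket_name: str, env: Optional[Mapping[str, str]] = None
    ) -> "S3Options":
        """Fill optional fields from environment variables (assumed names)."""
        env = os.environ if env is None else env
        return cls(
            bucket_name=bucket_name,
            region=env.get("AWS_REGION"),
            endpoint=env.get("AWS_ENDPOINT"),
        )


# Keyword arguments replace the chained with_* calls of the Rust builder.
explicit = S3Options(bucket_name="my_bucket", region="us-west-2")
```

Optional keyword arguments cover what the builder's optional `with_*` calls do, and a `from_env` classmethod plays the role of the Rust `from_env` constructor.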


@wseaton have you seen https://github.com/roeap/object-store-python (cc: @roeap)? Looks like not all services are fully supported yet, but this project can be used to construct an

@turbo1912 oh wow! Thanks for the heads up. I had just refactored to remove the parse redundancy and externalize some of the config, and am catching up on this. It looks like

The new API from the last commit looks like this:

```python
from datafusion import SessionContext
from datafusion.store import AmazonS3

ctx = SessionContext()

s3 = AmazonS3(region="us-west-2", bucket_name="my_bucket", endpoint="https://myendpoint.url")

ctx.register_object_store("s3", "my_bucket", s3)
```

@matthewmturner is this closer to what you were thinking?
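A registration keyed by scheme and host, as in `ctx.register_object_store("s3", "my_bucket", s3)`, lets a later table path such as `s3://my_bucket/...` be matched back to the right store. The following is a hypothetical pure-Python illustration of that lookup; `ObjectStoreRegistry` is not part of DataFusion, and the real resolution happens on the Rust side.

```python
from urllib.parse import urlparse


class ObjectStoreRegistry:
    """Hypothetical registry keyed by (scheme, host), mirroring the arguments
    of ctx.register_object_store(scheme, host, store)."""

    def __init__(self) -> None:
        self._stores = {}

    def register(self, scheme: str, host: str, store: object) -> None:
        # A later registration for the same (scheme, host) replaces the earlier one.
        self._stores[(scheme, host)] = store

    def resolve(self, url: str) -> object:
        # "s3://my_bucket/data/file.parquet" -> ("s3", "my_bucket")
        parsed = urlparse(url)
        key = (parsed.scheme, parsed.netloc)
        if key not in self._stores:
            raise KeyError(f"no object store registered for {parsed.scheme}://{parsed.netloc}")
        return self._stores[key]
```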

@wseaton yes! Exactly what I had in mind. A nit would be to have it

@matthewmturner it'd be pretty easy to do Cargo features on our side, as each provider is gated behind an

Ah, that's unfortunate. I suppose the alternative would be a totally separate object_store module, but I'm not even sure that would solve it. Do you have an idea how large this makes the DataFusion Python package? Last I checked it was quite large because it was DataFusion + PyArrow (which is large), and now we're adding the full object_store crate.

Not sure how scientific this is (just output from
Before: Release: / Debug:
After: Release build: / Debug build:

I was referring more to the size after installing the wheel. For example, right now when I `pip install datafusion` it comes out to 141 MB (DataFusion itself is only 25 MB and the remainder is PyArrow / NumPy). So I'm curious how big DataFusion will be now with a full-featured object store bundled with it.

@matthewmturner honestly, I'm not quite sure how to emulate those numbers locally; when I build with So anywhere from 5-20% bigger would be my guess. Including object storage drivers seems like a good idea and is basically standard for other tools like Spark, which bundle them with their distributions with respect to Hadoop (which is in the hundreds of megabytes).
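To put the guess in terms of the 141 MB install mentioned earlier: if the 5-20% growth applies only to the 25 MB DataFusion portion (an assumption; the comment does not say which figure the percentage refers to), the arithmetic is:

```python
# Figures quoted in the thread (MB). The 5-20% growth is a guess, and this
# sketch assumes it applies to the DataFusion portion of the install only.
TOTAL_MB = 141
DATAFUSION_MB = 25


def estimated_install_mb(growth: float) -> float:
    """Total install size if only the DataFusion part grows by `growth`."""
    dependencies_mb = TOTAL_MB - DATAFUSION_MB  # PyArrow / NumPy, assumed unchanged
    return dependencies_mb + DATAFUSION_MB * (1 + growth)


low, high = estimated_install_mb(0.05), estimated_install_mb(0.20)
print(f"{low:.2f} MB to {high:.2f} MB")
```

Under that assumption the install would grow from 141 MB to roughly 142-146 MB, since most of the footprint comes from PyArrow and NumPy.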


In addition to unifying the API, I also made


Thank you, @wseaton! This is great. 🎉

@francis-du @andygrove is there a chance this can get in with the 13.0.0 release?

I think it's okay, but it depends on Andy.

@wseaton Hi Will, I think you have a

@francis-du should be good now; let me know how you want to handle the lock conflict.

I usually rebase locally onto the master branch.

I would love to release a new version of the Python bindings soon but could use some help, as I am not familiar with releasing Python packages. Here is the issue to track releasing the next version (0.7.0, because the version numbers for the Python bindings are independent of the DataFusion version).

@francis-du should be good now. Edit: rebasing again after #61

|

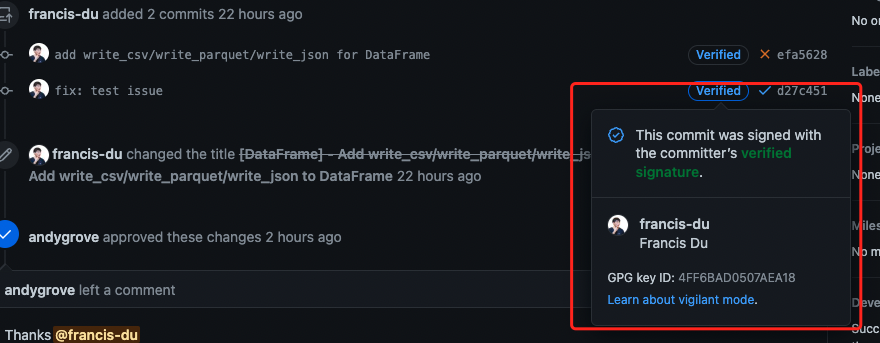

Hi Will. There is a good practice to share with you. If you don't like waiting for maintainers to approval your commit,you can try adding GPG validation. your commit will trigger the CI directly. Like this: |

@francis-du ah great, I didn't realize it was commit verification that disabled the CI. I'll be sure to set that up for future contributions.

This PR looks pretty straightforward, amazing work, @wseaton 🎊 On the question of testing, another relatively simple approach might be using

@andygrove done. I can also work on @isidentical's suggestions for testing the

Related to #22

With this function added I can now (with the proper environment set):

I'm currently unable to test the GCP integration and may need some help there.