
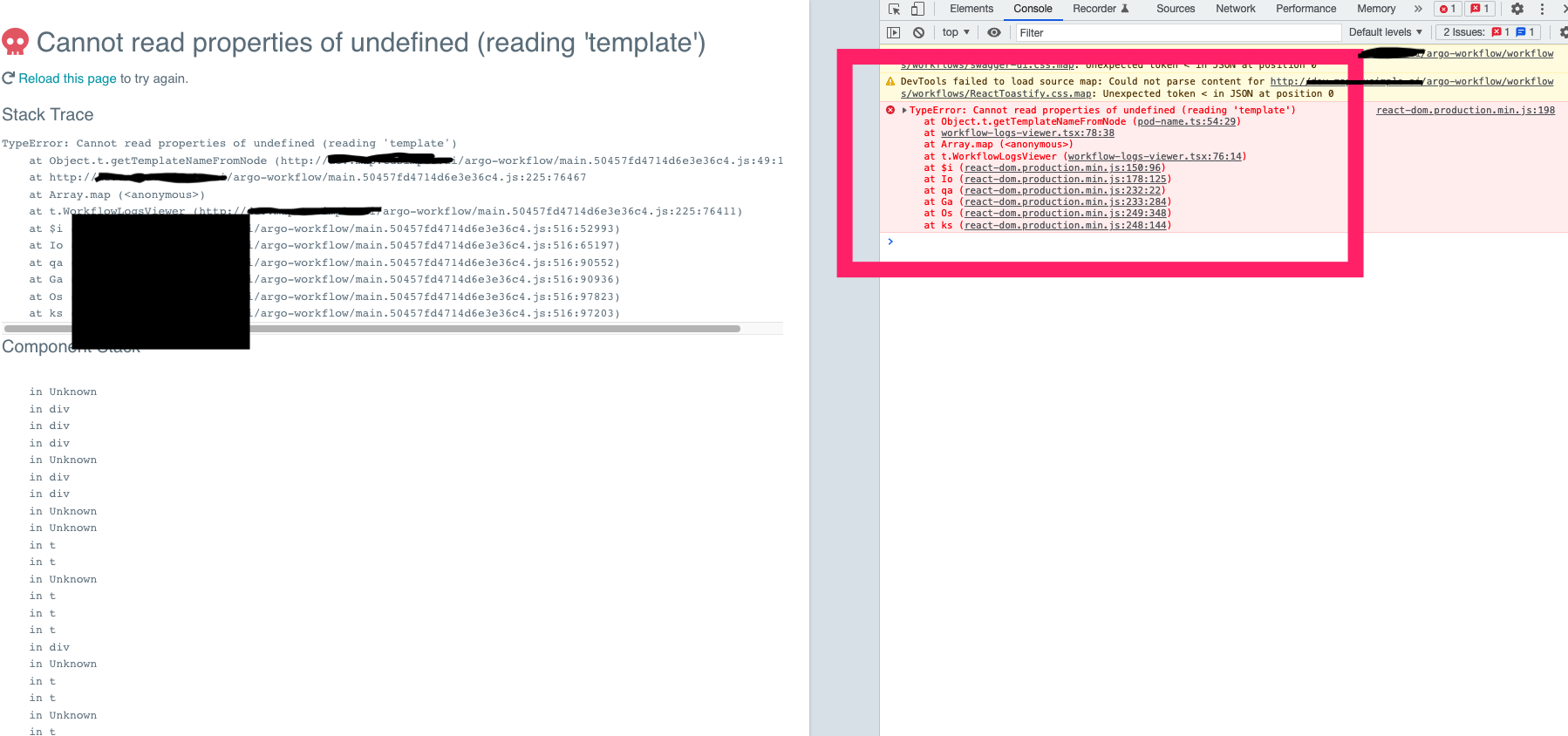

v3.2.6 Argo UI failed to render pod log #7595

Comments


@liuzqt Can you share the pod names, as they appear in Kubernetes, for the pods in this workflow that trigger the error in the UI? I'm working on a fix and need to make sure there's a 1:1 match between the pod names in k8s and what our UI's pod-name function returns. Thanks for reporting the issue!
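For context, here is a rough sketch of the two pod-naming schemes as I understand the controller's `POD_NAMES` setting in 3.2 (an assumption worth checking against the docs for your version): with `v1` the pod name is simply the node ID, while with `v2` it is `<workflow name>-<template name>-<FNV-1a 32-bit hash of the node name>`. The helper `pod_name` is hypothetical and is not the UI's actual function; the truncation the controller applies to over-long name prefixes is left out.

```python
def fnv32a(data: str) -> int:
    """32-bit FNV-1a hash of the UTF-8 bytes of `data`."""
    h = 0x811C9DC5  # FNV-1a 32-bit offset basis
    for byte in data.encode("utf-8"):
        h ^= byte
        h = (h * 0x01000193) & 0xFFFFFFFF  # multiply by the FNV prime, keep 32 bits
    return h


def pod_name(workflow_name: str, node_name: str, template_name: str,
             node_id: str, version: str = "v1") -> str:
    """Hypothetical helper: derive the Kubernetes pod name for a workflow node.

    Assumes v1 pod names equal the node ID and v2 pod names are
    '<workflow>-<template>-<fnv32a(node name)>'. Prefix truncation for
    long names is deliberately omitted from this sketch.
    """
    if version == "v2":
        if node_name == workflow_name:
            # The root node is named after the workflow itself.
            return workflow_name
        return f"{workflow_name}-{template_name}-{fnv32a(node_name)}"
    # v1: the pod name is the node ID.
    return node_id
```

If the UI computes a different template name than the controller used (for example, for a node that runs a `templateRef`), the `v2` result would no longer match the real pod, which is exactly the kind of 1:1 mismatch worth ruling out.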


@JPZ13 The pod UI isn't working, so I tried to find the information in the JSON metadata instead. Hopefully this helps:

```
"patch-processing-pipeline-ksp78-1623891970": {
  "id": "patch-processing-pipeline-ksp78-1623891970",
  "name": "patch-processing-pipeline-ksp78.retriable-map-authoring-initializer",
  "displayName": "retriable-map-authoring-initializer",
  "type": "Pod",
  "templateScope": "local/",
  "phase": "Succeeded",
  "boundaryID": "patch-processing-pipeline-ksp78",
  "startedAt": "2022-01-20T01:45:35Z",
  "finishedAt": "2022-01-20T01:45:39Z",
  "progress": "1/1",
  "resourcesDuration": {
    "cpu": 3,
    "memory": 3
  },
  # ...
"patch-processing-pipeline-ksp78-1769534216": {
  "id": "patch-processing-pipeline-ksp78-1769534216",
  "name": "patch-processing-pipeline-ksp78.NODE-RETRIABLE-MAP-AUTHORING(1).NODE-GENERATE-EMLANE.pre-emlane-map-builder",
  "displayName": "pre-emlane-map-builder",
  "type": "Pod",
  "templateRef": {
    "name": "map-builder-task",
    "template": "map-builder"
  },
  "templateScope": "namespaced/node-generate-emlane-task",
  "phase": "Failed",
  "boundaryID": "patch-processing-pipeline-ksp78-242024017",
  "message": "Error (exit code 1)",
  "startedAt": "2022-01-20T01:53:05Z",
  "finishedAt": "2022-01-20T01:53:08Z",
  "progress": "1/1",
  "resourcesDuration": {
    "cpu": 4,
    "memory": 4
  },
  # ...
```
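To line these statuses up with pods, only nodes with `"type": "Pod"` matter. The sketch below (the function name `pod_nodes` is hypothetical) filters them out of a workflow's node map and records which template each one ran, taking `templateRef.template` when present and otherwise a `templateName` field. Falling back to `templateName` is an assumption, since the excerpt above is truncated and shows neither field for the first node.

```python
from typing import Any, Dict, List, Optional, Tuple


def pod_nodes(nodes: Dict[str, Dict[str, Any]]) -> List[Tuple[str, str, Optional[str]]]:
    """Return (node id, node name, template name) for every Pod-type node.

    The template name comes from templateRef.template if present, otherwise
    from templateName (assumed field); it is None when neither is present.
    """
    result: List[Tuple[str, str, Optional[str]]] = []
    for key, node in nodes.items():
        if node.get("type") != "Pod":
            continue  # skip Steps/DAG/Retry and other non-pod nodes
        ref = node.get("templateRef") or {}
        template = ref.get("template") or node.get("templateName")
        result.append((node.get("id", key), node["name"], template))
    return sorted(result)  # stable ordering by node ID
```

Run against the two nodes above, the first would come back with no template name and the second with `map-builder` from its `templateRef`, which makes it easy to see which nodes depend on `templateRef` for naming.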


That is super helpful, @liuzqt. Thank you for the extra info. I'll tag you on the PR for the fix once it's ready.

Signed-off-by: J.P. Zivalich <jp@pipekit.io>


@JPZ13 Sorry, I'm actually just an Argo user at our company, so I'll have to ask our Argo team to help verify this fix later. I'll post an update once I get their feedback.


Thanks @terrytangyuan. I'm looking for the mapping from the NodeStatus JSON metadata that @liuzqt provided above to the pod names as they appear in the Kubernetes cluster. If they can find a minimal reproducible example, that would be super helpful too.
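One way to produce that mapping is to join the workflow's `status.nodes` (e.g. from `kubectl get workflow <name> -o json`) with the pods listed by `kubectl get pods -l workflows.argoproj.io/workflow=<name> -o json`. The sketch below assumes each workflow pod carries a `workflows.argoproj.io/node-name` annotation holding its node name; that key, and the helper names `pods_by_node_name` and `compare`, are assumptions for illustration.

```python
from typing import Any, Dict, Optional, Tuple

# Assumed annotation key that ties a pod back to its workflow node.
NODE_NAME_ANNOTATION = "workflows.argoproj.io/node-name"


def pods_by_node_name(pod_list: Dict[str, Any]) -> Dict[str, str]:
    """Map each workflow node name to the name of the pod that ran it."""
    mapping: Dict[str, str] = {}
    for pod in pod_list.get("items", []):
        meta = pod.get("metadata", {})
        node_name = (meta.get("annotations") or {}).get(NODE_NAME_ANNOTATION)
        if node_name:
            mapping[node_name] = meta["name"]
    return mapping


def compare(nodes: Dict[str, Dict[str, Any]],
            pod_list: Dict[str, Any]) -> Dict[str, Tuple[str, Optional[str]]]:
    """Return {node id: (node name, actual pod name or None)} for Pod nodes."""
    pods = pods_by_node_name(pod_list)
    return {
        node_id: (node["name"], pods.get(node["name"]))
        for node_id, node in nodes.items()
        if node.get("type") == "Pod"
    }
```

Any entry whose actual pod name differs from its node ID, or comes back as `None` (no matching pod, e.g. already garbage-collected), points at a node the UI might resolve to the wrong pod.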

Summary

What happened/what you expected to happen?

What version of Argo Workflows are you running?

3.2.6 (we upgraded from 3.2.3 today, so I suspect the issue was introduced between 3.2.3 and 3.2.6; we'll try rolling back to 3.2.3 to see whether it still occurs)

Diagnostics

I still haven't been able to find a minimal reproducible example: the issue only happens in some of our pipelines, and I'm not sure which part of the template triggers it.

Message from the maintainers:

Impacted by this bug? Give it a 👍. We prioritise the issues with the most 👍.
