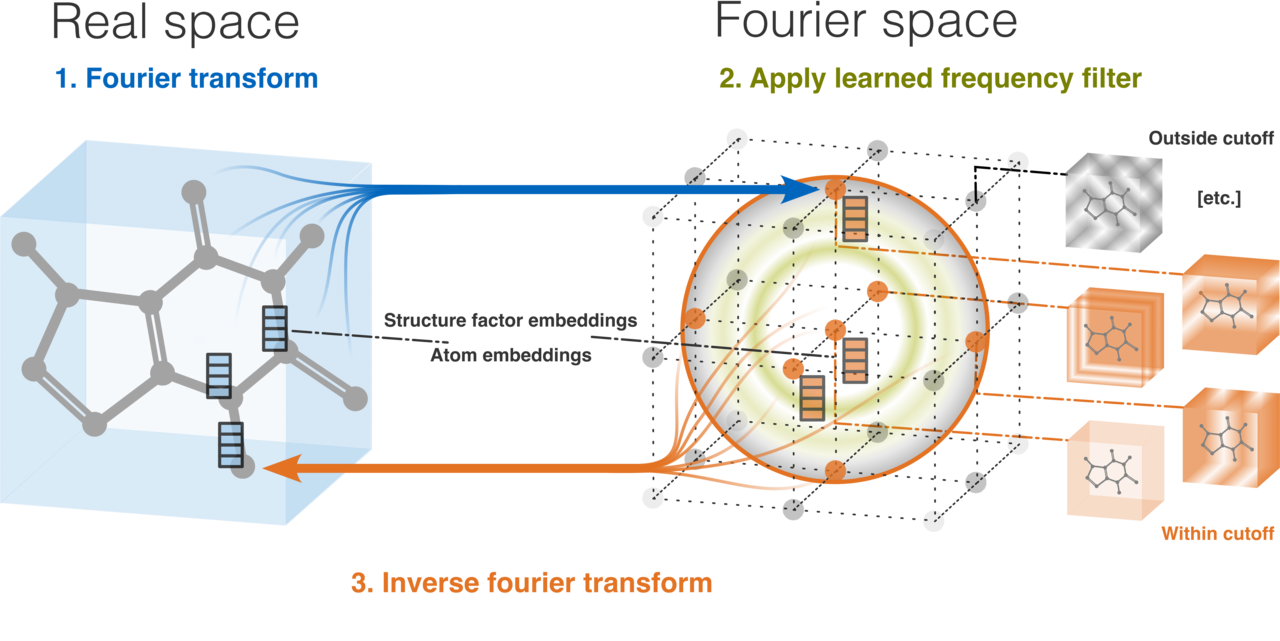

Reference implementation of the Ewald message passing scheme proposed in the paper

Ewald-based Long-Range Message Passing for Molecular Graphs

by Arthur Kosmala, Johannes Gasteiger, Nicholas Gao, Stephan Günnemann

Accepted at ICML 2023

Models for which Ewald message passing is currently implemented:

Currently supported datasets:

- OC20

- OE62

This repository was forked from the Open Catalyst 2020 (OC20) Project, which provides the codebase for training and inference on OC20. We additionally integrated the OE62 dataset into the OC20 pipeline.

To set up a conda environment with the required dependencies, please follow the OCP installation instructions; they should work identically for this repository. We further recommend installing the jupyter package to access our example training and evaluation notebooks, as well as the seml package (available on GitHub) to run and manage experiments from the CLI, which is especially useful for longer runs. To reproduce the long-range binning analyses from the Ewald message passing paper, please also install the simple-dftd3 package, including its Python API (see the simple-dftd3 installation instructions).
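As a quick sanity check after installation, the optional packages mentioned above can be probed with a minimal Python sketch like the one below. It only checks whether each package's top-level module can be found, not its version. The import names `jupyter` (provided by jupyter_core), `seml`, and `dftd3` (assumed to be the module name of the simple-dftd3 Python API) are assumptions and are not defined by this repository.

```python
import importlib.util
from typing import Dict, Mapping

# Assumed mapping from package name (as mentioned above) to its top-level
# import name. These import names are assumptions, not taken from this repository.
OPTIONAL_PACKAGES: Dict[str, str] = {
    "jupyter": "jupyter",
    "seml": "seml",
    "simple-dftd3 (Python API)": "dftd3",
}


def check_importable(packages: Mapping[str, str] = OPTIONAL_PACKAGES) -> Dict[str, bool]:
    """Return, for each package, whether its top-level module can be found.

    importlib.util.find_spec locates a module without importing it. Only
    top-level module names are passed, so a missing package yields None
    instead of raising ModuleNotFoundError.
    """
    return {
        package: importlib.util.find_spec(module) is not None
        for package, module in packages.items()
    }


if __name__ == "__main__":
    for package, available in check_importable().items():
        status = "found" if available else "missing"
        print(f"{package:28s} {status}")
```

A package reported as missing only means its module is not importable from the active environment; check that the conda environment from the OCP instructions is activated before reinstalling.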

Dataset download links and instructions for OC20 are in DATASET.md in the original OC20 repository.

To replicate our experiments on OC20, please download the following data splits for the Structure to Energy and Forces (S2EF) task:

- train_2M

- val_id

- val_ood_ads

- val_ood_cat

- val_ood_both

- test

To replicate our experiments on OE62, please download the raw OE62 dataset from its media server. Afterwards, run the OE62_dataset_preprocessing.ipynb notebook, which writes LMDB files containing the training, validation and test splits to a new oe62 directory.

For an interactive introduction, consider using our notebook train_and_evaluate.ipynb, which supports training and evaluation of all studied model variants (baselines, Ewald versions, comparison studies) on OE62 and OC20. We also provide the notebook evaluate_from_checkpoint.ipynb to evaluate previously trained models.

Approximate OE62 and OC20 training times for all model variants are provided as guidance in the training notebook. We recommend notebook-based training only for OE62: on OC20, even our cheapest models take over a day to train on a single NVIDIA A100 GPU.

Alternatively, experiments can be started from the CLI using

```bash
seml [your_experiment_name] add configs_[oe62/oc20]/[model_variant].yml start
```

For example, to train the SchNet model variant with added Ewald message passing on OE62, run

```bash
seml schnet_oe62_ewald add configs_oe62/schnet_oe62_ewald.yml start
```

Experiments beyond our studied variants can easily be defined by adding or modifying config YAML files. Once an experiment is started, a log file identified by the associated SLURM job ID is generated in logs_oe62 or logs_oc20. Among other information, it contains the path to the model checkpoint file, as well as the path to a TensorBoard file that can be used to track training progress. TensorBoard files can be found in the logs directory.
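To launch several variants from a script, the CLI pattern above can be wrapped in a small Python helper. This is a minimal sketch, assuming it is run from the repository root (so that `configs_oe62/` and `configs_oc20/` resolve) and that `seml` is on the `PATH`. The function names `seml_start_command` and `launch` are hypothetical and not part of this repository. By default it only prints the command (dry run).

```python
import shlex
import subprocess
from pathlib import Path
from typing import List, Optional

# Dataset suffixes used by the config directories configs_oe62/ and configs_oc20/.
DATASETS = ("oe62", "oc20")


def seml_start_command(experiment_name: str, dataset: str, model_variant: str) -> List[str]:
    """Build the argument list for
    `seml <experiment_name> add configs_<dataset>/<model_variant>.yml start`.
    """
    if dataset not in DATASETS:
        raise ValueError(f"dataset must be one of {DATASETS}, got {dataset!r}")
    # Forward slashes match the config paths used in the CLI commands.
    config_path = f"configs_{dataset}/{model_variant}.yml"
    return ["seml", experiment_name, "add", config_path, "start"]


def launch(experiment_name: str, dataset: str, model_variant: str,
           dry_run: bool = True) -> Optional[subprocess.CompletedProcess]:
    """Print the seml command (dry run) or execute it."""
    cmd = seml_start_command(experiment_name, dataset, model_variant)
    if dry_run:
        print(shlex.join(cmd))
        return None
    # Fail early with a clear message if the config file does not exist
    # relative to the current working directory.
    if not Path(cmd[3]).is_file():
        raise FileNotFoundError(f"config file not found: {cmd[3]}")
    # check=True raises CalledProcessError if seml exits with a non-zero status.
    return subprocess.run(cmd, check=True)


if __name__ == "__main__":
    # Dry run of the SchNet + Ewald example on OE62.
    launch("schnet_oe62_ewald", "oe62", "schnet_oe62_ewald")
```

Running the file as a script prints `seml schnet_oe62_ewald add configs_oe62/schnet_oe62_ewald.yml start`; passing `dry_run=False` executes it. Keeping the command as an argument list rather than a single shell string avoids quoting problems with unusual experiment names.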

Please reach out to arthur.kosmala@tum.de if you have any questions.

Please cite our paper if you use our method or code in your own work:

```bibtex
@inproceedings{kosmala_ewaldbased_2023,
  title = {Ewald-based Long-Range Message Passing for Molecular Graphs},
  author = {Kosmala, Arthur and Gasteiger, Johannes and Gao, Nicholas and G{\"u}nnemann, Stephan},
  booktitle = {International Conference on Machine Learning (ICML)},
  year = {2023}
}
```

This project is released under the Hippocratic License 3.0. This license applies only to the files added to the OC20 repository, which itself is released under the MIT license.