A library for controlling the mouse and keyboard with head movements and facial gestures. This project is based on the Google MediaPipe library (https://google.github.io/mediapipe/).

Tested with Python 3.10 and PySide6.4.3.

- Clone repository

- Create a subfolder: `mkdir venv`

- Create virtual environment: `python -m venv venv`

- Activate virtual environment: `venv\Scripts\activate.bat` (Linux: `. venv/bin/activate`)

- Install packages: `pip install -r requirements.txt`

Run `python gui.py` to start the GUI (Linux: `sudo ./venv/bin/python3.10 gui.py`). Press `Alt+1` to toggle whether the mouse is controlled by Python or by the system. Press `Esc` to quit the program (useful if you lose control over the mouse).

Alternatively, you can create an executable distribution and run `dist\gui.exe` (Windows) or `dist/gui` (Linux).

To create a distribution folder which includes all necessary .dll files and an executable, you can use PyInstaller (https://pyinstaller.org). Instructions:

- Follow the installation instructions

- Activate virtual environment: `venv/Scripts/activate` on Windows and `source venv/bin/activate` on Linux

- Install PyInstaller: `pip install pyinstaller`

- Execute the build process with

  `pyinstaller gui.py -D --add-data config;config --add-data data;data --collect-all mediapipe` on Windows

  `pyinstaller gui.py -D --add-data config:config --add-data data:data --collect-all mediapipe` on Linux
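The two build commands differ only in the separator inside `--add-data` (`;` on Windows, `:` on Linux), which matches Python's `os.pathsep`. The following is a minimal sketch, not part of this repository, of how the argument list could be assembled in a platform-independent way. The helper name `build_pyinstaller_args` and its parameters are hypothetical.

```python
import os


def build_pyinstaller_args(script="gui.py", data_dirs=("config", "data"), sep=os.pathsep):
    """Assemble the PyInstaller command line shown above (hypothetical helper).

    `sep` defaults to os.pathsep, which is ';' on Windows and ':' on Linux.
    """
    args = ["pyinstaller", script, "-D"]
    for folder in data_dirs:
        # Each data folder is copied into the distribution under the same name.
        args += ["--add-data", f"{folder}{sep}{folder}"]
    args += ["--collect-all", "mediapipe"]
    return args


if __name__ == "__main__":
    # Print the command for the current platform; pass the list to
    # subprocess.run(...) inside the activated virtual environment to build.
    print(" ".join(build_pyinstaller_args()))
```

Building the command as a list avoids shell quoting issues with the `;` separator if it is later handed to `subprocess.run`.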

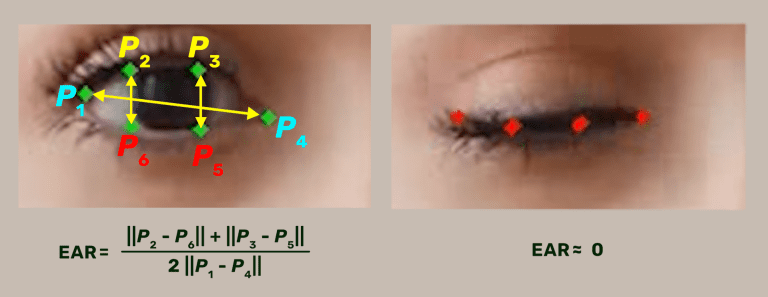

The gesture calculation (e.g. eye-blink) uses the mediapipe facial landmark detection in combination with a modified eye aspect ratio (EAR) algorithm. The EAR algorithm helps to make the gesture invariant to head movements or rotations.

- See the function `SignalsCalculator.eye_aspect_ratio`.

- The idea is based on the article about eye aspect algorithm for driver drowsiness detection.
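To illustrate the idea, here is a minimal sketch of the classic eye aspect ratio as it is commonly described for drowsiness detection. It is not the modified variant implemented in `SignalsCalculator.eye_aspect_ratio`. The six-landmark ordering, the sample coordinates, and the threshold value are assumptions made for illustration only.

```python
import math


def eye_aspect_ratio(eye):
    """Classic EAR for six (x, y) landmarks ordered p1..p6.

    Assumed ordering: p1 and p4 are the horizontal eye corners,
    (p2, p6) and (p3, p5) are the two vertical landmark pairs.
    """
    p1, p2, p3, p4, p5, p6 = eye
    vertical = math.dist(p2, p6) + math.dist(p3, p5)
    horizontal = math.dist(p1, p4)
    return vertical / (2.0 * horizontal)


def rotate(points, angle_rad):
    """Rotate 2D points around the origin (simulates an in-plane head tilt)."""
    c, s = math.cos(angle_rad), math.sin(angle_rad)
    return [(c * x - s * y, s * x + c * y) for x, y in points]


# Hypothetical landmark coordinates, not real MediaPipe output.
open_eye = [(0, 0), (1, 1), (2, 1), (3, 0), (2, -1), (1, -1)]
closed_eye = [(0, 0), (1, 0.1), (2, 0.1), (3, 0), (2, -0.1), (1, -0.1)]

BLINK_THRESHOLD = 0.2  # hypothetical value; would need tuning per user and camera

if __name__ == "__main__":
    for name, eye in (("open", open_eye), ("closed", closed_eye)):
        ear = eye_aspect_ratio(eye)
        tilted = eye_aspect_ratio(rotate(eye, math.radians(30)))
        print(name, round(ear, 3), round(tilted, 3), ear < BLINK_THRESHOLD)
```

Because the value is a ratio of distances, scaling the landmarks (face closer to or farther from the camera) or rotating them in the image plane leaves it unchanged, which is what makes a threshold on it usable for blink detection.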

The work on GestureMouse was carried out at the UAS Technikum Wien in the course of the R&D projects WBT (MA23 project 26-02) and Inclusion International (MA23 project 33-02), which were supported by the City of Vienna.

Have a look at the AsTeRICS Foundation homepage and our other Open Source AT projects:

- AsTeRICS: AsTeRICS framework homepage, AsTeRICS framework GitHub: The AsTeRICS framework provides much greater flexibility for building assistive solutions. The FLipMouse is also AsTeRICS compatible, so it is possible to use the raw input data for a different assistive solution.

- FABI: Flexible Assistive Button Interface GitHub: The Flexible Assistive Button Interface (FABI) provides basically the same control methods (mouse, clicking, keyboard, ...), but the input is limited to simple buttons. As a result, this interface is very inexpensive (if you buy the Arduino Pro Micro from China, it can cost under $5).

- FLipMouse: The FLipMouse controller: a highly sensitive finger/lip controller for computers and mobile devices with minimal muscle movement.

- FLipPad: The FLipPad controller: a flexible touchpad for controlling computers and mobile devices with minimal muscle movement.

- AsTeRICS Grid: Asterics Grid AAC Web-App: an open source, cross-platform communicator / talker for Augmentative and Alternative Communication (AAC).

Please support the development by donating to the AsTeRICS Foundation.