Add ability to limit bandwidth for S3 uploads/downloads #1090

Comments

Hello jamesis,

👍

👍

👍

👍

👍

👍

👍

👍

On Unix-like systems, trickle comes in handy for ad-hoc throttling. You can run commands something like
A built-in feature would be really useful, but given the cross-platform nature of AWS-CLI, it could cost a lot to implement and maintain.

Trickle is specifically mentioned in issue #1078, which is linked in the first comment here. In my experience, the two (trickle and AWS-CLI) just don't play nicely together.

👍

👍

+1

+1

👍

+1

+1

👍

(Y)

👍

👍 this is much needed!

👍

👍

Over two years in, and this request is still outstanding. Is there a timeframe by which this could be implemented?

👍

👍

👍

👍🏿

👍

Just nuked the internet in a shared office.

👍

you can use

👍

Trickle and large S3 files will cause trickle to crash.

(y)

Sorry, to be more precise: trickle crashes on large S3 files when boto3 runs 10 concurrent uploads (the default setting). Changing the number of concurrent uploads resolves the issue. I need to report this on boto3's GitHub, thanks!
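
To make "changing the concurrent uploads" concrete, here is a minimal sketch of capping how many transfers are in flight at once. It assumes a hypothetical `upload_part` callable standing in for whatever actually sends the data; `upload_all` and `max_concurrency` are illustrative names only and are not boto3's API.

```python
from concurrent.futures import ThreadPoolExecutor


def upload_all(parts, upload_part, max_concurrency=10):
    """Call upload_part(part) for every part, with a bounded number in flight.

    upload_part is a hypothetical stand-in for the real transfer call.
    The default of 10 mirrors the default mentioned in the comment above;
    lowering it reduces the number of simultaneous connections.
    """
    if max_concurrency < 1:
        raise ValueError("max_concurrency must be at least 1")
    # The pool never runs more than max_concurrency workers at a time.
    with ThreadPoolExecutor(max_workers=max_concurrency) as pool:
        # map() preserves the order of parts in the returned results.
        return list(pool.map(upload_part, parts))
```

Calling `upload_all(parts, upload_part, max_concurrency=1)` would serialize the transfers completely, which trades throughput for a single connection that an external throttler may handle more easily.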

👍 So it's been over 2.5 years since this was opened. Is this request just being ignored?

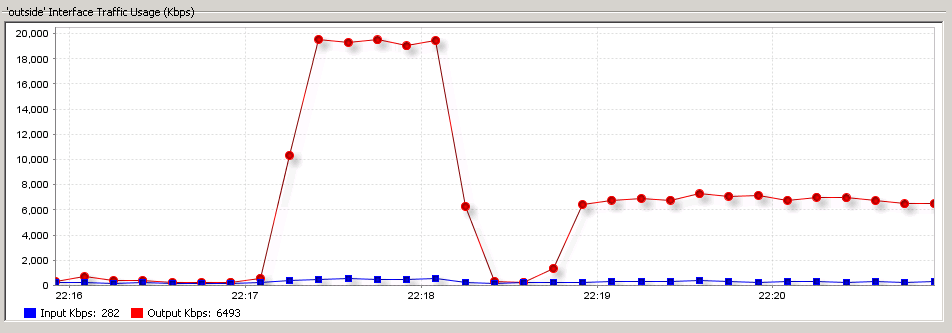

We use pv (https://linux.die.net/man/1/pv) in this manner:
This solution is not ideal (it requires additional support for filtering and recursion, which we handle inside a bash loop), but it is much better than trickle, which in our case uses 100% of CPU and behaves very unstably.
Here is our full use case for pv (we limit upload speed to 20 MB/s == 160 Mbit/s):
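
For readers who want to see the idea behind this kind of pipe throttling, here is a rough Python sketch. It is not pv's implementation and not part of the AWS CLI or boto3: `ThrottledReader` and `copy_throttled` are hypothetical names, and the rate is assumed to be in decimal units, so 20 MB/s is 20,000,000 bytes/s, which times 8 bits gives the 160 Mbit/s quoted above.

```python
import time


class ThrottledReader:
    """Wrap a binary file-like object and cap its average read rate."""

    def __init__(self, raw, bytes_per_second, clock=time.monotonic, sleep=time.sleep):
        if bytes_per_second <= 0:
            raise ValueError("bytes_per_second must be positive")
        self._raw = raw
        self._rate = bytes_per_second
        self._clock = clock  # injectable so the pacing can be tested
        self._sleep = sleep
        self._start = None   # set on the first read
        self._sent = 0       # bytes handed out so far

    def read(self, size=-1):
        if self._start is None:
            self._start = self._clock()
        # Earliest moment at which the bytes already handed out fit the budget.
        due = self._start + self._sent / self._rate
        delay = due - self._clock()
        if delay > 0:
            self._sleep(delay)
        chunk = self._raw.read(size)
        self._sent += len(chunk)
        return chunk


def copy_throttled(src, dst, bytes_per_second, chunk_size=64 * 1024,
                   clock=time.monotonic, sleep=time.sleep):
    """Copy src to dst no faster than bytes_per_second on average."""
    reader = ThrottledReader(src, bytes_per_second, clock=clock, sleep=sleep)
    total = 0
    while True:
        chunk = reader.read(chunk_size)
        if not chunk:
            break
        dst.write(chunk)
        total += len(chunk)
    return total


# 20 MB/s in decimal units; multiplied by 8 bits this is 160 Mbit/s.
RATE_20_MB_PER_S = 20 * 1000 * 1000
```

Copying from a file to `sys.stdout.buffer` with `copy_throttled(src, sys.stdout.buffer, RATE_20_MB_PER_S)` and piping the result into an upload command would approximate the pipe-based approach described here. Like that approach, it throttles one stream at a time, so filtering and recursion still have to be handled by the caller.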

+1 Real life use case: Very large upload to S3 over DX, do not want to saturate the link and potentially impact production applications using the DX link.

@ischoonover seems that you don't pass

@tantra35 Size was 1GB. I ended up using s3cmd, which has rate limiting built in with

Implemented in #2997.

Original from #1078, this is a feature request to add the ability for the `aws s3` commands to limit the amount of bandwidth used for uploads and downloads.

In the referenced issue, it was specifically mentioned that some ISPs charge fees if you go above a specific Mbps, so users need the ability to limit bandwidth.

I imagine this is something we'd only need to add to the `aws s3` commands.