GPU picking #6991


First off, this is awesome! I've been hoping for this for quite a while 🙂. I have a ton of opinions, because I've been working on

My main suggestion would be to take a step back from the user facing API, and instead just expose the CPU side entity index buffer. The picking example could add the shim code needed to look up the entity at the cursor position, but I'd suggest not baking it into the user facing API simply because of how much discussion needs to happen around how that behaves, which could quickly derail this PR. We could merge as-is, but the API would break significantly.

My preference would be for us to just steal the architecture I've written here: https://github.com/aevyrie/bevy_mod_picking/tree/beta to give users maximum flexibility (in another PR). I'm obviously biased, but I've spent hundreds of hours on this and have worked through quite a bit of review feedback on that architecture alone.

Edit: concretely, I'd suggest removing these:

Some added questions:


@aevyrie Thanks a lot for the feedback!

I agree this is likely safer (in terms of not breaking APIs which will definitely not be correct on first iterations). I'll remove the user facing APIs.

Then I'll do this.

I should read your code next and the review you linked.

Currently a buffer and textures are tied to a camera. On the other hand, multiple cameras within a window like for splitscreen is problematic mainly due to not handling viewports, but that's just a TODO not an architectural issue.

Yes- I edited the original post to add a video of picking the animated fox example.

Since buffers and textures are only added to cameras if they have the picking component, there should be negligible (practically zero) overhead. Note that there is some work to do here since I have to do some pipeline specialization and such to make this true, since currently I have hardcoded that the pipelines expect picking.


The entire thing is built around the goal of making picking backends simple and composable. The API for writing a backend is dead simple: given a list of pointer locations (

A shader picking backend looking up coordinates in a buffer would probably be <150 LOC. The only complication would be depth, though we might be able to use the depth prepass to get depth data at that position, unless you can build that into the pipeline? Alternatively, the backend could just set the depth to a constant but configurable value for all entities, so you could composite the results from other backends above or below that depth. For example, the current egui backend reports all depths at -1.0, to ensure those intersections are always on top of the results from other backends.

That sounds right to me. When trying to make picking work across viewports and even render-to-texture, I found that using

Continuing from the previous question, if each camera had a picking buffer as a component, this would make things pretty straightforward. The backend would look at the render target of the pointer, find the camera with the matching render target, then look up the pointer position in that buffer.
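The final step described above, looking up the pointer position in a camera's picking buffer, could be sketched roughly as follows. This is not the PR's code: the `PickingBuffer` type, the one-`u32`-per-texel layout, and the `NO_ENTITY` sentinel are all assumptions made for illustration. The one grounded detail is that wgpu requires rows in a texture-to-buffer copy to be padded to a multiple of 256 bytes, so the CPU-side lookup has to use the padded stride rather than `width * 4`.

```rust
/// Hypothetical CPU-side copy of one camera's picking texture.
/// Assumes one little-endian `u32` entity index per texel and `u32::MAX`
/// meaning "no entity"; neither detail is confirmed by the PR.
pub struct PickingBuffer {
    pub width: u32,
    pub height: u32,
    /// Bytes per row including copy padding.
    pub padded_bytes_per_row: u32,
    pub data: Vec<u8>,
}

/// Assumed sentinel for texels that no entity was rendered to.
pub const NO_ENTITY: u32 = u32::MAX;

/// Row alignment wgpu requires for texture-to-buffer copies.
const COPY_ROW_ALIGNMENT: u32 = 256;

/// Row stride in bytes for a texture `width` texels wide (4 bytes per texel).
pub fn padded_bytes_per_row(width: u32) -> u32 {
    let unpadded = width * 4;
    (unpadded + COPY_ROW_ALIGNMENT - 1) / COPY_ROW_ALIGNMENT * COPY_ROW_ALIGNMENT
}

impl PickingBuffer {
    /// Returns the entity index stored at physical pixel `(x, y)`, if any.
    pub fn entity_index_at(&self, x: u32, y: u32) -> Option<u32> {
        if x >= self.width || y >= self.height {
            return None;
        }
        let offset = y as usize * self.padded_bytes_per_row as usize + x as usize * 4;
        let bytes: [u8; 4] = self.data.get(offset..offset + 4)?.try_into().ok()?;
        let index = u32::from_le_bytes(bytes);
        (index != NO_ENTITY).then_some(index)
    }
}
```

A backend would first select the buffer whose camera renders to the pointer's render target, then call `entity_index_at` with the pointer position in physical pixels.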

Worked on this today. Please see the new video, depth (from the GPU's perspective) can now be directly queried (side note: Only works for MSAA == 1 right now).

I will put this as a TODO. This seems like the correct way to me, since it will allow also picking from

Also, I got rid of events. Please check out the new API example. When I get around to fixing this PR such that

This seems like a sensible limitation for the entity buffer itself, I think it needs to be left aliased. I assume your intent is that the picking pipeline just won't specialize on the MSAA key.

The backends all use raycasting or simple 2d hit testing, and return depth as the world-space distance from the camera. It looks like in your example you might be using the nonlinear (infinite reverse-z) GPU depth. I think world space depth might be more useful when reporting depth from a method. Internally though, it makes sense for the buffer itself to store depth in GPU-native format.
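To make the depth distinction concrete, here is a minimal sketch of converting a GPU depth value into a linear distance, assuming an infinite reverse-z perspective projection with near plane `near`. Under that projection the stored depth is `near / view_z`, so the inverse is a single division. The function name is hypothetical, and the result is the distance along the camera's forward axis, which is not quite the same as the Euclidean distance a raycasting backend would report.

```rust
/// Converts a depth-buffer value written by an infinite reverse-z
/// perspective projection into the view-space distance along the
/// camera's forward axis.
///
/// With that projection, `depth = near / view_z`, hence `view_z = near / depth`.
/// A depth of 0.0 corresponds to "infinitely far" (nothing was drawn),
/// so it is reported as `None`.
pub fn view_z_from_reverse_z_depth(depth: f32, near: f32) -> Option<f32> {
    if depth > 0.0 && depth.is_finite() {
        Some(near / depth)
    } else {
        None
    }
}
```

A fragment exactly on the near plane has depth 1.0 and maps back to `near`; smaller depth values map to larger distances.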

Makes sense to me!

It might be helpful to rename the

That sounds fantastic! I have a bunch of examples I use for testing, we could use that to see if everything is working as expected. 🙂


I agree there shouldn't be a need for MSAA for picking. Also, I notice the 3d transparency pass does not write depth info. But I think picking a semi-opaque object should be possible. So there is a mismatch there- I added an image to the OP to show what I mean. Also there is the depth prepass PR which I haven't looked at closely. Perhaps that clears things up. Perhaps there is no need for depth related changes in this PR after that.

I agree, I'll purge

I'm a bit closer to being ready to try it on your plugin now. EDIT: I forked

Signed-off-by: Torstein Grindvik <torstein.grindvik@nordicsemi.no>


…round. Pro: Picking not only on mouse movement Signed-off-by: Torstein Grindvik <torstein.grindvik@nordicsemi.no>



I can make a mod_picking branch that uses this PR. Ping me on discord if you don't hear back within a day or two.

Just wondering if you still plan to work on this @torsteingrindvik or @aevyrie : )

I stopped because I wasn't entirely sure if this would be accepted into Bevy (if polished and cleaned up etc).

I'm happy to help review, I would love to see this merged.

GPU picking is very useful. I think it's absolutely necessary in order to support mesh animations. I'd also be happy to give a review.

@torsteingrindvik @aevyrie Do you two think it would be possible to get this in for the next release? At least to the point where a plugin like aevyrie's bevy_mod_picking could use it as a backend? This seems like the last massive hurdle to get over in order to make animations fully functional. Awesome work btw! :)

I briefly tried rebasing this PR and it seems a lot has changed in Bevy. In the meantime I'm also happy if either

If not I might try rebasing again, or I may recreate the PR on main to get back into it properly. I'm not sure exactly when the next release is (sometime in June?) so I can't promise anything from my side.

We (foresight) could sponsor @IceSentry to work on this ticket part-time at work if you're okay with him taking it over the finish line. I believe this PR predates the depth prepass which IceSentry wrote, so he might be well suited to help out.

Sure, if they (@IceSentry) would like that this sounds fine by me.

@torsteingrindvik Like @aevyrie mentioned, I'm happy to either help you or take it over. Considering the age of the PR and how much rendering stuff has changed since then it might be easier to just start over. Let me know what you prefer.

My gut feeling is that if I were to pick it up myself I'd be happier starting from scratch. EDIT: So if you want to pick it up then likely that's a less painful path for you too 🙂

Yep, that's my plan. I'll open a draft PR sometime next week and add you as a co-author.

Awesome, thanks @IceSentry. @torsteingrindvik thanks for all the work you did here, we'll be sure to properly credit you in the new branch.

Superseded via #8784 so closing.

gpu_picking.mp4

Meshes, UI elements, sprites

many-sprites.mp4

In the many_sprites example, sprite transparency works (i.e. the picking only happens within the visible part of the sprite even though it is rendered on top of a rectangle).

picking_depth.mp4

Skinned meshes, depth queries, see top right text

Make 2d/3d objects and UI nodes pickable!

Try it via cargo r --example picking or (temporarily) cargo r --example animated_fox.

Opening this as a draft because there's still lots to do and I'm not sure GPU based picking will be accepted.

So I'd like to know if this is something we might merge if it's in a better state, if so I can keep working on it.

What it does for end users

Users may iterate over EventReader<PickedEvent>. These events provide an Entity. Additionally, either Picked or Unpicked.

The events fire when the cursor moves over an entity (cached, i.e. events only sent when the picked/unpicked status changes).

Users may add a component Picking to cameras.

This component then allows querying for Option<Entity> and depth (f32) at any coordinate.

How it works summary

- An app world system iterates over cursor events, does a lookup into the buffer to see if an entity exists
- Send relevant events to end users.
- PreUpdate maps the buffers, allowing users to access them CPU side
- PostUpdate unmaps, allowing future GPU copies

API example

A full example is shown in examples/apps/picking.rs.

Remaining work

If this approach gets a "green light" at least this list should be tackled:

- Viewports support
- Unpick when cursor moves outside of window? No longer event based
- Touch events support. No longer event based
- Render nodes now assume picking is used. Will need to use Option<PickingTextures> instead of assuming it.
- The 3D pass is currently only implemented for the Opaque3d part
- Specialize pipelines based on whether picking is used or not. For example, consider adding/removing the entity index vertex attr based on picking/no picking.
- copy_texture_to_buffer requires msaa == 1.
- Try using RenderTarget instead of cameras. This would allow off-screen picking. Wait, this shouldn't matter. The source is still a camera, but the target changes. Shouldn't matter for picking.
- Make the picking example use more types of render passes, e.g. alpha mask, transparent 3D. Useful both as an example and for testing.

Open questions

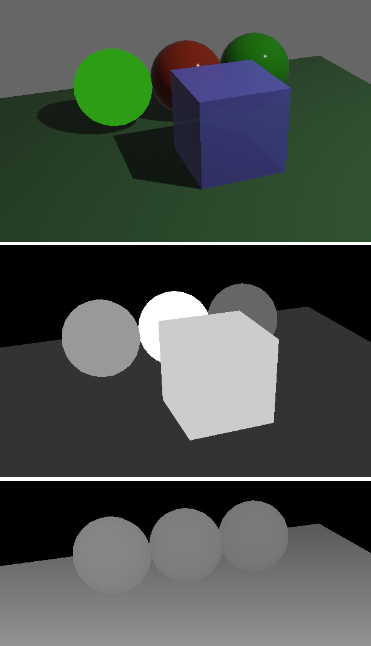

- Is sprite batching affected by adding entity indices as a vertex attr? No longer adding a vertex attr
- Where vertex attrs are used, would a uniform be better served?
- TODO: The same for UiBatch.

Also, the image shows the transparency_3d example's output after the main passes.

Top image is color, middle is picking, bottom is depth.

The transparent pass does not write to the depth buffer. How would we then report correct picking depth?

Bugs

- If a window's scale factor isn't 1.00, the picking is wrong. It still generates events, but at the wrong locations. Needs investigation.
- Sometimes on my system the app keeps rendering (no frame drops) but I can't move the cursor for >1 seconds at times. Not sure why. I can't find the perpetrator in tracy since the rendering still works smoothly.

Future work / out of scope

Single/few pixel optimization

https://webglfundamentals.org/webgl/lessons/webgl-picking.html

See this article.

There is an optimization available where only a pixel (or a few pixels) around the cursor is rendered instead of the whole scene.

I have not attempted this.

Drag selection

Perhaps if the current approach (i.e. whole texture with entity indices) is kept, drag selection would be relatively easy?

If we look at a rectangle within the texture and look at which entity indices are contained within, we know what was selected.
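As a rough illustration of that idea, the sketch below scans a rectangle of a CPU-side index texture and collects the distinct entity indices it contains. The flat `&[u32]` layout (row-major, no row padding) and the `NO_ENTITY` sentinel are assumptions for the example, not the PR's actual buffer format.

```rust
use std::collections::BTreeSet;

/// Assumed sentinel for texels that no entity was rendered to.
pub const NO_ENTITY: u32 = u32::MAX;

/// Collects the distinct entity indices inside a drag rectangle.
///
/// `texels` is row-major with `width` texels per row. `min` is inclusive,
/// `max` is exclusive, and both are clamped to the texture bounds.
pub fn entities_in_rect(
    texels: &[u32],
    width: usize,
    min: (usize, usize),
    max: (usize, usize),
) -> BTreeSet<u32> {
    let height = if width == 0 { 0 } else { texels.len() / width };
    let (x0, y0) = (min.0.min(width), min.1.min(height));
    let (x1, y1) = (max.0.min(width), max.1.min(height));

    let mut found = BTreeSet::new();
    for y in y0..y1 {
        for x in x0..x1 {
            let index = texels[y * width + x];
            if index != NO_ENTITY {
                found.insert(index);
            }
        }
    }
    found
}
```

Note that this only finds entities with at least one visible texel inside the rectangle; anything fully occluded is never written to the texture and so cannot be selected this way.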

Increasing pick area

Using exactly the mesh/uinode as the source for picking might be problematic, having it too precise may be annoying for end users.

Perhaps there are some tricks we can do to either the picking texture or just do lookups of the texture in an area to get around this.
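One possible form of the "lookup in an area" trick is sketched below: instead of reading a single texel, search a small disc around the cursor and return the closest non-empty one. As before, the flat row-major `&[u32]` layout and the `NO_ENTITY` sentinel are assumptions for illustration only.

```rust
/// Assumed sentinel for texels that no entity was rendered to.
pub const NO_ENTITY: u32 = u32::MAX;

/// Returns the entity index nearest to `cursor` within `radius` texels,
/// measured by Euclidean distance. A radius of 0 is an exact lookup.
pub fn nearest_entity(
    texels: &[u32],
    width: usize,
    cursor: (usize, usize),
    radius: usize,
) -> Option<u32> {
    if width == 0 {
        return None;
    }
    let height = texels.len() / width;
    if height == 0 {
        return None;
    }
    let (cx, cy) = cursor;
    let x_end = (cx + radius).min(width - 1);
    let y_end = (cy + radius).min(height - 1);

    // Best candidate so far as (squared distance, entity index).
    let mut best: Option<(usize, u32)> = None;
    for y in cy.saturating_sub(radius)..=y_end {
        for x in cx.saturating_sub(radius)..=x_end {
            let index = texels[y * width + x];
            let dist_sq = x.abs_diff(cx).pow(2) + y.abs_diff(cy).pow(2);
            if index == NO_ENTITY || dist_sq > radius * radius {
                continue;
            }
            if best.map_or(true, |(d, _)| dist_sq < d) {
                best = Some((dist_sq, index));
            }
        }
    }
    best.map(|(_, index)| index)
}
```

The cost grows with the square of the radius, so this is only reasonable for a few pixels of slack around thin meshes or small UI nodes.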

Debug overlay

We could add a debug overlay which shows the picking texture.

Activity log

2022 January

Use a storage buffer which works with batches instead.

It's important to set the alpha to 1.0, else the entity index will be alpha blended between other values which makes no sense.