-

Notifications

You must be signed in to change notification settings - Fork 1.3k

New issue

Have a question about this project? Sign up for a free GitHub account to open an issue and contact its maintainers and the community.

By clicking “Sign up for GitHub”, you agree to our terms of service and privacy statement. We’ll occasionally send you account related emails.

Already on GitHub? Sign in to your account

Add transient tx map to DaoState to speed up getTx queries #3773

Conversation

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

I want to preface this by saying I appreciate you jumping in and tackling one of the performance bottlenecks as one of your first projects. I think this PR will start a great discussion on the future of perf work in this layer of the code.

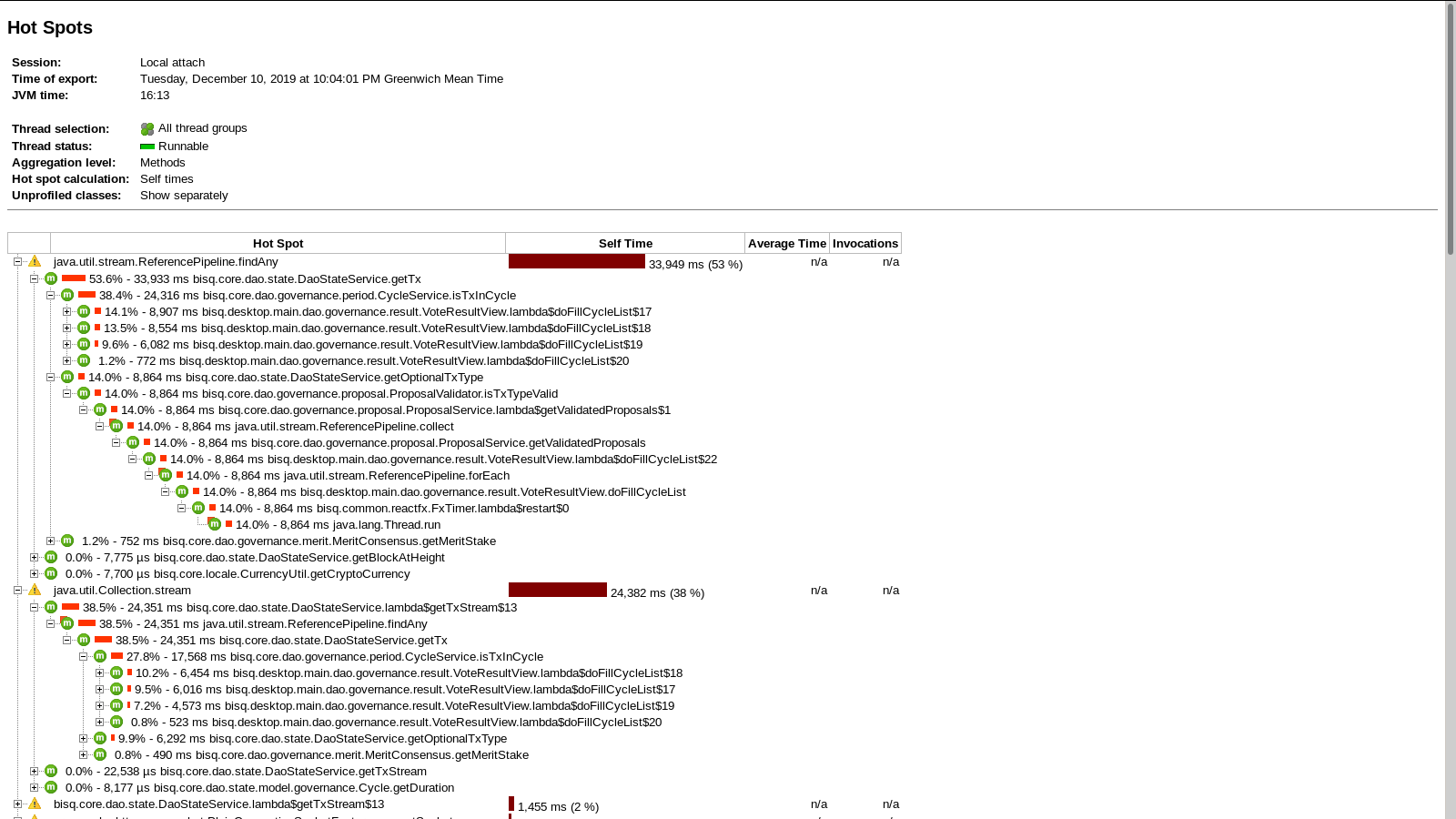

At the concept level, I think that caching dao state will be a requirement for performance. We see the same type of issues show up in jprofiler traces during startup as well.

But, I have reservations with the way this PR solves the problem. I think that applying performance optimizations on code that isn't tested can be extremely harmful and performance optimizations tend to create bugs that are much harder to track down.

The fact that the DAO is untested doesn't lend itself well to this type of work. I would like to know a lot more about how you tested this code.

-

What manual tests did you run and what should be added to the release cycle testing to ensure that caching bugs are found quickly?

-

Do you have any profile traces to share that motivate this particular caching work and the impact this patch has on performance? There are always tradeoffs and understanding which parts get slower (when rebuilding the cache) and which parts get faster (when using the cache) will help weigh the pros and cons.

I've also added comments inline about design choices. I don't have much experience in the DAO and have been scared to touch any of the code due to the current testing and correctness requirements. I will leave the more dao-related feedback to @chimp1984 and @sqrrm.

The reality is that this code will need to change if we want performance gains. I think it would be a good idea to get everyone on the same page with what can/can't be done in this area and how much testing needs to be a prerequiste to changes.

| @@ -348,16 +360,16 @@ public Coin getGenesisTotalSupply() { | |||

| .flatMap(block -> block.getTxs().stream()); | |||

| } | |||

|

|

|||

| public TreeMap<String, Tx> getTxMap() { | |||

| return new TreeMap<>(getTxStream().collect(Collectors.toMap(Tx::getId, tx -> tx))); | |||

| public Map<String, Tx> getTxMap() { | |||

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

It doesn't look like this function is used outside of this file. Probably worth inlining it appropriately.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Yes, I noticed it was unused originally. Perhaps it should just be inlined.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

I'm always in favor of removing 1-line functions as well as public functions that are unused. Just one less way for people to do the wrong thing in the future.

| } | ||

|

|

||

| public Set<Tx> getTxs() { | ||

| return getTxStream().collect(Collectors.toSet()); | ||

| return new HashSet<>(getTxMap().values()); |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

What is the contract difference between getTxMap.values() and getTxStream()? Why have both?

If they have the same data with different performance characteristics I would be in favor of using composition here and having all users go through the cache. It is much less error-prone and easier to reason about when all users just need to deal with one object for txns.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

I switched it from getTxStream() to getTxMap.values() for slightly better performance, as more indirection is required to iterate through all the block tx lists (and many of the blocks are empty). They're not interchangeable elsewhere, though, since getTxMap.values() is unordered. It doesn't matter in this case since (before and after) it's just collecting into an unordered set.

Actually, the only place getTxs() is used is in one of the views to get the total number of transactions (using size()), so it doesn't need to collect into a new HashSet and the return type could just be a Collection.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

These are the callers I found of getTxStream(). It seems like most/all of them can be satisfied by the cache, but you have been looking at it longer than me so I might be missing a use case.

getInvalidTxs: size()

getIrregularTxs: size()

getTotalBurntFee: sum()

getTotalAmountOfInvalidatedBsq: sum()

getTotalAmountOfBurntBsq: sum()

getBurntFeeTxs: Set<Tx>

getTx: Optional<Tx>

getTxOutputStream: [boolean, Optional<TxOutput>, Set<TxOutput>

maybeExportToJson: <no idea if this needs to be ordered...>

My point at a high level was that if we are going to build a cache for something, we should just use it for everything. By keeping an interface and users for the old slow way, It just creates additional performance work in the future that could be avoided by doing something better now.

Adding javadocs as you change methods is also a great way to reduce the technical debt and help explain to future users the guarantees or any gotchas that you learned the hard way.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

I looked to all usages as well:

Here my comments:

getInvalidTxs

Used in UI for numTxs and in a Set for the chart. As it's a Set order is irrelevant.

getIrregularTxs: size()

Used in UI for numTxs -> order is irrelevant.

getTotalBurntFee: sum()

getTotalAmountOfInvalidatedBsq: sum()

getTotalAmountOfBurntBsq: sum()

Order is irrelevant for sum().

getBurntFeeTxs: Set<Tx>

Used in UI for numTxs and in a Set for the chart. As it's a Set order is irrelevant.

getTx: Optional<Tx>

TxId is unique so order is irrelevant.

getTxOutputStream: [boolean, Optional<TxOutput>, Set<TxOutput>

Used in existsTxOutput and getTxOutput:

Both use txOutputKeyand as txOutputKey is unique tx order is irrelevant.

maybeExportToJson

It dumps txs and txOutputs to disk using the txId and txOutputKey as file name. As both are unique, order is irrelevant.

I guess the explorer will love to get some performance improvement from that change as well ;-). Writing to disk at each new block will be probably a major performance iussue there (another "low hanging fruit" -;) ).

Also looked into all usages of block.getTxs() and I do not see any write beside the addition of a tx in onNewTxForLastBlock. Maybe a public method inside Block encapsulating the write operation and exposing the getTxs() as unmodifiable List would make the code more safe. The read access cannot be replaced by the txMap, but as the write for both data structures happen at only one place I think it is safe (we do not have multiple threads here).

All the other data objects are immutable, only with Block it was hard as during parsing we need to know the state of past transaction in the same block. So keeping that local as we do with txs would have duplicate lots of code in DoaStateService where we need to look up if for instance BSQ is already spent by a previous tx.

|

|

||

| rawBlock.getRawTxs().forEach(rawTx -> | ||

| txParser.findTx(rawTx, | ||

| genesisTxId, | ||

| genesisBlockHeight, | ||

| genesisTotalSupply) | ||

| .ifPresent(txList::add)); | ||

| .ifPresent(daoStateService::onNewTxForLastBlock)); |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

This seems like a roundabout way to initialize the Block object that is error-prone. It seems easier to reason about if the BlockParser is responsible for initializing the block txs and having the DaoStateService call back in breaks encapsulation.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

I didn't want anything outside of DaoStateService to be modifying the block list or the tx list within each block, since the txMap cache/index must be kept in sync at all times. In particular, the block parser is calling TxParser.findTx before each new tx is added, which in turn calls DaoStateService, presumably relying on DaoState being consistent. I think this is why it's difficult to make Block.txs completely immutable, since tx's in the partially constructed last block can depend upon each other.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Ok, I read the comment above this forEach and followed the TxInputParser code. There is definitely a non-encapsulated relationship between the DaoStateService and Block.

If the TxInputParser uses the cache I guess there might not be a better way here without a much larger endeavor to clean up the ownership.

| // Third we add each successfully parsed BSQ tx to the last block | ||

| public void onNewTxForLastBlock(Tx tx) { | ||

| getLastBlock().ifPresent(block -> { | ||

| block.getTxs().add(tx); |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

I don't think that these getters should be mutable references. I would prefer to see getTxs return an immutable list (pending performance impact) so this type of pattern doesn't propagate. Lombok helps reduce boilerplate but is a major headache for understanding ownership.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Yes, it would be nice to make Block completely immutable, of course, but I think that would be quite an extensive change. Perhaps a separate addTx method could be added to Block?

| // Transient data used only as an index - must be kept in sync with the block list | ||

| @Getter | ||

| @JsonExclude | ||

| private transient final Map<String, Tx> txMap; // key is txId |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

How does the memory footprint change with this cache and how is it expected to scale over time?

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

It's using a hash table rather than a tree set, so I don't think the additional memory will be a problem - there are only about 10,000 txs or so right now, so I don't think it will take up more than 100KB or so. (The fact that DaoState.blocks is a linked list instead of an array list is probably a more significant memory issue that could be easily fixed.)

| @@ -176,6 +189,10 @@ public Message toProtoMessage() { | |||

| } | |||

|

|

|||

| public static DaoState fromProto(protobuf.DaoState proto) { | |||

| Map<String, Tx> txMap = proto.getBlocksList().stream() | |||

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

The cache initialization code doesn't need to live in the protobuf handlers. The cache is an internal optimization of the DaoState. By passing it in it isn't clear if that state could also live elsewhere and expands the testing breadth.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Yes, perhaps it would be slightly nicer to move the txMap initialisation logic into the private DaoState constructor. (It makes no difference either way to anything outside this class.)

The protobuf.DaoState object doesn't include the txMap field, BTW, so it's not being passed in here.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

If I wanted to write a test and instantiate a DaoState I would need to duplicate logic in order to create the cache and pass it in for the behavior to be correct. I realize we don't have those tests right now, but moving towards a structure that enables testing instead of making it more complicated may get people to actually do it.

| // Third we get the onParseBlockComplete called after all rawTxs of blocks have been parsed | ||

| // Third we add each successfully parsed BSQ tx to the last block | ||

| public void onNewTxForLastBlock(Tx tx) { | ||

| getLastBlock().ifPresent(block -> { |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Just retrieving the last block opens up bugs when this function is no longer called in the right sequence. I would recommend passing in the block itself or at a minimum asserting that the tx is contained in the block as a defensive technique.

Isn't it also a programming error if getBlock() is not present? It seems like just doing nothing, in that case, is a mistake.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Yes, perhaps it would have been better to pass in the last block as a sanity check.

If getBlock() is not present, then the new block must have already been rejected in the method call onNewBlockWithEmptyTxs(block) above, since there are no blocks yet and it isn't the genesis block. In that case, it won't be incorporated into the DaoState and it's BSQ transactions cannot be correctly parsed yet anyway.

(Of course, this all assumes that there is no concurrent modification of the DaoState, but it's obviously not threadsafe anyway.)

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

that there is no concurrent modification of the DaoState

It runs on the UserThread, so there is no concurrent access.

| @@ -115,6 +116,9 @@ public void applySnapshot(DaoState snapshot) { | |||

|

|

|||

| daoState.setChainHeight(snapshot.getChainHeight()); | |||

|

|

|||

| daoState.getTxMap().clear(); | |||

| daoState.getTxMap().putAll(snapshot.getTxMap()); | |||

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

How did caching at the DaoState level compare to caching at the Block level? Keeping object in-sync is complicated and I'd be interested in understanding if the simpler block-level cache has most of the gain without any of the synchronization complication.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

I'm not quite sure what you mean by caching at the block level - do you mean adding a transient field of some kind to Block?

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Yes. Instead of keeping a map of all txns that needs to be kept in sync in the DaoState object, the Block could just cache the list of transactions for itself and the lookup functions changed from O(txns) to O(blocks). I only bring this up because it would require less complexity and since there are no tests it may make it easier to guarantee correctness through just code review.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

I fear that might not have much effect on performance as usually there are very few txs in a block. In average we have 1 tx in 2 blocks.

| @@ -115,6 +116,9 @@ public void applySnapshot(DaoState snapshot) { | |||

|

|

|||

| daoState.setChainHeight(snapshot.getChainHeight()); | |||

|

|

|||

| daoState.getTxMap().clear(); | |||

| daoState.getTxMap().putAll(snapshot.getTxMap()); | |||

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

The snapshot is created from the persisted state, but the transient map isn't saved to disk. Is the tx map always empty after applying a snapshot?

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

No, it shouldn't be because the snapshot is just another DaoState instance constructed via DaoState.fromProto(), and in that method it recalculates and populates the transient field.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Indeed. The DaoStateStore calls DaoState.fromProto so the cache would be created.

|

Thanks for the quick replies to my comments. I've addressed everything that had questions and the code owners will be able to give you more substantial feedback on what they would like changed, if anything, before this is approved. |

Build a HashMap of all BSQ transactions found, when loading the DaoState from disc, and store it in a transient field which is always kept in sync with the associated list of blocks. (The latter is only modified in a couple of places in DaoStateService, making this straightforward.) This is to speed up daoStateService.getTx(id), which is called from many places and appears to be a significant bottleneck. In particular, the initial load of the results in VoteResultView.doFillCycleList was very slow (taking nearly a minute on a Core i3 machine) and likely to suffer a quadratic slowdown (#cycles * #tx's) over time.

Add getUnorderedTxStream() method to DaoStateService to stream directly from the txMap cache/index wherever it is obviously safe to do so, instead of iterating through the entire block list via getTxStream(). Also make getTxs() return a view of the txMap values in place of a copy. This should improve efficiency slightly.

ce311cd

to

4277cf8

Compare

|

I've amended the original commit in accordance with some of the suggestions above. A sanity check has been added to I've also added another commit to replace all but one use of |

|

Sorry, I meant |

|

Also meant |

|

Is there a reason why you did not use |

|

|

||

| txMap = blocks.stream() | ||

| .flatMap(block -> block.getTxs().stream()) | ||

| .collect(Collectors.toMap(Tx::getId, Function.identity(), (x, y) -> y, HashMap::new)); |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

I assume the mergeFunction is only passed as you want to have the mapFactory. Not sure what the mergeFunction really should do as conflicts are not expected and not clear how to handle it. Maybe throwing an exception would be more appropriate here? Or maybe just add a comment.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

I wanted to guarantee that the output is a HashMap and it looks like the only toMap overload with a mapFactory param also has a mergeFunction param, so I was forced to provide a dummy merge function.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Yes, I expected that intention...

I just was wondering how we can be sure to not change behaviour. The previous code used the flatMap.

public Stream<Tx> getTxStream() {

return getBlocks().stream()

.flatMap(block -> block.getTxs().stream());

}

Do you know how potential key conflics would have been handled there? i assume your mergeFunction to overwrite with a new value if it happens is likely the standad behaviour if not otherwise defined. So your mergeFunction is likely better than throwing an exception if flatMap behaves the same. Anyway a bit "esoteric" but the DAO might deserve a bit of extra paranoia ;-)

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

With the (x, y) -> y merge function it's currently using, it will always select the last tx with a given txId from the tx stream when building the map, whereas in the original code getTx is calling findAny on the filtered getTxStream output, which will probably behave the same as findFirst in this case. So perhaps the merge function should be (x, y) -> x to be absolutely sure the behaviour doesn't change.

Also, for consistent merge behaviour, putIfAbsent would need to be substituted into the line:

daoState.getTxMap().put(tx.getId(), tx);

in DaoState.onNewTxForLastBlock.

| // Third we get the onParseBlockComplete called after all rawTxs of blocks have been parsed | ||

| // Third we add each successfully parsed BSQ tx to the last block | ||

| public void onNewTxForLastBlock(Block block, Tx tx) { | ||

| // At least one block must be present else no rawTx would have been recognised as a BSQ tx. |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

We should add here the assertDaoStateChange(); check for conistency. All methods which alter the DAO state call that method to guard against changes outside of the permitted process. Only at parsing the DAO state must be changed.

| // Third we add each successfully parsed BSQ tx to the last block | ||

| public void onNewTxForLastBlock(Block block, Tx tx) { | ||

| // At least one block must be present else no rawTx would have been recognised as a BSQ tx. | ||

| Preconditions.checkArgument(block == getLastBlock().orElseThrow()); |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

This would change the original behaviour.

We used the onNewBlockWithEmptyTxs to add a block, but in case we got into the if path we did not add the block but only log a warning. Adding of transactions would had no effect as it was a local list in the original code. Now we throw an exception in such a case as we expect that the block has been added.

EDIT:

To be more clear. We should use a if case and ignore if the block is not the last block. This would reflect the existing behaviour.

public void onNewBlockWithEmptyTxs(Block block) {

assertDaoStateChange();

if (daoState.getBlocks().isEmpty() && block.getHeight() != getGenesisBlockHeight()) {

log.warn("We don't have any blocks yet and we received a block which is not the genesis block. " +

"We ignore that block as the first block need to be the genesis block. " +

"That might happen in edge cases at reorgs. Received block={}", block);

} else {

daoState.getBlocks().add(block);

if (parseBlockChainComplete)

log.info("New Block added at blockHeight {}", block.getHeight());

}

}

I am not sure if that case is valid and can happen, but as the log suggests there might be tricky edge cases in re-org scenarious where this was a possible scenario. Testing those edge cases is pretty tricky and it can be that it was during development an issue which disappeared later and is not present anymore. But I would prefer to stay very conservative/restrictive in the DAO domain as a consensus bug can have severe consequences and the DAO has a very deep level of complexity. If we are not 100% sure that existing code is wrong I prefer to stick with it, as this code base has been tested excessively and is in production since April.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

NACK for that Preconditions check, otherwise it looks good. Thanks for working on that.

I need a bit more time to go again over all and check if anything might be missing.

Could you provide a comparision of performance gains from that PR?

|

@stejbac @julianknutsen |

|

@sqrrm Would be great if you could review as well. |

Thanks for bringing up the broader discussion! My view is that the DAO code should be only touched if there is a very clear reason. Bugs justify the risk to change the code and critical performance issues. There are some layer in the DAO code which is less critical like requesting the BSQ blocks. But the core, the parsing, DaoState and data structures are highly critical consensus code and have to been taken with lot of care. Regarding testing: For the current state testing can be done by creating all types of DAO transactions and use all DAO features (burn BSQ, voting,...) and create a few cycles with param changes (also radical changes - there we found and fixed a bug which only occurred at more radical changes). The DaoState must be then the same from the original version and the changed version. To resync from genesis is required and running as full DAO node is recommended as well. I usually run 1 full node and one lite node to cover both paths. For dev testing regtest is enough but for the final test this has to be done with the mainnet. A resync with the lite node causes a bit of stress for the live seed nodes, so it should be done only if needed. They only deliver max. 6000 blocks, so it will require repeated BSQ block requests. Also it takes quite a bit of time, but comparing the hashes of the last block gives a the best assurance we have atm regarding the DAO. By the way: As in the past we had problems with merged PRs to the dao domain which have been problematic, we decided that the code inside the dao package in the core module requires an explicit ACK from @ManfredKarrer (see bisq-network/proposals#119). |

|

Just did a quick comparison of the effect that changes have when displaying the DAO vote results. With current master the |

|

Parsing 1042 blocks takes 39 sec with that PR. With master it takes 59 sec. So here the effect is not as big but still good to see some speed up! |

| @@ -624,7 +625,8 @@ public int getNumIssuanceTransactions(IssuanceType issuanceType) { | |||

| return daoStateService.getUnspentTxOutputs(); | |||

| } | |||

|

|

|||

| public Set<Tx> getTxs() { | |||

| // Returns a view rather than a copy of all the txs. | |||

| public Collection<Tx> getTxs() { | |||

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

As the client only is interested in the number of txs, I think we should change that to a method only returning the size. This will further render the getTxs() method in daoStateService needless.

|

|

||

| public Optional<Tx> getTx(String txId) { | ||

| return Optional.ofNullable(getTxMap().get(txId)); | ||

| public Collection<Tx> getTxs() { |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

This method is only called by DaoFacade where the client is only interested in the size, so I would suggest to add a new getNumTxs() method instead and the other usage is the getUnorderedTxStream() method in the same class, which could use daoState.getTxMap().values().stream() directly. So we can remove the getTxs() completely.

| @@ -115,6 +116,9 @@ public void applySnapshot(DaoState snapshot) { | |||

|

|

|||

| daoState.setChainHeight(snapshot.getChainHeight()); | |||

|

|

|||

| daoState.getTxMap().clear(); | |||

| daoState.getTxMap().putAll(snapshot.getTxMap()); | |||

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

I fear that might not have much effect on performance as usually there are very few txs in a block. In average we have 1 tx in 2 blocks.

Ah, the original method delivers the txs ordered by blocks and inside blocks the txs are ordered by inter-block dependencies (as we get it from Bitcoin core). But the |

|

I created a patch with my recommended changes. Feel free to ignore the patch ;-), most of my points have been in the comments above as well, but might be easier to have all in code.... https://gist.github.com/chimp1984/fd856131b1dbb57151dbecccae788fe9 |

|

By the way also the BSQ tx screen seems to be much faster now. Great work on that improvement @stejbac !!! I think this work shows that it is "relatively easy" (if not DAO it's much less risk) to fix some of the major performance bottlenecks and deliver drastical improvements each user can feel. I would love to see those profiling efforts to continue and fix other open issues as well. |

This is why I think in the future it would be good to show the performance graphs in the PR description as a motivation for the changes. Backing up the code change with the performance data can help the reviewers and maintainers justify the risk involved and quickly decide if it is worth pursuing a particular path. In this case, you had to download and run the code to generate the performance gain which isn't very scalable if you or any reviewers have to do that for every change. Obviously Steven saw the perf gain so I am just suggesting to make it more explicit in the PR description moving forward.

I'm not suggesting that every change in the DAO or otherwise needs to refactor and 100% coverage test the code before anything can be done. I'm only suggesting that an explicit plan for how, when, and who tests this code up-front and as part of the PR description will reduce risk and give explicit responsibility so the quality bar can raise over time.

Was any of this testing done so far? In the future, I would like to see this type of text as a separate section in the PR written by the author. As we try and move more of the work off of the maintainers and reviewers, it is important that PR authors can show the testing that is done and have a plan for how this will be tested in the next release as well as long-term. This particular code change is relatively benign, but I think it is just the tip of the iceberg in terms of what will need to be done and an effort to be extremely thoughtful about the motivation, tradeoffs, risks, existing testing, new testing, etc is only going to raise the quality bar for the project long-term.

In this particular case, it isn't clear who is in charge of moving this forward. @stejbac has received a lot of feedback and made some changes, but we need a "decider" to help narrow down the lists of "could do" to "must do" so Steven isn't spinning his wheels.

@stejbac Although it may not seem like it from some of my critiques, I agree. We need more people who will dive in and just get the work done. Obviously, this first one is a bit of a pain with the overarching discussion, but the outcome should be a much better process for this type of work moving forward. That way, we can all spend more time making the software better and less time talking about process. It's great to have you here making Bisq better. |

I would do that once I give it an ACK but author should also do it once the PR is complete. So far there has been one issue I would not agree to. |

Avoid mutating the Block tx list or the DaoState tx cache/index via a Lombok getter. Instead wrap each in an unmodifiable[List|Map] & provide specific mutator methods for use by DaoStateService to add newly parsed transactions or load a DAO snapshot. Also rename txMap to txCache, replace remaining use of getTxStream() in the JSON file exporter with getUnorderedTxStream() (as this is safe) and swap the arguments of the txCache initialisation merge function, for exact consistency with the pre-caching behaviour. Finally, add a missing assertDaoStateChange() and remove a potentially harmful assertion from DaoStateService.onNewTxForLastBlock. This is based on a suggested patch by @chimp1984 in the PR bisq-network#3773 review.

|

I've applied the suggested patch as a third commit, minus a few of the non-mutating accessors added to |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

utACK - I will try to find tomorrow time for testing.

|

I have reviewed the code without testing it and it looks good, after the latest changes. The discussion on performance improvements is great. Would help a lot to have the data in the PR to be able to judge the risk/reward of changes like these. I think it's worth it in this case, with the further information provided, but the effort of testing DAO changes is pretty high. I can only see integration tests being useful when it comes to automated testing, with perhaps a few unit tests being helpful. My experience with unit tests is pretty poor though so perhaps there is a way and I'm just not able to see it. |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

ACK

Tested with building the daoState from genesis block (removed dao related resource files and started a new fresh app) with a full DAO node as well tested with regtest several cycles with all transaction types. DaoState was always the same as with a version from master and no issues observed.

Thanks a lot for the valuable PR!

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

utACK - based on #3773 (review)

As agreed DAO changes need an ACK by @ManfredKarrer, so I'll merge this PR based on his review.

Build a

HashMapof all BSQ transactions found, when loading theDaoStatefrom disc, and store it in a transient field which is always kept in sync with the associated list of blocks. (The latter is only modified in a couple of places inDaoStateService, making this straightforward.)This is to speed up

daoStateService.getTx(id), which is called from many places and appears to be a significant bottleneck. In particular, the initial load of the results inVoteResultView.doFillCycleListwas very slow (taking nearly a minute on a Core i3 machine) and likely to suffer a quadratic slowdown (#cycles * #tx's) over time.