
Home

TestSwarm provides distributed continuous integration testing for JavaScript. It was initially created by John Resig as a tool to support the jQuery project and has since moved to become an official Mozilla Labs project.

NOTE: TestSwarm is currently in an Alpha state. Critical problems may arise during the alpha test that result in lost data, disconnected clients, and other undesirable effects. Please keep this in mind while participating in the alpha test.

Much of the reasoning behind the original creation of TestSwarm is explained in the following:

The primary goal of TestSwarm is to take the complicated and time-consuming process of running JavaScript test suites in multiple browsers and greatly simplify it. It achieves this goal by providing all the tools necessary for creating a continuous integration workflow for your JavaScript project.

A walkthrough of TestSwarm.com is available on Vimeo.

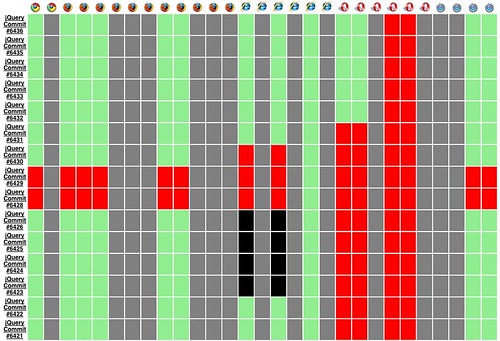

The ultimate result of TestSwarm is a page that looks like this:

It shows source control commits (going vertically) by browser (going horizontally). ‘Green’ results are 100% passing, ‘Red’ results have at least one failure, ‘Black’ results include a critical error, and ‘Grey’ results have yet to be run. The above page should be possible for any project that has a populated JavaScript test suite.
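The colour rules above can be sketched as a small function. This is only an illustration: the result shape (`fail`, `error`, and `null` for a run that has not happened yet) is an assumption made for the example, not TestSwarm's actual data model.

```javascript
// Hypothetical result shape: { total, fail, error }, or null if the run
// has not been executed yet. Field names are assumptions for this sketch.
function statusColor(result) {
  if (result === null || result === undefined) {
    return "grey"; // yet to be run
  }
  if (result.error > 0) {
    return "black"; // includes a critical error
  }
  if (result.fail > 0) {
    return "red"; // at least one failure
  }
  return "green"; // 100% passing
}

console.log(statusColor(null));
console.log(statusColor({ total: 12, fail: 0, error: 0 }));
```

Errors are checked before failures so that a run with both is shown as a critical error.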

TestSwarm.com is provided as a service (and demonstration of the code base) to a few popular, open source, JavaScript libraries (including jQuery, YUI, Dojo, MooTools, and Prototype). If you wish to use TestSwarm for your own project please follow the instructions below.

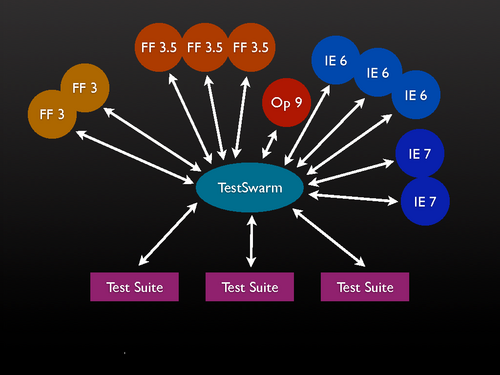

The architecture is as follows:

- There’s a central server which clients connect to and to which jobs are submitted (this is written in PHP and uses MySQL as a backend).

- A client is an instance of the TestSwarm test runner loaded in a browser. One user (e.g. ‘john’) can run multiple clients – and even multiple clients within a single browser. For example you could open 5 tabs in Firefox 3 each with a view of the test runner and would have 5 clients connected.

- The test runner is very simple – it’s mostly implemented in JavaScript. It pings the server every 30 seconds asking for a new test suite to run. If there is one it executes it (inside an iframe) and then submits the results back to the central server. If there isn’t a test suite available it just goes back to sleep and waits.

- A job is a collection of two things: test suites and browsers. When a project submits a job, it specifies which test suites to run (it may be only one test suite, but in the case of jQuery I break apart the main suite into sub-suites for convenience and parallelization) and which browsers to run them against.

- This ends up generating a bunch of ‘runs’ (a run is one specific browser running one specific test suite). A run can be executed multiple times (the minimum is once). For example a project could say “Make sure this suite is run at least 5 times in Firefox 3.”
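To make the relationship between jobs and runs concrete, here is a minimal sketch of how a job might expand into runs. The `expandJob` function and the job and run field names are hypothetical, chosen for illustration; the real server is written in PHP and stores this in MySQL.

```javascript
// Expand a job (suites x browsers) into individual runs.
// All names here are assumptions for this sketch, not TestSwarm's schema.
function expandJob(job) {
  // A run is executed at least once, so clamp the minimum to 1.
  const minRuns = Math.max(1, job.minRuns || 1);
  const runs = [];
  for (const suite of job.suites) {
    for (const browser of job.browsers) {
      runs.push({ suite, browser, minRuns, timesRun: 0 });
    }
  }
  return runs;
}

const exampleJob = {
  suites: ["core", "ajax"],
  browsers: ["Firefox 3", "Opera 10", "Safari 4"],
  minRuns: 5,
};
const exampleRuns = expandJob(exampleJob);
console.log(exampleRuns.length); // 6 (2 suites x 3 browsers)
```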

Essentially, the server just acts as a giant queue. Jobs (with suites and browsers) come in and get pushed onto the queue, while clients constantly ping the server asking for new test suites to run. When a suite is found, the client executes it, and the results are sent back to the server and aggregated.
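The queue behaviour described above can be sketched in memory as follows. This is an illustrative model only: the `SwarmQueue` class, its method names, and the run fields are assumptions, and the real server implements this in PHP on top of MySQL.

```javascript
// In-memory model of the server-side queue (hypothetical names throughout).
class SwarmQueue {
  constructor() {
    this.runs = [];
  }

  // A submitted job pushes one pending run per suite/browser pair.
  enqueue(suite, browser) {
    this.runs.push({ suite, browser, status: "pending", result: null });
  }

  // Called when a client pings: hand out the first pending run that
  // matches the client's browser, or null so the client goes back to sleep.
  ping(browser) {
    const run = this.runs.find(
      (r) => r.status === "pending" && r.browser === browser
    );
    if (!run) {
      return null;
    }
    run.status = "running";
    return run;
  }

  // Called when the client submits its results.
  report(run, result) {
    run.status = "done";
    run.result = result;
  }
}

const queue = new SwarmQueue();
queue.enqueue("core", "Firefox 3");
const work = queue.ping("Firefox 3");
if (work) {
  queue.report(work, { fail: 0, error: 0, total: 12 });
}
```

Jobs can be enqueued before any client is connected; they simply stay pending until a matching browser pings.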

An important aspect of TestSwarm is its ability to proactively correct bad results coming in from clients. As any web developer knows: Browsers are surprisingly unreliable (inconsistent results, browser bugs, network issues, etc.). Here are a few of the things that TestSwarm does to try and generate reliable results:

- If a client loses its internet connection or otherwise stops responding its dead results will be automatically cleaned up by the swarm.

- If a client is unable to communicate with the central server it’ll repeatedly re-attempt to connect (even going so far as to reload the page in an attempt to clear up any browser-borne issues).

- The client has a global timeout to watch for test suites that are uncommunicative.

- The client has the ability to watch for individual test timeouts, allowing for partial results to be submitted back to the server.

- Bad results submitted by clients (e.g. ones with errors, failures, or timeouts) are automatically re-run in new clients in an attempt to arrive at a passing state (the number of times the test is re-run is determined by the submitter of the job).

Taken together, these strategies help the swarm to be quite resilient to misbehaving browsers, flaky internet connections, or even poorly-written test suites.
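Two of the strategies above, cleaning up dead clients and re-running bad results, can be sketched as simple decision functions. The function names, the result fields (`fail`, `error`, `timedOut`), and the `lastPing` timestamp are assumptions made for this example rather than TestSwarm's actual implementation.

```javascript
// A result is "bad" if it has failures, errors, or a timeout.
function isBadResult(result) {
  return result.fail > 0 || result.error > 0 || result.timedOut === true;
}

// Re-run until one attempt passes or the submitter's limit is reached.
function shouldRerun(attempts, maxRuns) {
  if (attempts.some((r) => !isBadResult(r))) {
    return false; // a passing state has been reached
  }
  return attempts.length < maxRuns;
}

// Clients that have not pinged within timeoutMs are considered dead,
// so their in-progress results can be cleaned up.
function findDeadClients(clients, now, timeoutMs) {
  return clients.filter((c) => now - c.lastPing > timeoutMs);
}

const attempts = [{ fail: 2, error: 0, timedOut: false }];
console.log(shouldRerun(attempts, 3)); // true: one bad attempt, limit not reached
```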

If you are a casual user of some open source JavaScript libraries and wish to help them generate high-quality test results for their project then TestSwarm is the perfect way to get involved. Right now TestSwarm.com is open for alpha testing.

- To start, you should create an account on TestSwarm.com

- Then, you should connect to the swarm (you’ll see a link in the page header providing more information).

- You can open your run page in as many tabs and in as many browsers as you wish. The more browsers you open it in, the more resources you’ll be providing to the swarm.

- If you encounter any problems please connect to the swarm and submit a bug report.

Don’t be alarmed if you don’t see any tests to run. Tests are submitted whenever a commit occurs and, depending upon the number of clients connected, may be pushed to you infrequently.

At this time TestSwarm.com is provided as a service (and demonstration of the code base) to a few popular, open source, JavaScript libraries. If you wish to use TestSwarm for your own projects you’ll need to set up your own swarm installation. It’s assumed that you already have a JavaScript project that has a test suite associated with it.

To get a swarm up and running you’ll need to observe the following steps:

- Download the source code and follow the installation guide. TestSwarm requires PHP and MySQL and makes use of Apache rewrite rules. To configure the browser list you’ll need to edit the browsers in the useragents db table (unfortunately there isn’t a good UI for it yet).

- To start pushing tests into your swarm you’ll need to set up a script to automatically send in new commits (if that’s how you choose to submit new jobs). Some sample scripts can be seen in the ‘scripts’ directory. The provided scripts work with both SVN and Git. You can run the script from a post-commit hook if you have one, or from a cron job.

- You can also submit jobs manually through a web interface (http://testswarm.com/?state=addjob), but it’s doubtful that this will be immediately useful (scripts are much preferred).

- TestSwarm comes with support for the following test frameworks built in (with more to come): QUnit (jQuery), UnitTestJS (Prototype), JSSpec (MooTools), JSUnit, Selenium, and Dojo Objective Harness.

- An important point that’s noted in the example scripts is that in order for a suite to run you’ll need to add in a single script tag. That tag points back to the /js/inject.js script on the TestSwarm server – this injection script allows the test suite to capture and communicate with TestSwarm itself. If you’re using any of the already-integrated test suites (noted previously) then you’re off to the races – otherwise you’ll need to build in support for your own suite (how to do this is noted below, and shown in /js/inject.js).

- To add your own test suite in you’ll need to add in an HTML serialization hook, overriding `window.TestSwarm.serialize`. Additionally, when the test suite is completed you’ll need to call `window.TestSwarm.submit` with the total number of failures, errors, and total number of tests run. Optionally you can call `window.TestSwarm.heartbeat()` after every test completes to provide better test timeouts.

- Note that in order to submit a job you’ll need an AUTH token. You can get this by looking in the users db table for the specific user that you’re submitting jobs under.

- At this point you should be able to start pushing test suites to your swarm and allowing clients to connect and run tests.
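As a rough illustration of the custom test suite integration described in the steps above, the sketch below wires a hypothetical test runner to the `window.TestSwarm` hooks. Only `serialize`, `submit`, and `heartbeat` come from the text; the runner's `renderHtml`, `onTestDone`, and `onDone` members are invented for the example, and the object shape passed to `submit` is an assumption, so check `/js/inject.js` for the format it actually expects.

```javascript
// Connect a custom test runner to the TestSwarm hooks.
// `swarm` is expected to be window.TestSwarm (provided by /js/inject.js).
// `runner` is a hypothetical test runner; its members are assumptions.
function connectRunnerToSwarm(swarm, runner) {
  // HTML serialization hook: return the markup that represents the results.
  swarm.serialize = function () {
    return runner.renderHtml();
  };

  // Optional: signal liveness after every test for better timeouts.
  runner.onTestDone = function () {
    swarm.heartbeat();
  };

  // When the whole suite completes, report the totals.
  // The { fail, error, total } shape is an assumption for this sketch.
  runner.onDone = function (summary) {
    swarm.submit({
      fail: summary.failures,
      error: summary.errors,
      total: summary.total,
    });
  };
}

// In the browser, after inject.js has loaded (myRunner is hypothetical):
//   if (window.TestSwarm) { connectRunnerToSwarm(window.TestSwarm, myRunner); }
```

Passing the TestSwarm object in as a parameter keeps the adapter easy to exercise outside the swarm with a stub.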

If you have any questions, or encounter any problems, please feel free to discuss them on the Google Group or submit a bug to the bug tracker.

Selenium provides a fairly complete stack of functionality. It has a test suite, a test driver, automated browser launching, and the ability to distribute test suites to many machines (using its grid functionality). There are a few important ways in which TestSwarm is different:

- TestSwarm is test suite agnostic. It isn’t designed for any particular test runner and is capable of supporting any generic JavaScript test suite.

- TestSwarm is much more decentralized. Jobs can be submitted to TestSwarm without clients being connected – and will only be run once clients eventually connect.

- TestSwarm automatically corrects mis-behaving clients and malformed results.

- TestSwarm provides a full continuous integration experience (hooks into source control and a full browser-by-commit view, which is critical for determining the quality of commits).

- TestSwarm doesn’t require any browser plugins or extensions to be installed – nor does it require that any software be installed on the client machines.

For a number of corporations, Selenium may already suit their needs (especially if they already have a form of continuous integration set up).

There are many other browser launching tools (such as Watir) but all of them suffer from the same problems as above – and frequently with even less support for advanced features like continuous integration.

A popular alternative to the process of launching browsers and running test suites is that of running tests in headless instances of browsers (or in browser simulations, like in Rhino). All of these suffer from a critical problem: At a fundamental level you are no longer running tests in an actual browser – and the results can no longer be guaranteed to be identical to an actual browser. Unfortunately nothing can truly replace the experience of running actual code in a real browser.