
Adds N-step learning for DQN-based agents. #317


…asses that into replaybuffer
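The PR's approach is to build n-step transitions and pass those into the replay buffer. Below is a minimal sketch of that step. It is not this PR's code: the class `NStepAccumulator`, its `push` method, and the tuple layout are hypothetical, and a plain Python list stands in for the replay buffer. The sketch keeps a sliding window of the last n one-step transitions. It emits one n-step transition `(s_t, a_t, R_t^(n), s_{t+k}, done, k)` per step, and it flushes the shorter windows when an episode ends.

```python
from collections import deque
from typing import Any, Deque, List, Tuple

# (state, action, reward, next_state, done) for a single environment step
Transition = Tuple[Any, int, float, Any, bool]


class NStepAccumulator:
    """Hypothetical helper: folds up to n one-step transitions into one
    n-step transition (s_t, a_t, R_t^(n), s_{t+k}, done, k)."""

    def __init__(self, n: int, gamma: float):
        if n < 1:
            raise ValueError("n must be >= 1")
        self.n = n
        self.gamma = gamma
        self.window: Deque[Transition] = deque()

    def _fold(self) -> tuple:
        # Discounted sum of the rewards in the window: r_t + g*r_{t+1} + ...
        ret, discount = 0.0, 1.0
        state, action = self.window[0][0], self.window[0][1]
        for k, (_, _, r, nxt, done) in enumerate(self.window, start=1):
            ret += discount * r
            discount *= self.gamma
            if done:
                # Terminal step reached: no bootstrap beyond this point.
                return (state, action, ret, nxt, True, k)
        return (state, action, ret, nxt, False, len(self.window))

    def push(self, transition: Transition) -> List[tuple]:
        """Add one step; return the n-step transitions ready to be stored."""
        self.window.append(transition)
        ready = []
        if transition[4]:
            # Episode ended: flush every remaining (shorter) window.
            while self.window:
                ready.append(self._fold())
                self.window.popleft()
        elif len(self.window) == self.n:
            ready.append(self._fold())
            self.window.popleft()
        return ready


# Usage: a 5-step episode with reward 1.0 per step, n = 3, gamma = 0.5.
buffer = []  # stand-in for the agent's replay buffer
acc = NStepAccumulator(n=3, gamma=0.5)
for t in range(5):
    buffer.extend(acc.push((t, 0, 1.0, t + 1, t == 4)))
```

Each transition stores `k`, the number of steps it actually covers. A learner can then discount the bootstrap term by `gamma ** k`. This matters for the truncated windows at the end of an episode. With `n=1`, each call to `push` returns the input transition unchanged, with `k=1` appended.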


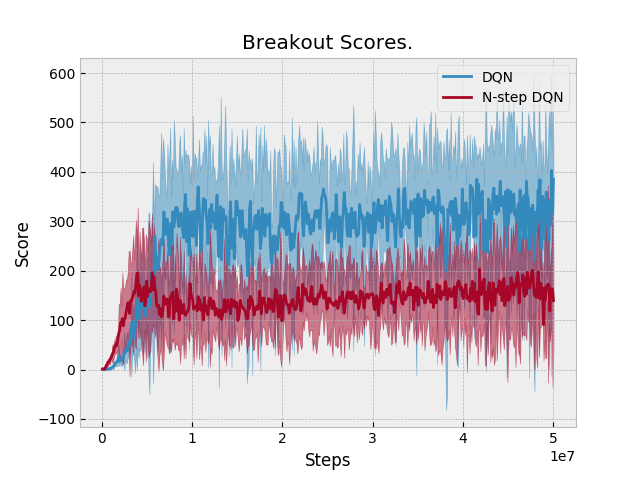

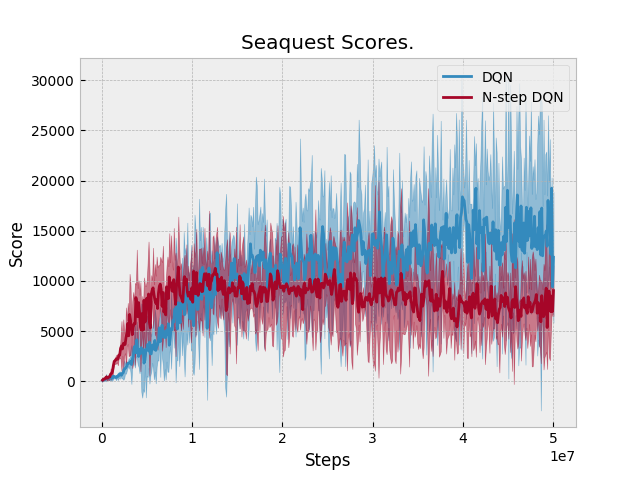

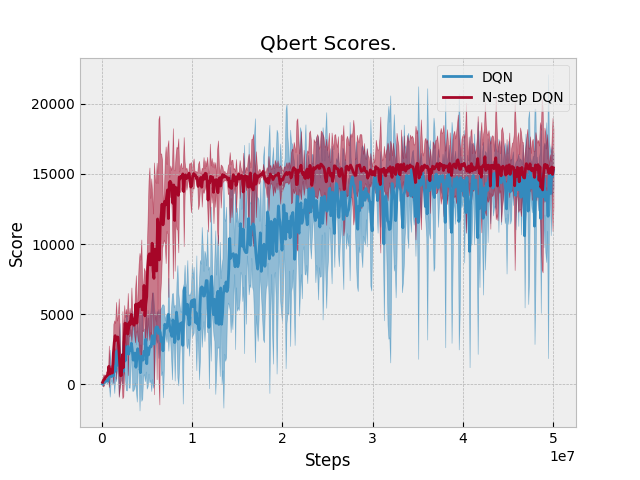

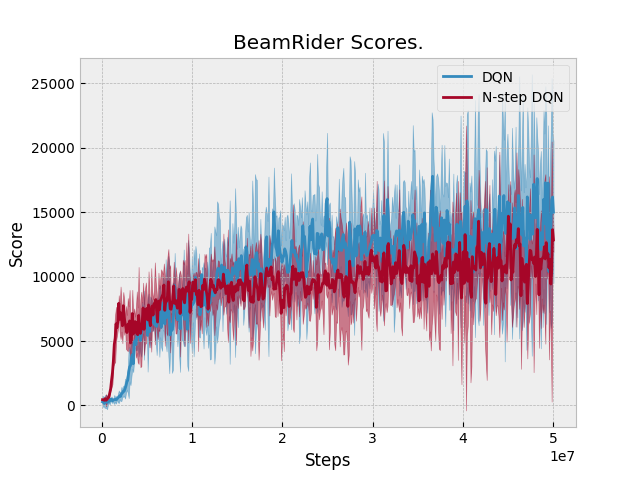

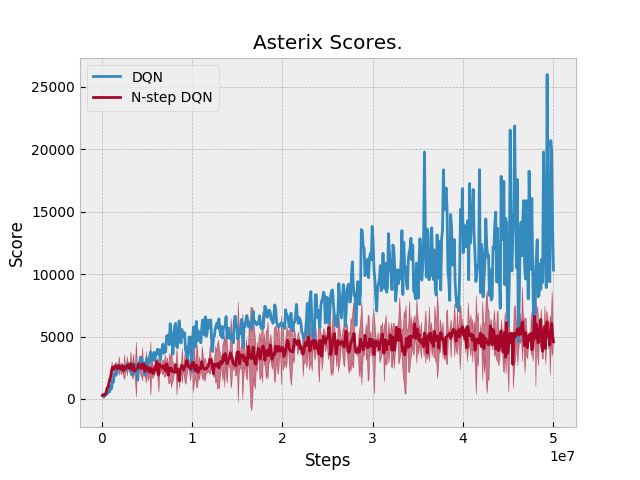

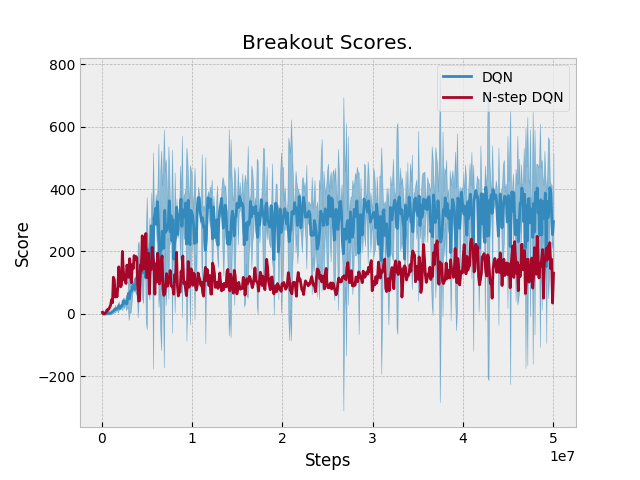

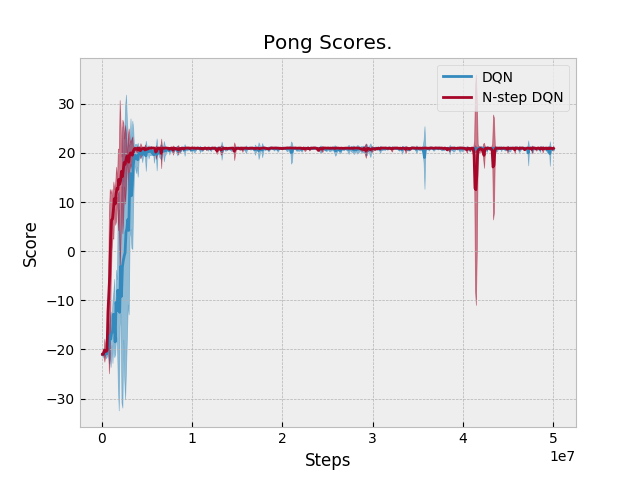

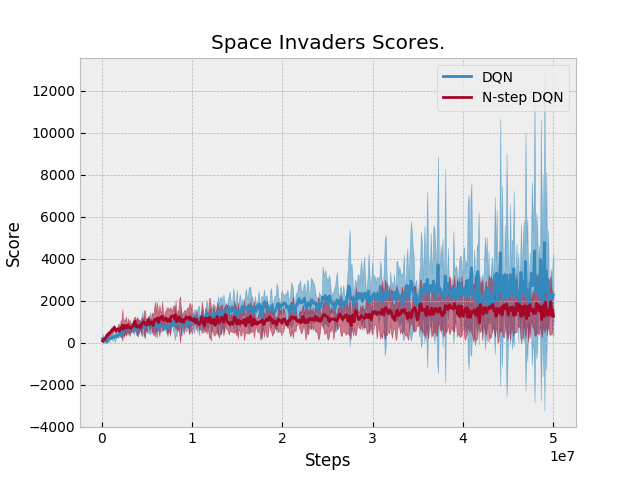

Results: N-step DQN performs as well as or slightly better than DQN in two domains, Qbert and Pong. In the remaining domains, N-step DQN performs worse. Unfortunately, we don't have a baseline to compare against.


Just eyeballed this PR's DQN results (which are actually double DQN) and compared them to the Tuned DQN results from: The results look similar. This suggests that running the n-step DQN implementation as 1-step DQN does not adversely affect performance.
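The comparison above relies on the n-step target reducing to the ordinary one-step target when n = 1. The sketch below illustrates this for double DQN, using plain Python lists of Q-values. It is not this PR's implementation: `n_step_double_dqn_target` and `one_step_double_dqn_target` are hypothetical helpers. In the sketch, the online network's Q-values pick the greedy action at the bootstrap state, and the target network's Q-values evaluate that action.

```python
from typing import Sequence


def _greedy(q_values: Sequence[float]) -> int:
    # Index of the largest Q-value (first one on ties).
    return max(range(len(q_values)), key=lambda a: q_values[a])


def n_step_double_dqn_target(
    n_step_return: float,
    steps: int,
    done: bool,
    gamma: float,
    q_online_next: Sequence[float],
    q_target_next: Sequence[float],
) -> float:
    """Hypothetical n-step double DQN target:
    R^(n) + gamma**k * Q_target(s_{t+k}, argmax_a Q_online(s_{t+k}, a)),
    with no bootstrap if the episode ended inside the window."""
    if done:
        return n_step_return
    greedy = _greedy(q_online_next)
    return n_step_return + (gamma ** steps) * q_target_next[greedy]


def one_step_double_dqn_target(
    reward: float,
    done: bool,
    gamma: float,
    q_online_next: Sequence[float],
    q_target_next: Sequence[float],
) -> float:
    """Standard 1-step double DQN target, written independently for comparison."""
    if done:
        return reward
    greedy = _greedy(q_online_next)
    return reward + gamma * q_target_next[greedy]


# Usage: the online net prefers action 1; the target net values it at 2.0.
q_online = [0.1, 0.9, 0.3]
q_target = [1.0, 2.0, 5.0]
same = n_step_double_dqn_target(1.0, 1, False, 0.5, q_online, q_target) == \
    one_step_double_dqn_target(1.0, False, 0.5, q_online, q_target)
```

With `steps=1` and the n-step return equal to the single reward, both functions compute the same expression. So a correct n-step implementation run with n = 1 should behave like 1-step (double) DQN, up to run-to-run noise.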


Can you resolve the conflicts?


Looks good!

Known affected agents:

- DQN