Scality S3 on a Raspberry Pi 3 with Raspbian (Debian Jessie 8.0)

The Raspberry Pi 3 single board computer from the Raspberry Pi Foundation has a 1.2 GHz quad-core ARMv8 processor, 1 GByte main memory and a 10/100 Mbps Ethernet interface.

This installation tutorial explains the installation of a Raspberry Pi 3 device from scratch and the configuration of s3cmd on your computer.

This assumes that s3cmd is already installed on the system you want to use to interact with the Scality S3 storage service.

$ wget https://downloads.raspberrypi.org/raspbian_lite/images/raspbian_lite-2017-03-03/2017-03-02-raspbian-jessie-lite.zip

$ unzip 2017-03-02-raspbian-jessie-lite.zip

Check which one is the correct device! If you use an internal card reader, it is often /dev/mmcblk0.
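Because dd overwrites its target without asking, a small guard before writing the image can help. The following is only a minimal sketch and not part of the original setup: the function name check_target is hypothetical, and it merely tests that the path is a block device and does not appear in /proc/mounts.

```shell
# check_target DEVICE
# Hypothetical helper: succeeds only if DEVICE is a block device and neither
# it nor one of its partitions is listed in /proc/mounts.
check_target() {
    dev="$1"
    if [ ! -b "$dev" ]; then
        echo "$dev is not a block device" >&2
        return 1
    fi
    if grep -q "^$dev" /proc/mounts 2>/dev/null; then
        echo "$dev or one of its partitions is mounted" >&2
        return 1
    fi
    echo "$dev is a block device and not mounted"
}
```

A possible use is to run check_target /dev/sdb first and start dd only if it succeeds. The lsblk command lists the available block devices with their sizes, which helps to identify the card.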

# dd bs=1M if=2017-03-02-raspbian-jessie-lite.img of=/dev/sdb && sync

Default login of this image is pi/raspberry. To become user root, execute sudo su.

Older Raspbian versions started the SSH server by default. For security reasons (as explained here), all Raspbian versions since version 2016-11-25 (see the release notes) have the SSH server disabled by default. To get the SSH server activated automatically at boot time, create a file named ssh with any content (or just an empty file) inside the boot partition (the first partition) of the micro SD card.

# mount /dev/sdb1 /media/

# touch /media/ssh

# umount /media/

Insert the micro SD card into the Raspberry Pi, connect the Ethernet cable and the micro USB cable for the power supply, and switch on the power supply. By default, the operating system tries to fetch its network configuration via DHCP on the Ethernet interface. If you activated the SSH server, you can now log in via SSH.

The system used with this tutorial had these characteristics:

$ lsb_release -a

No LSB modules are available.

Distributor ID: Raspbian

Description: Raspbian GNU/Linux 8.0 (jessie)

Release: 8.0

Codename: jessie

$ uname -a

Linux raspberrypi 4.4.50-v7+ #970 SMP Mon Feb 20 19:18:29 GMT 2017 armv7l GNU/Linux

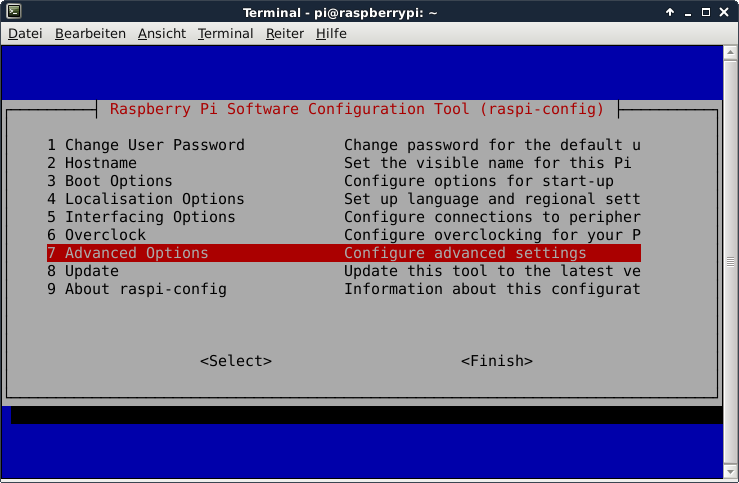

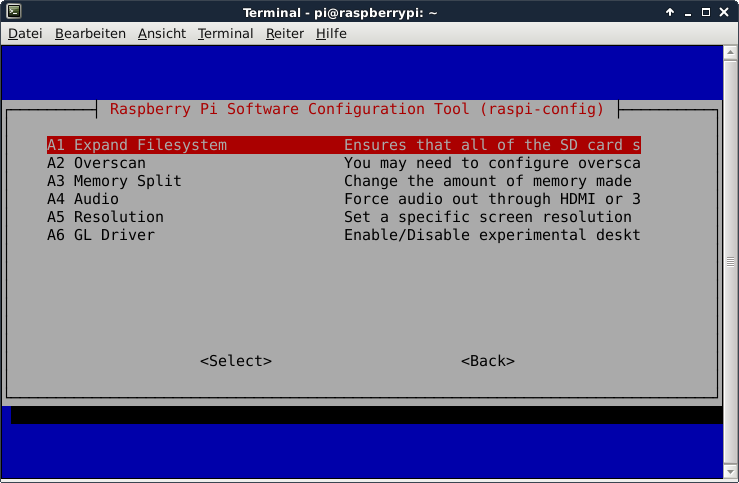

$ sudo raspi-config

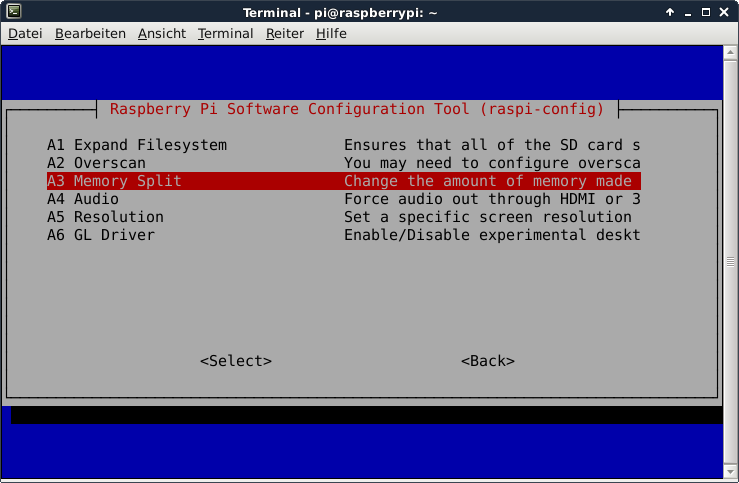

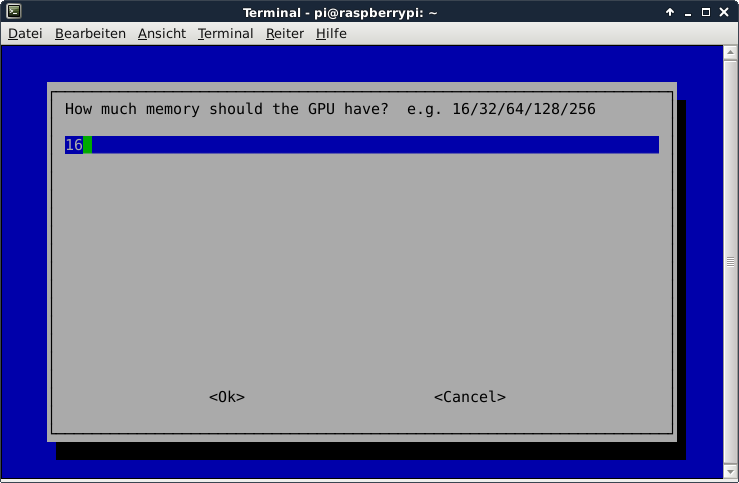

Reducing the GPU memory share is not required for running the Scality S3 server, but the Raspberry Pi 3 has just 1 GB of main memory, so there is no need to waste 48 MB of it.

A part of the main memory (just 1 GB) is assigned to the GPU. A pure server does not need a GPU at all. The share can be specified via the raspi-config tool. The minimum value is 16 MB, which is a better choice for a server than the default value (64 MB).

After the new value is specified and after a reboot, the new value should be visible:

# vcgencmd get_mem gpu

gpu=16M
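The raspi-config tool stores this value as a gpu_mem line in /boot/config.txt, so the setting can presumably also be scripted. The following is a minimal sketch under that assumption; set_gpu_mem is a hypothetical helper name, and the file path is passed as an argument so the function can be tried on a copy first.

```shell
# set_gpu_mem FILE MEGABYTES
# Hypothetical helper: replaces an existing gpu_mem line in FILE
# or appends one if the file has none.
set_gpu_mem() {
    config="$1"
    value="$2"
    if grep -q '^gpu_mem=' "$config"; then
        # Rewrite via a temporary file to stay portable across sed variants.
        sed "s/^gpu_mem=.*/gpu_mem=$value/" "$config" > "$config.tmp" &&
            mv "$config.tmp" "$config"
    else
        echo "gpu_mem=$value" >> "$config"
    fi
}
```

Run as root, a call such as set_gpu_mem /boot/config.txt 16 followed by a reboot should have the same effect as the menu entry.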

Setting the time zone is not required for running Scality S3, but it is always useful to configure the operating system properly.

$ sudo dpkg-reconfigure tzdata

$ cat /etc/timezone

Europe/Berlin

Time synchronization is not required for running Scality S3, but it is always useful to have the correct time on a computer.

$ sudo apt-get update && sudo apt-get install -y ntp ntpdate

Now the time should be synchronized with several NTP servers.

$ ntpq -p

remote refid st t when poll reach delay offset jitter

==============================================================================

*time3.hs-augsbu 129.69.1.153 2 u - 128 377 31.891 7.194 1.053

-hotel.zq1.de 235.106.237.243 3 u 113 128 377 38.442 0.272 0.896

+bandersnatch.ro 213.239.154.12 3 u 75 128 377 28.497 6.777 3.761

-ntp.janetzki.eu 46.4.77.168 3 u 90 128 377 35.747 -0.316 27.235

+fritz.box 194.25.134.196 3 u 62 128 377 0.368 4.667 0.674
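In this output, the peer marked with * is the one currently selected for synchronization, and the offset column is given in milliseconds. As a small sketch (the function name selected_peer is made up for this example), awk can extract both values:

```shell
# selected_peer: reads `ntpq -p` output on stdin and prints the name and
# offset (ms) of the peer marked with '*'. Prints nothing if no peer
# has been selected yet.
selected_peer() {
    awk '/^\*/ { print substr($1, 2), $9 }'
}
```

It can be used as ntpq -p | selected_peer. With the output shown above, it would print time3.hs-augsbu 7.194.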

Check the time and date:

# date -R

Sat, 01 Apr 2017 14:57:33 +0200

Some of the following packages are not required for the installation of Scality S3, but are just nice to have.

$ sudo apt-get install -y curl htop joe nmap git python build-essential

Install Node.js and npm from the NodeSource repository.

$ curl -sL https://deb.nodesource.com/setup_6.x | sudo -E bash -

$ sudo apt-get install -y nodejs

$ npm --version

3.10.10

$ nodejs --version

v6.10.2
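If the installation is scripted, the version string can be checked automatically. This is only a sketch: node_major is a hypothetical helper, and the threshold of 6 simply mirrors the 6.x line installed in this tutorial, not a documented requirement.

```shell
# node_major VERSION
# Hypothetical helper: prints the major version number from a string
# such as "v6.10.2".
node_major() {
    echo "$1" | sed 's/^v\([0-9][0-9]*\)\..*/\1/'
}
```

A test such as [ "$(node_major "$(nodejs --version)")" -ge 6 ] then succeeds only if the installed major version is 6 or newer.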

1 GB of main memory is not enough to run Scality S3. To solve this problem, a swap partition of at least 1 GB or a swap file is required. These commands create a 2 GB swap file.

$ sudo mkdir -p /var/cache/swap

$ sudo fallocate -l 2G /var/cache/swap/swap0

$ sudo chmod 0600 /var/cache/swap/swap0

$ sudo mkswap /var/cache/swap/swap0

$ sudo swapon /var/cache/swap/swap0

To activate the swap file automatically at boot time, insert this line into the file /etc/fstab:

/var/cache/swap/swap0 none swap sw 0 0
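When the setup is scripted or repeated, the fstab line should be added only once. A minimal sketch of an idempotent append follows; the helper name add_swap_entry is hypothetical, and the file is passed as an argument so the function can be tried on a copy of /etc/fstab first.

```shell
# add_swap_entry FILE
# Hypothetical helper: appends the swap file entry from this tutorial to FILE
# unless exactly this line is already present.
add_swap_entry() {
    fstab="$1"
    entry="/var/cache/swap/swap0 none swap sw 0 0"
    grep -qxF "$entry" "$fstab" || echo "$entry" >> "$fstab"
}
```

Run as root, add_swap_entry /etc/fstab can be called repeatedly without creating duplicates. Afterwards, free -m should show about 2 GB in the swap row.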

$ cd ~ && git clone https://github.com/scality/S3.git

$ cd ~/S3/

$ npm install

$ npm start

In the s3cmd configuration file (by default ~/.s3cfg) on your computer, just these lines need to be modified:

access_key = accessKey1

host_base = <the_ip_of_your_raspberry>:8000

host_bucket = <the_ip_of_your_raspberry>:8000

secret_key = verySecretKey1

use_https = False
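Instead of editing an existing configuration, a separate minimal file can be generated and passed to s3cmd with its -c option. This is a sketch only: write_s3cfg is a hypothetical helper, the IP address in the usage note is a placeholder, and it assumes that s3cmd falls back to its defaults for every option not listed.

```shell
# write_s3cfg IP FILE
# Hypothetical helper: writes a minimal s3cmd configuration for a Scality S3
# server listening on port 8000, using the default keys from this tutorial.
write_s3cfg() {
    ip="$1"
    file="$2"
    cat > "$file" <<EOF
[default]
access_key = accessKey1
secret_key = verySecretKey1
host_base = $ip:8000
host_bucket = $ip:8000
use_https = False
EOF
    # The file contains credentials, so restrict access to the owner.
    chmod 600 "$file"
}
```

For example, write_s3cfg 192.168.1.50 ~/s3cfg-pi creates the file, and s3cmd -c ~/s3cfg-pi ls then uses it without touching ~/.s3cfg.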

Now s3cmd should work properly with the Scality S3 service.

$ s3cmd mb s3://testbucket

Bucket 's3://testbucket/' created

$ s3cmd ls

2017-05-02 07:40 s3://testbucket

$ s3cmd put /etc/hostname s3://testbucket

upload: '/etc/hostname' -> 's3://testbucket/hostname' [1 of 1]

6 of 6 100% in 0s 62.34 B/s done

$ s3cmd ls s3://testbucket

2017-05-02 07:41 6 s3://testbucket/hostname

$ s3cmd get s3://testbucket/hostname /tmp/

download: 's3://testbucket/hostname' -> '/tmp/hostname' [1 of 1]

6 of 6 100% in 0s 126.77 B/s done

$ s3cmd del s3://testbucket/hostname

delete: 's3://testbucket/hostname'

$ s3cmd rb s3://testbucket

Bucket 's3://testbucket/' removed

The user access key and secret access key can be specified in the file ~/S3/conf/authdata.json.

The user access key and secret access key can also be specified via the environment variables SCALITY_ACCESS_KEY_ID and SCALITY_SECRET_ACCESS_KEY. If these are set, anything in the file authdata.json will be ignored.
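A short example of the environment variable approach, using the same key values as in the s3cmd settings of this tutorial (replace them with your own values for anything beyond a test setup):

```shell
# Keys as used in the s3cmd settings of this tutorial; replace with your own.
export SCALITY_ACCESS_KEY_ID="accessKey1"
export SCALITY_SECRET_ACCESS_KEY="verySecretKey1"
# Then start the server from the same shell so it inherits the variables:
#   cd ~/S3 && npm start
```

The variables must be exported in the shell that starts the server, otherwise the server process does not see them.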

In the configuration file ~/S3/config.json, the port number is specified. The default port number of Scality S3 is 8000.
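The configured port can be read back from the file, for instance before setting host_base in s3cmd. The following is a minimal sketch, assuming that the key is named "port" and stands on its own line in config.json (an assumption, not confirmed by this tutorial); config_port is a hypothetical helper.

```shell
# config_port FILE
# Hypothetical helper: prints the first numeric "port" value found in FILE.
# Assumes the key is spelled "port" and sits on its own line.
config_port() {
    sed -n 's/^[[:space:]]*"port"[[:space:]]*:[[:space:]]*\([0-9][0-9]*\).*/\1/p' "$1" |
        head -n 1
}
```

A call such as config_port ~/S3/config.json prints the port number if the file follows that layout and prints nothing otherwise.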

The metadata will be saved in the localMetadata directory and the object data will be saved in the localData directory within the ~/S3 directory. According to the tutorial here, it is possible to specify the path of the object data and metadata directories with the environment variables S3DATAPATH and S3METADATAPATH. Example:

mkdir -m 700 $(pwd)/myFavoriteDataPath

mkdir -m 700 $(pwd)/myFavoriteMetadataPath

export S3DATAPATH="$(pwd)/myFavoriteDataPath"

export S3METADATAPATH="$(pwd)/myFavoriteMetadataPath"

npm start
