
Service proxy causing high CPU usage with ~2.7K services #962

Comments


Just for the sake of reference, our kube-router configuration is pretty standard:

```yaml
apiVersion: extensions/v1beta1
kind: DaemonSet
metadata:
  labels:
    k8s-app: kube-router
    tier: node
  name: kube-router
  namespace: kube-system
spec:
  revisionHistoryLimit: 10
  selector:
    matchLabels:
      k8s-app: kube-router
      tier: node
  template:
    metadata:
      creationTimestamp: null
      labels:
        k8s-app: kube-router
        tier: node
    spec:
      containers:
      - args:
        - --run-router=true
        - --run-firewall=true
        - --run-service-proxy=true
        - --kubeconfig=/var/lib/kube-router/kubeconfig
        - --bgp-graceful-restart
        - -v=1
        - --metrics-port=12013
        env:
        - name: NODE_NAME
          valueFrom:
            fieldRef:
              apiVersion: v1
              fieldPath: spec.nodeName
        - name: KUBE_ROUTER_CNI_CONF_FILE
          value: /etc/cni/net.d/10-kuberouter.conflist
        image: docker.io/cloudnativelabs/kube-router:v0.4.0
        imagePullPolicy: IfNotPresent
        livenessProbe:
          failureThreshold: 3
          httpGet:
            path: /healthz
            port: 20244
            scheme: HTTP
          initialDelaySeconds: 10
          periodSeconds: 3
          successThreshold: 1
          timeoutSeconds: 1
        name: kube-router
        resources:
          requests:
            cpu: 100m
            memory: 250Mi
        securityContext:
          privileged: true
        terminationMessagePath: /dev/termination-log
        terminationMessagePolicy: File
        volumeMounts:
        - mountPath: /lib/modules
          name: lib-modules
          readOnly: true
        - mountPath: /etc/cni/net.d
          name: cni-conf-dir
        - mountPath: /var/lib/kube-router/kubeconfig
          name: kubeconfig
          readOnly: true
      dnsPolicy: ClusterFirst
      hostNetwork: true
      initContainers:
      - command:
        - /bin/sh
        - -c
        - set -e -x; if [ ! -f /etc/cni/net.d/10-kuberouter.conflist ]; then if [
          -f /etc/cni/net.d/*.conf ]; then rm -f /etc/cni/net.d/*.conf; fi; TMP=/etc/cni/net.d/.tmp-kuberouter-cfg;
          cp /etc/kube-router/cni-conf.json ${TMP}; mv ${TMP} /etc/cni/net.d/10-kuberouter.conflist;
          fi
        image: busybox
        imagePullPolicy: Always
        name: install-cni
        resources: {}
        terminationMessagePath: /dev/termination-log
        terminationMessagePolicy: File
        volumeMounts:
        - mountPath: /etc/cni/net.d
          name: cni-conf-dir
        - mountPath: /etc/kube-router
          name: kube-router-cfg
      priorityClassName: system-node-critical
      restartPolicy: Always
      schedulerName: default-scheduler
      securityContext: {}
      serviceAccount: kube-router
      serviceAccountName: kube-router
      terminationGracePeriodSeconds: 30
      tolerations:
      - key: CriticalAddonsOnly
        operator: Exists
      - effect: NoSchedule
        operator: Exists
      volumes:
      - hostPath:
          path: /lib/modules
          type: ""
        name: lib-modules
      - hostPath:
          path: /etc/cni/net.d
          type: ""
        name: cni-conf-dir
      - configMap:
          defaultMode: 420
          name: kube-router-cfg
        name: kube-router-cfg
      - hostPath:
          path: /var/lib/kube-router/kubeconfig
          type: ""
        name: kubeconfig
  templateGeneration: 25
  updateStrategy:
    rollingUpdate:
      maxUnavailable: 1
    type: RollingUpdate
```

I see you're running kube-router 0.4.0. Have you tried 1.0.0 or 1.0.1?

I can’t test kube-router v1 in production, but testing locally, v1.0.1 appears to have the same behavior. Here’s how to reproduce it locally, btw. Steps to reproduce (with minikube):

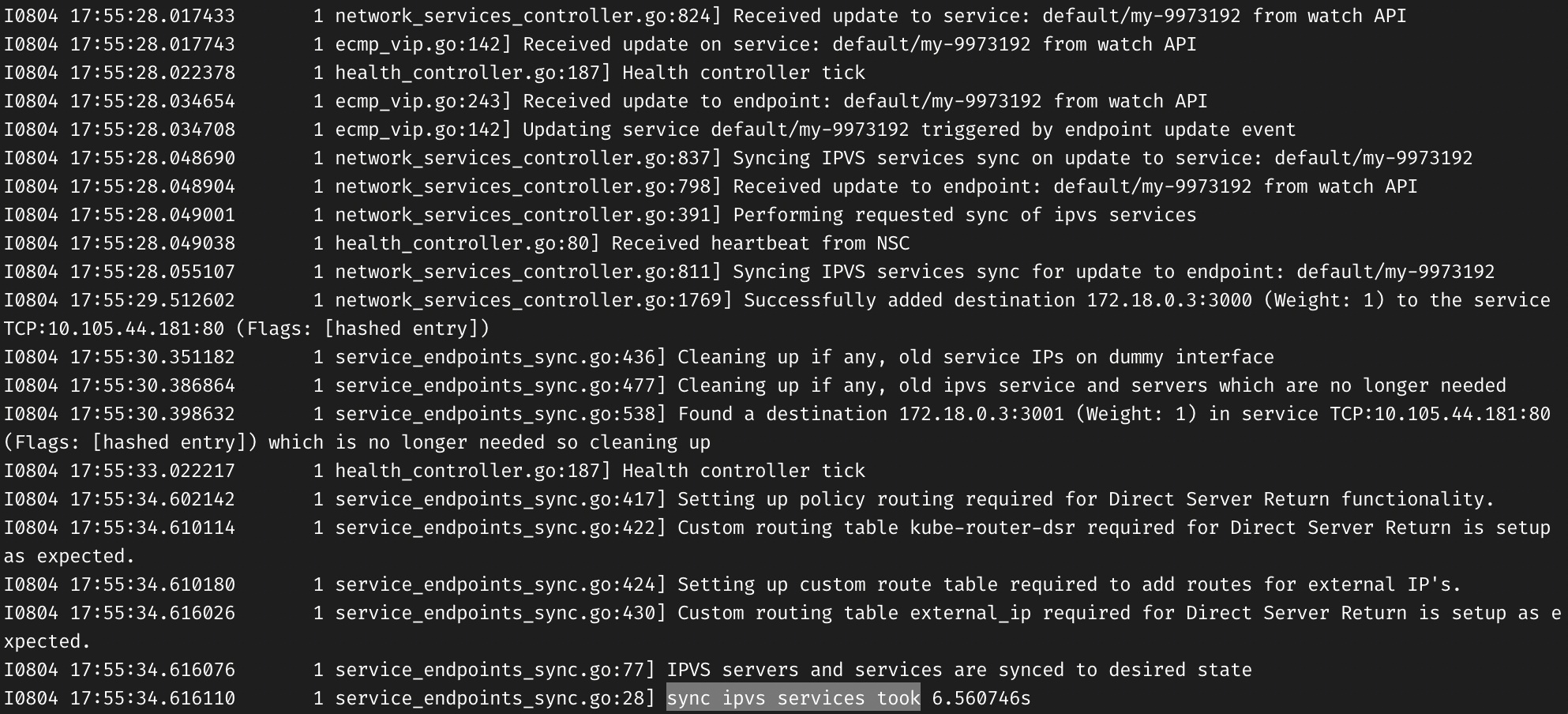

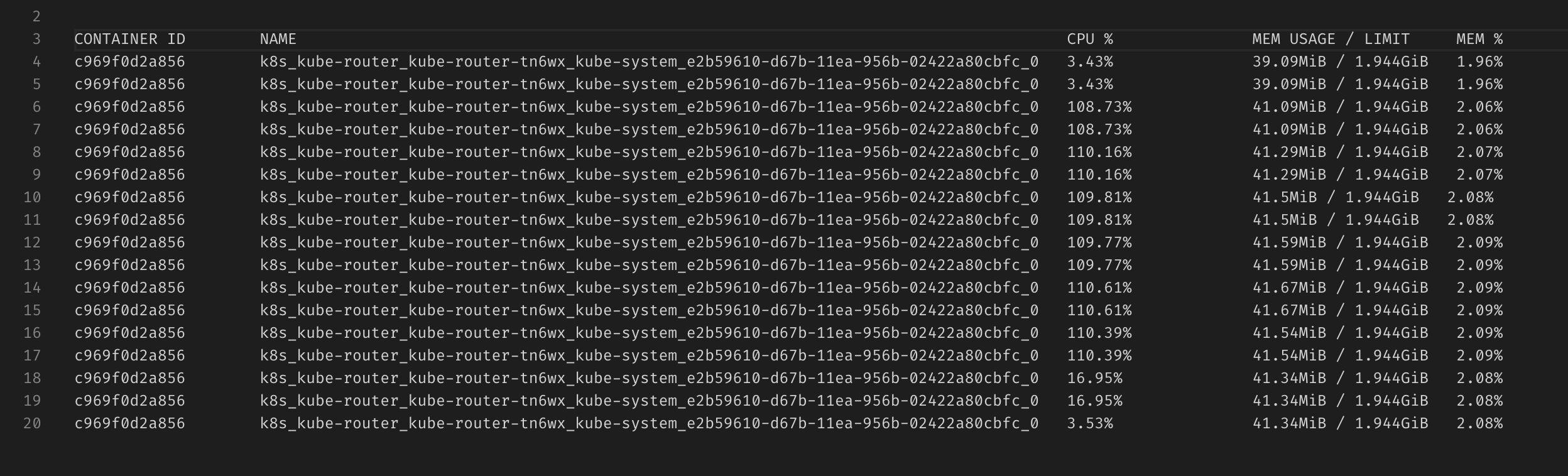

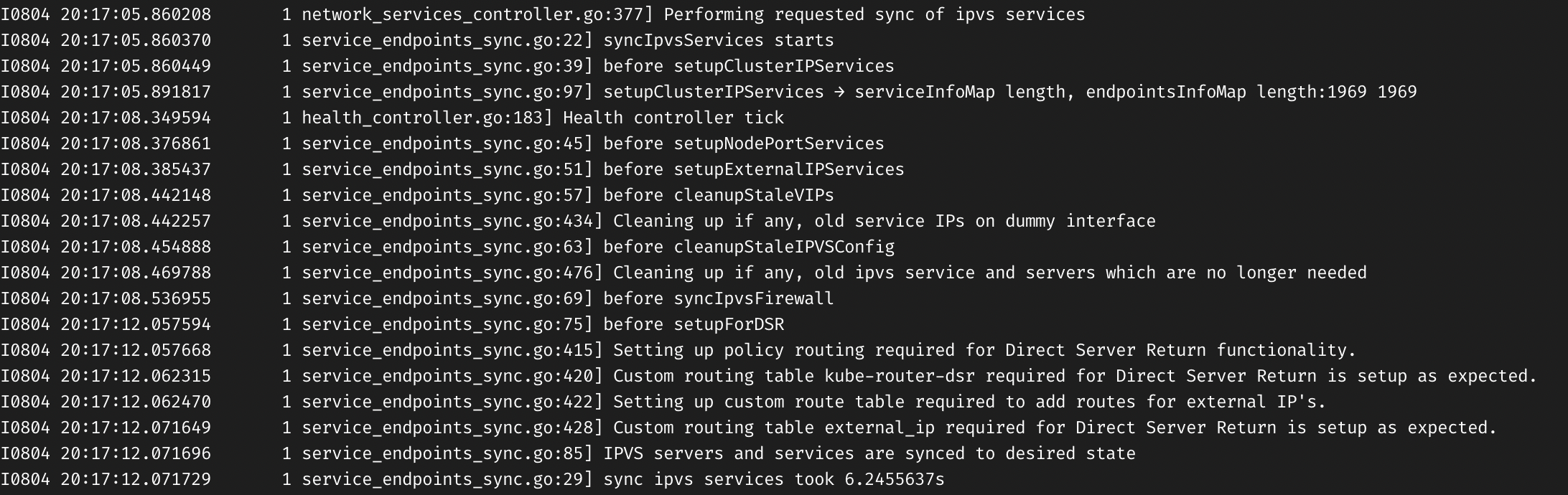

Observed behavior: The picture is similar for both … IPVS resync takes ~6 seconds: … The CPU usage (tracked by …

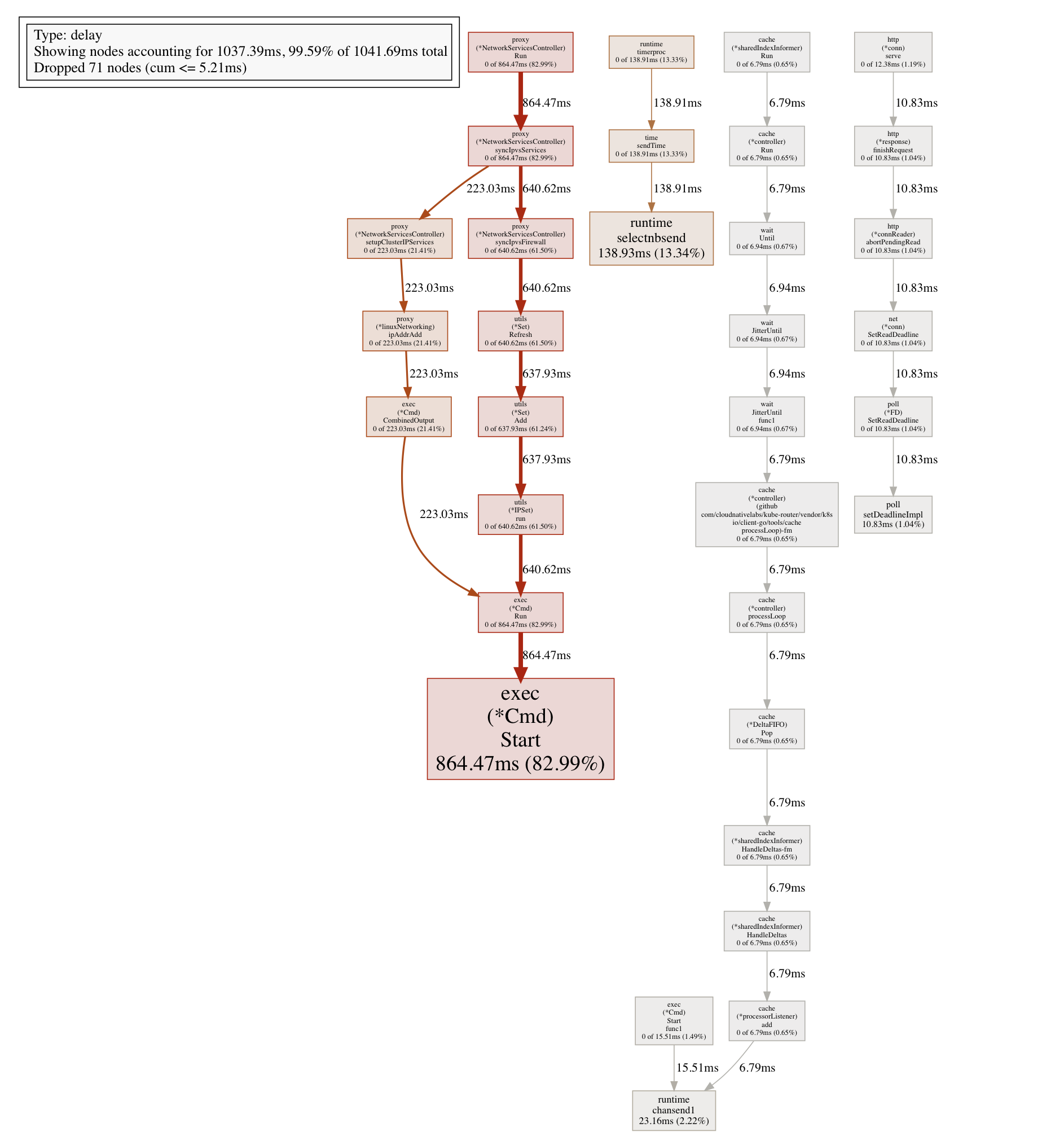

While I’m trying to figure out how to debug/profile …, the slowest functions appear to be …

Not sure if this helps, but here’s a 15-second CPU trace I captured through …

Looking further into this. Here’s the scheduler latency profile generated from the above trace. Most of the time is spent in external … Initially, I assumed I might be misunderstanding the profile (I don’t have any Go debugging experience). However, it actually seems correct. I’ve tried adding a bunch of log points into kube-router/pkg/controllers/proxy/network_services_controller.go (lines 704 to 714 in e35dc9d) …

Each … TBH, I’m not sure how to proceed from here. Perhaps a good solution would be to avoid full resyncs on each change and instead carefully patch the existing networking rules. But I don’t know what drawbacks this might have, and I’m not experienced enough with Go/networking to make such a change.
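The "patch instead of full resync" idea boils down to computing a diff between what is currently programmed on the node and what should be there, then applying only that difference. Below is a sketch with a hypothetical `diffEntries` helper over plain string entries (for example, ipset members or IPVS service keys). It is not kube-router code and ignores ordering and partial-failure handling.

```go
package main

import (
	"fmt"
	"sort"
)

// diffEntries returns the entries that must be added (desired but not
// current) and removed (current but not desired). Duplicates are ignored.
// Hypothetical helper for illustration.
func diffEntries(current, desired []string) (toAdd, toDel []string) {
	cur := make(map[string]struct{}, len(current))
	for _, e := range current {
		cur[e] = struct{}{}
	}
	want := make(map[string]struct{}, len(desired))
	for _, e := range desired {
		if _, seen := want[e]; seen {
			continue // skip duplicate desired entries
		}
		want[e] = struct{}{}
		if _, ok := cur[e]; !ok {
			toAdd = append(toAdd, e)
		}
	}
	for e := range cur {
		if _, ok := want[e]; !ok {
			toDel = append(toDel, e)
		}
	}
	// Map iteration order is random; sort for deterministic output.
	sort.Strings(toAdd)
	sort.Strings(toDel)
	return toAdd, toDel
}

func main() {
	current := []string{"10.0.0.1", "10.0.0.2", "10.0.0.3"}
	desired := []string{"10.0.0.2", "10.0.0.3", "10.0.0.4"}
	add, del := diffEntries(current, desired)
	fmt.Println("add:", add, "del:", del)
}
```

Running `main` prints `add: [10.0.0.4] del: [10.0.0.1]`: one endpoint change turns into two small operations instead of a rebuild of all ~2.7K services. The catch is that the "current" view must be trustworthy, which is why full resyncs are the simpler, more robust default.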


Wait, I might’ve found an easy win!!

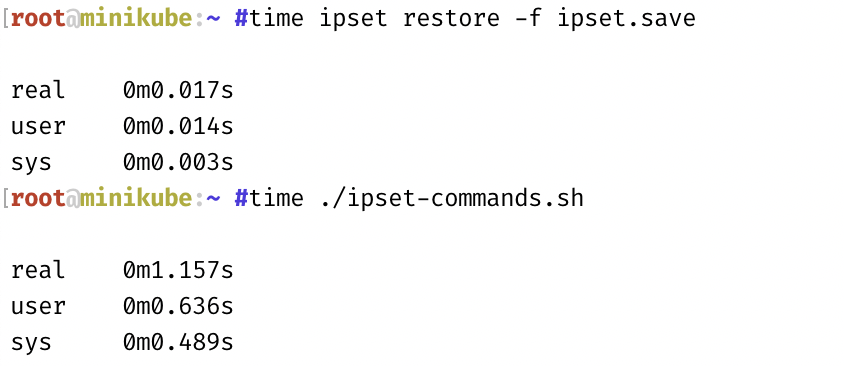

The list of rules looks exactly like the list of … I measured both approaches with the same list of rules (but different sets, of course), …

That seems like a reasonable approach to me. It is very similar to the approach we intend to take with iptables/nftables for the NPC in 1.2. As a matter of fact, it looks like some of the functionality already exists in … Do you feel comfortable submitting a PR for this work? If so, we could probably put it in our 1.2 release, which is going to focus on performance. We're currently working on fixing bugs in 1.0 and addressing legacy Go and Go library versions for 1.1.

This commit updates kube-router to use `ipset restore` instead of calling `ipset add` multiple times in a row. This significantly improves its performance when working with large sets of rules. Ref: cloudnativelabs#962
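A rough sketch of the `ipset restore` approach the commit message describes: render all entries for a set into one script and feed it to a single `ipset restore` process on stdin, instead of forking `ipset add` once per entry. The set names, the `TMP-` prefix, and the fill-a-temporary-set-then-`swap` pattern are assumptions for illustration; PR #964 may structure this differently.

```go
package main

import (
	"fmt"
	"os/exec"
	"strings"
)

// buildRestoreScript renders an `ipset restore` script that refills setName
// with entries. It fills a temporary set and swaps it in, so the live set is
// never seen half-populated. The "TMP-" naming is hypothetical; ipset set
// names are limited to 31 characters, so the prefixed name must fit.
func buildRestoreScript(setName, setType string, entries []string) string {
	tmp := "TMP-" + setName
	var b strings.Builder
	fmt.Fprintf(&b, "create %s %s\n", setName, setType)
	fmt.Fprintf(&b, "create %s %s\n", tmp, setType)
	fmt.Fprintf(&b, "flush %s\n", tmp)
	for _, e := range entries {
		fmt.Fprintf(&b, "add %s %s\n", tmp, e)
	}
	fmt.Fprintf(&b, "swap %s %s\n", tmp, setName)
	fmt.Fprintf(&b, "destroy %s\n", tmp)
	return b.String()
}

// applyRestore runs the whole script in one `ipset restore` process.
// -exist ignores "set already exists" / "entry already added" errors.
func applyRestore(script string) error {
	cmd := exec.Command("ipset", "restore", "-exist")
	cmd.Stdin = strings.NewReader(script)
	if out, err := cmd.CombinedOutput(); err != nil {
		return fmt.Errorf("ipset restore: %v: %s", err, out)
	}
	return nil
}

func main() {
	// Only prints the script; applyRestore needs root and the ipset binary.
	s := buildRestoreScript("svc-ips", "hash:ip", []string{"10.0.0.1", "10.0.0.2"})
	fmt.Print(s)
}
```

The cost difference comes from process creation: N entries cost N fork/exec round trips with `ipset add`, but one with `ipset restore`, regardless of N.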

Ha, I was just finishing the PR for this. Here you go: #964

Now, a question about … In 725bff6, @murali-reddy mentioned that he’s calling … Locally, if I’m replacing:

```diff
- out, err := exec.Command("ip", "route", "replace", "local", ip, "dev", KubeDummyIf, "table", "local", "proto", "kernel", "scope", "host", "src",
-     NodeIP.String(), "table", "local").CombinedOutput()
+ err = netlink.RouteReplace(&netlink.Route{
+     Dst:       &net.IPNet{IP: net.ParseIP(ip), Mask: net.IPv4Mask(255, 255, 255, 255)},
+     LinkIndex: iface.Attrs().Index,
+     Table:     254,
+     Protocol:  0,
+     Scope:     netlink.SCOPE_HOST,
+     Src:       NodeIP,
+ })
```

the second bottleneck gets resolved. With this change, for me, … Questions: …

Okay, I think I have answers to both questions. Here’s the second PR: #965

Hey,

We’re running a Kubernetes cluster with ~150 nodes and ~2.7K services. Each service typically matches one pod. Service endpoints are updated quite often (e.g., due to pod restarts).

Each time a service endpoint is updated, kube-router seems to perform a full resync of services. Due to the number of services, the resync takes ~16 seconds on an `m5a.large` EC2 instance. While doing the resync, kube-router aggressively consumes the CPU, which affects other pods running on that instance.

Is there any way to reduce the CPU usage or make service resyncs happen faster? The ultimate goal is to make sure kube-router doesn’t affect other pods from that node.

(CPU limits are not a solution, unfortunately. We don’t want to apply CPU limits to kube-router because that’d make IPVS resyncs longer, and with long resyncs, we’d start experiencing noticeable traffic issues. For example, if a service endpoint changes and it takes kube-router 1 minute to perform an IPVS resync, the traffic to that service will be blackholed or rejected for the full minute.)