Update to CUDA 11.4.1 #7257

Update to CUDA 11.4.1 (11.4.20210728):
* CUDA runtime version 11.4.108
* NVIDIA drivers version 470.57.02

Add support for GCC 11 and clang 12.

See https://docs.nvidia.com/cuda/cuda-toolkit-release-notes/index.html .

|

A new Pull Request was created by @fwyzard (Andrea Bocci) for branch IB/CMSSW_12_1_X/master. @cmsbuild, @smuzaffar, @mrodozov, @iarspider can you please review it and eventually sign? Thanks.

|

|

please test |

|

Now that all externals are fixed, it should be OK to upgrade the default CMSSW releases to CUDA 11.4. |

|

backport #7197 |

|

enable gpu |

|

please test for slc7_amd64_gcc10 |

|

please test for slc7_aarch64_gcc9 |

|

please test for slc7_ppc64le_gcc9 |

|

+1

Summary: https://cmssdt.cern.ch/SDT/jenkins-artifacts/pull-request-integration/PR-655e55/18155/summary.html

Comparison Summary:

|

|

please test |

|

-1

Failed Tests: UnitTests

The following merge commits were also included on top of IB + this PR after doing git cms-merge-topic:

You can see more details here:

Unit Tests

I found errors in the following unit tests:

---> test testFWCoreConcurrency had ERRORS
---> test testFWCoreUtilities had ERRORS
---> test TestFWCoreServicesDriver had ERRORS |

|

+1

Summary: https://cmssdt.cern.ch/SDT/jenkins-artifacts/pull-request-integration/PR-655e55/18179/summary.html

The following merge commits were also included on top of IB + this PR after doing git cms-merge-topic:

You can see more details here:

GPU Comparison Summary:

Comparison Summary

The workflows 140.53 have different files in step1_dasquery.log than the ones found in the baseline. You may want to check and retrigger the tests if necessary. You can check it in the "files" directory in the results of the comparisons.

Summary:

|

|

@smuzaffar could you point me to the logs of the jobs that failed to use the GPU? |

|

@fwyzard, no job failed. It is just that, due to the newer CUDA driver in CMS, our tests are not actually using the GPUs |

Are you sure they didn't use the GPU? For

If we don't use the GPU there should be a warning about it from the |

|

By the way, I forced the environment to use the system CUDA driver, and the GPU workflows worked fine. |

|

The expected behaviour when the NVIDIA drivers from CMSSW are newer than those from the system is that CMSSW uses its own copy of the drivers to run. |

Do you have the logs from these commands? |

|

No, the GPU condor node I was working on was destroyed a few minutes ago :-( I am testing it again. |

|

@fwyzard, logs of runTheMatrix for the GPU workflows are now available under https://muzaffar.web.cern.ch/gpu/ . Note that in this case we used the system CUDA driver. |

|

So, here is the log we expect when a GPU workflow is running on a GPU (from the

And here is the log we expect when the same configuration is run without a GPU:

The job should be successful in both cases - the only difference being the warning from the |

OK, so running with the system driver (v460) and CUDA 11.4 works out of the box. And I interpret the results of the PR test as showing that using the driver bundled with CMSSW also works. |

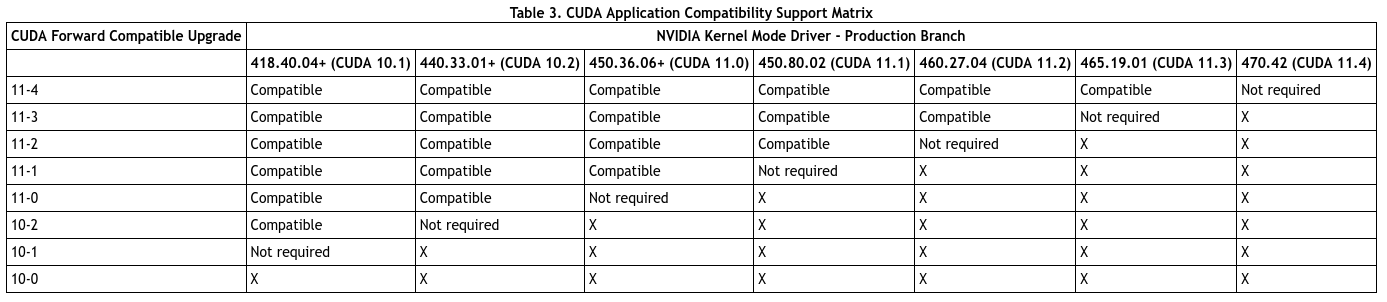

This matches the release notes, which say we should be able to run on any driver >= v450.80.02. |
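As a rough illustration of the requirement quoted above, the check boils down to comparing dotted driver versions component by component. This is only a sketch in Python: the helpers `parse_driver_version` and `meets_minimum` are hypothetical (not part of CMSSW or the CUDA toolkit), and `440.33.01` is an illustrative older version that does not come from this thread.

```python
# Hypothetical helpers for illustration; not part of CMSSW or the CUDA toolkit.
MIN_DRIVER = "450.80.02"  # minimum driver version quoted from the release notes above


def parse_driver_version(version: str) -> tuple:
    """Turn a dotted driver version such as '470.57.02' into a tuple of ints."""
    parts = [int(p) for p in version.strip().lstrip("v").split(".")]
    # Pad short versions such as '460' so that every tuple has three components.
    while len(parts) < 3:
        parts.append(0)
    return tuple(parts)


def meets_minimum(version: str, minimum: str = MIN_DRIVER) -> bool:
    """Return True if `version` is at least `minimum`."""
    return parse_driver_version(version) >= parse_driver_version(minimum)


if __name__ == "__main__":
    # 470.57.02 is the driver bundled by this PR; 440.33.01 is an assumed older driver.
    for v in ("470.57.02", "450.80.02", "440.33.01"):
        print(v, "ok" if meets_minimum(v) else "too old")
```

Comparing integer tuples rather than raw strings avoids lexicographic surprises with components of different widths.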

|

According to the compatibility guide, however, the compatibility drivers that we ship with CMSSW would be required if the system drivers are older, i.e. from CUDA 10.1 or 10.2: |

|

Thanks @fwyzard, there was nothing in the logs about GPU usage or a "device found" message, which is why I was thinking that maybe the GPU was not used. Running these workflows on a non-GPU system shows that so all looks good for this PR to go in |

|

+externals |

|

This pull request is fully signed and it will be integrated in one of the next IB/CMSSW_12_1_X/master IBs (tests are also fine). This pull request will now be reviewed by the release team before it's merged. @perrotta, @dpiparo, @qliphy (and backports should be raised in the release meeting by the corresponding L2) |

That's a good point. @makortel, maybe we should add a simple message from the CUDAService that confirms which GPUs and runtime are used?

? |
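To make the idea of such a confirmation message concrete, here is a minimal Python sketch of how one could be assembled. It relies on the fact that the CUDA runtime reports versions as a single integer, `1000 * major + 10 * minor` (so `11040` means 11.4). Everything else is an assumption for illustration: the function names, the message layout, and the device name `Tesla T4` are hypothetical, and this is not the actual CUDAService output.

```python
# Hypothetical sketch; the message layout is NOT the actual CUDAService printout.

def decode_cuda_version(encoded: int) -> str:
    """Decode a CUDA version integer (1000 * major + 10 * minor) into 'major.minor'."""
    major = encoded // 1000
    minor = (encoded % 1000) // 10
    return f"{major}.{minor}"


def format_cuda_summary(runtime: int, driver: int, devices: list) -> str:
    """Build a one-line summary of the CUDA runtime, driver support and visible GPUs."""
    if not devices:
        return "No CUDA devices found, running without a GPU"
    listing = ", ".join(f"[{i}] {name}" for i, name in enumerate(devices))
    return (
        f"CUDA runtime {decode_cuda_version(runtime)}, "
        f"driver supports up to CUDA {decode_cuda_version(driver)}, "
        f"{len(devices)} device(s): {listing}"
    )


if __name__ == "__main__":
    # 11040 is the encoding of CUDA 11.4; the device name is an assumed example.
    print(format_cuda_summary(11040, 11040, ["Tesla T4"]))
    print(format_cuda_summary(11040, 11040, []))
```

In a real job the integers and device names would come from the CUDA runtime API rather than being passed in by hand.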

|

Seems that printout like that would be useful. I'd go with |

|

We already have a more verbose |

|

Good point, I got confused by the order of that printout and the application of MessageLogger configuration (that suppresses INFO by default). Maybe the |

|

I actually managed to use |
