
Docker Engine Memory leak #178

Comments

Thanks for your report. Unfortunately, once memory has been touched by the Linux kernel within the VM, and has therefore become populated RAM in the hyperkit process (through the usual OS page-faulting mechanisms), there is no way for the guest kernel to indicate back to the hypervisor, and therefore to the host, that the RAM is free again, so those memory regions cannot be turned back into unallocated holes. However, since the RAM is unused in the guest, nothing in the VM should touch it, and therefore neither should the hyperkit process. I would expect it to eventually get swapped out to disk in favour of keeping actually useful/active data for other processes in RAM, just as it would for any large but idle process. It sounds like you are checking the virtual address size ( I'm afraid that compacting the
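The mechanism described above can be illustrated with a toy model. This is not hyperkit's code: `ToyHypervisorMemory` and its methods are hypothetical, and the model assumes a guest with no balloon driver or free-page reporting, plus a host that evicts least-recently-used pages under pressure. It shows both halves of the explanation: freeing memory inside the guest does not shrink the host-side resident size, but host memory pressure can still push idle pages out to swap.

```python
from dataclasses import dataclass, field


@dataclass
class ToyHypervisorMemory:
    """Hypothetical model of host-side accounting for a VM's RAM.

    Assumption: the guest has no balloon driver or free-page reporting,
    so the host never learns which guest pages are free.
    """
    page_size: int = 4096
    resident: set = field(default_factory=set)          # guest pages backed by host RAM
    swapped: set = field(default_factory=set)           # guest pages pushed out to host swap
    guest_free_pages: set = field(default_factory=set)  # what the guest kernel considers free
    last_access: dict = field(default_factory=dict)
    clock: int = 0

    def guest_touch(self, page: int) -> None:
        # A guest access faults the page into host RAM (or back in from swap).
        self.clock += 1
        self.guest_free_pages.discard(page)
        self.swapped.discard(page)
        self.resident.add(page)
        self.last_access[page] = self.clock

    def guest_free(self, page: int) -> None:
        # Only the guest's own bookkeeping changes; nothing is reported to
        # the host, so the page stays resident in the hypervisor process.
        self.guest_free_pages.add(page)

    def host_pressure(self, pages_needed: int) -> int:
        # The host evicts least recently used pages first. Pages the guest
        # freed and never touched again tend to be the oldest, so they go first.
        victims = sorted(self.resident, key=lambda p: self.last_access[p])[:pages_needed]
        for page in victims:
            self.resident.remove(page)
            self.swapped.add(page)
        return len(victims)

    def resident_bytes(self) -> int:
        return len(self.resident) * self.page_size


if __name__ == "__main__":
    mem = ToyHypervisorMemory()
    for p in range(1000):
        mem.guest_touch(p)       # guest workload touches 1000 pages
    for p in range(900):
        mem.guest_free(p)        # ...then frees 900 of them
    print(mem.resident_bytes())  # host still sees all 1000 pages resident
    mem.host_pressure(900)       # host runs short of memory
    print(mem.resident_bytes())  # only the 100 pages still in use remain resident
```

The point of the model is that "resident in the hyperkit process" and "in use by the guest" are different quantities; only host-side pressure, not guest-side freeing, brings the former down.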

|

Hey all. Sorry to revive a dead thread, but I would like this ticket to be reopened. After looking into it, the reserved memory for hyperkit process sits around 1.6-1.7 GB (based on my docker memory settings) even when trying to apply significant memory pressure. (This is of course without any running containers) Output of I wrote a simple python script to store crap into memory, then I spam opened as many google chrome tabs as I could (best way to apply memory pressure I could think of). Here is the script I used: import sys

import threading

from unittest import TestCase

items = []

class WastefulThread(threading.Thread):

def run(self):

for i in range(0, 10000000000):

items.append(i)

if i % 100000 == 0:

sys.stdout.write('\r' + str(i))

blah = items[0:i]

for item in items:

sys.stdout.write('\r{}'.format(item))

class WasteMemory(TestCase):

def test_waste(self):

for i in range(8):

thread = WastefulThread(name='Thread-{}'.format(i))

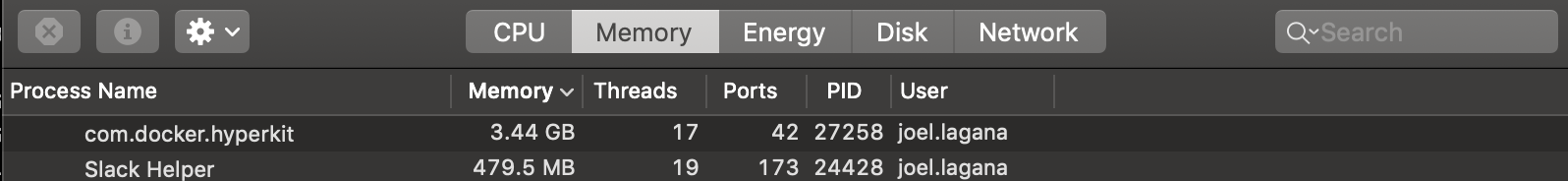

thread.start()and here is a gif showing the memory usage of the top four processes sorted by reserved memory (RES): Here is a higher quality gifv link. The memory of footprint of the Here is an image of the memory after the wasteful process got to run a little longer... A larger span of the memory and swap usage: TLDR: It appears that the |
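The script above appends to a shared list from eight threads with no upper bound, so how much memory it ends up using depends on how long it runs. If a fixed, repeatable amount of pressure is more useful, a bounded variant could look like the sketch below. `allocate_pressure` is a hypothetical helper, and the 4 KiB page size is an assumption.

```python
PAGE_SIZE = 4096  # assumption: 4 KiB pages; with larger pages, touching every 4 KiB still dirties each one


def allocate_pressure(total_mib: int, chunk_mib: int = 64) -> list:
    """Hypothetical helper: allocate total_mib MiB and dirty every page so it must be backed by RAM.

    Keep the returned list alive for as long as you want the pressure to last.
    """
    if chunk_mib <= 0:
        raise ValueError("chunk_mib must be positive")
    chunks = []
    remaining = total_mib
    while remaining > 0:
        size_mib = min(chunk_mib, remaining)
        buf = bytearray(size_mib * 1024 * 1024)
        # Depending on how the allocator zero-fills the buffer, its pages may not
        # be resident yet; writing one byte per page makes sure they are.
        for offset in range(0, len(buf), PAGE_SIZE):
            buf[offset] = 1
        chunks.append(buf)
        remaining -= size_mib
    return chunks
```

For example, `pressure = allocate_pressure(4096)` in an interactive Python session holds roughly 4 GiB until `pressure` is deleted, which gives a repeatable amount of pressure to compare against hyperkit's resident memory.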

I have also crawled around the related issues and haven't found anything that explains the reasons for, or points to a solution for, this issue. Please let me know if I can provide any more information. I believe the GIF I provided doesn't show full memory reservation (roughly 16 GB); however, during other tests I ran, it was almost 100% maxed out through similar means, and the RES portion of the

Having Docker idle in my toolbar puts it at 1.3 GB of RAM. I really don't understand how that is even possible. This is very shortly after booting, before using any containers.

Can this be reopened, please?

I just freshly started my computer with no containers running and found hyperkit using 9 GB of RAM. After restarting Docker, it was still using 3 GB. This needs to be reopened.

Yes, please reopen. With no containers running, ~5 GB of RAM is being used. This is significantly impacting the available resources and slowing down other things we need to run. Could this be a bug introduced in the latest version?

Same situation here: I just restarted my laptop, no containers, and com.docker.hyperkit is at ~2 GB of RAM.

Exact same situation here as well. Sitting at 2.61 GB without a single container running.

2.91 GB here, top of the chart for me :) It just doesn't seem right.

Something is going wrong. Reopen this ticket, damn. The explanation at the top is not plausible enough.

I'm seeing the same thing on PSA: If you're reporting the same issue, please attach the version of Docker you're running using

Same problem,

I'm at 5.05 GB now on a brand-new MacBook that has never even run a Docker image. This can't be right.

2 GB usage here. No containers running.

This is my call graph while Docker is completely idle (no containers running). Version info:

CPU load is far too high, as is RAM usage, and my MacBook is lagging :( This problem appeared after updating from High Sierra to Mojave. It's really annoying, folks. Do you have any thoughts or estimates on when the problem can be fixed?

@kyprifog Because, as ijc stated in the first comment, it is working as intended. I agree that it's pretty bad. The issue stems from hyperkit, and Macs aren't usually used as production servers, so they can't be bothered to make it any better than it is right now.

+1

Personally, I've migrated to maintaining dedicated start/stop scripts for most of the projects I work on. The majority of the containers I used to deal with were reasonably easy to replace with

It seems that this issue shows the attitude of the Docker team towards one of the most critical issues ever. Instead of working on a solution (even if that involves hyperkit, and possibly even Apple itself if the issue goes deeper, to the OS level), what does the Docker team do? Absolutely nothing. The problem has been unresolved for four years now.

Please reopen this issue.

Open the issue, please.

+1, also seeing this issue. After a fresh restart, with no containers running, Docker is using 4.36 GB of memory.

I installed the latest Docker Desktop on Mac (Catalina). I always quit Docker when I'm not using it; otherwise about 3 GB of RAM is consumed even with no containers.

+1, please reopen the issue.

+1, I would love it if the team focused on a fix. I'm seeing this on macOS Mojave: 3.5 GB of memory and 5.6% CPU, and I'm running just a single tiny container that is mostly sleeping.

Same. Please reopen this issue. I'm at 3.7 GB of memory on a simple WordPress application.

+1, please reopen the issue, it's a lot of memory ):

+1, please solve this.

No containers running and 2.43 GB of memory consumed. macOS 10.14.6.

TLDR: Docker Desktop for Mac initially consumes so much memory because it runs a VM in the background [1], and it appears to hold on to everything it ever consumes (but actually doesn't) because of an apparent bug in memory reporting in Mac OS X itself [2].

[1] Docker Desktop for Mac runs a Linux VM via https://github.com/moby/hyperkit, as documented at https://docs.docker.com/docker-for-mac/docker-toolbox/. This is how you can run Linux containers on a Mac: they are passed off to the daemon running inside that VM. That VM alone consumes 2 GB of memory by default, although this can be reduced to 1 GB in Preferences.

[2] https://docs.google.com/document/d/17ZiQC1Tp9iH320K-uqVLyiJmk4DHJ3c4zgQetJiKYQM/edit has extensive documentation and testing. Critically: "The system is able to recover memory from Docker Desktop and give it to other processes if the system is under memory pressure. This is not reflected in the headline memory figure in the Activity Monitor, but can be seen by looking at the Real Memory." and "Since the qemu and hyperkit processes show the same double-accounting problem in Activity Monitor despite them being completely separate codebases, we conclude the bug must be in macOS itself, probably the Hypervisor.framework. We have reported the bug to Apple as bug number 48714544."

Please fix. Help!

/sigh This "conclusion" again fails to address the concerns I posted here: #178 (comment). THE RESIDENT MEMORY IS STILL FAR TOO HIGH FOR AN IDLE PROCESS. It never dips below 1.6 GB in my testing, and this has NOTHING to do with the double-reporting bug. I'm aware of the distinction between virtual memory and resident memory, so a bug in VIRT reporting is good to know about, but I couldn't care less, as it isn't relevant. In my examples, RES memory is right there for show, and it doesn't go down AT ALL from first boot. Watch the GIF again. This "virtual memory is double reported" point is a distraction. It is NOT RELATED to the issue I'm reporting. We all know virtual memory doesn't matter here. But resident memory not being freed up? That's a problem. Honestly, I need to open a new issue with a link to this thread, since the issue I'm seeing isn't a memory leak. It's a failure to free memory when pressure is applied...
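To check the RES vs. VIRT distinction directly, without relying on Activity Monitor's headline figure, one option is to sample resident set size (RSS, shown as RES in `htop`) and virtual size (VSZ) from `ps`. This is a minimal sketch: it assumes `ps -axo pid=,rss=,vsz=,comm=` is available (it is on macOS and most Linux distributions, with both columns reported in KiB), and `parse_ps`, `find_processes` and `snapshot` are hypothetical helper names. Matching on `com.docker.hyperkit` assumes the process shows up under that name in `ps`, as it does in the Activity Monitor reports above.

```python
import subprocess
from typing import NamedTuple


class ProcMem(NamedTuple):
    pid: int
    rss_kib: int  # resident set size: pages currently held in physical RAM
    vsz_kib: int  # virtual size: all mapped address space, resident or not
    command: str


def parse_ps(output: str) -> list:
    """Parse lines produced by `ps -axo pid=,rss=,vsz=,comm=`."""
    procs = []
    for line in output.splitlines():
        parts = line.split(None, 3)  # the command may contain spaces, so split at most 3 times
        if len(parts) != 4 or not parts[0].isdigit():
            continue  # skip blank or header lines
        pid, rss, vsz, command = parts
        procs.append(ProcMem(int(pid), int(rss), int(vsz), command))
    return procs


def find_processes(procs: list, needle: str = "com.docker.hyperkit") -> list:
    return [p for p in procs if needle in p.command]


def snapshot(needle: str = "com.docker.hyperkit") -> list:
    # Runs the real `ps` binary; only meaningful on a host where Docker Desktop is running.
    out = subprocess.run(["ps", "-axo", "pid=,rss=,vsz=,comm="],
                         capture_output=True, text=True, check=True).stdout
    return find_processes(parse_ps(out), needle)
```

On a Mac running Docker Desktop, calling `snapshot()` repeatedly while generating memory pressure shows whether the hyperkit process's RSS actually drops, independently of its much larger VSZ.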

Are you considering the fact that a Linux VM, with a minimum of 1 GB of memory (and a current default of 2 GB) hard-allocated to it, is running? That's the [1] part of the message. Even if the VM isn't consuming its maximum, it's normal behavior for hypervisors in general to claim, and not release, the maximum configured for guest VMs. By default they don't have the OS-level integration with the guest that would be required to "know" how much memory is actually needed or consumed, and even if they did, dynamic allocation could be dangerous for stability, because a surge could send the host into swap, or worse, depending on how close to capacity it already was. When you are on Linux, the Docker daemon is "a process" because containers reuse the Linux kernel you're already running. On Mac and Windows that isn't possible, so it's more than a process: it's a Linux VM with a Docker process running inside it.

I'm facing the same issue.

I am facing the same issue. 2 GB memory usage right after starting up my machine.

I'm also facing this issue, almost 3 GB here!

Try sharing only specific project directories (for volumes) in Resources -> File Sharing. My com.docker.hyperkit process is using 1.86 GB of memory.

I'm facing the same issue too. No containers are running.

Actually, it seems I missed that part when I was originally replying to your comment. That would explain the constant resident memory readings I have seen. Ugh, that's a lot of overhead to have running constantly. It would be nice if the Docker daemon were smart enough to spin down this VM when no containers are running. Sure, it would result in slower startups, but it would make a nice configuration option: something like a "memory-conscious mode" that you can opt into, at the cost of a slightly longer spin-up for the first container you launch, because the hyperkit VM needs to boot. It could even have a configurable decay time before the VM gets spun back down (once the last running container is stopped). Anyway, just a thought. It would certainly make leaving the Docker daemon running in the background a lot easier for me.
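The opt-in mode proposed above amounts to a small idle timer: boot the VM when the first container starts, and stop it once no containers have been running for a configurable decay time. The sketch below models only that decision logic. `IdleVmController`, its callbacks and the `decay_seconds` parameter are hypothetical; Docker Desktop is not assumed to expose any such hooks, so `start_vm`/`stop_vm` stand in for whatever would actually boot or stop the VM.

```python
import time
from typing import Callable, Optional


class IdleVmController:
    """Hypothetical controller: boots the VM on demand, stops it after an idle decay period."""

    def __init__(self, start_vm: Callable[[], None], stop_vm: Callable[[], None],
                 decay_seconds: float, clock: Callable[[], float] = time.monotonic):
        self._start_vm = start_vm
        self._stop_vm = stop_vm
        self._decay = decay_seconds
        self._clock = clock
        self.vm_running = False
        self.running_containers = 0
        self._idle_since: Optional[float] = None  # when the last container stopped

    def container_started(self) -> None:
        if not self.vm_running:
            self._start_vm()  # slower first start: the VM has to boot
            self.vm_running = True
        self.running_containers += 1
        self._idle_since = None  # any running container cancels the idle timer

    def container_stopped(self) -> None:
        self.running_containers = max(0, self.running_containers - 1)
        if self.running_containers == 0:
            self._idle_since = self._clock()

    def tick(self) -> None:
        """Call periodically; stops the VM once it has been idle for decay_seconds."""
        if (self.vm_running and self.running_containers == 0
                and self._idle_since is not None
                and self._clock() - self._idle_since >= self._decay):
            self._stop_vm()
            self.vm_running = False
            self._idle_since = None
```

Injecting `clock` keeps the timer testable with a fake time source; a real integration would call `tick()` periodically and wire `container_started`/`container_stopped` to container lifecycle events.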

Closed issues are locked after 30 days of inactivity. If you have found a problem that seems similar to this, please open a new issue. Send feedback to Docker Community Slack channels #docker-for-mac or #docker-for-windows.

Expected behavior

After all Docker containers have been killed, the com.docker.hyperkit process should free its used memory and return to its initial state (50 MB).

Actual behavior

After all Docker containers have been killed, the com.docker.hyperkit process is still using 3.49 GB.

Information

Diagnostic ID: EB6AFE2E-34AA-4617-B849-D79863CDC40C

Docker for Mac: 1.12.0 (Build 10871)

macOS: Version 10.11.6 (Build 15G31)

[OK] docker-cli

[OK] app

[OK] moby-syslog

[OK] disk

[OK] virtualization

[OK] system

[OK] menubar

[OK] osxfs

[OK] db

[OK] slirp

[OK] moby-console

[OK] logs

[OK] vmnetd

[OK] env

[OK] moby

[OK] driver.amd64-linux

Steps to reproduce

1.- docker-compose up -d, using this docker-compose.yml:

```yaml
version: '2'
services:
  student:
    image: docker:dind
    ports:
      - "8000-8010"
    privileged: true
    volumes:
      - /tmp/docker-training:/docker-training
```

2.- docker-compose scale student=10

3.- docker-compose down

4.- docker ps -> no containers running, but com.docker.hyperkit is still consuming a lot of memory (3.49 GB)

Only restarting the Docker Engine virtual machine (which runs on hyperkit) frees the memory.
