
blockmap Forbidden - autoUpdater - private S3 bucket #4030

Comments

I can see somebody made a change to the blockmap headers here: I don't think it takes an S3 region into account, though. Is there some other way to configure this?

You have to add the region and channel name, @stuartcusackie.

@mvkanha I already have the region set in my builder config. Do I really need the channel? I have tried adding a channel and I still have the same problem. This is how I am doing it: And in my electron-main.js file: But I am getting the same problem: The region is still missing from the blockmap request.

I think you should add an endpoint to the configuration.
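A minimal sketch of the S3 publish settings discussed here (region, channel, endpoint), written as a JavaScript electron-builder config object. The bucket, region and path are assumptions taken from the URLs quoted in the issue, and which JavaScript config file names electron-builder picks up depends on your version. As far as I can tell, electron-builder derives the update URL from `endpoint` when it is set and otherwise falls back to the region-less `bucket.s3.amazonaws.com` host, which would match the blockmap URLs in the log; treat that as unverified.

```javascript
// Hypothetical electron-builder config; values mirror the URLs quoted in the issue.
const region = "eu-west-1";

const config = {
  publish: [
    {
      provider: "s3",
      bucket: "mybucket",
      region,
      // Key prefix the artifacts are uploaded under.
      path: "releases/electron/win",
      // Update channel; "latest" is electron-builder's default.
      channel: "latest",
      // Region-specific endpoint (assumption: used instead of the global s3.amazonaws.com host).
      endpoint: `https://s3.${region}.amazonaws.com`,
      acl: "private",
    },
  ],
};

module.exports = config;
```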

Obviously, if the bucket is private, electron-updater cannot access it.

@develar You actually can access a private bucket by updating the autoUpdater request headers using the aws4 signing package. Example (inspired by #2355): This works except for the problem with the region in the blockmap request. I will try what @mvkanha suggested and set an endpoint in my publish config...
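For context, aws4 implements AWS Signature Version 4. The sketch below is not the aws4 API: it hand-rolls a minimal SigV4 signer for S3 GET requests with Node's built-in `crypto` module, to show what goes into those headers. Both the object path and the region are part of the signed data, so headers signed for one object cannot be reused for another. The bucket, region, key and credentials are placeholders.

```javascript
const crypto = require("node:crypto");

const hmac = (key, data) => crypto.createHmac("sha256", key).update(data, "utf8").digest();
const sha256Hex = (data) => crypto.createHash("sha256").update(data, "utf8").digest("hex");

// RFC 3986 encoding as SigV4 expects (encodeURIComponent leaves !'()* unencoded).
const encodeRfc3986 = (s) =>
  encodeURIComponent(s).replace(/[!'()*]/g, (c) => "%" + c.charCodeAt(0).toString(16).toUpperCase());

// Signs a GET for s3://bucket/key and returns the URL plus the headers to send with it.
function signS3Get({ bucket, region, key, accessKeyId, secretAccessKey, now = new Date() }) {
  const host = `${bucket}.s3.${region}.amazonaws.com`;
  const canonicalUri = "/" + key.split("/").map(encodeRfc3986).join("/");
  const amzDate = now.toISOString().replace(/[:-]|\.\d{3}/g, ""); // e.g. 20240102T030405Z
  const dateStamp = amzDate.slice(0, 8);
  const payloadHash = sha256Hex(""); // a GET has an empty body

  // Header names are lowercase and sorted; each line ends with a newline.
  const canonicalHeaders =
    `host:${host}\n` + `x-amz-content-sha256:${payloadHash}\n` + `x-amz-date:${amzDate}\n`;
  const signedHeaders = "host;x-amz-content-sha256;x-amz-date";

  // The canonical request contains the path, so every object key gets its own signature.
  const canonicalRequest = ["GET", canonicalUri, "", canonicalHeaders, signedHeaders, payloadHash].join("\n");

  const scope = `${dateStamp}/${region}/s3/aws4_request`;
  const stringToSign = ["AWS4-HMAC-SHA256", amzDate, scope, sha256Hex(canonicalRequest)].join("\n");

  // Signing key chain: date -> region -> service -> "aws4_request".
  const kDate = hmac("AWS4" + secretAccessKey, dateStamp);
  const kSigning = hmac(hmac(hmac(kDate, region), "s3"), "aws4_request");
  const signature = crypto.createHmac("sha256", kSigning).update(stringToSign, "utf8").digest("hex");

  return {
    url: `https://${host}${canonicalUri}`,
    headers: {
      "x-amz-date": amzDate,
      "x-amz-content-sha256": payloadHash,
      Authorization:
        `AWS4-HMAC-SHA256 Credential=${accessKeyId}/${scope}, ` +
        `SignedHeaders=${signedHeaders}, Signature=${signature}`,
    },
  };
}
```

Signing `releases/electron/win/MyApp Setup 0.0.7.exe` and its `.blockmap` with the same timestamp produces different `Signature=` values, so headers computed for the installer are rejected for the blockmap requests.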

@stuartcusackie A PR will be accepted; it should be easy to find the error and fix it.

@develar Thanks. I will try to find where the region can be added in the autoUpdater code. @mvkanha Adding the endpoint and channel didn't work. I get an AWS error when I do that:

Maybe the region isn't the problem. I wonder if the blockmap requests need two more AWS signing requests (one for the old blockmap and one for the new one)...

Did you ever get this figured out, @stuartcusackie?

@justinwaite Unfortunately, I did not.

I have this problem as well. It happens even with differentialPackage set to false, so that no blockmaps are uploaded to S3. Is there a way to disable the requests for blockmaps?

I guess you are right. I checked the server log for the download events, and there are two requests, one each for the old and new blockmap files, and both are denied with "SignatureDoesNotMatch" (perhaps they reuse the signed headers we set in requestHeaders for the actual EXE, even though the path is not the same). I am not sure how to inject the correct headers for downloading the blockmaps.

I took a quick look at the code. This is where the requests for the blockmaps are made: Here's where it uses the headers:

Happy to review a PR on this if someone is willing to give it a stab 🙂
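The updater log quoted in the issue shows that each blockmap URL is the installer URL with `.blockmap` appended, once for the installed version and once for the new one. The hypothetical helper below reproduces those two URLs with the WHATWG `URL` class, which makes it easy to see the two extra object keys that any signing scheme (or presigning proxy) has to cover. electron-updater's own code may build them differently.

```javascript
// Hypothetical helper: the two blockmap URLs requested during a differential download.
function blockmapUrls(baseUrl, oldInstaller, newInstaller) {
  // new URL() percent-encodes spaces in the path as %20, as in the updater log.
  const toBlockmap = (file) => new URL(file, baseUrl).href + ".blockmap";
  return { old: toBlockmap(oldInstaller), new: toBlockmap(newInstaller) };
}

const urls = blockmapUrls(
  "https://mybucket.s3.amazonaws.com/releases/electron/win/",
  "MyApp Setup 0.0.6.exe",
  "MyApp Setup 0.0.7.exe",
);
console.log(urls.old);
console.log(urls.new);
```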

Facing the same issue. @stuartcusackie, were you able to make it work?

@bansari-electroscan I did not. I'm still using a public bucket.

Update

@ktc1016 were you able to make it work?

@leena-prabhat After reviewing my detailed logs, it looks like the 403 Forbidden error message was just being caught by my try-catch statements. Therefore, my solution did not fix the original issue, but I'm still able to use a private bucket for this. I'll update my comment to avoid further confusion.

Hey, has anyone got this working with any combination of settings? I tried multiple scenarios without success. I'm facing SignatureDoesNotMatch: "The request signature we calculated does not match the signature you provided. Check your key and signing method." This is even after setting the feed URL.

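It is not a solution to this problem but a workaround. We developed a Lambda function that returns a signed URL in the Location header; the autoUpdater automatically follows the redirect to the signed URL.

In the Electron app:

```javascript
const { autoUpdater } = require('electron-updater');

autoUpdater.setFeedURL({
  provider: 'generic',
  url: `https://my-lambda-function.com/`,
  useMultipleRangeRequest: false,
});

// API key to access the Lambda function via API Gateway (API_KEY is defined elsewhere)
autoUpdater.requestHeaders = { 'x-api-key': API_KEY };
```

In the Lambda function:

```javascript
exports.handler = async (event) => {
  // create a signedUrl based on the pathParameters
  // (createSignedUrl is a placeholder for your own signing code)
  const signedUrl = await createSignedUrl(event.pathParameters);
  return {
    statusCode: 302,
    headers: { Location: signedUrl },
  };
};
```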
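How `signedUrl` gets created is left open above. In a Lambda you would normally use the AWS SDK's presigner; purely as a dependency-free sketch, the code below builds a SigV4 query-string presigned GET URL with Node's `crypto` module and wraps it in a handler that answers with a 302. The bucket, region, key prefix, the `{proxy+}` route and the environment variable names are assumptions. Lambda execution roles hand out temporary credentials, so the session token is included when present.

```javascript
const crypto = require("node:crypto");

const hmac = (key, data) => crypto.createHmac("sha256", key).update(data, "utf8").digest();
const sha256Hex = (data) => crypto.createHash("sha256").update(data, "utf8").digest("hex");
const encodeRfc3986 = (s) =>
  encodeURIComponent(s).replace(/[!'()*]/g, (c) => "%" + c.charCodeAt(0).toString(16).toUpperCase());

// SigV4 query-string presigning for a GET of s3://bucket/key (virtual-hosted style).
function presignS3Get({ bucket, region, key, accessKeyId, secretAccessKey, sessionToken, expiresIn = 300, now = new Date() }) {
  const host = `${bucket}.s3.${region}.amazonaws.com`;
  const canonicalUri = "/" + key.split("/").map(encodeRfc3986).join("/");
  const amzDate = now.toISOString().replace(/[:-]|\.\d{3}/g, "");
  const scope = `${amzDate.slice(0, 8)}/${region}/s3/aws4_request`;

  const params = {
    "X-Amz-Algorithm": "AWS4-HMAC-SHA256",
    "X-Amz-Credential": `${accessKeyId}/${scope}`,
    "X-Amz-Date": amzDate,
    "X-Amz-Expires": String(expiresIn),
    "X-Amz-SignedHeaders": "host",
  };
  // Temporary credentials also need the session token in the signed query.
  if (sessionToken) params["X-Amz-Security-Token"] = sessionToken;

  // Query parameters are encoded and sorted by name before signing.
  const query = Object.keys(params)
    .sort()
    .map((k) => `${encodeRfc3986(k)}=${encodeRfc3986(params[k])}`)
    .join("&");

  const canonicalRequest = ["GET", canonicalUri, query, `host:${host}\n`, "host", "UNSIGNED-PAYLOAD"].join("\n");
  const stringToSign = ["AWS4-HMAC-SHA256", amzDate, scope, sha256Hex(canonicalRequest)].join("\n");
  const kDate = hmac("AWS4" + secretAccessKey, amzDate.slice(0, 8));
  const kSigning = hmac(hmac(hmac(kDate, region), "s3"), "aws4_request");
  const signature = crypto.createHmac("sha256", kSigning).update(stringToSign, "utf8").digest("hex");

  return `https://${host}${canonicalUri}?${query}&X-Amz-Signature=${signature}`;
}

// Hypothetical API Gateway proxy handler (route /{proxy+}, file name assumed to arrive decoded).
async function handler(event) {
  const key = `releases/electron/win/${event.pathParameters.proxy}`;
  const signedUrl = presignS3Get({
    bucket: "mybucket",
    region: "eu-west-1",
    key,
    accessKeyId: process.env.AWS_ACCESS_KEY_ID,
    secretAccessKey: process.env.AWS_SECRET_ACCESS_KEY,
    sessionToken: process.env.AWS_SESSION_TOKEN,
  });
  return { statusCode: 302, headers: { Location: signedUrl } };
}

module.exports = { presignS3Get, handler };
```

Because the signature travels in the query string and only `host` is signed, the updater's follow-up requests (including ones with a `Range` header) need no AWS headers of their own.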

I also had this issue during development. It's not a solution, but I ended up using aws-sdk to download my file whenever electron-updater found an updated version in my private S3 bucket. First, disable autoDownload: and the download looks something like this:
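A rough sketch of the glue for this approach: with `autoDownload` disabled, the `update-available` event hands you an `UpdateInfo` whose `files` entries carry `url`, `sha512` and `size`, which you can turn into an S3 key for your own aws-sdk download. The helper name, the prefix and the choice of the first `.exe` entry are assumptions.

```javascript
// Hypothetical helper for the "autoDownload = false + aws-sdk" approach.
// `info` is shaped like electron-updater's UpdateInfo: { version, files: [{ url, sha512, size }] }.
function installerObjectFor(info, prefix) {
  const file = info.files.find((f) => f.url.endsWith(".exe"));
  if (!file) throw new Error(`No .exe entry in update info for ${info.version}`);
  return {
    // File names in the update info are relative, so prepend the S3 key prefix.
    key: `${prefix.replace(/\/+$/, "")}/${file.url}`,
    sha512: file.sha512, // base64 SHA-512 to check the downloaded file against
    size: file.size,
  };
}

// Wiring in the Electron main process (not runnable outside Electron):
//   autoUpdater.autoDownload = false;
//   autoUpdater.on("update-available", (info) => {
//     const { key } = installerObjectFor(info, "releases/electron/win");
//     // ...download `key` with the AWS SDK...
//   });

module.exports = { installerObjectFor };
```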

Were you able to find out how to achieve this with a private S3 bucket? It's still not working for me. @stuartcusackie @develar @mayankvadia @justinwaite?

@rohanrk-tricon Did you find any solution to this? I tried public and private buckets and played around with the request options. Nothing works; it's always a signature mismatch error. :(

Not sure where it is failing, to be honest. Is the Electron distributable being signed or otherwise modified after electron-builder produces the app-update file, before it is uploaded to S3? That would change the file after the update hash has been generated.
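One way to test this hypothesis is to recompute the hash of the uploaded installer and compare it with the `sha512` value in `latest.yml`, which electron-builder writes as a base64-encoded SHA-512 digest. A minimal sketch with Node's `crypto` and `fs` modules; the file path and expected hash you pass in are up to you.

```javascript
const crypto = require("node:crypto");
const fs = require("node:fs");

// Base64 SHA-512 of a file, the same encoding as the sha512 fields in latest.yml.
function sha512Base64(filePath) {
  return new Promise((resolve, reject) => {
    const hash = crypto.createHash("sha512");
    fs.createReadStream(filePath)
      .on("error", reject)
      .on("data", (chunk) => hash.update(chunk))
      .on("end", () => resolve(hash.digest("base64")));
  });
}

// True when the file still matches the hash recorded at build time.
async function matchesUpdateInfo(filePath, expectedSha512) {
  return (await sha512Base64(filePath)) === expectedSha512;
}

module.exports = { sha512Base64, matchesUpdateInfo };
```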

@bansari-electroscan @mmaietta I was finally able to make it work. This is my AppUpdater class, which handles all auto-update events. getOptions() attaches the required authentication headers (accessKeyId and secretAccessKey) to each call to the S3 bucket. This is how I have set up my env file, which holds all the AWS info. You can see what the auto updater logs in this file: C:\Users\user\AppData\Roaming\Electron\logs\main.log. Note: It will be easier if you enter all the sensitive info directly in the code as strings until you have tested it; later you can move it to the env file.
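A minimal sketch of reading such env settings in the main process before wiring them into the updater. The `AWS_*` names follow the usual AWS conventions; `S3_BUCKET` is a hypothetical name, and how the env file gets into `process.env` is left open.

```javascript
// Hypothetical loader for the AWS settings kept in the env file.
const REQUIRED = ["AWS_ACCESS_KEY_ID", "AWS_SECRET_ACCESS_KEY", "AWS_REGION", "S3_BUCKET"];

function loadAwsConfig(env = process.env) {
  const missing = REQUIRED.filter((name) => !env[name]);
  if (missing.length > 0) {
    // Fail early with a clear message instead of an S3 error later on.
    throw new Error(`Missing environment variables: ${missing.join(", ")}`);
  }
  return {
    accessKeyId: env.AWS_ACCESS_KEY_ID,
    secretAccessKey: env.AWS_SECRET_ACCESS_KEY,
    region: env.AWS_REGION,
    bucket: env.S3_BUCKET,
  };
}

module.exports = { loadAwsConfig };
```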

@rohanrk-tricon Thanks for the great info, and sorry for jumping in. I am in a similar dilemma. Can you please also share the S3 bucket policy that you have set?

Also check whether you have enabled CORS (Cross-Origin Resource Sharing).

Hello @rohanrk-tricon, can you post your CORS config? I am getting this error: One question about the policy you just sent: are you attaching it to a user or setting it directly on the bucket? Directly on the bucket it says: And these are my logged options: Thanks in advance.

I am getting a 403 Forbidden error after following all these steps.

Any updates on this?

We are still facing the same problem with version 6.1.4.

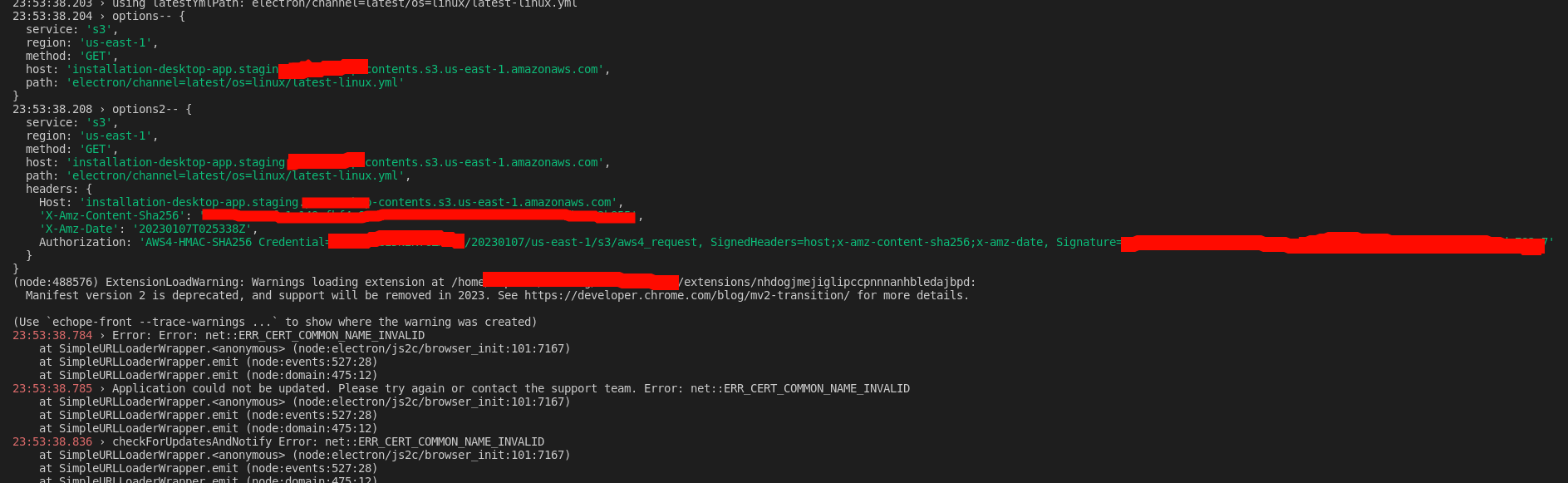

I am getting this error after removing public access from my S3 bucket through the AWS console. It also happens when I grant full public access to the bucket and use 'acl': 'private' in my publish config.

The actual Setup file seems to download fine.

It is a private repository, but I have given my AWS user FullS3Access and it still doesn't work.

Is this the problem?

I can see that the AWS region is missing from my autoUpdater blockmap requests:

Download block maps (old: "https://mybucket.s3.amazonaws.com/releases/electron/win/MyApp%20Setup%200.0.6.exe.blockmap", new: https://mybucket.s3.amazonaws.com/releases/electron/win/MyApp%20Setup%200.0.7.exe.blockmap)

The files are actually located at:

https://mybucket.s3-eu-west-1.amazonaws.com/releases/electron/win/MyApp+Setup+0.0.6.exe.blockmap

So the region is being added correctly to my autoUpdater.requestHeaders, but I have no control over the blockmap request URLs.

I am using aws4 to sign my request headers.
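A small sketch of turning the region-less host from the log into a region-qualified one with the `URL` class. It emits the dot form `bucket.s3.<region>.amazonaws.com`, whereas the URL above uses the older dash form `s3-eu-west-1`. The helper is hypothetical: electron-updater builds the blockmap URLs itself, so something like this only helps where you control the base URL, presumably for example a generic-provider feed URL from which the file and blockmap URLs are derived.

```javascript
// Hypothetical helper: add the region to a virtual-hosted S3 URL that lacks it.
function withRegion(s3Url, region) {
  const url = new URL(s3Url);
  const match = url.hostname.match(/^(.+)\.s3\.amazonaws\.com$/);
  if (!match) return s3Url; // already regional, path-style, or not S3: leave untouched
  url.hostname = `${match[1]}.s3.${region}.amazonaws.com`;
  return url.href;
}

console.log(
  withRegion(
    "https://mybucket.s3.amazonaws.com/releases/electron/win/MyApp%20Setup%200.0.7.exe.blockmap",
    "eu-west-1",
  ),
);
```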
