In this project I built a Structured Streaming pipeline with common technologies: Flask, Kafka, PySpark, Elasticsearch and Kibana.

It was good practice in getting these technologies to work together.


Producer:

A simple Flask app for ordering food and beverages.

For every order, the Flask app takes the submitted data and publishes it to a Kafka topic.
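As a rough illustration of the producer side, the sketch below shapes an order as JSON and hands it to a Kafka-style producer from a Flask route. It is not the project's actual code: the `/order` route, the `orders` topic, the field names (`item`, `quantity`, `ordered_at`) and the helper names are all assumptions made for the example.

```python
import json
from datetime import datetime, timezone


def build_order_event(item: str, quantity) -> dict:
    """Shape one order as a flat, JSON-friendly record (field names are hypothetical)."""
    return {
        "item": item,
        "quantity": int(quantity),
        "ordered_at": datetime.now(timezone.utc).isoformat(),
    }


def publish_order(producer, topic: str, event: dict) -> bytes:
    """Serialize the event and pass it to any object with a Kafka-style send()."""
    payload = json.dumps(event).encode("utf-8")
    producer.send(topic, value=payload)
    return payload


def create_app(producer, topic: str = "orders"):
    """Build a Flask app whose (hypothetical) /order route publishes each order."""
    # Flask is imported lazily so the helpers above work without it installed.
    from flask import Flask, jsonify, request

    app = Flask(__name__)

    @app.route("/order", methods=["POST"])
    def order():
        data = request.get_json(force=True)
        event = build_order_event(data["item"], data.get("quantity", 1))
        publish_order(producer, topic, event)
        return jsonify(event), 201

    return app
```

Assuming the kafka-python client, the app could be wired up with `create_app(KafkaProducer(bootstrap_servers="localhost:9092")).run(host="0.0.0.0", port=5000)`; the broker address is a guess, not taken from this project.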

Consumer:

A Spark session that reads the stream from the Kafka topic.

PySpark reads the stream from Kafka, does some data processing and writes the results to Elasticsearch.
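A minimal sketch of what such a consumer might look like is shown below. It assumes the Kafka messages are JSON with hypothetical fields `item`, `quantity` and `ordered_at`, that the Spark Kafka connector and the elasticsearch-hadoop connector are on the classpath, and that the topic name, index name, hosts and checkpoint path are placeholders rather than this project's real settings.

```python
def kafka_options(bootstrap_servers: str, topic: str) -> dict:
    """Options for Spark's Kafka source (values here are placeholders)."""
    return {
        "kafka.bootstrap.servers": bootstrap_servers,
        "subscribe": topic,
        "startingOffsets": "latest",
    }


def run_stream(bootstrap_servers="localhost:9092", topic="orders",
               es_nodes="localhost", es_index="orders"):
    """Read orders from Kafka, parse them, and stream them into Elasticsearch."""
    # PySpark is imported lazily so kafka_options() can be used without it.
    from pyspark.sql import SparkSession
    from pyspark.sql.functions import col, from_json, to_timestamp
    from pyspark.sql.types import (IntegerType, StringType, StructField,
                                   StructType)

    # Hypothetical order schema; adjust to whatever the producer really sends.
    schema = StructType([
        StructField("item", StringType()),
        StructField("quantity", IntegerType()),
        StructField("ordered_at", StringType()),
    ])

    spark = SparkSession.builder.appName("orders-stream").getOrCreate()

    # Kafka delivers the payload as bytes in the "value" column.
    raw = (spark.readStream.format("kafka")
           .options(**kafka_options(bootstrap_servers, topic))
           .load())

    # Example processing step: parse the JSON and convert the timestamp.
    orders = (raw
              .select(from_json(col("value").cast("string"), schema).alias("o"))
              .select("o.*")
              .withColumn("ordered_at", to_timestamp(col("ordered_at"))))

    # The "es" sink comes from the elasticsearch-hadoop connector.
    query = (orders.writeStream.format("es")
             .option("es.nodes", es_nodes)
             .option("checkpointLocation", "/tmp/orders-checkpoint")
             .start(es_index))
    query.awaitTermination()
```

Calling `run_stream()` blocks until the streaming query stops, so in practice it would be the entry point of the consumer container.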

Visualization:

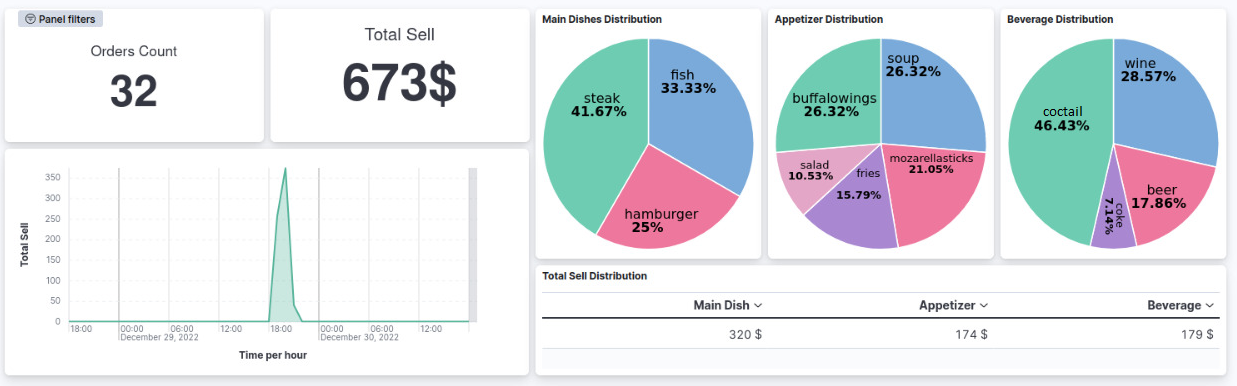

Kibana visualizes the Elasticsearch data.

Elasticsearch index and Kibana dashboard operations are handled by init_db.
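The contents of init_db are not shown here, so the following is only a guess at the index-creation half of such a step, using the standard library and Elasticsearch's create-index REST call. The `orders` index name, the field mapping, the `http://localhost:9200` address and all function names are assumptions; the Kibana dashboard part is left out.

```python
import json
import urllib.request


def index_mapping() -> dict:
    """Hypothetical mapping for an orders index."""
    return {
        "mappings": {
            "properties": {
                "item": {"type": "keyword"},
                "quantity": {"type": "integer"},
                "ordered_at": {"type": "date"},
            }
        }
    }


def build_create_index_request(es_url: str, index: str) -> urllib.request.Request:
    """Build the PUT /<index> request without sending it."""
    body = json.dumps(index_mapping()).encode("utf-8")
    return urllib.request.Request(
        url=f"{es_url.rstrip('/')}/{index}",
        data=body,
        method="PUT",
        headers={"Content-Type": "application/json"},
    )


def create_index(es_url: str = "http://localhost:9200", index: str = "orders") -> dict:
    """Send the request; raises urllib.error.HTTPError if the index already exists."""
    with urllib.request.urlopen(build_create_index_request(es_url, index)) as resp:
        return json.loads(resp.read())
```

Separating request construction from sending keeps the mapping easy to inspect before anything touches the cluster.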

Pipeline

Flask App

Kibana Dashboard

To start all services in the proper sequence, run:

$ sudo docker-compose up

Flask App: localhost:5000

Kibana Dashboard: localhost:5602