gym-adserver is an OpenAI Gym environment for reinforcement learning-based online advertising algorithms. gym-adserver is one of the official OpenAI environments.

The AdServer environment implements a typical multi-armed bandit scenario where an ad server agent must select the best advertisement (ad) to display on a web page.

Each time an ad is selected, it is counted as one impression. A displayed ad can be clicked (reward = 1) or not (reward = 0), depending on the interest of the user. The agent must maximize the overall click-through rate.

| Attribute | Value | Notes |
|---|---|---|
| Action Space | Discrete(n) | n is the number of ads to choose from |
| Observation Space | Box(0, +inf, (2, n)) | Number of impressions and clicks for each ad |
| Actions | [0...n-1] | Index of the selected ad |
| Rewards | 0, 1 | 1 = clicked, 0 = not clicked |
| Render Modes | 'human' | Displays the agent's performance graphically |
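As a rough illustration of the table above, the following sketch mimics a single environment step in plain Python, without the Gym API. The names `step`, `state`, and `click_probabilities` are hypothetical and not taken from the gym-adserver source; the nested list is only a stand-in for the Box(0, +inf, (2, n)) observation.

```python
import random

def step(state, action, click_probabilities, rng):
    """Simulate one impression: show ad `action`, return (state, reward)."""
    impressions, clicks = state
    # One selection counts as one impression for the chosen ad.
    impressions[action] += 1
    # The click is a Bernoulli draw with the ad's hidden probability.
    reward = 1 if rng.random() < click_probabilities[action] else 0
    clicks[action] += reward
    return state, reward

n = 3
state = [[0] * n, [0] * n]             # row 0: impressions, row 1: clicks
click_probabilities = [0.1, 0.5, 0.9]  # hypothetical values, hidden from the agent
rng = random.Random(0)

state, reward = step(state, action=2, click_probabilities=click_probabilities, rng=rng)
```

Valid actions here are the indices 0 to n-1, matching a Discrete(n) action space.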

You can download the source code and install the dependencies with:

git clone https://github.com/falox/gym-adserver

cd gym-adserver

pip install -e .

Alternatively, you can install gym-adserver as a pip package:

pip install gym-adserver

You can test the environment by running one of the built-in agents:

python gym_adserver/agents/ucb1_agent.py --num_ads 10 --impressions 10000

Or compare multiple agents (defined in compare_agents.py):

python gym_adserver/wrappers/compare_agents.py --num_ads 10 --impressions 10000

The environment will generate 10 ads (num_ads) with different click probabilities, and the agent, without prior knowledge, will learn to select the best-performing ones. The simulation will last 10000 iterations (impressions).

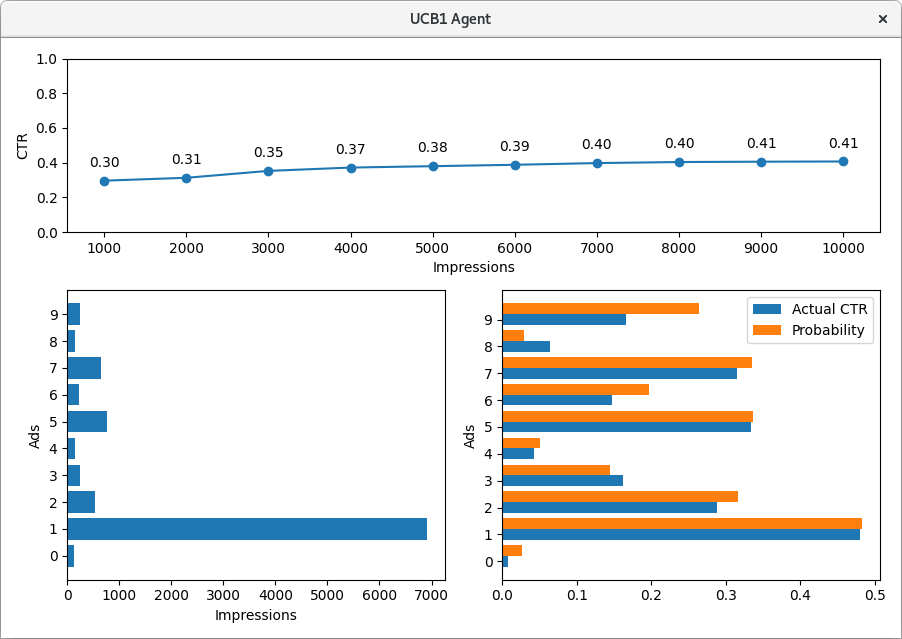

A window will open and show the agent's performance and the environment's state:

The overall CTR increases over time as the agent learns what the best actions are.

During initialization, the environment assigns each ad a "Probability" of being clicked. This probability is known only to the environment and is used to draw the rewards during the simulation. The "Actual CTR" is the click-through rate actually observed during the simulation; over time, it approaches the probability.
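The relationship between the hidden probability and the observed CTR can be checked with a small simulation. This is a minimal sketch that assumes rewards are independent Bernoulli draws; `actual_ctr` and the value 0.3 are hypothetical and not part of gym-adserver.

```python
import random

def actual_ctr(probability, impressions, rng):
    """Return clicks / impressions for an ad with a fixed click probability."""
    clicks = sum(1 for _ in range(impressions) if rng.random() < probability)
    return clicks / impressions

rng = random.Random(42)
probability = 0.3  # hypothetical hidden click probability
for impressions in (10, 1_000, 100_000):
    # With more impressions, the observed CTR tends to move closer to 0.3.
    print(impressions, round(actual_ctr(probability, impressions, rng), 4))
```

With only a handful of impressions the observed CTR can be far from the probability, which is why an agent has to keep exploring ads it has rarely shown.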

An effective agent will give most impressions to the best-performing ads.

The gym_adserver/agents directory contains a collection of agents implementing the following strategies:

- Random

- epsilon-Greedy

- Softmax

- UCB1

Each agent has different parameters to adjust and optimize its performance.
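To give an idea of what these parameters control, here is a minimal sketch of the three non-random selection rules in their textbook form. It is not the code of the built-in agents: the function names and the parameters `epsilon`, `temperature`, and `c` are assumptions chosen for illustration.

```python
import math
import random

def ctrs(impressions, clicks):
    """Estimated CTR per ad; ads never shown get 0.0."""
    return [c / i if i > 0 else 0.0 for i, c in zip(impressions, clicks)]

def epsilon_greedy(impressions, clicks, epsilon, rng):
    """Explore a random ad with probability epsilon, else exploit the best CTR."""
    if rng.random() < epsilon:
        return rng.randrange(len(impressions))
    estimates = ctrs(impressions, clicks)
    return estimates.index(max(estimates))

def softmax(impressions, clicks, temperature, rng):
    """Sample an ad with probability proportional to exp(CTR / temperature)."""
    estimates = ctrs(impressions, clicks)
    weights = [math.exp(e / temperature) for e in estimates]
    return rng.choices(range(len(estimates)), weights=weights)[0]

def ucb1(impressions, clicks, c=2.0):
    """Pick the ad with the highest CTR plus exploration bonus."""
    for ad, shown in enumerate(impressions):
        if shown == 0:
            return ad  # show every ad once before using the bound
    total = sum(impressions)
    scores = [clk / imp + math.sqrt(c * math.log(total) / imp)
              for imp, clk in zip(impressions, clicks)]
    return scores.index(max(scores))
```

A larger `epsilon` or `temperature` means more exploration; in UCB1 the bonus term shrinks as an ad accumulates impressions, so rarely shown ads are revisited automatically.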

You can use the built-in agents as a starting point to implement your own algorithm.
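As one possible shape for a custom algorithm, the sketch below implements Thompson sampling, a strategy that is not among the built-in agents listed above. The `act`/`update` interface and the `run` loop are hypothetical stand-ins written in plain Python; they do not reproduce the structure of the agents in gym_adserver/agents or the Gym environment loop.

```python
import random

class ThompsonSamplingAgent:
    """Hypothetical agent: keeps a Beta(clicks + 1, misses + 1) posterior per ad."""

    def __init__(self, num_ads, seed=None):
        self.clicks = [0] * num_ads
        self.misses = [0] * num_ads
        self.rng = random.Random(seed)

    def act(self):
        # Sample a plausible CTR for each ad and show the ad with the highest sample.
        samples = [self.rng.betavariate(c + 1, m + 1)
                   for c, m in zip(self.clicks, self.misses)]
        return samples.index(max(samples))

    def update(self, action, reward):
        # Record the outcome of the impression for the selected ad.
        if reward:
            self.clicks[action] += 1
        else:
            self.misses[action] += 1

def run(agent, click_probabilities, impressions, seed=0):
    """Stand-in for the environment loop; returns the overall CTR."""
    rng = random.Random(seed)
    total_clicks = 0
    for _ in range(impressions):
        action = agent.act()
        reward = 1 if rng.random() < click_probabilities[action] else 0
        agent.update(action, reward)
        total_clicks += reward
    return total_clicks / impressions

agent = ThompsonSamplingAgent(num_ads=3, seed=1)
overall_ctr = run(agent, click_probabilities=[0.05, 0.1, 0.6], impressions=5000)
```

Keeping the selection logic in `act` and the learning logic in `update` makes it easy to swap in a different strategy while reusing the same loop.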

You can run the unit tests for the environment with:

pytest -v

Next steps:

- Extend AdServer with the concepts of budget and bid

- Extend AdServer to change the ad performance over time (currently the CTR is constant)

- Implement Q-learning agents

- Implement a meta-agent that exploits multiple sub-agents with different algorithms

- Implement epsilon-Greedy variants