
Credentials are not retrieved from AWS IMDSv2 when running on EC2 #2840

Comments

This exact issue is affecting our Fluent Bit Kubernetes DaemonSet. We use IMDSv2 on our EKS nodes, and Fluent Bit is unable to communicate with our Elasticsearch cluster. As a result, we have had to turn off the AWS_Auth parameter. This should be a high priority, as it is a security risk for many users.

Check whether you are affected by the hop limit, and if so, increase it to 2.

This issue is stale because it has been open 30 days with no activity. Remove stale label or comment or this will be closed in 5 days.

Still an issue, and nothing to do with the hop limit. The code that handles IMDSv2 is simply not used when obtaining credentials.

This issue is stale because it has been open 30 days with no activity. Remove stale label or comment or this will be closed in 5 days.

Commenting to keep this issue alive, as I can't edit or remove labels.

Hi, I'm trying to run Fluent Bit on Windows Server 2016, and the CloudWatch plugins seem unable to authenticate using the instance profile.

Can we try this with 1.7.x and see if it still reproduces?

This issue is stale because it has been open 30 days with no activity. Remove stale label or comment or this will be closed in 5 days.

This issue was closed because it has been stalled for 5 days with no activity.

Sorry folks. This is a feature gap that I had meant to address late last year, but it got lost among too many other higher-priority feature requests and bugs. We will get someone to work on this soon.

This issue is stale because it has been open 30 days with no activity. Remove stale label or comment or this will be closed in 5 days.

This issue prevents us from using the S3 output plugin. The current workaround, enabling IMDSv1, is a security risk. Do you have an ETA for a fix?

@shalevutnik Yes, I understand this is very important, but I have been stretched very thin lately. Unfortunately, I can't promise an exact ETA yet, but I have assigned someone from my team to start work on this soon.

Hi 👋 I am currently working on adding IMDSv2 support to the AWS Fluent Bit plugins. Thank you for your patience. I will update you on the progress of this feature.

Hi @kdalporto. This is no longer the hop limit issue: you have the hop limit correctly set to 2, and from your error logs it looks like Fluent Bit is not having that problem. It seems the IMDS may be unreachable. Could you try to curl 169.254.169.254 on your instance? This should return a token.
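For reference, the token that curl should return comes from an HTTP `PUT` to `/latest/api/token` carrying a TTL header. A minimal Python sketch using only the standard library; the function names are hypothetical, and `fetch_token()` only succeeds from a machine (or pod) that can actually reach the metadata endpoint:

```python
import urllib.request

# Link-local address of the EC2 Instance Metadata Service.
IMDS_BASE = "http://169.254.169.254"
TOKEN_PATH = "/latest/api/token"
MAX_TTL_SECONDS = 21600  # IMDSv2 tokens can live for at most 6 hours


def build_token_request(ttl_seconds: int = MAX_TTL_SECONDS) -> urllib.request.Request:
    """Build (but do not send) the IMDSv2 session-token request."""
    if not 1 <= ttl_seconds <= MAX_TTL_SECONDS:
        raise ValueError("token TTL must be between 1 and 21600 seconds")
    return urllib.request.Request(
        IMDS_BASE + TOKEN_PATH,
        method="PUT",
        headers={"X-aws-ec2-metadata-token-ttl-seconds": str(ttl_seconds)},
    )


def fetch_token(ttl_seconds: int = MAX_TTL_SECONDS, timeout: float = 2.0) -> str:
    """Send the PUT and return the token; only works from inside EC2."""
    with urllib.request.urlopen(build_token_request(ttl_seconds), timeout=timeout) as resp:
        return resp.read().decode("utf-8")
```

If `fetch_token()` works on the node but times out from inside a pod, the request is likely being blocked or dropped on the pod's network path rather than rejected by IMDS.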

@matthewfala Yes, that returns a ~56-character token when run on the node instance where fluent-bit is running. I'm also able to manually upload objects to the destination bucket via the CLI. I currently have HttpTokens set to required.

That's strange. Your error message should only come up … The following curl should return a status code of 401, which indicates IMDSv2 availability. It's not clear why this request is failing (returning nothing) when a 401 is expected.
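The probe behind that check can be sketched in Python: send a request with a deliberately bogus token and look only at the status code. A 401 means IMDSv2 is answering; no response at all points at something blocking the network path to 169.254.169.254. The function names are hypothetical, and the classification mirrors the reasoning in this thread rather than any official API:

```python
import urllib.error
import urllib.request
from typing import Optional


def probe_imds(timeout: float = 2.0) -> Optional[int]:
    """GET / with a bogus token; return the HTTP status, or None if nothing answered."""
    req = urllib.request.Request(
        "http://169.254.169.254/",
        headers={"X-aws-ec2-metadata-token": "INVALID"},
    )
    try:
        with urllib.request.urlopen(req, timeout=timeout) as resp:
            return resp.status
    except urllib.error.HTTPError as err:  # 4xx/5xx still means IMDS answered
        return err.code
    except OSError:  # URLError, timeouts, refused connections: no answer at all
        return None


def classify_probe(status: Optional[int]) -> str:
    """Interpret the probe result the way this thread does."""
    if status is None:
        return "unreachable"  # something blocks the path to IMDS
    if status == 401:
        return "imdsv2-reachable"  # bogus token rejected, so IMDSv2 is answering
    return "unexpected"
```

Usage: `classify_probe(probe_imds())`, run from the same pod or namespace as Fluent Bit, since that is where reachability matters.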

That curl does indeed return a 401.

@matthewfala, I have a bit of an update. On two separate occasions I've noticed that logs were sent to S3, but I wasn't sure why. This morning I realized it had happened again: when I deleted my Kubernetes deployment, the logs were sent to S3. This is consistent with the documentation snippet: "If Fluent Bit is stopped suddenly it will try to send all data and complete all uploads before it shuts down." At the moment, I don't understand why it is able to send to S3 on shutdown but fails during normal operation. Update: I tried to reproduce the above scenario, but no logs were sent on shutdown this time.

I'm not sure what the issue could be. The process of obtaining credentials during shutdown is the same as during normal operation, unless the inputs (some of which have network activity) are interfering with our requests. One possibility is that the input collectors are shut down while the output plugins are still sending out logs. If an input plugin that interferes with our network requests is stopped, that might explain why we can reach IMDS on shutdown but not during normal operation. What input plugins are you using? Anything that might require networking, such as Prometheus? I have a custom image that adds IMDSv1 fallback support and some extra debug statements for IMDS problems. If you want to test it out and send the resulting logs, they could help us figure out what the problem is. Here's the image repo and tag:
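The image itself isn't shown here, but the fallback idea it describes can be sketched: try to get an IMDSv2 token first, and only if that fails, send the metadata request without a token (IMDSv1). A minimal Python sketch, assuming a hypothetical injected `transport(method, path, headers) -> (status, body)` callable so the logic can be exercised without an EC2 instance; this is not the custom image's actual code:

```python
from typing import Callable, Dict, Tuple

# Assumption: a hypothetical transport that performs one HTTP request against
# http://169.254.169.254 and returns (status_code, body); it raises OSError
# when no response arrives (e.g. IMDS blocked for the pod or hop limit too low).
Transport = Callable[[str, str, Dict[str, str]], Tuple[int, str]]

TOKEN_PATH = "/latest/api/token"


def get_metadata(path: str, transport: Transport, allow_v1_fallback: bool = True) -> str:
    """Fetch a metadata path, preferring IMDSv2 and optionally falling back to IMDSv1."""
    headers: Dict[str, str] = {}
    try:
        status, token = transport(
            "PUT", TOKEN_PATH, {"X-aws-ec2-metadata-token-ttl-seconds": "21600"}
        )
    except OSError:
        status, token = 0, ""  # treat "no response" like a failed token request
    if status == 200:
        headers["X-aws-ec2-metadata-token"] = token  # IMDSv2 session token
    elif not allow_v1_fallback:
        raise RuntimeError(f"IMDSv2 token request failed (status {status})")
    # Without the token header this is a plain IMDSv1 request.
    status, body = transport("GET", path, headers)
    if status != 200:
        raise RuntimeError(f"GET {path} failed with status {status}")
    return body
```

With `allow_v1_fallback=False` this behaves as an IMDSv2-only client. The fallback path only helps where IMDSv1 is still enabled; on instances with `HttpTokens` set to `required`, a tokenless request is rejected anyway.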

Yes, Prometheus is running in our deployment. I'll try that image and grab the logs.

Circling back on this... The issue was that the overall Kubernetes deployment repo we use specifically blocks pods in the namespace where fluent-bit is deployed from accessing IMDS, while access is still available at the instance level. I've confirmed that running fluent-bit in its own separate namespace allows it to send logs to S3 using IMDS.

@kdalporto Thanks for this post. I had forgotten about that; I believe it's recommended in EKS and ECS to block containers from accessing IMDS.

Awesome @kdalporto. I'm glad to hear that this is no longer an issue for you. Thank you for letting us know.

Hi, I'm using Fluent Bit v1.8.15 / aws-for-fluent-bit 2.23.4 on AWS EKS, and I'm still getting this in the logs:

`[2022/04/29 11:16:43] [error] [filter:aws:aws.3] Could not retrieve ec2 metadata from IMDS`

I'm using IMDSv2 with the correct hop limit: `curl -H "X-aws-ec2-metadata-token: INVALID" -v http://169.254.169.254/` reports 401. Sending logs to CloudWatch does work, though (at least for now), so I'm not sure whether this error message refers to IMDSv1 while IMDSv2 is working fine.

@Ahlaee Is there more log output than that? CC @matthewfala

@PettitWesley Everything else looks OK:

`Fluent Bit v1.8.15`

`[2022/04/29 10:33:43] [ info] [engine] started (pid=1)`

After that it creates the log streams, and then this repeats indefinitely:

`[2022/04/29 20:16:46] [error] [filter:aws:aws.3] Could not retrieve ec2 metadata from IMDS`

Logs are forwarded to CloudWatch nonetheless.

@Ahlaee Ah, this is the EC2 filter... and I think I might know the problem: you might have IMDS blocked for containers, which is a common best practice. Does your setup include any of this? https://aws.amazon.com/premiumsupport/knowledge-center/ecs-container-ec2-metadata/

@PettitWesley No, our setup runs on EKS, not ECS. I never configured anything related to networking modes when spinning up the cluster using the console. As far as I understand from the linked article, blocking IMDS is an intentional setting that must be included in the user data of the Amazon EC2 instance. I didn't include anything related to this, though it might be included implicitly by AWS during cluster creation.

@Ahlaee Hmm, you're right; this looks like the right link for EKS IMDS-related things: aws/containers-roadmap#1109

Yeah, so the filter is failing, but credential retrieval must be succeeding. Can you please share your full config? Also, since you have IMDSv2 required (tokens required), you need to set the `imds_version` option in the AWS filter: https://docs.fluentbit.io/manual/pipeline/filters/aws-metadata
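As a rough sketch of that setting, the hypothetical Python helper below renders a classic-format `[FILTER]` section for the `aws` filter with `imds_version` set explicitly. `Match *` is an assumed placeholder; any other filter options should come from the linked documentation:

```python
def render_aws_filter(match: str = "*", imds_version: str = "v2") -> str:
    """Render a classic-format [FILTER] section for Fluent Bit's aws filter.

    Hypothetical helper for illustration; `match` defaults to an assumed "*".
    """
    if imds_version not in ("v1", "v2"):
        raise ValueError("imds_version must be 'v1' or 'v2'")
    lines = [
        "[FILTER]",
        "    Name         aws",
        f"    Match        {match}",
        # v2 is needed when the instance requires tokens (HttpTokens=required)
        f"    imds_version {imds_version}",
    ]
    return "\n".join(lines) + "\n"


print(render_aws_filter())
```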

I was following the AWS documentation when setting up fluent-bit for EKS: https://docs.aws.amazon.com/AmazonCloudWatch/latest/monitoring/Container-Insights-setup-logs-FluentBit.html The fluent-bit.yaml linked there uses an older image that doesn't support IMDSv2, and it also sets the filter's imds_version to v1. Setting the image version to 2.23.4 and the filter to imds_version v2, as you described above, solved the issue for me. :) Thank you!

I concur with @Ahlaee: using EKS with the AWS-supplied docs for setting up Fluent Bit with CloudWatch, setting the image to 2.23.4 and imds_version to v2 solved the issue for me as well.

Just setting

Seems like, for me, what helped was just changing

In Oct 2022, the container image version in this manifest was new enough for IMDSv2, but the configuration still contained 'imds_version v1' in two places. Updating 'v1' to 'v2' in both places was enough to fix it.
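If the manifest is large, the two-place edit can be scripted. A minimal Python sketch; the helper is hypothetical and assumes each setting sits on its own line as `imds_version v1`, as described above. It rewrites every such line to `v2` and reports how many lines it changed:

```python
import re
from typing import Tuple

# Matches a whole line such as "    imds_version v1" (also capitalized variants).
# Only spaces/tabs are allowed around the value, so the pattern never
# swallows a newline or a neighbouring line.
_IMDS_V1_LINE = re.compile(
    r"^([ \t]*imds_version[ \t]+)v1[ \t]*$", re.IGNORECASE | re.MULTILINE
)


def upgrade_imds_version(config_text: str) -> Tuple[str, int]:
    """Return (updated_text, number_of_lines_changed)."""
    return _IMDS_V1_LINE.subn(r"\g<1>v2", config_text)
```

Checking that the returned count is 2 for the manifest above is a cheap way to confirm both occurrences were caught.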

imds_version causing logging errors ([2023/06/01 18:43:56] [error] [filter:aws:aws.3] Could not retrieve ec2 metadata from IMDS) fluent/fluent-bit#2840 (comment)

FWIW, I just re-deployed fluent-bit

https://repost.aws/knowledge-center/ecs-container-ec2-metadata A hop limit of 2 is required when using Docker/containers: https://awscli.amazonaws.com/v2/documentation/api/latest/reference/ec2/modify-instance-metadata-options.html
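The same settings can be applied through the SDK. A minimal Python sketch, assuming boto3 is available where it runs; the helper name is hypothetical, and it only builds the keyword arguments for `modify_instance_metadata_options` (the API behind the CLI command linked above), so it can be checked without AWS access:

```python
from typing import Dict, Union


def container_friendly_metadata_options(
    instance_id: str, hop_limit: int = 2
) -> Dict[str, Union[str, int]]:
    """Keyword arguments requiring IMDSv2 with a hop limit that containers can use."""
    # The EC2 API accepts hop limits from 1 to 64; a limit of 1 leaves no
    # room for the extra network hop a container adds.
    if not 1 <= hop_limit <= 64:
        raise ValueError("HttpPutResponseHopLimit must be between 1 and 64")
    return {
        "InstanceId": instance_id,
        "HttpTokens": "required",  # IMDSv2 only
        "HttpPutResponseHopLimit": hop_limit,
        "HttpEndpoint": "enabled",
    }
```

Usage (instance ID is a placeholder): `boto3.client("ec2").modify_instance_metadata_options(**container_friendly_metadata_options("i-0123456789abcdef0"))`.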

Bug Report

Describe the bug

Credentials are not retrieved from AWS Instance Metadata Service v2 (IMDSv2) when running on EC2. This causes plugins that require credentials to fail (e.g. `cloudwatch`).

To Reproduce

Steps to reproduce the problem:

Create an EC2 instance with metadata version 2 only selected in the Advanced Details section of the Configure Instance step. NB: I have used Amazon Linux 2 AMI (HVM), SSD Volume Type - ami-09f765d333a8ebb4b (64-bit x86) in this example.

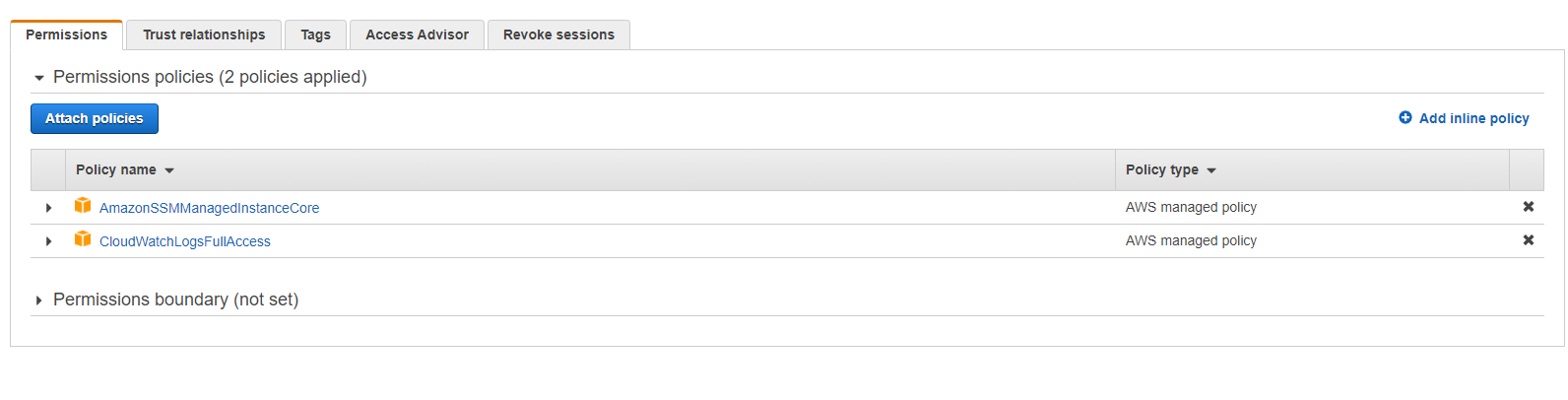

As I will be using the cloudwatch output to demonstrate this issue, I have assigned a very loose role to the instance. I also created and assigned a fully open security group to rule that out as a potential issue.

Install Fluent Bit as per https://docs.fluentbit.io/manual/installation/linux/amazon-linux

Apply the following configuration:

`sudo service td-agent-bit restart`

Expected behaviour

Expected Fluent Bit to obtain temporary credentials from the instance metadata service and forward the logs to CloudWatch.

Observed behaviour

Fluent Bit fails to obtain credentials, the CloudWatch stream is not created, and logs are not sent.

Your Environment

Filters and plugins: cloudwatch (output), systemd (input)

Additional context

Firstly, thank you for this great bit of software 👍

In an AWS environment, disabling IMDSv1 is considered a security best practice because of the vulnerability it creates. We would like to follow this recommendation but currently can't because of the issue described above.

I note that the AWS Metadata filter has an option that lets a user select between IMDSv1 and v2, and it appears that the code to retrieve the token and pass it in the metadata request header, as IMDSv2 requires, is already implemented in the codebase but is not used for obtaining credentials.
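For illustration, "using the token for credentials" means sending the token from `PUT /latest/api/token` as the `X-aws-ec2-metadata-token` header on both the request for the role name and the request for that role's credential document. A minimal Python sketch, assuming a hypothetical `get(path, headers)` callable that performs the HTTP GET and a token obtained beforehand; it is not Fluent Bit's C implementation:

```python
import json
from typing import Callable, Dict, NamedTuple

CREDS_PATH = "/latest/meta-data/iam/security-credentials/"

# Assumption: hypothetical getter performing a GET against
# http://169.254.169.254 and returning the body (raising on non-200).
Getter = Callable[[str, Dict[str, str]], str]


class Credentials(NamedTuple):
    access_key_id: str
    secret_access_key: str
    session_token: str
    expiration: str


def get_instance_credentials(get: Getter, token: str) -> Credentials:
    """Read the instance profile's temporary credentials using an IMDSv2 token."""
    headers = {"X-aws-ec2-metadata-token": token}  # required when HttpTokens=required
    roles = get(CREDS_PATH, headers).split()  # first call lists the role name
    if not roles:
        raise RuntimeError("no IAM role attached to this instance")
    doc = json.loads(get(CREDS_PATH + roles[0], headers))
    if doc.get("Code") != "Success":
        raise RuntimeError(f"IMDS returned Code={doc.get('Code')!r}")
    return Credentials(
        doc["AccessKeyId"], doc["SecretAccessKey"], doc["Token"], doc["Expiration"]
    )
```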

NB: The above configuration works fine and without issue when IMDSv1 is enabled on the EC2 instance.
