New issue

Have a question about this project? Sign up for a free GitHub account to open an issue and contact its maintainers and the community.

By clicking “Sign up for GitHub”, you agree to our terms of service and privacy statement. We’ll occasionally send you account related emails.

Already on GitHub? Sign in to your account

Calling self.start as an instance method for a Spider

#101

Comments

|

Why not build a plugin to solve your problem? Just like this https://github.com/python-ruia/ruia-motor |

|

I need to be able to run a function after the spider has ended - would that be possible with a plugin? |

|

Ruia has a hook function called after_start |

|

|

|

you can use @classmethod: for example: @classmethod

def download(cls):

cls.start()or make the Spider as a Downloader's property def __init__(self, *args, **kwargs):

self.target_spider = Spider |

|

I tried the I expect I'll have to use the property method which isn't too bad either. Thanks for pointing those out! |

from ruia import Spider

async def retry_func(request):

request.request_config["TIMEOUT"] = 10

class RetryDemo(Spider):

start_urls = ["http://httpbin.org/get"]

request_config = {"RETRIES": 3, "DELAY": 0, "TIMEOUT": 10, "RETRY_FUNC": retry_func}

@classmethod

def downloader(cls):

cls.start()

async def parse(self, response):

pages = ["http://httpbin.org/get?p=1", "http://httpbin.org/get?p=2"]

async for resp in self.multiple_request(pages):

yield self.parse_item(response=resp)

async def parse_item(self, response):

json_data = await response.json()

print(json_data)

if __name__ == "__main__":

RetryDemo.downloader() |

|

Awesome! That worked, even with a parent class: from ruia import Spider

import time

async def retry_func(request):

request.request_config["TIMEOUT"] = 10

class Downloader(Spider):

concurrency = 150

worker_numbers = 8

# RETRY_DELAY (secs) is time between retries

request_config = {

"RETRIES": 10,

"DELAY": 0,

"RETRY_DELAY": 0.1

}

@classmethod

def download(cls):

cls.start()

class RetryDemo(Downloader):

start_urls = ["http://httpbin.org/get"]

request_config = {"RETRIES": 3, "DELAY": 0, "TIMEOUT": 10, "RETRY_FUNC": retry_func}

async def parse(self, response):

pages = ["http://httpbin.org/get?p=1", "http://httpbin.org/get?p=2"]

async for resp in self.multiple_request(pages):

yield self.parse_item(response=resp)

async def parse_item(self, response):

json_data = await response.json()

print(json_data)

if __name__ == "__main__":

RetryDemo.download()My mistake was that I was trying to run my spider like this: spider = Spider()

spider.download()Which isn't possible as the Spider.download()It worked perfectly. Thank you very much for your help! |

|

This way of use is also a plugin |

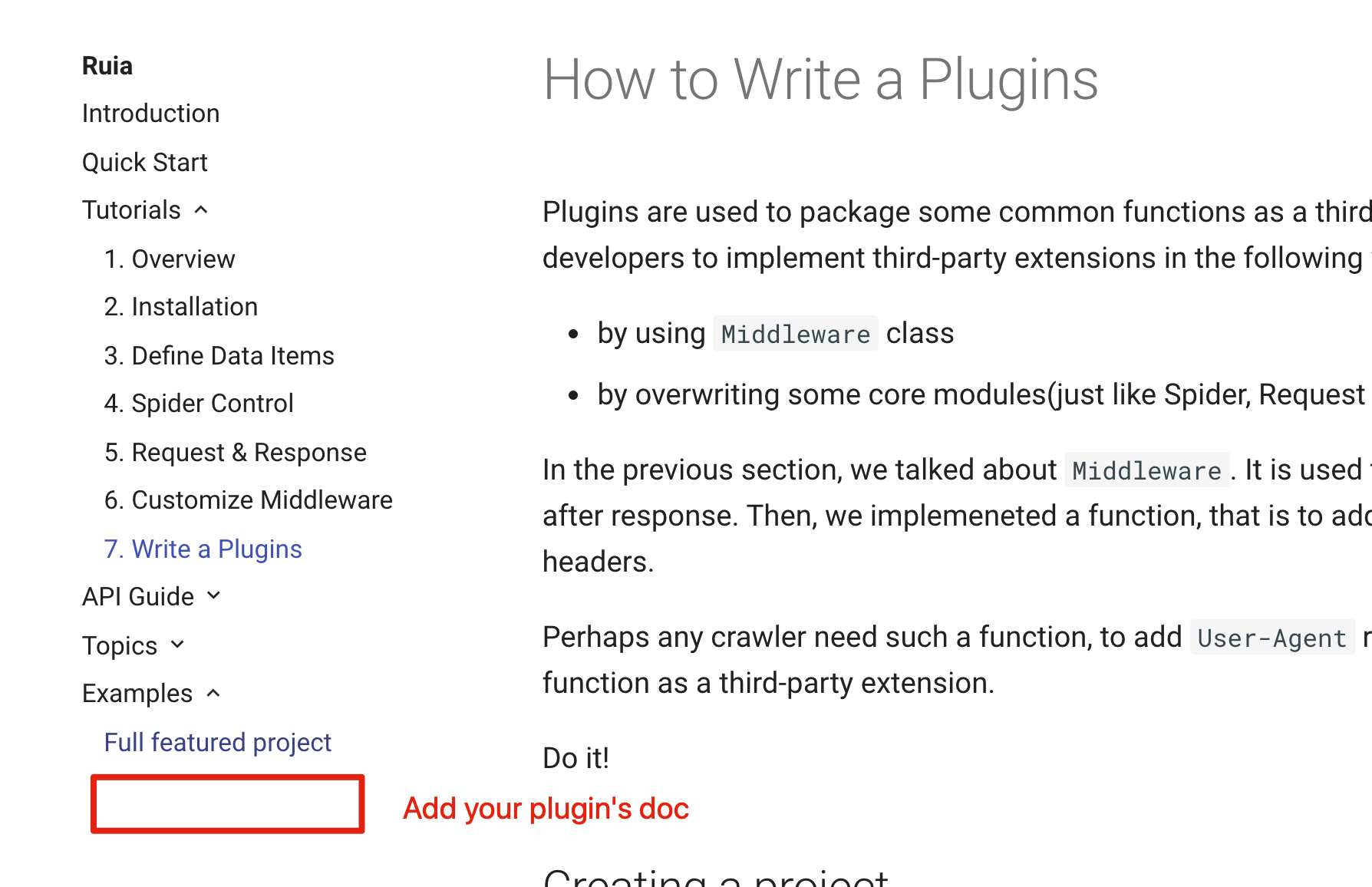

I see, I wasn't sure what you meant before! Thanks again! BTW, is there documentation for a plugin (in Chinese or English)? If not I may write some, if you would like. |

I have the following parent class which has reusable code for all the spiders in my project (this is just a basic example):

I use it by sub-classing for each different spider. However, it seems that

self.startcannot be accessed as an instance for spiders (since it's aclassmethod) - giving this error:Any idea how I can solve this issue whilst maintaining the structure I am trying to implement?

The text was updated successfully, but these errors were encountered: