The Grounding DINO model was proposed in Grounding DINO: Marrying DINO with Grounded Pre-Training for Open-Set Object Detection by Shilong Liu, Zhaoyang Zeng, Tianhe Ren, Feng Li, Hao Zhang, Jie Yang, Chunyuan Li, Jianwei Yang, Hang Su, Jun Zhu, Lei Zhang. Grounding DINO extends a closed-set object detection model with a text encoder, enabling open-set object detection. The model achieves remarkable results, such as 52.5 AP on COCO zero-shot.

The abstract from the paper is the following:

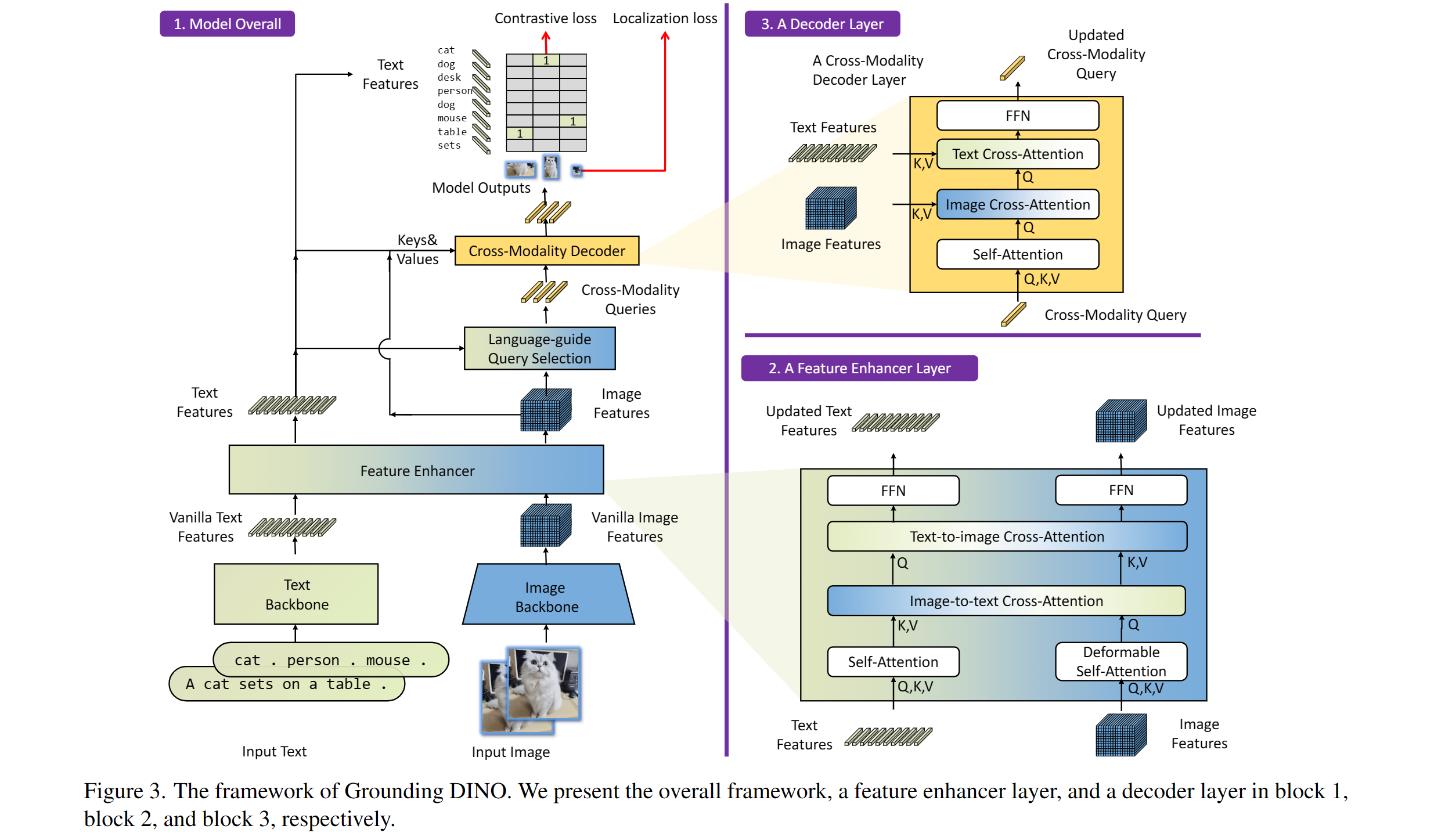

In this paper, we present an open-set object detector, called Grounding DINO, by marrying Transformer-based detector DINO with grounded pre-training, which can detect arbitrary objects with human inputs such as category names or referring expressions. The key solution of open-set object detection is introducing language to a closed-set detector for open-set concept generalization. To effectively fuse language and vision modalities, we conceptually divide a closed-set detector into three phases and propose a tight fusion solution, which includes a feature enhancer, a language-guided query selection, and a cross-modality decoder for cross-modality fusion. While previous works mainly evaluate open-set object detection on novel categories, we propose to also perform evaluations on referring expression comprehension for objects specified with attributes. Grounding DINO performs remarkably well on all three settings, including benchmarks on COCO, LVIS, ODinW, and RefCOCO/+/g. Grounding DINO achieves a 52.5 AP on the COCO detection zero-shot transfer benchmark, i.e., without any training data from COCO. It sets a new record on the ODinW zero-shot benchmark with a mean 26.1 AP.

Grounding DINO overview. Taken from the original paper.

This model was contributed by EduardoPacheco and nielsr. The original code can be found here.

- One can use [`GroundingDinoProcessor`] to prepare image-text pairs for the model.
- To separate classes in the text, use a period, e.g. "a cat. a dog."
- When using multiple classes (e.g. `"a cat. a dog."`), use `post_process_grounded_object_detection` from [`GroundingDinoProcessor`] to post-process the outputs, since the labels returned from `post_process_object_detection` represent the indices from the model dimension where prob > threshold.

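The prompt format described in the tips above can be produced from a list of class names. The following is a minimal sketch: `build_text_prompt` is a hypothetical helper and not part of `transformers`, and lowercasing the names is an assumption based on the lowercase prompts used in this document.

```python
def build_text_prompt(class_names):
    """Join class names into a period-separated prompt such as "a cat. a dog."."""
    # Hypothetical helper: strip whitespace and stray periods, then lowercase.
    cleaned = [name.strip().strip(".").strip().lower() for name in class_names]
    # Drop entries that are empty after cleaning.
    cleaned = [name for name in cleaned if name]
    if not cleaned:
        raise ValueError("at least one non-empty class name is required")
    # Each class ends with a period; a single space separates consecutive classes.
    return " ".join(f"{name}." for name in cleaned)


text = build_text_prompt(["a cat", "a remote control"])
print(text)  # a cat. a remote control.
```

The resulting string can be passed as the `text` argument of the processor.
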
Here's how to use the model for zero-shot object detection:

import requests

import torch

from PIL import Image

from transformers import AutoProcessor, AutoModelForZeroShotObjectDetection,

model_id = "IDEA-Research/grounding-dino-tiny"

processor = AutoProcessor.from_pretrained(model_id)

model = AutoModelForZeroShotObjectDetection.from_pretrained(model_id).to(device)

image_url = "http://images.cocodataset.org/val2017/000000039769.jpg"

image = Image.open(requests.get(image_url, stream=True).raw)

# Check for cats and remote controls

text = "a cat. a remote control."

inputs = processor(images=image, text=text, return_tensors="pt").to(device)

with torch.no_grad():

outputs = model(**inputs)

results = processor.post_process_grounded_object_detection(

outputs,

inputs.input_ids,

box_threshold=0.4,

text_threshold=0.3,

target_sizes=[image.size[::-1]]

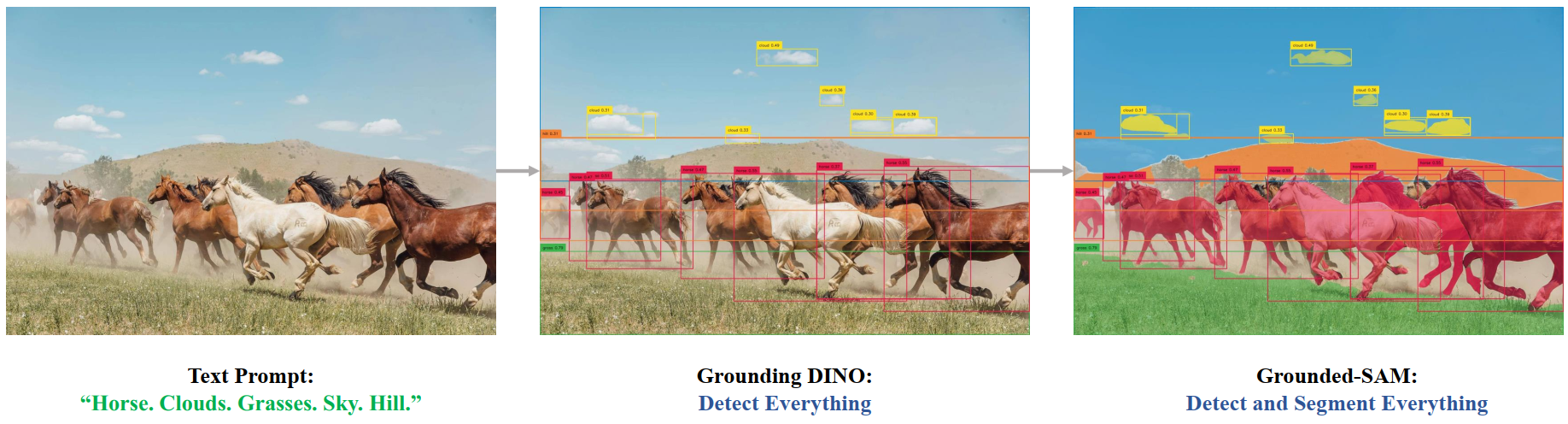

)One can combine Grounding DINO with the Segment Anything model for text-based mask generation as introduced in Grounded SAM: Assembling Open-World Models for Diverse Visual Tasks. You can refer to this demo notebook 🌍 for details.

Grounded SAM overview. Taken from the original repository.
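
The boxes returned by the detection example are what a segmentation model such as SAM would be prompted with. The sketch below shows one way to read a single per-image result and collect its boxes. It assumes the result is a dict with `scores`, `labels`, and `boxes` entries, with boxes given as `[x_min, y_min, x_max, y_max]` in pixels, and it uses plain Python lists with made-up numbers as stand-in data so that it runs without downloading a model. `to_records` and `boxes_for_label` are hypothetical helper names, not library functions.

```python
def to_records(result):
    """Turn one per-image result dict into a list of plain-Python records."""
    records = []
    for score, label, box in zip(result["scores"], result["labels"], result["boxes"]):
        records.append(
            {
                "label": str(label),
                # float() also accepts 0-dim tensors, so tensor inputs work as well.
                "score": round(float(score), 3),
                "box": [round(float(v), 1) for v in box],
            }
        )
    return records


def boxes_for_label(records, label):
    """Collect the boxes of every detection whose label equals `label`."""
    return [r["box"] for r in records if r["label"] == label]


# Stand-in data shaped like one entry of `results`; the values are illustrative only.
example_result = {
    "scores": [0.48, 0.44, 0.41],
    "labels": ["a cat", "a cat", "a remote control"],
    "boxes": [
        [344.7, 23.1, 637.2, 374.3],
        [12.3, 51.2, 316.9, 472.4],
        [38.6, 70.0, 176.8, 118.2],
    ],
}

records = to_records(example_result)
cat_boxes = boxes_for_label(records, "a cat")
print(len(records), cat_boxes)
```

With real model outputs, `example_result` would be replaced by `results[0]` from the detection example, and the collected boxes could then serve as box prompts for the segmentation step.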

A list of official Hugging Face and community (indicated by 🌎) resources to help you get started with Grounding DINO. If you're interested in submitting a resource to be included here, please feel free to open a Pull Request and we'll review it! The resource should ideally demonstrate something new instead of duplicating an existing resource.

- Demo notebooks regarding inference with Grounding DINO as well as combining it with SAM can be found here. 🌎

[[autodoc]] GroundingDinoImageProcessor
    - preprocess
    - post_process_object_detection

[[autodoc]] GroundingDinoProcessor
    - post_process_grounded_object_detection

[[autodoc]] GroundingDinoConfig

[[autodoc]] GroundingDinoModel
    - forward

[[autodoc]] GroundingDinoForObjectDetection
    - forward