Testing Strategy

Arnaud Bailly edited this page May 28, 2021 · 5 revisions

We are aiming for an outside-in TDD approach:

- We start as early as possible with End-to-End tests, beginning with something simple (such as a system-level test against a dummy chain and Hydra network) and gradually making them more complex as we develop the various components

- We flesh out other integration tests as needed, when we refine the technological stack used for the various bits and pieces

- We do most of our work in the Executable Specifications layer while we are developing the core domain functions, e.g. the Head protocol. The rationale is that this is the level at which we can test the most complex behaviours in the fastest and safest possible way, as everything runs without external dependencies or can even run as pure code using `io-sim`

- We tactically drop to the Unit tests level when dealing with the protocol's "fine print"

This diagram illustrates the various levels of automated tests we run while developing the Hydra node, and their relationships.

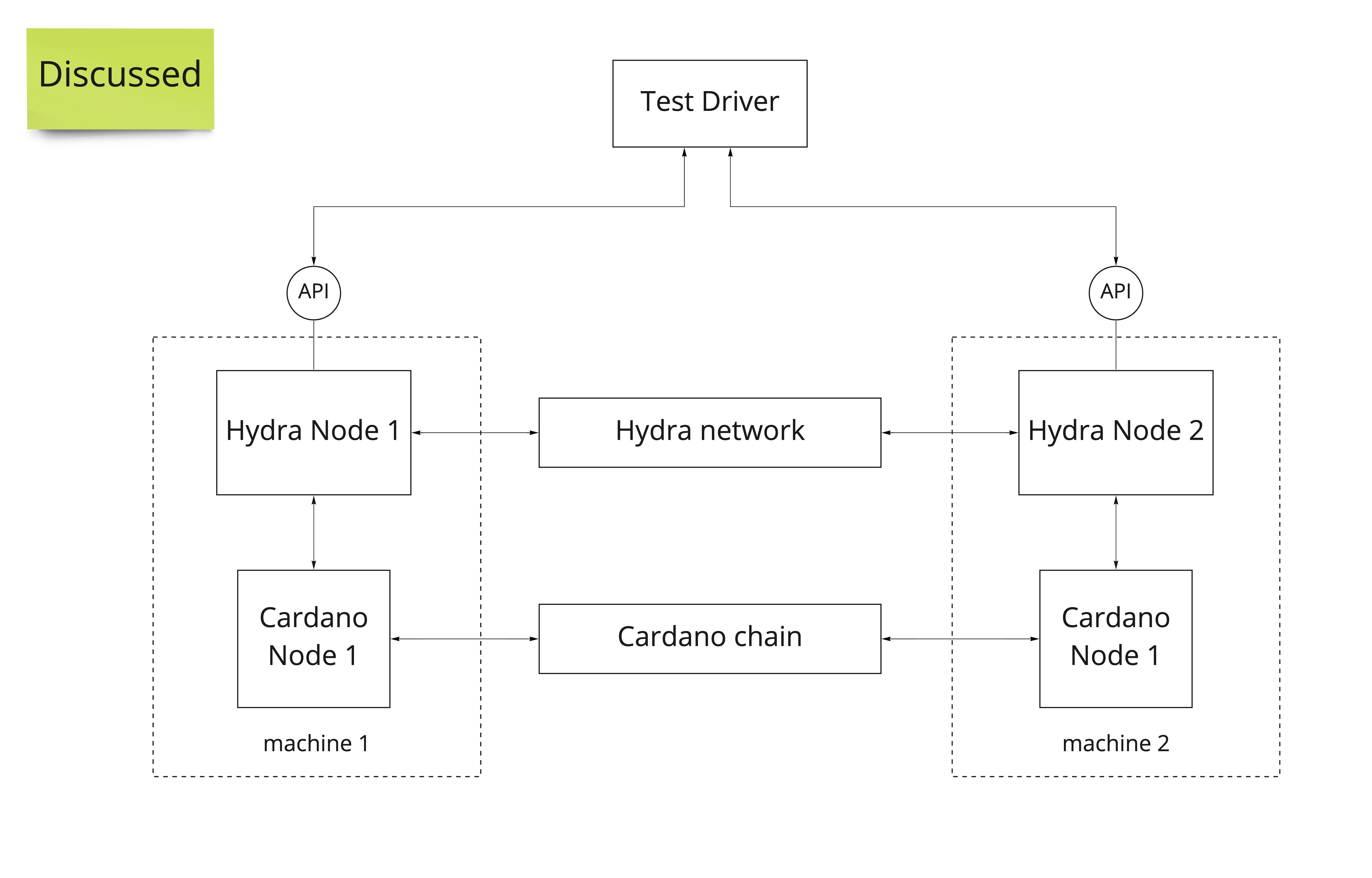

- Goal: Demonstrate working solution with all the bits and pieces in place

- Structure: A complete cluster of Hydra node processes, possibly distributed across different VMs or containers, connected to an actual test chain (possibly private to the Hydra nodes, or the testchain) and interconnected through the so-called Hydra network, whatever form that takes. Tests are driven from a public API (HTTP or other) exposed by the nodes

- Scenarios:

  - A few functional scenarios checking that all the parts are talking to each other correctly

  - Also used for load/resilience assessment (e.g. real-life sized benchmarks)

- Goal: Drive and demonstrate correctness of Hydra Head effectful cluster implementation

- Structure: A "cluster" of Hydra nodes within a single process, connected through a mock chain and network. Can be run either in `IO` or as pure code using `io-sim`. Tests are driven through each node's client API in Haskell.

- Scenarios:

  - Somewhat detailed Head protocol (e.g. On/Off-chain State machine) with various interleavings of messages and node states, payloads, transaction conflicts and ledger states... The focus is on the interaction between each node's state machine rather than on completeness of the Head SM protocol proper, but there might be overlap with unit tests
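As an illustration of this level, here is a minimal sketch of an in-process "cluster" run as pure code. It does not use the real Hydra node API or `io-sim`: every name in it (`Node`, `Msg`, `submitTx`, `receive`, `deliverAll`, `prop_convergence`) is hypothetical, and the "protocol" is reduced to broadcasting transactions. It only shows the shape of such a test: drive nodes through a client-facing function, route their output through a mock network, and check an invariant under every message interleaving.

```haskell
import Data.List (foldl', permutations, sort)

-- All names below are hypothetical, not the actual Hydra node API.
type NodeId = Int

-- Messages carried by the mock network: a node announces a transaction.
data Msg = ReqTx NodeId String
  deriving (Eq, Show)

-- A node's local state: the transactions it has seen, newest first.
data Node = Node { nodeId :: NodeId, seenTxs :: [String] }
  deriving (Eq, Show)

-- Client-facing input: submit a transaction to a node.
-- Returns the updated node and the messages it wants to broadcast.
submitTx :: String -> Node -> (Node, [Msg])
submitTx tx n = (n { seenTxs = tx : seenTxs n }, [ReqTx (nodeId n) tx])

-- Network-facing input: a node ignores its own broadcasts and
-- transactions it already knows, and records everything else.
receive :: Msg -> Node -> Node
receive (ReqTx from tx) n
  | from == nodeId n || tx `elem` seenTxs n = n
  | otherwise = n { seenTxs = tx : seenTxs n }

-- Mock network: deliver each message to every node, in the given order.
deliverAll :: [Msg] -> [Node] -> [Node]
deliverAll msgs nodes = foldl' (\ns msg -> map (receive msg) ns) nodes msgs

-- Scenario: two clients submit to two different nodes of a 3-node cluster.
-- Whatever the delivery order, all nodes must end up with the same set.
prop_convergence :: Bool
prop_convergence = all agree (permutations (out1 ++ out2))
  where
    (n1, out1) = submitTx "tx1" (Node 1 [])
    (n2, out2) = submitTx "tx2" (Node 2 [])
    agree order =
      all ((== ["tx1", "tx2"]) . sort . seenTxs)
          (deliverAll order [n1, n2, Node 3 []])
```

Because the whole cluster is an ordinary pure value, enumerating delivery orders is cheap. A real test at this level would presumably replace the explicit permutations with scheduling controlled by the simulator.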

- Goal: Drive and Demonstrate robustness/correctness of Hydra Head networking layer

- Structure:

  - Tests are driven through each network client's internal API in Haskell. This can imply some test-only public interface in the case of a multi-process or multi-container setup

  - Several network clients within a single process, connected through a "real" network layer (this could require an external process if using a centralised message-broker solution, or could all be in-process using real network sockets, or a combination of various transport connections)

  - Or several "containers" connected through a more realistic stack (physical/virtual NICs, DNS, ...)

- Scenarios:

  - Test the communication layer between nodes: connection failures, timeout behaviours, routing...

  - Check interoperability of versions, upgrades and downgrades

- Goal: Drive development of PAB integration

- Structure: A single in-process Hydra node connected to a PAB-enabled client, running in emulator mode. Tests are driven through each node's client API in Haskell.

- Scenarios:

  - Test Node-to-Chain interactions and the correctness of both sides' implementation of the on-chain interface

- Goal: Drive and demonstrate correctness of pure Head protocol implementation and various components of our system

- Structure:

  - For Head protocol: A single head instance with a mock ledger. Tests are driven through the pure state machine function.

  - For components: Pure functions

- Scenarios:

  - Complete head protocol, possibly using `hydra-sim` as an "oracle"

  - QuickCheck properties
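To make the unit-test level more concrete, here is a hedged sketch of a pure state machine function over a mock ledger, with a property comparing its result against a reference model. The states, events and effects are hypothetical simplifications and not the actual Head protocol. The "oracle" here is just `nub` from `Data.List`, standing in for whatever reference implementation (such as `hydra-sim`) would be used in practice.

```haskell
import Data.List (foldl', nub)

-- Mock ledger (hypothetical): a transaction is an Int, and it is valid
-- only if it has not been applied before.
type Tx = Int
type Ledger = [Tx]

applyTx :: Ledger -> Tx -> Maybe Ledger
applyTx ledger tx
  | tx `elem` ledger = Nothing
  | otherwise = Just (tx : ledger)

-- Heavily simplified head life cycle, for illustration only.
data HeadState = Idle | Open Ledger | Closed Ledger
  deriving (Eq, Show)

data Event = Init | NewTx Tx | Close
  deriving (Eq, Show)

data Effect = HeadOpened | TxConfirmed Tx | TxInvalid Tx | HeadClosed
  deriving (Eq, Show)

-- The pure state machine function: no IO, no network, no real chain.
update :: HeadState -> Event -> (HeadState, [Effect])
update Idle Init = (Open [], [HeadOpened])
update (Open ledger) (NewTx tx) =
  case applyTx ledger tx of
    Just ledger' -> (Open ledger', [TxConfirmed tx])
    Nothing      -> (Open ledger, [TxInvalid tx])
update (Open ledger) Close = (Closed ledger, [HeadClosed])
update st _ = (st, [])  -- events that make no sense in this state are ignored

-- Run a whole sequence of events, collecting effects in order.
runHead :: [Event] -> (HeadState, [Effect])
runHead = foldl' step (Idle, [])
  where
    step (st, effs) ev = let (st', new) = update st ev in (st', effs ++ new)

-- Property: after opening, submitting txs and closing, the final ledger
-- matches the oracle (distinct txs, most recently applied first).
prop_ledgerMatchesOracle :: [Tx] -> Bool
prop_ledgerMatchesOracle txs =
  fst (runHead ([Init] ++ map NewTx txs ++ [Close])) == Closed (reverse (nub txs))
```

The property is an ordinary function of type `[Tx] -> Bool`, so with QuickCheck available it can be passed directly to `quickCheck` from `Test.QuickCheck` to run it against randomly generated transaction lists.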